Meta, AI, and the US Military: Doomsters, Now Is Your Chance

November 12, 2024

Sorry to disappoint you, but this blog post is written by a dumb humanoid.

The Zuck is demonstrating that he is an American. That’s good. I found the news report about Meta and its smart software in Analytics India Magazine interesting. “After China, Meta Just Hands Llama to the US Government to ‘Strengthen’ Security” contains an interesting word pair: “after China.”

What did the article say? I noted this statement:

Meta’s stance to help government agencies leverage their open-source AI models comes after China’s rumored adoption of Llama for military use.

The write up points out:

“These kinds of responsible and ethical uses of open source AI models like Llama will not only support the prosperity and security of the United States, they will also help establish U.S. open source standards in the global race for AI leadership,” said Nick Clegg, President of Global Affairs, in a blog post published by Meta.

Analytics India notes:

The announcement comes after reports that China was rumored to be using Llama for its military applications. Researchers linked to the People’s Liberation Army are said to have built ChatBIT, an AI conversation tool fine-tuned to answer questions involving the aspects of the military.

I noted this statement attributed to a “real” person at Meta:

Yann LeCun, Meta’s Chief AI scientist, did not hold back. He said, “There is a lot of very good published AI research coming out of China. In fact, Chinese scientists and engineers are very much on top of things (particularly in computer vision, but also in LLMs). They don’t really need our open-source LLMs.”

I still find the phrase “after China” interesting. Is money the motive for this open source generosity? Is it a bet on Meta’s future opportunities? No answers at the moment.

Stephen E Arnold, November 12, 2024

Bring Back Bell Labs…Wait, Google Did…

November 12, 2024

Bell Labs was once a magical wonderland of invention, and it established the foundation for modern communication, including the Internet. Everything was great at Bell Labs until projects got deadlines and creativity was stifled. Hackaday examines the history of the mythical place and discusses whether there could ever be a new Bell Labs in “What Would It Take To Recreate Bell Labs?”

Bell Labs employees were allowed to tinker on their projects for years as long as they focused on something to benefit the larger company. These fields ranged from metallurgy to optics, semiconductors, and more. Bell Labs worked with Western Electric and AT&T. These partnerships resulted in the transistor, the laser, the photovoltaic cell, the charge-coupled device (CCD), the Unix operating system, and more.

What made Bell Labs special was that inventors were allowed to let their creativity marinate and explore their ideas. This came to a screeching halt in 1982 when the US courts ordered AT&T to break up. Western Electric later became Lucent Technologies and took Bell Labs with it. The creativity and gift of time disappeared too. Could Bell Labs exist today? No, not as it was. It would need to be updated:

“The short answer to the original question of whether Bell Labs could be recreated today is thus a likely ‘no’, while the long answer would be ‘No, but we can create a Bell Labs suitable for today’s technology landscape’. Ultimately the idea of giving researchers leeway to tinker is one that is not only likely to get big returns, but passionate researchers will go out of their way to circumvent the system to work on this one thing that they are interested in.”

Google did have a new incarnation of Bell Labs. Did it invent Google Glass and billions in revenue from actions explained in the novel 1984?

Whitney Grace, November 12, 2024

Disinformation: Just a Click Away

November 11, 2024

Here is an interesting development. “CreationNetwork.ai Emerges As a Leading AI-Powered Platform, Integrating Over Twenty Two Tools,” reports HackerNoon. The AI aggregator uses Telegram plus other social media to push its service. Furthermore, the company is integrating crypto into its business plan. We expect these “blending” plays to become more common. The Chainwire press release says about this one:

“As an all-in-one solution for content creation, e-commerce, social media management, and digital marketing, CreationNetwork.ai combines 22+ proprietary AI-powered tools and 29+ platform integrations to deliver the most extensive digital ecosystem available. … CreationNetwork.ai’s suite of tools spans every facet of digital engagement, equipping users with powerful AI technologies to streamline operations, engage audiences, and optimize performance. Each tool is meticulously designed to enhance productivity and efficiency, making it easy to create, manage, and analyze content across multiple channels.”

See the write-up for a list of the tools included in CreationNetwork.ai, from AI Copywriter to Team-Powered Branding. The hefty roster of platform connections is also specified, including obvious players: all the major social media platforms, the biggest e-commerce platforms, and content creation tools like Canva, Grammarly, Adobe Express, Unsplash, and Dropbox. We learn:

“One of the most distinguishing features of CreationNetwork.ai is its extensive integration network. With over 29 integrations, users can synchronize their digital activities across major social media, e-commerce, and content platforms, providing centralized management and engagement capabilities. … This integration network empowers users to manage their brand presence across platforms from a single, unified dashboard, significantly enhancing efficiency and reach.”

Nifty. What a way to simplify digital processes for users. And to make it harder for new services to break into the market. But what groundbreaking platform would be complete without its own cryptocurrency? The write-up states:

“In preparation for its Initial Coin Offering (ICO), CreationNetwork.ai is launching a $750,000 CRNT Token Airdrop to reward early supporters and incentivize participation in the CreationNetwork.ai ecosystem. Qualified participants can secure their position by following CreationNetwork.ai’s social media accounts and completing the whitelist form available on the official website. This initiative highlights CreationNetwork.ai’s commitment to building a strong, engaged community.”

Online smart software is helpful in many ways.

Cynthia Murrell, November 11, 2024

The Bezos Bulldozer Could Stall in a Nuclear Fuel Pool

November 11, 2024

Sorry to disappoint you, but this blog post is written by a dumb humanoid. The art? We used MidJourney.

Microsoft is going to flip a switch and one of Three Mile Island’s nuclear units will blink on. Yeah. Google is investing in small nuclear power units. Buy one, haul it to the data center of your choice, and plug it in. Shades of Tesla thinking. Amazon has also been fascinated by Cherenkov radiation, which is blue like Jack Benny’s eyes.

A physics amateur learned about 880 volts by reading books on his Kindle. Thanks, MidJourney. Good enough.

Are these PR-tinged information nuggets for real? Sure, absolutely. The big tech outfits are able to do anything, maybe not well, but everything. Almost.

The “trusted” real news outfit (Thomson Reuters) published “US Regulators Reject Amended Interconnection Agreement for Amazon Data Center.” The story reports as allegedly accurate information:

U.S. energy regulators rejected an amended interconnection agreement for an Amazon data center connected directly to a nuclear power plant in Pennsylvania, a filing showed on Friday. Members of the Federal Energy Regulatory Commission said the agreement to increase the capacity of the data center located on the site of Talen Energy’s Susquehanna nuclear generating facility could raise power bills for the public and affect the grid’s reliability.

Amazon was not inventing a functional modular nuclear reactor using the better option, thorium. No. Amazon just wanted to run a few of those innocuous high-voltage transmission lines, plug in a converter readily available from one of Amazon’s third-party merchants, and power a data center chock full of dolphin-loving servers, storage devices, and other gizmos. What’s the big deal?

The write up does not explain what “reliability” and “national security” mean. Let’s just accept these as words which roughly translate to “unlikely.”

Is this an issue that will go away? My view is, “No.” Nuclear engineers are not widely represented among the technical professionals engaged in selling third-party vendors’ products, figuring out how to make Alexa into a barn burner of a product, or forcing Kindle users to smash their devices in frustration when trying to figure out what’s on their Kindle and what’s in Amazon’s increasingly bizarro cloud system.

Can these companies become nuclear adepts? Sure. Will that happen quickly? Nope. Why? Nuclear is a specialized field and involves a number of quite specific scientific disciplines. But Amazon can always ask Alexa and point to its Ring doorbell system as the solution to security concerns. The approach will impress regulatory authorities.

Stephen E Arnold, November 11, 2024

Instagram Does the YouTube Creator Fear Thing

November 11, 2024

Instagram influencers are enraged by Instagram head Adam Mosseri’s bias towards popular content. According to the BBC in “Instagram Lowering Quality Of Less Viewed Videos ‘Alarming’ Creators,” video quality is lowered for older, less popular videos. More popular content gets the HD white-glove treatment. Influencers are upset over this “discrimination,” especially when they concentrate on making income through Instagram over other platforms.

The influencers view the lower-quality output as harmful and say it affects the quality of original art. Mosseri argues that most influencers have their videos watched soon after publication. The only videos affected by lower quality are older ones that no longer receive many views. While that sounds logical, it could also create a cycle that benefits only a few influencers:

Social media consultant Matt Navarra told the BBC the move ‘seems to somewhat contradict Instagram’s earlier messages or efforts to encourage new creators’.

“How can creators gain traction if their content is penalized for not being popular,” he said. And he said it could risk creating a cycle of more established creators reaping the rewards of higher engagement from viewers over those trying to build their following.

Instagram is lowering the quality to save on costs. It always comes down to money, doesn’t it? When asked to respond about that, Mosseri said viewers are more interested in a video’s content than its image quality. Navarra agreed with that statement:

“He [Navarra] said creators should focus on how they can make engaging content that caters to their audience, rather than be overly concerned by the possibility of its quality being degraded by Instagram.”

Navarra’s right. Video quality will be decent and not poor like a cathode-ray tube TV. The creators should focus on building themselves and not investing all of their creative energy into one platform. Diversify!

Whitney Grace, November 11, 2024

FOGINT: Crypto Is a Community Builder

November 9, 2024

CreationNetwork.ai: A One-Stop Shop for All Your Digital Needs. And Crypto, Too!

Here is an interesting development. “CreationNetwork.ai Emerges As a Leading AI-Powered Platform, Integrating Over Twenty Two Tools,” reports HackerNoon. The AI aggregator uses Telegram plus other social media to push its service. Furthermore, the company is integrating crypto into its business plan. We expect these “blending” plays to become more common. The Chainwire press release says about this one:

“As an all-in-one solution for content creation, e-commerce, social media management, and digital marketing, CreationNetwork.ai combines 22+ proprietary AI-powered tools and 29+ platform integrations to deliver the most extensive digital ecosystem available. … CreationNetwork.ai’s suite of tools spans every facet of digital engagement, equipping users with powerful AI technologies to streamline operations, engage audiences, and optimize performance. Each tool is meticulously designed to enhance productivity and efficiency, making it easy to create, manage, and analyze content across multiple channels.”

See the write-up for a list of the tools included in CreationNetwork.ai, from AI Copywriter to Team-Powered Branding. The hefty roster of platform connections is also specified, including obvious players: all the major social media platforms, the biggest e-commerce platforms, and content creation tools like Canva, Grammarly, Adobe Express, Unsplash, and Dropbox. We learn:

“One of the most distinguishing features of CreationNetwork.ai is its extensive integration network. With over 29 integrations, users can synchronize their digital activities across major social media, e-commerce, and content platforms, providing centralized management and engagement capabilities. … This integration network empowers users to manage their brand presence across platforms from a single, unified dashboard, significantly enhancing efficiency and reach.”

Nifty. What a way to simplify digital processes for users. And to make it harder for new services to break into the market. But what groundbreaking platform would be complete without its own cryptocurrency? The write-up states:

“In preparation for its Initial Coin Offering (ICO), CreationNetwork.ai is launching a $750,000 CRNT Token Airdrop to reward early supporters and incentivize participation in the CreationNetwork.ai ecosystem. Qualified participants can secure their position by following CreationNetwork.ai’s social media accounts and completing the whitelist form available on the official website. This initiative highlights CreationNetwork.ai’s commitment to building a strong, engaged community.”

Crypto — The community builder.

Cynthia Murrell, November 11, 2024

Meta and China: Yeah, Unauthorized Use of Llama. Meh

November 8, 2024

This post is the work of a dinobaby. If there is art, accept the reality of our using smart art generators. We view it as a form of amusement.

That open source smart software, you remember, makes everything computer- and information-centric so much better. One open source champion laboring as a marketer told me, “Open source means no more contractual handcuffs, the ability to make changes without a hassle, and evidence of the community.”

An AI-powered robot enters a meeting. One savvy executive asks in Chinese, “How are you? Are you here to kill the enemy?” Another executive, seated closer to the gas emitted from a canister marked with hazardous materials warnings, gasps, “I can’t breathe!” Thanks, Midjourney. Good enough.

How did those assertions work for China? If I can believe the “trusted” outputs of the “real” news outfit Reuters, just super cool. According to “Exclusive: Chinese Researchers Develop AI Model for Military Use on Back of Meta’s Llama,” those engaging folk of the Middle Kingdom:

… have used Meta’s publicly available Llama model to develop an AI tool for potential military applications, according to three academic papers and analysts.

Now that’s community!

The write up wobbles through some words about the alleged Chinese efforts and adds:

Meta has embraced the open release of many of its AI models, including Llama. It imposes restrictions on their use, including a requirement that services with more than 700 million users seek a license from the company. Its terms also prohibit use of the models for “military, warfare, nuclear industries or applications, espionage” and other activities subject to U.S. defense export controls, as well as for the development of weapons and content intended to “incite and promote violence”. However, because Meta’s models are public, the company has limited ways of enforcing those provisions.

In the spirit of such comments as “Senator, thank you for that question,” a Meta (aka Facebook) wizard allegedly said:

“That’s a drop in the ocean compared to most of these models (that) are trained with trillions of tokens so … it really makes me question what do they actually achieve here in terms of different capabilities,” said Joelle Pineau, a vice president of AI Research at Meta and a professor of computer science at McGill University in Canada.

My interpretation of the insight? Hey, that’s okay.

As readers of this blog know, I am not too keen on making certain information public. Unlike some outfits’ essays, Beyond Search tries to address topics without providing information of a sensitive nature. For example, search and retrieval is a hard problem. Big whoop.

But posting what I would term sensitive information as usable software for anyone to download and use strikes me as something which must be considered in a larger context; for example, a bad actor downloading an allegedly harmless penetration testing utility of the Metasploit ilk. Could a bad actor use these types of software to compromise a commercial or government system? The answer is, “Duh, absolutely.”

Meta’s founder of the super helpful Facebook wants to bring people together. Community. Kumbaya. Sharing.

That has been the lubricant for amassing power, fame, and money… Oh, also a big gold necklace similar to the ones I saw labeled “Pharaoh jewelry.”

Observations:

- Meta (Facebook) does open source for one reason: To blunt initiatives from its perceived competitors and to position itself to make money.

- Users of Meta’s properties are only data inputters and action points; that is, they are instruments.

- Bad actors love that open source software. They download it. They study it. They repurpose it to help the bad actors achieve their goals.

Did Meta include a kill switch in its open source software? Oh, sure. Meta is far-sighted, concerned with misuse of its innovations, and super duper worried about what an adversary of the US might do with that technology. On the bright side, if negotiations are required, the head of Meta (Facebook) allegedly speaks Chinese. Is that a benefit? He could talk with the weaponized robot dispensing biological warfare agents.

Stephen E Arnold, November 8, 2024

Microsoft Does the Me Too with AI. Si, Wee Wee

November 8, 2024

Sorry to disappoint you, but this blog post is written by a dumb humanoid. The art? We used MidJourney.

True or false: “Windows Intelligence” or Microsoft dorkiness? The article “Windows Intelligence: Microsoft May Drop Copilot in Major AI Rebranding” contains an interesting subtitle. Here it is:

Copying Apple for marketing’s sake, as usual.

Is that a snarky subtitle or not? It follows up the idea of “rebranding” Satya Nadella’s next big thing with an assertion of possible copycat behavior. I like it.

The article says:

Copilot’s chatbot service, Windows Recall, and other AI-related functionalities could soon become known simply as “Windows Intelligence.” According to a recently surfaced reference included in a template file for the Group Policy Object Editor (AppPrivacy.adml), Windows Intelligence is the umbrella term Microsoft possibly chose to unify the many AI features currently being integrated into the PC operating system.

Whether such an attempt to capture the cleverness of Apple Intelligence (AI for short) with the snappy WI is a stroke of genius or a clumsy me-too move, time will reveal.

Let’s assume that the Softies take time out from their labors on the security issues bedeviling users and apply their considerable expertise to the task of moving from Copilot to WI. One question which will arise may be, “How does one pronounce WI?”

I personally like “whee,” as in the sound seven-year-olds make when sliding down an old-fashioned playground slide. I can envision some people using the WI sound in the word “whiff.” The sound of the first two letters of “weak” is a possibility as well. And one could emit the sound “wuh,” which rhymes with “Duh.”

My hunch is that this article’s assertion is unsubstantiated beyond a snippet of text in “code.” Nevertheless, I enjoyed the write up. I suspect the author had some fun putting the article together as well.

Oh, Copilot image generation has been down for more than a weak, oh, sorry, week. That’s very close to the whiff sound for the alleged WI acronym. Oh, well, a whiff by any other name should reveal so much about a high technology giant.

Stephen E Arnold, November 8, 2024

Penalty for AI Generated Child Abuse Images

November 8, 2024

Whenever new technology is released, it’s only a matter of time before a bad actor uses it for devious purposes. Those purposes are usually a form of sex, theft, and abuse. Bad actors saw a golden opportunity in AI image generation for child pornography, and Ars Technica reported on one such case in “18-Year Prison Sentence For Man Who Used AI To Create Child Abuse Images.” Hugh Nelson, a pedophile from the UK, used 3D AI software to make child sexual abuse imagery. When his crime was discovered, he was sentenced to eighteen years in prison. It’s a landmark case for prosecuting deepfakes in the UK.

Nelson used Daz 3D to make the sexually explicit images. AI image algorithms use models trained on large data sets to generate “new” images. The algorithms can also take preexisting images and alter them. Nelson used photographs of real children, fed them into Daz 3D, and produced deepfake sexual abuse images. He also encouraged other bad actors to do the same thing. Nelson will be incarcerated until he completes two-thirds of his sentence. The judge at the trial said Nelson was a “significant risk” to the public.

Since these images are fake, one could argue that they’re harmless, but the problem here was the use of real children’s images. These real kids had their likenesses transformed into sexually explicit images. That’s where the debate about harm and intent enters:

“Graeme Biggar, director-general of the UK’s National Crime Agency, last year warned it had begun seeing hyper-realistic images and videos of child sexual abuse generated by AI. He added that viewing this kind of material, whether real or computer-generated, “materially increases the risk of offenders moving on to sexually abusing children themselves.”

Greater Manchester Police’s specialist online child abuse investigation team said computer-generated images had become a common feature of their investigations.

‘This case has been a real test of the legislation, as using computer programs in this particular way is so new to this type of offending and isn’t specifically mentioned within current UK law,’ detective constable Carly Baines said when Nelson pleaded guilty in August. The UK’s Online Safety Act, which passed last October, makes it illegal to disseminate non-consensual pornographic deepfakes. But Nelson was prosecuted under existing child abuse law.”

My personal view is that Nelson should be locked up for the remainder of his putrid existence, as should the people who asked him to make those horrible images. Don’t mess with kids!

Whitney Grace, November 8, 2024

Let Them Eat Cake or Unplug: The AI Big Tech Bro Effect

November 7, 2024

I spotted a news item which will zip right by some people. The “real” news outfit owned by the lovable Jeff Bezos published “As Data Centers for AI Strain the Power Grid, Bills Rise for Everyday Customers.” The write up tries to explain that AI costs for electric power are being passed along to regular folks. Most of these electricity-dependent people do not take home paychecks with tens of millions of dollars like the Nadella, Zuckerberg, or Pichai type breadwinners do. Heck, these AI poohbahs think about buying modular nuclear power plants. (I want to point out that these do not exist and may not for many years.)

The article is not going to thrill the professionals who are experts on utility demand and pricing. Those folks know that the smart software poohbahs have royally screwed up some weekends and vacations for the foreseeable future.

The WaPo article (presumably blessed by St. Jeffrey) says:

The facilities’ extraordinary demand for electricity to power and cool computers inside can drive up the price local utilities pay for energy and require significant improvements to electric grid transmission systems. As a result, costs have already begun going up for customers — or are about to in the near future, according to utility planning documents and energy industry analysts. Some regulators are concerned that the tech companies aren’t paying their fair share, while leaving customers from homeowners to small businesses on the hook.

Okay, typical “real” journospeak. “Costs have already begun going up for customers.” Hey, no kidding. The big AI parade began with the January 2023 announcement that the Softies were going whole hog on AI. The lovable Google immediately flipped into alert mode. I can visualize flashing yellow LEDs and faux red stop lights blinking in the gray corridors in Shoreline Drive facilities if there are people in those offices again. Yeah, ghostly blinking.

The write up points out, rather unsurprisingly:

The tech firms and several of the power companies serving them strongly deny they are burdening others. They say higher utility bills are paying for overdue improvements to the power grid that benefit all customers.

Who wants PEPCO and VEPCO to kill their service? Actually, no one. Imagine life in NoVa, DC, and the ever lovely Maryland without power. Yikes.

From my point of view, informed by some exposure to the utility sector at a nuclear consulting firm and then at a blue chip consulting outfit, here’s the scoop.

The demand planning done with rigor by US utilities took a hit each time the Big Dogs of AI brought more specialized, power-hungry servers online and (here’s the killer, folks) left them on. The way power consumption used to work is that during the day, consumer usage would fall and business/industry usage would rise. The power-hogging steel industry was a 24×7 outfit. But over the last 40 years, manufacturing has wound down and consumer demand has crept upward. The curves had to be plotted and the demand projected, but, in general, life was not too crazy for the US power generation industry. Sure, there were the costs associated with decommissioning “old” nuclear plants and expanding new non-nuclear facilities with expensive but mandated environmental gewgaws, gadgets, and gizmos plugged in to save the snail darters and the frogs.

Since January 2023, demand has been curving upwards. Power generation outfits don’t want to miss out on revenue. Therefore, some utilities have worked out what I would call sweetheart deals for electricity for AI-centric data centers. Some of these puppies suck more power in a day than a dying city located in Flyover Country in Illinois.

Plus, these data centers are not enough. Each quarter the big AI dogs explain that more billions will be pumped into AI data centers. Keep in mind: These puppies run 24×7. The AI wolves have worked out discount rates.

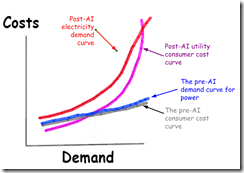

What do the US power utilities do? First, the models have to be reworked. Second, the relationships to trade, buy, or “borrow” power have to be refined. Third, capacity has to be added. Fourth, the utility rate people create a consumer pricing graph which may look like this:

Guess who will pay? Yep, consumers.

The red line is the prediction for post-AI electricity demand. For comparison, the blue line shows the demand curve before Microsoft ignited the AI wars. Note that the gray line is consumer cost, or the monthly electricity bill for Bob and Mary Normcore. The nuclear purple line shows what is happening, and will continue to happen, to consumer electricity costs.

The graph shows that the cost will be passed to consumers. Why? The sweetheart deals to get the Big Dog power generation contracts mean guaranteed cash flow and a hurdle for a low-ball utility to lumber over. Utilities like power generation are not the Neon Deions of American business.

There will be hand waving by regulators. Some city government types will argue, “We need the data centers.” Podcasts and posts on social media will sprout like weeds in an untended field.

Net net: Bob and Mary Normcore may have to decide between food and electricity. AI is wonderful, right?

Stephen E Arnold, November 7, 2024