AI Girlfriends: The Opportunity of a Lifetime

April 26, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Relationships are hard. Navigating each other’s unique desires, personalities, and expectations can be a serious challenge. That is, if one is dating a real person. Why bother when, for just several thousand dollars a month, you can date a tailor-made AI instead? We learn from The Byte, “Tech Exec Predicts Billion-Dollar AI Girlfriend Industry.” Writer Noor Al-Sibai tells us:

“When witnessing the sorry state of men addicted to AI girlfriends, one Miami tech exec saw dollar signs instead of red flags. In a blog-length post on X-formerly-Twitter, former WeWork exec Greg Isenberg said that after meeting a young guy who claims to spend $10,000 a month on so-called ‘AI girlfriends,’ or relationship-simulating chatbots, he realized that eventually, someone is going to capitalize upon that market the way Match Group has with dating apps. ‘I thought he was kidding,’ Isenberg wrote. ‘But, he’s a 24-year-old single guy who loves it.’ To date, Match Group — which owns Tinder, Hinge, Match.com, OKCupid, Plenty of Fish, and several others — has a market cap of more than $9 billion. As the now-CEO of the Late Checkout holding company startup noted, someone is going to build the AI version and make a billion or more.”

Obviously. They are probably collaborating with the makers of sex robots already. Though many strongly object, it seems only a matter of time before fake women replace real ones for a significant number of men. Will this help assuage the loneliness epidemic, or only make it worse? There is also the digital privacy angle to consider. On the other hand, perhaps this is for the best in the long run. The Earth is overpopulated, anyway.

Cynthia Murrell, April 26, 2024

Telegram Barks, Whines, and Wants a Treat

April 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Tucker Carlson, an American TV star journalist lawyer person, had an opportunity to find his future elsewhere after changes at Rupert Murdoch’s talking heads channel. The future, it seems, is for Mr. Carlson to present news via Telegram, which is an end-to-end-encrypted messaging platform. It features selectable levels of encryption. Click enough and the content of the data passed via the platform is an expensive and time consuming decryption job. Mr. Carlson wanted to know more about his new broadcast home. It appears that as part of the tie up, the usually low profile, free speech flag waver Pavel Durov agreed to a one-hour interview with Mr. Carlson. You can watch the video on YouTube and be monitored by those soon-to-be-gone cookies or on Telegram and be subject to its interesting free speech activities.

A person dressed in the uniform of an unfriendly enters the mess hall of a fighting force engaged in truth, justice, and the American way. The bold lad in red forgets he is dressed as an enemy combatant and does not understand why everyone is watching him with suspicion or laughter because he looks like a fool or a clueless dolt. Thanks, MSFT Copilot. Good enough. Any meetings in DC today about security?

Pavel Durov insists that he is not as smart as his brother. He tells Mr. Carlson [bold added for emphasis. Editor]:

So Telegram has been a tool for those to a large extent. But it doesn’t really matter whether it’s opposition or the ruling party that is using Telegram for us. We apply the rules equally to all sides. We don’t become prejudiced in this way. It’s not that we are rooting for the opposition or we are rooting for the ruling party. It’s not that we don’t care. But we think it’s important to have this platform that is neutral to all voices because we believe that the competition of different ideas can result in progress and a better world for everyone. That’s in stark contrast to say Facebook which has said in public. You know we tip the scale in favor of this or that movement and this or that country all far from the west and far from Western media attention. But they’ve said that what do you think of that tech companies choosing governments? I think that’s one of the reasons why we ended up here in the UAE out of all places right? You don’t want to be geopolitically aligned. You don’t want to select the winners in any of these political fights and that’s why you have to be in a neutral place. … We believe that Humanity does need a neutral platform like Telegram that would be respectful to people’s privacy and freedoms.

Wow, the royal “we.” The word salad. Then the Apple editorial control.

Okay, the flag bearer for secure communications yada yada. Do I believe this not-as-smart-as-my-brother guy?

No.

Mr. Durov says one thing and then does another, endangering lives and creating turmoil among those who do require secure communications. Who, you may ask? How about intelligence operatives, certain war fighters in Ukraine and other countries in conflict, and experts working on sensitive commercial projects. Sure, bad actors use Telegram, but that’s what happens when one embraces free speech.

Now it seems that Mr. Durov has modified his position to sort-of free speech.

I learned this from articles like “Telegram to Block Certain Content for Ukrainian Users” and “Durov: Apple Demands to Ban Some Telegram Channels for Users with Ukrainian SIM Cards.”

In the interview between two estimable individuals, Mr. Durov made the point that he was approached by individuals working in US law enforcement. In very nice language, Mr. Durov explained they were inept, clumsy, and focused on getting access to the data in his platform. He pointed out that he headed off to Dubai, where he could operate without having to bow down, lick boots, sell out, or cooperate with some oafs in law enforcement.

But then, I read about Apple demanding that Telegram curtail free speech for “some” individuals. Well, isn’t that special? Say one thing, criticize law enforcement, and then roll over for Apple. That is a company, as I recall, which is super friendly with another nation state somewhat orthogonal to the US. Furthermore, Apple is proud of its efforts to protect privacy. Rumors suggest Apple is not too eager to help out some individuals investigating crimes because the sacred iPhone is above the requirements of a mere country… with exceptions, of course. Of course.

The article “Durov: Apple Demands to Ban Some Telegram Channels for Users with Ukrainian SIM Cards” reports:

Telegram founder Pavel Durov said that Apple had sent a request to block some Telegram channels for Ukrainian users. Although the platform’s community usually opposes such blocking, the company has to listen to such requests in order to keep the app available in the App Store.

Why roll over? The write up quotes Mr. Durov as saying:

…, it doesn’t always depend on us.

Us. The royal we again. The company is owned by Mr. Durov. The smarter brother is a math genius like two PhDs and there are about 50 employees. “Us.” Who are the people in the collective consisting of one horn blower?

Several observations:

- Apple has more power or influence over Telegram than law enforcement from a government.

- Mr. Durov appears to say one thing and then do the opposite, thinking no one will notice maybe?

- Relying on Telegram for secure communications may not be the best idea I have heard today.

Net net: Is this a “signal” that absolutely no service can be trusted? I don’t have a scorecard for trust bandits, but I will start one I think. In the meantime, face-to-face in selected locations without mobile devices may be one option to explore, but it sure is easy to use Telegram to transmit useful information to a drone operator in order to obtain a desired outcome. Like Mr. Snowden, Mr. Durov has made a decision. Actions have consequences; word sewage may not.

Stephen E Arnold, April 25, 2024

AI Versus People? That Is Easy. AI

April 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I don’t like to include management information in Beyond Search. I have noticed more stories about management decisions related to information technology. Here’s an example of my breaking my own editorial policies. Navigate to “SF Exec Defends Brutal Tech Trend: Lay Off Workers to Free Up Cash for AI.” I noted this passage:

Executives want fatter pockets for investing in artificial intelligence.

Okay, Mr. Efficiency and mobile phone betting addict, you have reached a logical decision. Why are there no pictures of friends, family, and achievements in your window office? Oh, that’s MSFT Copilot’s work. What’s that say?

I think this means that “people resources” can be dumped in order to free up cash to place bets on smart software. The write up explains the management decision making this way:

Dropbox’s layoff was largely aimed at freeing up cash to hire more engineers who are skilled in AI.

How expensive is AI for the big technology companies? The write up provides this factoid which comes from the masterful management bastion:

Google AI leader Demis Hassabis said the company would likely spend more than $100 billion developing AI.

Smart software is the next big thing. Big outfits like Amazon, Google, Facebook, and Microsoft believe it. Venture firms appear to be into AI. Software development outfits are beavering away with smart technology to make their already stellar “good enough” products even better.

Money buys innovation until it doesn’t. The reason is that the time from roll out to saturation can be difficult to predict. Look how long it has taken the smart phones to become marketing exercises, not technology demonstrations. How significant is saturation? Look at the machinations at Apple or CPUs that are increasingly difficult to differentiate for a person who wants to use a laptop for business.

There are benefits. These include:

- Those getting fired can say, “AI RIF’ed me.”

- Investments in AI can perk up investors.

- Jargon-savvy consultants can land new clients.

- Leadership teams can rise above termination because these wise professionals are the deciders.

A few downsides can be identified despite the immaturity of the sector:

- Outputs can be incorrect leading to what might be called poor decisions. (Sorry, Ms. Smith, your child died because the smart dosage system malfunctioned.)

- A large, no-man’s land is opening between the fast moving start ups who surf on cloud AI services and the behemoths providing access to expensive infrastructure. Who wants to operate in no-man’s land?

- The lack of controls on smart software guarantees that bad actors will have ample tools with which to innovate.

- Knock-on effects are difficult to predict.

Net net: AI may be diffusing more quickly and in ways some experts chose to ignore… until they are RIF’ed.

Stephen E Arnold, April 25, 2024

Kicking Cans Down the Street Is Not Violence. Is It a Type of Fraud Perhaps?

April 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Ah, spring, when young men’s fancies turn to thoughts of violence. Forget the Iran Israel dust up. Forget the Russia special operation. Think about this Bloomberg headline:

Tech’s Cash Crunch Sees Creditors Turn ‘Violent’ With One Another

Thanks, ChatGPT. Good enough.

Will this be drones? Perhaps a missile or two? No. I think it will be marketing hoo hah. Even though news releases may not inflict mortal injury, although someone probably has died from bad publicity, the rhetorical tone seems — how should we phrase it — over the top maybe?

The write up says:

Software and services companies are in the spotlight after issuing almost $30 billion of debt that’s classed as distressed, according to data compiled by Bloomberg, the most in any industry apart from real estate.

How do wizards of finance react to this “risk”? Answer:

“These two phenomena, coupled with the covenant-lite nature of leveraged loans today, have been the primary drivers of the creditor-on-creditor violence we’re seeing,” he [Jason Mudrick, founder of distressed credit investor Mudrick Capital] said.

Shades of the Sydney slashings or vehicle fires in Paris.

Here’s an example:

One increasingly popular maneuver these days, known as non-pro rata uptiering, sees companies cut a deal with a small group of creditors who provide new money to the borrower, pushing others further back in the line to be repaid. In return, they often partake in a bond exchange in which they receive a better swap price than other creditors.

Does this sound like “Let’s kick the can down the road”? Not articulated is the idea, “Let’s see what happens. If we fail, our management team is free to bail out.”

Nifty, right?

Financial engineering is a no harm, no foul game for some. Those who lose money? Yeah, too bad.

Stephen E Arnold, April 25, 2024

AI Transforms Memories Into Real Images

April 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Human memory is unreliable. It’s unreliable because we forget details, remember things incorrectly, believe things happened when they didn’t, and have different perspectives. We rely on human memory for everything, especially when it comes to recreating the past. The past can be recorded and recreated, but one company is transforming memories into real images. MIT Technology Review explains how in “Generative AI Can Turn Your Most Precious Memories Into Photos That Never Existed.”

Synthetic Memories is a studio that takes memories and uses AI to make them real. Pau Garcia founded the studio and got the idea to start Synthetic Memories after speaking with Syrian refugees. An elderly Syrian wanted to capture her memories for her descendants and Garcia graffitied a building to record them. His idea has taken off:

“Dozens of people have now had their memories turned into images in this way via Synthetic Memories, a project run by Domestic Data Streamers. The studio uses generative image models, such as OpenAI’s DALL-E, to bring people’s memories to life. Since 2022, the studio, which has received funding from the UN and Google, has been working with immigrant and refugee communities around the world to create images of scenes that have never been photographed, or to re-create photos that were lost when families left their previous homes.”

Domestic Data Streamers are working in a building next to the Barcelona Design Museum to record and recreate people’s memories of the city. Anyone is allowed to add a memory to the archive.

In order to recreate a memory, Garcia and his team developed a simple process. An interviewer sits with a subject and asks them to recount a memory. A prompt engineer writes a prompt for a model on a laptop to generate an image. Garcia’s team have written an entire glossary of prompting terms. The terms need to be edited to be accurate, so the engineers work with the subjects.

Garcia and his team learned that older subjects connect better with physical copies of their images. Images that are blurry and warped also resonate more with people, because memories aren’t remembered in crisp detail. Garcia also stresses it is important to remember the difference between synthetic images and real photography. He says synthetic memories aren’t meant to be factual. He worries that if a larger company uses better versions of DALL-E, it will forgo older models in favor of photorealism.

Whitney Grace, April 25, 2024

Is Grandma Google Making Erratic Decisions?

April 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Clown Fish TV is unknown to me. The site published a write up which I found interesting and stuffed full of details I had not seen previously. The April 18, 2024, essay is “YouTubers Claim YouTube is Very Broken Right Now.” Let’s look at a handful of examples and see if these spark any thoughts in my dinobaby mind. As Vladimir Shmondenko says, “Let’s go.”

Grandma Googzilla has her view of herself. Nosce teipsum, right? Thanks, MSFT Copilot. How’s your security today?

Here’s a statement to consider:

Over the past 72 hours, YouTubers have been complaining on X about everything from delayed comments to a noticeable decline in revenue and even videos being removed by Google for nebulous reasons after being online for years.

Okay, sluggish functions from the video ad machine. I have noticed either slow-loading or dead video ads; that is, the ads take a long time (maybe a second or two to 10 seconds to show up) or nothing happens and a “Skip” button just appears. No ad to skip. I wonder, “Do the advertisers pay for a non-displayed ad followed by a skip?” I assume there is some fresh Google word salad available in the content cafeteria, but I have not spotted it. Those arrests have, however, caught my attention.

Another item from the essay:

In fact, many longtime YouTube content creators have announced their retirements from the platform over the past year, and I have to wonder if these algorithm changes aren’t a driving force behind that. There’s no guarantee that there will be room for the “you” in YouTube six months from now, let alone six years from now.

I am not sure I know many of the big-time content creators. I do know that the famous Mr. Beast has formed a relationship with the Amazon Twitch outfit. Is that megastar hedging his bets? I think he is. Those videos cost big bucks and could be on broadcast TV if there were a functioning broadcast television service in the US.

How about this statement:

On top of the algorithm shift, and on top of the monetization hit, Google is now reportedly removing old videos that violate their current year Terms of Service.

Shades of the 23andMe approach to Terms of Service. What struck me is that one of my high school history teachers — I think his name was Earl Skaggs — railed against Joseph Stalin’s changing Russian history and forcing textbooks to be revised to present Mr. Stalin’s interpretation of reality. Has Google management added changing history to their bag of tricks? I know that arresting employees is a useful management tool, but I have been relying on news reports. Maybe those arrests were “fake news.” Nothing surprises me where online information is in the mix.

I noted this remarkable statement in the Clown Fish TV essay:

Google was the glue that held all these websites together and let people get found. We’re seeing what a world looks like without Google. Because for many content creators and journalists, it’ll be practically worthless going forward.

I have selected a handful of items. The original article includes screenshots, quotes from people whom I assume are “experts” or whatever passes as an authority today, and questioning of the Google algorithm. But any of the Googlers with access to the algorithm can add a tweak or create a “wrapper” to perform a specific task. I am not sure too many Googlers know how to fiddle the plumbing anymore. Some of the “clever” code is now more than 25 years old. (People make fun of mainframes. Should more Kimmel humor be directed at 25 year old Google software?)

Observations are indeed warranted:

- I read Google criticism on podcasts; I read criticism of Google online. Some people are falling out of love with the Google.

- Google muffed the bunny with its transformer technology. By releasing software as open source, the outfit may have unwittingly demonstrated how out of touch its leadership team is and opened the door to some competitors able to move more quickly than Grandma Google. Microsoft. Davos. AI. Ah, yes.

- The Sundar & Prabhakar School of Strategic Thinking has allowed Google search to become an easy target. Metasearch outfits recycling poor old Bing results are praised for being better than Google. That’s quite an achievement and a verification that some high-school science club management methods don’t work as anticipated. I won’t mention arresting employees again. Oh, heck. I will. Google called the police on its own staff. Slick. Professional.

Net net: Clown Fish TV definitely has presented a useful image of Grandma Google and her video behaviors.

Stephen E Arnold, April 24, 2024

From the Cyber Security Irony Department: We Market and We Suffer Breaches. Hire Us!

April 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Irony, according to You.com, means:

Irony is a rhetorical device used to express an intended meaning by using language that conveys the opposite meaning when taken literally. It involves a noticeable, often humorous, difference between what is said and the intended meaning. The term “irony” can be used to describe a situation in which something which was intended to have a particular outcome turns out to have been incorrect all along. Irony can take various forms, such as verbal irony, dramatic irony, and situational irony. The word “irony” comes from the Greek “eironeia,” meaning “feigned ignorance”

I am not sure I understand the definition, but let’s see if these two “communications” capture the smart software’s definition.

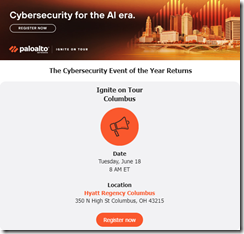

The first item is an email I received from the cyber security firm Palo Alto Networks. The name evokes the green swards of Stanford University, the wonky mall, and the softball games (co-ed, of course). Here’s the email solicitation I received on April 15, 2024:

The message is designed to ignite my enthusiasm because the program invites me to:

Join us to discover how you can harness next-generation, AI-powered security to:

- Solve for tomorrow’s security operations challenges today

- Enable cloud transformation and deployment

- Secure hybrid workforces consistently and at scale

- And much more.

I liked the much more. Most cyber outfits do road shows. Will I drive from outside Louisville, Kentucky, to Columbus, Ohio? I was thinking about it until I read:

“Major Palo Alto Security Flaw Is Being Exploited via Python Zero-Day Backdoor.”

Maybe it is another Palo Alto outfit. When I worked in Foster City (home of the original born-dead mall), I think there was a Palo Alto Pizza. But my memory is fuzzy and Plastic Fantastic Land does blend together. Let’s look at the write up:

For weeks now, unidentified threat actors have been leveraging a critical zero-day vulnerability in Palo Alto Networks’ PAN-OS software, running arbitrary code on vulnerable firewalls, with root privilege. Multiple security researchers have flagged the campaign, including Palo Alto Networks’ own Unit 42, noting a single threat actor group has been abusing a vulnerability called command injection, since at least March 26 2024.

Yep, seems to be the same outfit wanting me to “solve for tomorrow’s security operations challenges today.” The only issue is that the exploit was discovered a couple of weeks ago. If the write up is accurate, the exploit remains unfixed.

Perhaps this is an example of irony? However, I think it is a better example of the over-the-top yip yap about smart software and the efficacy of cyber security systems. Yes, I know it is a zero day, but it is a zero day only to Palo Alto. The bad actors who found the problem and exploited it already know the company has a security issue.

I mentioned in articles about some intelware that the developers do one thing, the software project manager does another, and the marketers output what amounts to hoo hah, baloney, and Marketing 101 hyperbole.

Yep, ironic.

Stephen E Arnold, April 24, 2024

Fake Books: Will AI Cause Harm or Do Good?

April 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read what I call a “howl” from a person who cares about “good” books. Now “good” is a tricky term to define. It is similar to “quality” or “love.” I am not going to try to define any of these terms. Instead I want to look at one example of smart software creating a problem for humans who create books. Then I want to focus attention on Amazon, the online bookstore. I think about two-thirds of American shoppers have some interaction with Amazon. That percentage is probably low if we narrow to top earners in the US. I want to wrap up with a reminder to those who think about smart software that the diffusion of technology chugs along and then — bang! — phase change. Spoiler: That’s what AI is doing now, and the pace is accelerating.

The Copilot image illustrates how smart software spreads. Cleaning up is a bit of a chore. The table cloth and the meeting may be ruined. But that’s progress of sorts, isn’t it?

The point of departure is an essay cum “real” news write up about fake books titled “Amazon Is Filled with Garbage Ebooks. Here’s How They Get Made.”

These books are generated by smart software and Fiverr-type labor. Dump the content in a word processor, slap on a title, and publish the work on Amazon. I write my books by hand, and I try to label that which I write or pay people to write as “the work of a dumb dinobaby.” Other authors do not follow my practice. Let many flowers bloom.

The write up states:

It’s so difficult for most authors to make a living from their writing that we sometimes lose track of how much money there is to be made from books, if only we could save costs on the laborious, time-consuming process of writing them. The internet, though, has always been a safe harbor for those with plans to innovate that pesky writing part out of the actual book publishing.

This passage explains exactly why fake books are created. The fact of fake books makes clear that AI technology is diffusing; that is, smart software is turning up in places and ways that the math people fiddling the numerical recipes or the engineers hooking up thousands of computing units never envisioned. Why would they? How many mathy types are able to remember their mother’s birthday?

The path for the “fake book” is easy money. The objective is not excellence, sophisticated demonstration of knowledge, or the mindlessness of writing a book “because.” The angst in the cited essay comes from the side of the coin that wants books created the old-fashioned way. Yeah, I get it. But today it is clear that the hand crafted books are going to face some challenges in the marketplace. I anticipate that “quality” fake books will convert the “real” book to the equivalent of a cuneiform tablet. Don’t like this? I am a dinobaby, and I call the trajectory as my experience and research warrants.

Now what about buying fake books on Amazon? Anyone can get an ISBN, but for Amazon, no ISBN is (based on our tests) no big deal. Amazon has zero incentive to block fake books. If someone wants a hard copy of a fake book, let Amazon’s own instant print service produce the copy. Amazon is set up to generate revenue, not be a grumpy grandmother forcing grandchildren to pick up after themselves. Amazon could invest to squelch fraudulent or suspect behaviors. But here’s a representative Amazon word salad explanation cited in the “Garbage Ebooks” essay:

In a statement, Amazon spokesperson Ashley Vanicek said, “We aim to provide the best possible shopping, reading, and publishing experience, and we are constantly evaluating developments that impact that experience, which includes the rapid evolution and expansion of generative AI tools.”

Yep, I suggest not holding one’s breath until Amazon spends money to address a pervasive issue within its service array.

Now the third topic: Slowly, slowly, then the frog dies. Smart software in one form or another has been around a half century or more. I can date smart software in the policeware / intelware sector to the late 1990s when commercial services were no longer subject to stealth operation or “don’t tell” business practices. For the ChatGPT-type services, NLP has been around longer, but it did not work largely due to computational costs and the hit-and-miss approaches of different research groups. Inference, DR-LINK, or one of the other notable early commercial attempts, anyone?

Okay, now the frog is dead, and everyone knows it. Better yet, navigate to any open source repository or respond to one of those posts on Pinboard or listings in Product Hunt, and you are good to go. Anthropic has released a cook book, just do-it-yourself ideas for building a start up with Anthropic tools. And if you write Microsoft Excel or Word macros for a living, you are already on the money road.

I am not sure Microsoft’s AI services work particularly well, but the stuff is everywhere. Microsoft is spending big to make sure it is not left out of any AI lunches in Dubai. I won’t describe the impact of the Manhattan chatbot. That’s a hoot. (We cover this slip up in the AItoAI video pod my son and I do once each month. You can find that information about NYC at this link.)

Net net: The tipping point has been reached. AI is tumbling and its impact will be continuous — at least for a while. And books? Sure, great books like those from Twitter luminaries will sell. To those without a self-promotion rail gun, cloudy days ahead. In fact, essays like “Garbage Ebooks” will be cranked out by smart software. Most people will be none the wiser. We are not facing a dead Internet; we are facing the death of high-value information. When data are synthetic, what’s original thinking got to do with making money?

Stephen E Arnold, April 24, 2024

So Much for Silicon Valley Solidarity

April 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I thought the entity called Benzinga was a press release service. Guess not. I received a link to what looked like a “real” news story written by a Benzinga Staff Writer named Jain Rounak: “Elon Musk Reacts As Marc Andreessen Says Google Is ‘Literally Run By Employee Mobs’ With ‘Chinese Spies’ Scooping Up AI Chip Designs.” The article is a short one, and it is not exactly what the title suggested to me. Nevertheless, let’s take a quick look at what seems to be some ripping of the Silicon Valley shibboleth of solidarity.

The members of the Happy Silicon Valley Social club are showing signs of dissension. Thanks, MSFT Copilot. How is your security today? Oh, really.

The hook for the story is another Google employee protest. The cause was a deal for Google to provide cloud services to Israel. I assume the Googlers split along ethno-political-religious lines: One group cheering for Hamas and another for Israel. (I don’t have any first-hand evidence, so I am leveraging the scant information in the Benzinga news story.)

Then what? Apparently Marc Andreessen of Netscape fame and AI polemics offered some thoughts. I am not sure where these assertions were made or if they are on the money. But, I grant to Benzinga, that the Andreessen emissions are intriguing. Let’s look at one:

“The company is literally overrun by employee mobs, Chinese spies are walking AI chip designs out the door, and they turn the Founding Fathers and the Nazis black.”

The idea that there are “Google mobs” running from Foosball court to vending machines and then to their quiet space and then to the parking lot is interesting. Where’s Charles Dickens of A Tale of Two Cities fame when you need an observer to document a revolution? Are Googlers building barricades in the passageways? Are Prius and Tesla vehicles being set on fire?

In the midst of this chaotic environment, there are Chinese spies. I am not sure one has to walk chip designs anywhere. Emailing them or copying them from one Apple device to another works reasonably well in my experience. The reference to the Google art is a reminder that the high school management club approach to running a potential trillion dollar, alleged monopoly needs some upgrades.

Where’s the Elon in this? I think I am supposed to realize that Elon and Andreessen are on the same mental wavelength. The Google is not. Therefore, the happy family notion is shattered. Okay, Benzinga. Whatever. Drop those names. The facts? Well, drop those too.

Stephen E Arnold, April 23, 2024

Google AI: Who Is on First? I Do Not Know. No, No, He Is on Third

April 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

A big reorg has rattled the Googlers. Not only are these wizards candidates for termination, the work groups are squished like the acrylic pour paintings thrilling YouTube crafters.

Image from Vizoli Art via YouTube at https://www.youtube.com/@VizoliArt

The image might be a representation of Google’s organization, but I am just a dinobaby without expertise in art or things Googley. Let me give you an example.

I read “Google Consolidates Its DeepMind and Research Teams Amid AI Push” (from the trust outfit itself, Thomson Reuters). The story presents the date as April 18, 2024. I learned:

The search engine giant had merged its research units Google Brain and DeepMind a year back to sharpen its focus on AI development and get ahead of rivals like Microsoft, a partner of ChatGPT and Sora maker OpenAI.

And who moves? The trust outfit says:

Google will relocate its Responsible AI teams – which focuses on safe AI development – from Research to DeepMind so that they are closer to where AI models are built and scaled, the company said in a blog post.

Ars Technica, which publishes articles without self-identifying with trust, ran “Google Merges the Android, Chrome, and Hardware Divisions.” That write up channels the acrylic pour approach to management, which Ars Technica describes this way:

Google Hardware SVP Rick Osterloh will lead the new “Platforms and Devices” division. Hiroshi Lockheimer, Google’s previous head of software platforms like Android and ChromeOS, will be headed to “some new projects” at Google.

Why? AI, of course.

But who runs this organizational mix up?

One answer appears in an odd little “real” news story from an outfit called Benzinga. “Google’s DeepMind to Lead Unified AI Charge as Company Seeks to Outpace Microsoft.” The write up asserts:

The reorganization will see all AI-related teams, including the development of the Gemini chatbot, consolidated under the DeepMind division led by Demis Hassabis. This consolidation encompasses research, model development, computing resources, and regulatory compliance teams…

I assume that the one big happy family of Googlers will sort out the intersections of AI, research, hardware, app software, smart software, lines of authority, P&L responsibility, and decision making. Based on my watching Google’s antics over the last 25 years, chaos seems to be part of the ethos of the company. One cannot forget that for all the AI razzle dazzle, Code Red, and reorganizational acrylic pouring, advertising accounts for about 60 percent of the firm’s financial footstool.

Will Google’s management team be able to answer the question, “Who is on first?” Will the result of the company’s acrylic pour approach to organizational structures yield a YouTube video like this one? The creator Left Brained Artist explains why acrylic paints crack, come apart, and generally look pretty darned terrible.

Will Google’s pouring units together result in a cracked result? Left Brained Artist’s suggestions may not apply to an online ad company trying to cope with difficult-to-predict competitors like the Zucker’s Meta or the Microsoft clump of AI stealth fighters: OpenAI, Mistral, et al.

Reviewing the information in these three write ups about Google, I will offer several of my unwanted and often irritating observations. Ready?

- Comparing the Microsoft AI re-organization to the Google AI re-organization, it seems to me that Microsoft has a more logical set up. Judging from the information to which I have access, Microsoft is closing deals for its AI technology with government entities and selected software companies. Microsoft is doing practical engineering drawings; Google is dumping acrylic paint, hoping it will be pretty and make sense.

- Google seems to be struggling from a management point of view. We have sit ins, we have police hauling off Googlers, and we have layoffs. We have re-organizations. We have numerous signals that the blue chip consulting approach to an online advertising outfit is a bit unpredictable. Hey, just sell ads and use AI to help you do it without creating 1960s-style college sophomore sit ins.

- Get organized. Make an attempt to answer the question, “Who is on first?”

As Abbott and Costello explained:

Costello: Well, all I’m trying to find out is what’s the guy’s name on first base?

Abbott: Oh, no, no. What is on second base?

Costello: I’m not asking you who’s on second.

Abbott: Who’s on first.

Exactly. Just sell online ads.

Stephen E Arnold, April 23, 2024