News Flash: Software Has a Quality Problem. Insight!

November 3, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “The Great Software Quality Collapse: How We Normalized Catastrophe.” What’s interesting about this essay is that the author cares about doing good work.

The write up states:

We’ve normalized software catastrophes to the point where a Calculator leaking 32GB of RAM barely makes the news. This isn’t about AI. The quality crisis started years before ChatGPT existed. AI just weaponized existing incompetence.

Marketing is more important than software quality. Right, rube? Thanks, Venice.ai. Good enough.

The bound phrase “weaponized existing incompetence” points to an issue in a number of knowledge-value disciplines. The author identifies some issues he has tracked; for example:

- Memory consumption in Google Chrome

- Windows 11 updates breaking the start menu and other things (printers, mice, keyboards, etc.)

- Security problems such as the long-forgotten CrowdStrike misstep that cost customers about $10 billion.

But the list of examples of indifferent or incompetent coding leads to one stop on the information superhighway: Smart software. The essay notes:

But the real pattern is more disturbing. Our research found:

- AI-generated code contains 322% more security vulnerabilities

- 45% of all AI-generated code has exploitable flaws

- Junior developers using AI cause damage 4x faster than without it

- 70% of hiring managers trust AI output more than junior developer code

We’ve created a perfect storm: tools that amplify incompetence, used by developers who can’t evaluate the output, reviewed by managers who trust the machine more than their people.

I quite like the bound phrase “amplify incompetence.”

The essay makes clear that the wizards of Big Tech AI prefer to spend money on plumbing (infrastructure), not software quality. The write up points out:

When you need $364 billion in hardware to run software that should work on existing machines, you’re not scaling—you’re compensating for fundamental engineering failures.

The essay concludes that Big Tech AI as well as other software development firms should shift focus.

Several observations:

- Good enough is now a standard of excellence.

- “Go fast” is better than “good work.”

- The appearance of something is more important than its substance.

Net net: It’s TikTok, YouTube, and a carnival midway bundled into a new type of work environment.

Stephen E Arnold, November 3, 2025

Don Quixote Takes on AI in Research Integrity Battle. A La Vista!

November 3, 2025

Scientific publisher Frontiers asserts its new AI platform is the key to making the most of valuable research data. ScienceDaily crows, “90% of Science is Lost. This New AI Just Found It.” Wow, 90%. Now who is hallucinating? Turns out that percentage only applies if one is looking at new research submitted within Frontiers’ new system. Cutting out past and outside research really narrows the perspective. The press release explains:

“Out of every 100 datasets produced, about 80 stay within the lab, 20 are shared but seldom reused, fewer than two meet FAIR standards, and only one typically leads to new findings. … To change this, [Frontiers’ FAIR² Data Management Service] is designed to make data both reusable and properly credited by combining all essential steps — curation, compliance checks, AI-ready formatting, peer review, an interactive portal, certification, and permanent hosting — into one seamless process. The goal is to ensure that today’s research investments translate into faster advances in health, sustainability, and technology. FAIR² builds on the FAIR principles (Findable, Accessible, Interoperable and Reusable) with an expanded open framework that guarantees every dataset is AI-compatible and ethically reusable by both humans and machines.”

That does sound like quite the time- and hassle-saver. And we cannot argue with making it easier to enact the FAIR principles. But the system will only achieve its lofty goals with wide buy-in from the academic community. Will Frontiers get it? The write-up describes what participating researchers can expect:

“Researchers who submit their data receive four integrated outputs: a certified Data Package, a peer-reviewed and citable Data Article, an Interactive Data Portal featuring visualizations and AI chat, and a FAIR² Certificate. Each element includes quality controls and clear summaries that make the data easier to understand for general users and more compatible across research disciplines.”

The publisher asserts its system ensures data preservation, validation, and accessibility while giving researchers proper recognition. The press release describes four example datasets created with the system as well as glowing reviews from select researchers. See the post for those details.

Cynthia Murrell, November 3, 2025

Academic Libraries May Get Fast Journal Access

November 3, 2025

Here is a valuable resource for academic librarians. Katina Magazine tells us, “For the User Who Needed an Article Yesterday, This Platform Delivers.” Reviewer Josh Zeller writes:

“Article Galaxy Scholar (AGS) is a premediated browser-based document delivery platform from Research Solutions (formerly Reprints Desk). Integrated with discovery search, it provides users ‘just-in-time’ access to PDFs of articles from a vast collection of titles at a relatively low per-article cost. The platform’s administrative tools are highly granular, allowing library staff to control every dimension of user purchasing, while its precise usage statistics can help inform future collection decisions.”

For better or worse, the system is powered by AWS and typically delivers results via email in fewer than seven seconds. It works with all major academic publishers and the average cost per article is $29. Though most AGS customers are in the US, enough are based in other countries to give Research Solutions experience in foreign copyright laws and access to more resources. Zeller describes the process:

“Both the front- and back-end interfaces of the AGS platform are entirely browser-based. Users typically submit AGS document delivery requests through their library’s discovery interface, into which AGS is fully integrated. For libraries using Ex Libris Primo, this is accomplished using the system’s OpenURL link resolver (Christopher & Edwards, 2024; Hlasten, 2024). Application programming interface (API) integrations are also available for all of the major interlibrary loan (ILL) and resource sharing platforms, including RapidILL, Rapido, ILLiad, Tipasa, and IDS Project (Landolt & Friesen, 2025). AGS links can also be integrated into other discovery services, like EBSCO FOLIO and WorldCat Discovery.”
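For readers curious about what an OpenURL link resolver actually receives, here is a minimal sketch, assuming the standard OpenURL 1.0 (ANSI/NISO Z39.88-2004) key/encoded-value format for a journal article. The resolver address, the helper name build_openurl, and the sample citation are hypothetical. The review does not say which parameters AGS or Primo pass, so treat this as an illustration of the protocol, not of the product.

```python
from urllib.parse import urlencode

# Hypothetical link resolver address; each library configures its own.
RESOLVER_BASE = "https://resolver.example.edu/openurl"


def build_openurl(atitle, jtitle, issn, volume, spage, date, doi=None):
    """Return an OpenURL 1.0 (Z39.88-2004) KEV request for a journal article.

    build_openurl is an illustrative helper, not part of any AGS or Primo API.
    """
    params = {
        "url_ver": "Z39.88-2004",                       # OpenURL version
        "url_ctx_fmt": "info:ofi/fmt:kev:mtx:ctx",      # ContextObject in KEV form
        "rft_val_fmt": "info:ofi/fmt:kev:mtx:journal",  # referent is a journal item
        "rft.genre": "article",
        "rft.atitle": atitle,   # article title
        "rft.jtitle": jtitle,   # journal title
        "rft.issn": issn,
        "rft.volume": volume,
        "rft.spage": spage,     # start page
        "rft.date": date,
    }
    if doi:
        # DOIs are carried as an info URI in rft_id.
        params["rft_id"] = f"info:doi/{doi}"
    # urlencode percent-encodes the values (spaces become '+').
    return f"{RESOLVER_BASE}?{urlencode(params)}"


if __name__ == "__main__":
    # Sample citation; all values are made up for illustration.
    print(build_openurl(
        atitle="An Example Article",
        jtitle="Journal of Examples",
        issn="1234-5678",
        volume="12",
        spage="34",
        date="2024",
        doi="10.1000/example.123",
    ))
```

In a setup like the one Zeller describes, a resolver receiving such a link could route the request to a document delivery service when no subscribed copy is available. That routing step is an assumption here; the review does not detail it.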

Though AGS itself does not incorporate AI, it can be integrated with the AI source-evaluation tool Scite. See the write-up for those and other details.

Cynthia Murrell, November 3, 2025

Hollywood Has to Learn to Love AI. You Too, Mr. Beast

October 31, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Russia’s leadership is good at talking, stalling, and doing what it wants. Is OpenAI copying this tactic? “OpenAI Cracks Down on Sora 2 Deepfakes after Pressure from Bryan Cranston, SAG-AFTRA” reports:

OpenAI announced on Monday [October 20, 2025] in a joint statement that it will be working with Bryan Cranston, SAG-AFTRA, and other actor unions to protect against deepfakes on its artificial intelligence video creation app Sora.

Talking, stalling (or “negotiating”), and then doing what it wants may be within the scope of this sentence.

The write up adds via a quote from OpenAI leadership:

“OpenAI is deeply committed to protecting performers from the misappropriation of their voice and likeness,” Altman said in a statement. “We were an early supporter of the NO FAKES Act when it was introduced last year, and will always stand behind the rights of performers.”

This sounds good. I am not sure it will impress teens as much as Mr. Altman’s posture on erotic chats, but the statement sounds good. If I knew Russian, it would be interesting to translate the statement. Then one could compare the statement with some of those emitted by the Kremlin.

Producing a big budget commercial film or a Mr. Beast-type video will look very different in 18 to 24 months. Thanks, Venice.ai. Good enough.

Several observations:

- Mr. Altman has to generate cash or the appearance of cash. At some point investors will become pushy. Pushy investors can be problematic.

- OpenAI’s approach to model behavior does not give me confidence that the company can figure out how to engineer guardrails and then enforce them. Young men and women fiddling with OpenAI can be quite ingenious.

- The BBC ran a news program with the news reader as a deep fake. What does this suggest about a Hollywood producer facing financial pressure working out a deal with an AI entrepreneur facing even greater financial pressure? I think it means that humanoids are expendable: first a little bit, and then for the entire digital production. Gamification will be too delicious.

Net net: I think I know how this interaction will play out. Sam Altman, the big name stars, and the AI outfits know. The lawyers know. Who doesn’t know? Frankly everyone knows how digital disintermediation works. Just ask a recent college grad with a degree in art history.

Stephen E Arnold, October 31, 2025

FAIR Squared Data Management Promises to Find Missing Data

October 31, 2025

It’s very true that information is lost or hidden away in archives never to see the light of day. That’s why it’s important to preserve the information and even use AI to make it available. ScienceDaily reports on a new information management tool that claims to have a solution: “90% Of Science Is Lost. This New AI Just Found It.” FAIR² Data Management is designed by Frontiers and is:

“…described as the world’s first comprehensive, AI-powered research data service. It is designed to make data both reusable and properly credited by combining all essential steps — curation, compliance checks, AI-ready formatting, peer review, an interactive portal, certification, and permanent hosting — into one seamless process. The goal is to ensure that today’s research investments translate into faster advances in health, sustainability, and technology.”

The data management system is built on a robust AI algorithm. Researchers feed their data into FAIR², and four integrated outputs are returned: a certificate, an interactive data portal with AI chat and visualizations, a peer-reviewed and citable data article, and a certified data package. All of these components work “[t]ogether,…to…ensure that every dataset is preserved, validated, citable, and reusable, helping accelerate discovery while giving researchers proper recognition.”

This is a great idea and how AI should ideally be used to ensure that information is credible. If only all AI algorithms employed a data management algorithm like this to prevent AI slop, drivel, and garbage from clogging up the Internet and our brains.

Whitney Grace, October 31, 2025

Will AMD Deal Make OpenAI Less Deal Crazed? Not a Chance

October 31, 2025

Why does this deal sound a bit like moving money from dad’s coin jar to mom’s spare change box? AP News reports, “OpenAI and Chipmaker AMD Sign Chip Supply Partnership for AI Infrastructure.” We learn AMD will supply OpenAI with hardware so cutting edge it won’t even hit the market until next year. The agreement will also allow OpenAI to buy up about 10% of AMD’s common stock. The day the partnership was announced, AMD’s shares went up almost 24%, while rival chipmaker Nvidia’s went down 1%. The write-up observes:

“The deal is a boost for Santa Clara, Calif.-based AMD, which has been left behind by rival Nvidia. But it also hints at OpenAI’s desire to diversify its supply chain away from Nvidia’s dominance. The AI boom has fueled demand for Nvidia’s graphics processing chips, sending its shares soaring and making it the world’s most valuable company. Last month, OpenAI and Nvidia announced a $100 billion partnership that will add at least 10 gigawatts of data center computing power. OpenAI and its partners have already installed hundreds of Nvidia’s GB200, a tall computing rack that contains dozens of specialized AI chips within it, at the flagship Stargate data center campus under construction in Abilene, Texas. Barclays analysts said in a note to investors Monday that OpenAI’s AMD deal is less about taking share away from Nvidia than it is a sign of how much computing is needed to meet AI demand.”

No doubt. We are sure OpenAI will buy up all the high-powered graphics chips it can get. But after it and other AI firms acquire their chips, will there be any left for regular consumers? If so, expect their costs to remain sky high. Just one more resource AI firms are devouring with little to no regard for the impact on others.

Cynthia Murrell, October 31, 2025

AI Will Kill, and People Will Grow Accustomed to That … Smile

October 30, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I spotted a story in SFGate, which I think was or is part of a dead tree newspaper. What struck me was the photograph (allegedly not a deep fake) of two people looking not just happy. I sensed a bit of self satisfaction and confidence. Regardless, both people grace “Society Will Accept a Death Caused by a Robotaxi, Waymo Co-CEO Says.” Death, as far back as I can recall as an 81-year-old dinobaby, has never made me happy, but I just accepted the way life works. Part of me says that my vibrating waves will continue. I think Blaise Pascal suggested that one should believe in God because what’s the downside? Go, Blaise, a guy who did not get to experience an accident involving a self-driving smart vehicle.

A traffic jam in a major metro area. The cause? A self-driving smart vehicle struck a school bus. But everyone is accustomed to this type of trivial problem. Thanks, MidJourney. Good enough like some high-tech outfits’ smart software.

But Waymo is a Google confection dating from 2010 if my memory is on the money. Google is a reasonably big company. It brokers, sells, and creates a market for its online advertising business. The cash spun from that revolving door is used to fund great ideas and moon shots. Messrs. Brin, Page, and assorted wizards had some time to kill as they sat in their automobiles creeping up and down Highway 101. The thought of a self-driving car that would allow a very intelligent, multi-tasking driver to do something more productive than become a semi-sentient meat blob sparked an idea. We can rig a car to creep along Highway 101. Cool. That insight spawned what is now known as Waymo.

An estimable Google Waymo expert found himself involved in litigation related to Google’s intellectual property. I had ignored Waymo until Anthony Levandowski founded a company, sold it to Uber, and then ended up in a legal matter that lasted from 2017 to 2019. Publicity, I have heard, whether positive or negative, is good. I knew about Waymo: A Google project, intellectual property, and litigation. Way to go, Waymo.

For me, Waymo appears in some social media posts (allegedly actual factual) when Waymo vehicles get trapped in a dead end in Cow Town. Sometimes the Waymos don’t get out of the way of traffic barriers and sit purring and beeping. I have heard that some residents of San Francisco have [a] kicked Waymos, [b] sprayed graffiti on them, and/or [c] put traffic cones on certain roads to befuddle the smart Google software-powered vehicles. From a distance, these look a bit like something from a Mad Max motion picture.

My personal view is that I would never stand in front of a rolling Waymo. I know that [a] Google search results are not particularly useful, [b] Google’s AI outputs crazy information like using glue to keep cheese on pizza, and [c] Waymos have been involved in traffic incidents, which cause me to stay away from them.

The cited article says that the Googler said, in response to a question about a hypothetical Waymo killing a person:

“I think that society will,” Mawakana answered, slowly, before positioning the question as an industry wide issue. “I think the challenge for us is making sure that society has a high enough bar on safety that companies are held to.” She said that companies should be transparent about their records by publishing data about how many crashes they’re involved in, and she pointed to the “hub” of safety information on Waymo’s website. Self-driving cars will dramatically reduce crashes, Mawakana said, but not by 100%: “We have to be in this open and honest dialogue about the fact that we know it’s not perfection.” [Emphasis added by Beyond Search]

My reactions to this allegedly true and accurate statement from a Googler are:

- I am not confident that Google can be “transparent.” Google, according to one US court, is a monopoly. Google has been fined by the European Union for saying one thing and doing another. The only reason I know about these court decisions is that legal processes released information. Google did not provide the information as part of its commitment to transparency.

- Waymos create problems because the Google smart software cannot handle the demands of driving in the real world. The software is good enough, but not good enough to figure out dead ends, actions by human drivers, and potentially dangerous situations. I am aware of fender benders and collisions with fixed objects that have surfaced in Waymo’s 15-year history.

- Self-driving cars, specifically Waymos, will injure or kill people. But Waymo cars are safe. So some level of killing humans is okay with Google, regulators, and society in general. What about the family of the person who is killed by good enough Google software? The answer: The lawyers will blame something other than Google, then fight in court because Google has oodles of cash from its estimable online advertising business.

The cited article quotes the Waymo Googler as saying:

“If you are not being transparent, then it is my view that you are not doing what is necessary in order to actually earn the right to make the roads safer,” Mawakana said. [Emphasis added by Beyond Search]

Of course, I believe everything Google says. Why not believe that Waymos will make deaths caused by self-driving vehicles acceptable? Why not believe Google is transparent? Why not believe that Google will make roads safer? Why not?

But I like the idea that people will accept an AI vehicle killing people. Stuff happens, right?

Stephen E Arnold, October 30, 2025

Old Social Media Outfits May Be Vulnerable: Wrong Product, Wrong Time

October 30, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “Social Media Became Television. Gen Z Changed the Channel.” I liked the title. I liked the way data were used to support the assertion about young people. I don’t think the conclusion is accurate.

Let’s look at what the write up asserts.

First, I noted this statement:

Turns out, infinite video from people you don’t know has a name we already invented in 1950: Television.

I think this means that digital services are the “vast wasteland” Newton Minow used to describe television in 1961. I was 17 years old and a freshman in college. My parents acquired a TV set in 1956 when I was 12 years old. I vaguely remember that it sucked. My father watched the news. My mother did not pay any attention as far as I can recall. Not surprisingly, I was not TV oriented, and I am not today.

The write up says:

For twenty years, tech companies optimized every platform toward the same end state. Student directories became feeds. Messaging apps became feeds. AI art tools became feeds. Podcasts moved to video. Newsletters added video. Everything flowed toward the same product: endless short videos recommended by machines.

I agree. But that is a consequence of shifting to digital media. Fast, easy, crispy information becomes “important.”

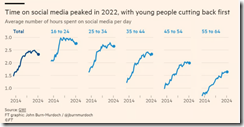

The write up says via a quote from an entity known as John Burn-Murdoch:

It has gone largely unnoticed that time spent on social media peaked in 2022 and has since gone into steady decline

That’s okay. I don’t know if the statement is true or false. This chart is presented to support the assertion:

The problem is that the downturn in the 16-to-24 line looks like a dip, but it is a dip from a high level of consumption. And what about the 11- to 15-year-olds, what I call GenAI? Not on the radar.

This quote supports the assertion that content consumption has shifted from friends to anonymous sources:

Today, only a fraction of time spent on Meta’s services—7% on Instagram, 17% on Facebook—involves consuming content from online “friends” (“friend sharing”). A majority of time spent on both apps is watching videos, increasingly short-form videos that are “unconnected”—i.e., not from a friend or followed account—and recommended by AI-powered algorithms Meta developed as a direct competitive response to TikTok’s rise, which stalled Meta’s growth.

Okay, Meta has growth problems. I would add that Telegram has growth problems. The antics of the Googlers make clear that the firm has growth problems. I would argue that Microsoft has growth problems. Each of these outfits has run out of prospects. Lower birth rates, cost, and the fear-centric environment may have something to do with online behaviors.

My view is that social media and short videos are not going away. New services are going to emerge. Meta-era outfits are just experiencing what happened to the US steel industry when newer technology became available in lower-cost countries. The US auto industry is in a vulnerable position because of China’s manufacturing, labor cost, and regulatory environment.

The flow of digital information is not stopping. Those who lose the ability to think will find ways to pretend to be learning, having fun, and contributing to society. My concern is that what these young people think and actually do are likely to be more surprising than the magnetism of platforms a decade old, crafted for users who have moved on.

The buzzy services will be anchored in AI and probably feature mental health, personalized “chats,” and synthetic relationships. Yep, a version of a text chat or radio.

Stephen E Arnold, October 30, 2025

Is It Unfair to Blame AI for Layoffs? Sure

October 30, 2025

When AI exploded onto the scene, we were promised the tech would help workers, not replace them. Then that story began to shift, with companies revealing they do plan to slash expenses by substituting software for humans. But some are skeptical of this narrative, and for good reason. Techspot asks, “Is AI Really Behind Layoffs, or Just a Convenient Excuse for Companies?” Reporter Rob Thubron writes:

“Several large organizations, including Accenture, Salesforce, Klarna, Microsoft, and Duolingo, have said they are reducing staff numbers as AI helps streamline operations, reduce costs, and increase efficiency. But Fabian Stephany, Assistant Professor of AI & Work at the Oxford Internet Institute, told CNBC that companies are ‘scapegoating’ the technology.”

Stephany notes many companies are still trying to expel the extra humans they hired during the pandemic. Apparently, return-to-office mandates have not driven out as many workers as hoped. The write-up continues:

“Blaming AI for layoffs also has its advantages. Multibillion- and trillion-dollar companies can not only push the narrative that the changes must be made in order to stay competitive, but doing so also makes them appear more cutting-edge, tech-savvy, and efficient in the eyes of potential investors. Interestingly, a study by the Yale Budget Lab a few weeks ago showed there is little evidence that AI has displaced workers more severely than earlier innovations such as computers or the internet. Meanwhile, Goldman Sachs Research has estimated that AI could ultimately displace 6 to 7 percent of the US workforce, though it concluded the effect would likely be temporary.”

The write-up includes a graph Anthropic made in 2023 that compares gaps between actual and expected AI usage by occupation. A few fields overshot the expectation, most notably computer and mathematical jobs. Most, though, fell short. So are workers really losing their jobs to AI? Or is that just a high-tech scapegoat?

Cynthia Murrell, October 30, 2025

Creative Types: Sweating AI Bullets

October 30, 2025

Artists and authors are in a tizzy (and rightly so) because AI is stealing their content. AI algorithms may also put them out of jobs, but the latest data from Nieman Lab explains that people are using chatbots for information seeking rather than for content creation: “People Are Using ChatGPT Twice As Much As They Were Last Year. They’re Still Just As Skeptical Of AI In News.”

Usage of AI chatbots has doubled compared with the previous year. They are being used for tasks formerly reserved for search engines and news outlets. There is still ambivalence about the information they provide.

Here are stats about information consumption trends:

“For publishers worried about declining referral traffic, our findings paint a worrying picture, in line with other recent findings in industry and academic research. Among those who say they have seen AI answers for their searches, only a third say they “always or often” click through to the source links, while 28% say they “rarely or never” do. This suggests a significant portion of user journeys may now end on the search results page.

Contrary to some vocal criticisms of these summaries, a good chunk of population do seem to find them trustworthy. In the U.S., 49% of those who have seen them express trust in them, although it is worth pointing out that this trust is often conditional.”

When it comes to trust habits, people rely on AI for low-stakes, “first pass” information or when an answer only needs to be “good enough,” because AI is trained on large amounts of data. When the stakes are higher, people do further research. There is a “comfort gap” between news produced by AI and news produced with human oversight. Very few people trust AI implicitly. People still prefer humans curating and writing the news over a machine. They also don’t mind AI being used for assisting tasks such as editing or translation, but a human touch is still needed on the final product.

Humans are still needed, as is old-fashioned information gathering. The process remains the same; only the tools have changed.

Whitney Grace, October 30, 2025