Nine Things Revised for Gens X, Y, and AI

September 25, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/09/green-dino_thumb_thumb3_thumb-1.gif) This essay is the work of a dumb dinobaby. No smart software required.

A dinobaby named Edward Packard wrote a good essay titled “Nine Things I Learned in 90 Years.” As a dinobaby, I found the points interesting. However, I think there will be “a failure to communicate.” How can this be? Mr. Packard is a lawyer skilled at argument. He is a US military veteran. He is an award-winning author. A lifetime of achievement has accrued.

Let’s make the nine things more on target for the GenX, GenY, and GenAI cohorts. Here’s my recasting of Mr. Packard’s ideas tuned to the hyper frequencies on which these younger groups operate.

Can the communication gap be bridged? Thanks, MidJourney. Good enough.

The table below presents Mr. Packard’s learnings in one column and the version for the Gen whatevers in the second column. Please, consult Mr. Packard’s original essay. My compression absolutely loses nuances to fit into the confines of a table. The Gen whatevers will probably be okay with how I convert Mr. Packard’s life nuggets into gold suitable for use in a mobile device-human brain connection.

| Packard Learnings | GenX, Y, AI Version |
| --- | --- |
| Be self-constituted | Rely on AI chats |
| Don’t operate on cruise control | Doomscroll |
| Consider others’ feelings | Me, me, me |
| Be happy | Coffee and YouTube |
| Seek eternal views | Twitch is my beacon |
| Do not deceive yourself | Think it and it will become reality |
| Confront mortality | Science (or Google) will solve death |
| Luck plays a role | My dad: A connected Yale graduate with a Harvard MBA |
| Consider what you have | Instagram dictates my satisfaction level, thank you! |

I appreciate Mr. Packard’s observations. These will resonate at the local old age home and among the older people sitting around the cast iron stove in rural Kentucky where I live.

Bridges in Harlan County, Kentucky, are tough to build. Iowa? New Jersey? I don’t know.

Stephen E Arnold, September 25, 2025

Telegram Does Content. OpenAI Plants a Grove

September 25, 2025

Written by an unteachable dinobaby. Live with it.

Telegram uses contests to identify smart people who are into Telegram apps. For the last decade, Telegram’s approach has worked reasonably well. The method eliminates much of the bureaucracy and cost of a traditional human resources operation.

OpenAI has a different approach. “OpenAI Announces Grove, a Cohort for ‘Pre-Idea Individuals’ to Build in AI” reports:

OpenAI announced a new program called Grove on September 12, which is aimed at assisting technical talent at the very start of their journey in building startups and companies. The ChatGPT maker says that it isn’t a traditional startup accelerator program, and offers ‘pre-idea’ individuals access to a dense talent network, which includes OpenAI’s researchers, and other resources to build their ideas in the AI space.

OpenAI’s big dog is not emulating the YCombinator approach, nor is he knocking off a copy of the Telegram contests. He is looking for talented people who can create viable applications.

The approach, according to the cited article, is:

The program will begin with five weeks of content hosted in OpenAI’s headquarters in San Francisco, United States. This includes in-person workshops, weekly office hours, and mentorship with OpenAI’s leaders. The first Grove cohort will consist of approximately 15 participants, and OpenAI is recommending individuals from all domains and disciplines across various experience levels.

Will the approach work? Who knows. Telegram’s approach casts a wide net, and it is supported by the evangelism with cash approach of Telegram’s proxy, the TON Foundation. OpenAI is starting small. Telegram reviews “solutions” to coding problems. OpenAI’s Grove is more like a window box with some petunias and maybe a periwinkle or two.

The Telegram and OpenAI approaches illustrate how some high profile organizations are trying to arrive at personnel and partner solutions in a way different from that taken by Salesforce or similar quasi-new era outfits.

What other ideas will Mr. Altman implement? Is Telegram a source of inspiration to him?

Stephen E Arnold, September 25, 2025

Modern Management Method and Modern Pricing Plan

September 25, 2025

Sadly I am a dinobaby and too old and stupid to use smart software to create really wonderful short blog posts.

Despite the sudden drop in quantity and quality in my newsfeed outputs, one of my team spotted a blog post titled “Slack Is Extorting Us with a $195K/Year Bill Increase.” Slack is, I believe, a unit of Salesforce. That firm is in the digital Rolodex business. Over the years, Salesforce has dabbled with software to help sales professionals focus. The effort was part of Salesforce’s attention retention push. Now Salesforce is into collaborative tools for professionals engaged in other organizational functions. The pointy end of the “force” is smart software. The leadership of Salesforce has spoken about the importance of AI and suggested that other firms’ collaboration software is not keeping up with Slack.

A forward-leaning team of deciders reaches agreement about pricing. The alpha dog is thrilled with the idea of a price hike. The beta buddies are less enthusiastic. But it is accounting’s job to collect on booked but unpaid revenue. The AI system called Venice produced this illustration.

The write up says:

For nearly 11 years, Hack Club – a nonprofit that provides coding education and community to teenagers worldwide – has used Slack as the tool for communication. We weren’t freeloaders. A few years ago, when Slack transitioned us from their free nonprofit plan to a $5,000/year arrangement, we happily paid. It was reasonable, and we valued the service they provided to our community.

The “attention” grabber in this blog post is this paragraph:

However, two days ago, Slack reached out to us and said that if we don’t agree to pay an extra $50k this week and $200k a year, they’ll deactivate our Slack workspace and delete all of our message history.

I think there is a hint of a threat to the Salesforce customer. I am probably incorrect. Salesforce is popular, and it is owned by a high profile outfit embracing attention and AI. Assume that the cited passage reflects how the customer understood the invoice and its 3,000 percent plus increase and the possible threat. My question is, “What type of management process is at work at Salesforce / Slack?”
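The “3,000 percent plus” figure can be checked with a few lines of arithmetic. This is a minimal sketch using only the numbers in the quoted passages, and it assumes the $200k figure is the new annual total replacing the $5,000 arrangement (the one-time “extra $50k” is left out). The variable names are mine, not Hack Club’s or Slack’s.

```python
# Figures taken from the quoted Hack Club post.
# Assumption: $200k/year is the new annual bill, replacing $5,000/year.
old_annual = 5_000
new_annual = 200_000

increase = new_annual - old_annual             # dollars added per year
percent_increase = increase / old_annual * 100
multiple = new_annual / old_annual

print(f"Annual increase: ${increase:,}")
print(f"Percent increase: {percent_increase:,.0f}%")
print(f"New bill is {multiple:.0f}x the old one")
```

Under that assumption, the annual increase matches the $195K in the blog post’s title, and the percentage lands comfortably above 3,000.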

Here are my thoughts. Please, remember that I am a dinobaby and generally clueless about modern management methods used to establish pricing.

- Salesforce has put pressure on Slack to improve its revenue quickly. The Slack professionals knee jerked and boosted bills to outfits likely to pay up and keep quiet. Thus, the Hack Club received a big bill. Do this enough times and you can demonstrate more revenue, even though it may be unpaid. Let the bean counters work to get the money. I wonder if this is passive resistance from Slack toward Salesforce’s leadership? Oh, of course not.

- Salesforce’s pushes for attention and AI are not pumping the big bucks Salesforce needs to avoid the negative consequences of missing financial projections. Bad things happen when this occurs.

- Salesforce / Slack are operating in a fog of unknowing. The hoped-for big payoffs from attention and AI are slow to materialize. The spreadsheet fever that justifies massive investments in AI is yielding to some basic financial realities: Customers are buying. Sticking AI into communications is not a home run for Slack users, and it may not be for the lucky bean counters who have to collect on the invoices for booked but unpaid revenue.

The write up states:

Anyway, we’re moving to Mattermost. This experience has taught us that owning your data is incredibly important, and if you’re a small business especially, then I’d advise you move away too.

Salesforce / Slack loses a customer and the costs associated with handling data for what appears to be a lower priority and lower value customer.

Modern management methods are logical and effective. Never has a dinobaby learned so much about today’s corporate tactics as I have from my reading about outfits like Salesforce / Slack.

Stephen E Arnold, September 25, 2025

Same Old Search Problem, Same Old Search Solution

September 25, 2025

A problem as old as time is finding information within an organization. A good company organizes its information in paper and digital files, but most don’t do this. Digital information is arguably harder to find because you never know what hard drive or utility disc to search through. Apparently BlueDocs, via WRAL News, found a solution to this issue: “BlueDocs Unveils Revolutionary AI Global Search Feature, Transforming How Organizations Access Internal Documentation Software.”

The press release about BlueDocs, an AI global documentation software platform, opens with the usual industry and revolutionary jargon. Blah. Blah. Blah.

They have a special sauce:

“Unlike traditional search solutions that operate within platform boundaries, AI Global Search leverages advanced artificial intelligence to understand context, intent, and relationships across disparate knowledge sources. Users can now execute a single search query to simultaneously explore BlueDocs content, Google Workspace files, Microsoft 365 documents, and integrated third-party platforms.”

It delivers special results:

“ ‘AI Global Search has fundamentally changed how our team accesses information,’ said one Beta Customer. ‘What used to require checking five different platforms now happens with a single search. It’s particularly transformative for onboarding new team members who previously needed training on multiple systems just to find basic information.’”

Does this lingo sound like every other enterprise search solution’s marketing collateral? If BlueDocs delivers an easily programmable, out-of-the-box solution that interfaces across all platforms and returns usable results: EXCELLENT. If it needs extra tech support at a very special low price and custom engineering, the similarity with enterprise search of yore is back again.

Whitney Grace, September 25, 2025

Want to Catch the Attention of Bad Actors? Say, Easier Cross Chain Transactions

September 24, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I know from experience that most people don’t know about moving crypto in a way that makes deanonymization difficult. Commercial firms offer deanonymization services. Most of the well-known outfits’ technology delivers. Even some home-grown approaches are useful.

For a number of years, Telegram has been the go-to service for some Fancy Dancing related to obfuscating crypto transactions. However, Telegram has been slow on the trigger when it comes to smart software and to some of the new ideas percolating in the bubbling world of digital currency.

A good example of what’s ahead for traders, investors, and bad actors is described in “Simplifying Cross-Chain Transactions Using Intents.” Like most crypto thought, confusing lingo is a requirement. In this article, the word “intent” refers to having crypto currency in one form like USDC and getting 100 SOL or some other crypto. The idea is that one can have fiat currency in British pounds, walk up to a money exchange in Berlin, and convert the pounds to euros. One pays a service charge. Now in crypto land, the crypto has to move across a blockchain. Then to get the digital exchange to do the conversion, one pays a gas fee; that is, a transaction charge. Moving USDC across multiple chains is a hassle and the fees pile up.

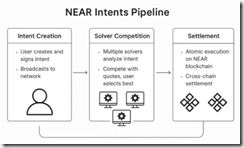

The article “Simplifying Cross-Chain Transactions Using Intents” explains a brave new world. No more clunky Telegram smart contracts and bots. Now the transaction just happens. How difficult will the deanonymization process become? Speed makes life difficult. Moving across chains makes life difficult. It appears that “intents” will be a capability of considerable interest to entities interested in making crypto transactions difficult to deanonymize.

The write up says:

In technical terms, intents are signed messages that express a user’s desired outcome without specifying execution details. Instead of crafting complex transaction sequences yourself, you broadcast your intent to a network of solvers (sophisticated actors) who then compete to fulfill your request.

The write up explains the benefit for the average crypto trader:

when you broadcast an intent, multiple solvers analyze it and submit competing quotes. They might route through different DEXs, use off-chain liquidity, or even batch your intent with others for better pricing. The best solution wins.

Now, think of solvers as your personal trading assistants who understand every connected protocol, every liquidity source, and every optimization trick in DeFi. They make money by providing better execution than you could achieve yourself and save you a lot of time.

Does this sound like a use case for smart software? It is, but the approach is less complicated than what one must implement using other approaches. Here’s a schematic of what happens in the intent pipeline:

The secret sauce for the approach is what is called a “1Click API.” The API handles the plumbing for the crypto bridging or crypto conversion from currency A to currency B.
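For readers who want the solver competition from the quoted passages in concrete form, here is a toy sketch. It is not the 1Click API and makes no claim about how that API works. The class names, fields, solver names, and amounts are all hypothetical, and signing, settlement, and fees are left out. The only idea illustrated is the one the article describes: the user states an outcome, solvers submit competing quotes, and the best quote wins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Intent:
    """Hypothetical intent: the outcome the user wants, with no routing details."""
    sell_asset: str
    sell_amount: float
    buy_asset: str
    min_buy_amount: float  # the user will not accept less than this


@dataclass(frozen=True)
class Quote:
    """Hypothetical quote from one solver: how much of buy_asset it will deliver."""
    solver: str
    buy_amount: float


def pick_best_quote(intent: Intent, quotes: list[Quote]) -> Optional[Quote]:
    """Return the quote delivering the most, or None if none meets the minimum."""
    eligible = [q for q in quotes if q.buy_amount >= intent.min_buy_amount]
    if not eligible:
        return None
    return max(eligible, key=lambda q: q.buy_amount)


# Made-up example: swap 1,000 USDC for at least 4 SOL.
intent = Intent("USDC", 1000.0, "SOL", 4.0)
quotes = [
    Quote("solver_a", 4.10),
    Quote("solver_b", 4.25),
    Quote("solver_c", 3.90),  # below the user's minimum, so ignored
]

best = pick_best_quote(intent, quotes)
print(best)
```

The point for an investigator is visible even in the toy: the user never names a route. Which exchanges, chains, or off-chain liquidity a winning solver used is the solver’s business, not something the intent itself records.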

If you are interested in how this system works, the cited article provides a list of nine links. Each provides additional detail. To be up front, some of the write ups are more useful than others. But three things are clear:

- Deobfuscation is likely to become more time consuming and costly.

- The system described could be implemented within the Telegram blockchain system as well as other crypto conversion operations.

- The described approach can be further abstracted into an app with more overt smart software enablements.

My thought is that money launderers are likely to be among the first to explore this approach.

Stephen E Arnold, September 24, 2025

Fixing AI Convenience Behavior: Lead, Collaborate, and Mindset?

September 24, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “AI-Generated “Workslop” Is Destroying Productivity.” Six people wrote the article for the Harvard Business Review. (Whatever happened to independent work?)

The write up reports:

Employees are using AI tools to create low-effort, passable looking work that ends up creating more work for their coworkers.

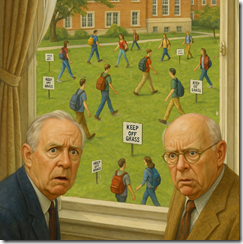

Let’s consider this statement in the context of college students’ behavior when walking across campus. I was a freshman in college in 1962. The third rate institution had a big green area with no cross paths. The enlightened administration put up “keep off the grass” signs.

What did the students do? They walked the shortest distance between two points. Why? Why go the long way? Why spend extra time? Why be stupid? Why be inconvenienced?

The cited write up from the estimable Harvard outfit says:

But while some employees are using this ability [AI tools] to polish good work, others use it to create content that is actually unhelpful, incomplete, or missing crucial context about the project at hand. The insidious effect of workslop is that it shifts the burden of the work downstream, requiring the receiver to interpret, correct, or redo the work. In other words, it transfers the effort from creator to receiver.

Yep, convenience. Why waste effort?

The fix is to eliminate smart software. But that won’t happen. Why? Smart software provides a way to cut humanoids from the costs of running a business. Efficiency works. Well, mostly.

The write up says:

we jettison hard mental work to technologies like Google because it’s easier to, for example, search for something online than to remember it. Unlike this mental outsourcing to a machine, however, workslop uniquely uses machines to offload cognitive work to another human being. When coworkers receive workslop, they are often required to take on the burden of decoding the content, inferring missed or false context.

And what about changing this situation? Did the authors trot out the old chestnuts from Kurt Lewin and the unfreeze, change, refreeze model? Did the authors suggest stopping AI deployment? Nope. The fix involves:

- Leadership

- Mindsets

- Collaboration

Just between you and me, I think this team of authors is angling for some juicy consulting assignments. These will involve determining the who, how much, what, and impact of using slop AI. Then there will be a report with options. The team will implement “options” and observe the results. If the process works, the client will sign a long-term contract with the team.

Keep off the grass!

Stephen E Arnold, September 24, 2025

A Googler Explains How AI Helps Creators and Advertisers in the Googley Maze

September 24, 2025

Sadly I am a dinobaby and too old and stupid to use smart software to create really wonderful short blog posts.

Most of Techmeme’s stories are paywalled. But one slipped through. (I wonder why?) Anyhow, the article in question is “An Interview with YouTube CEO Neal Mohan about Building a Stage for Creators.” The interview is a long one. I want to focus on a couple of statements and offer a handful of observations.

The first comment by the Googler Mohan is this one:

Moving away from the old model of the cliché Madison Avenue type model of, “You go out to lunch and you negotiate a deal and it’s bespoke in this particular fashion because you were friends with the head of ad sales at that particular publisher”. So doing away with that model, and really frankly, democratizing the way advertising worked, which in our thesis, back to this kind of strategy book, would result in higher ROI for publishers, but also better ROI for advertisers.

The statement makes clear that disrupting advertising was the key to what is now the Google Double Click model. Instead of Madison Avenue, today there is the Google model. I think of it as a maze. Once one “gets into” the Google Double Click model, there is no obvious exit.

The art was generated by Venice.ai. No human needed. Sorry freelance artists on Fiverr.com. This is the future. It will come to YouTube as well.

Here’s the second comment I noted:

everything that we build is in service of people that are creative people, and I use the term “creator” writ large. YouTubers, artists, musicians, sports leagues, media, Hollywood, etc., and from that vantage point, it is really exceedingly clear that these AI capabilities are just that, they’re capabilities, they’re tools. But the thing that actually draws us to YouTube, what we want to watch are the original storytellers, the creators themselves.

The idea, in my interpretation, is that Google’s smart software is there to enable humans to be creative. AI is just a tool like an ice pick. Sure, the ice pick can be driven into someone’s heart, but that’s an extreme example of misuse of a simple tool. Our approach is to keep that ice pick for the artist who is going to create an ice sculpture.

Please, read the rest of this Googley interview to get a sense of the other concepts Google’s ad system and its AI are delivering to advertisers and “creators.”

Here’s my view:

- Google wants to get creators into the YouTube maze. Google wants advertisers to use the now 30 year old Google Double Click ad system. Everyone just enter the labyrinth.

- The rats will learn that the maze is the equivalent of a fish in an aquarium. What else will the fish know? Not too much. The aquarium is life. It is reality.

- Google has a captive, self-sustaining ecosystem. Creators create; advertisers advertise because people or systems want the content.

Now let me ask a question, “How does this closed ecosystem make more money?” The answer, according to Googler Mohan, a former consultant like others in Google leadership, is to become more efficient. How does one become more efficient? The answer is to replace expensive, popular creators with cheaper AI driven content produced by Google’s AI system.

Therefore, the words say one thing: Creator humans are essential. However, the trajectory of Google’s behavior is that Google wants to maximize its revenues. Just the threat or fear of using AI to knock off a hot new human engineered “content object” will allow the Google to reduce what it pays to a human until Google’s AI can eliminate those pesky, protesting, complaining humans. The advertisers want eyeballs. That’s what Google will deliver. Where will the advertisers go? Craigslist, Nextdoor, X.com?

Net net: Money is more important to Google than human creators. I know I am a dinobaby and probably incorrect. That’s how I see the Google.

Stephen E Arnold, September 24, 2025

The Skill for the AI World As Pronounced by the Google

September 24, 2025

Written by an unteachable dinobaby. Live with it.

Worried about a job in the future: the next minute, day, decade? The secret of constant employment, big bucks, and even larger volumes of happiness has been revealed. “Google’s Top AI Scientist Says Learning How to Learn Will Be Next Generation’s Most Needed Skill” says:

the most important skill for the next generation will be “learning how to learn” to keep pace with change as Artificial Intelligence transforms education and the workplace.

Well, that’s the secret: Learn how to learn. Why? Surviving in the chaos of an outfit like Google means one has to learn. What should one learn? Well, the write up does not provide that bit of wisdom. I assume a Google search will provide the answer in a succinct AI-generated note, right?

The write up presents this chunk of wisdom from a person keen on getting lots of AI people aware of Google’s AI prowess:

The neuroscientist and former chess prodigy said artificial general intelligence—a futuristic vision of machines that are as broadly smart as humans or at least can do many things as well as people can—could arrive within a decade…. [He] Hassabis emphasized the need for “meta-skills,” such as understanding how to learn and optimizing one’s approach to new subjects, alongside traditional disciplines like math, science and humanities.

This means reading poetry, preferably Greek poetry. The Google super wizard’s father is “Greek Cypriot.” (Cyprus is home base for a number of interesting financial operations and the odd intelware outfit. Which part of Cyprus is which? Google Maps may or may not answer this question. Ask your Google Pixel smart phone to avoid an unpleasant mix up.)

The write up adds this courteous note:

[Greek Prime Minister Kyriakos] Mitsotakis rescheduled the Google Big Brain to “avoid conflicting with the European basketball championship semifinal between Greece and Turkey. Greece later lost the game 94-68.”

Will a lifelong learning skill help the Greek basketball team win against a formidable team like Turkey?

Sure, if Google says it, you know it is true just like eating rocks or gluing cheese on pizza. Learn now.

Stephen E Arnold, September 24, 2025

Graphite: Okay, to License Now

September 24, 2025

The US government uses specialized software to gather information related to persons of interest. The brand popular since NSO Group marketed itself into a pickle comes from the Israeli-founded spyware company Paragon Solutions. The US government isn’t a stranger to Paragon Solutions. In fact, El Pais reports in the article “Graphite, the Israeli Spyware Acquired By ICE” that the government renewed its contract with the specialized software company.

The deal was originally signed during Biden’s administration in September 2024, but it went against the then president’s executive order that prohibited US agencies from using spyware tools that “posed ‘significant counterintelligence and security risks’ or had been misused by foreign governments to suppress dissent.”

During the negotiations, AE Industrial Partners purchased Paragon and merged it with REDLattice, an intelligence contractor located in Virginia. Paragon is now a domestic partner with deep connections to former military and intelligence personnel. The suspension on ICE’s Homeland Security Investigations was quietly lifted on August 29 according to public contracting announcements.

The US government will use Paragon’s Graphite spyware:

“Graphite is one of the most powerful commercial spy tools available. Once installed, it can take complete control of the target’s phone and extract text messages, emails, and photos; infiltrate encrypted apps like Signal and WhatsApp; access cloud backups; and covertly activate microphones to turn smartphones into listening devices.”

The source suggests that although companies like Paragon insist their tools are intended to combat terrorism and organized crime, past use suggests otherwise. Earlier this year, Graphite was allegedly linked to info gathering in Italy targeting at least some journalists, a few migrant rights activists, and a couple of associates of the definitely worth watching Pope Francis. Paragon stepped away from the home of pizza following alleged “public outrage.”

The US government’s use of specialized software seems to be a major concern among Democrats and Republicans alike. What government agencies are licensing and using Graphite? Beyond Search has absolutely no idea.

Whitney Grace, September 24, 2025

Titanic AI Goes Round and Round: Are You Dizzy Yet?

September 23, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “Nvidia to Invest Up to $100 Billion in OpenAI, Linking Two Artificial Intelligence Titans.” The headline makes an important point. The words “big” and “huge” are not sufficiently monumental. Now we have “titans.” As you may know, a “titan” is a person of great power. I will leave out the Greek mythology. I do want to point out that “titans” were the kiddies produced by Uranus and Gaea. Titans were big dogs until Zeus and a few other Olympian gods forced them to live in what is now Newark, New Jersey.

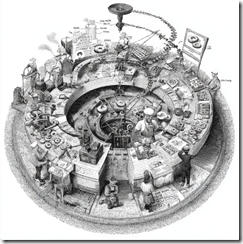

An AI-generated diagram of a simple circular deal. Regulators and IRS professionals enjoy challenges. What are those people doing to make the process work? Thanks, MidJourney.com. Good enough.

The write up from the outfit that is into trust explains how two “titans” are now intertwined. No, I won’t bring up the issue of incestuous behavior. Let’s stick to the “real” news story:

Nvidia will invest up to $100 billion in OpenAI and supply it with data center chips… Nvidia will start investing in OpenAI for non-voting shares once the deal is finalized, then OpenAI can use the cash to buy Nvidia’s chips.

I am not a finance, tax, or money wizard. On the surface, it seems to me that I loan a person some money and then that person gives me the money back in exchange for products and services. I may have this wrong, but I thought a similar arrangement landed one of the once-famous enterprise search companies in a world of hurt and a member of the firm’s leadership in prison.

Reuters includes this statement:

Analysts said the deal was positive for Nvidia but also voiced concerns about whether some of Nvidia’s investment dollars might be coming back to it in the form of chip purchases. "On the one hand this helps OpenAI deliver on what are some very aspirational goals for compute infrastructure, and helps Nvidia ensure that that stuff gets built. On the other hand the ‘circular’ concerns have been raised in the past, and this will fuel them further," said Bernstein analyst Stacy Rasgon.

“Circular” is an interesting word. Some of the financial transactions my team and I examined during our Telegram (the messaging outfit) research used similar methods. One of the organizations apparently aware of “circular” transactions was Huione Guarantee. No big deal, but the company has been in legal hot water for some of its circular functions. Will OpenAI and Nvidia experience similar problems? I don’t know, but the circular thing means that money goes round and round. In circular transactions, at each touch point magical number things can occur. Money deals are rarely hallucinatory like AI outputs and semiconductor marketing.

What’s this mean to companies eager to compete in smart software and Fancy Dan chips? In my opinion, I hear my inner voice saying, “You may be behind a great big circular curve. Better luck next time.”

Stephen E Arnold, September 23, 2025