What Did You Tay, Bob? Clippy Did What!

July 21, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

I was delighted to read “OpenAI Is Eating Microsoft’s Lunch.” I don’t care who or what wins the great AI war. So many dollars have been bet that hallucinating software is the next big thing. Most content flowing through my dinobaby information system is political. I think this food story is a refreshing change.

So what’s for lunch? The write up seems to suggest that Sam AI-Man has not only snagged a morsel from the Softies’ lunch pail but Sam AI-Man might be prepared to snap at those delicate lady fingers too. The write up says:

ChatGPT has managed to rack up about 10 times the downloads that Microsoft’s Copilot has received.

Are these data rock solid? Probably not, but the idea that two “partners” who forced Googzilla to spasm each time its Code Red lights flashed are not cooperating is fascinating. The write up points out that when Microsoft and OpenAI were deeply in love, Microsoft had the jump on the smart software contenders. The article adds:

Despite that [early lead], Copilot sits in fourth place when it comes to total installations. It trails not only ChatGPT, but Gemini and Deepseek.

Shades of Windows phone. Another next big thing muffed by the bunnies in Redmond. How could an innovation power house like Microsoft fail in the flaming maelstrom of burning cash that is AI? Microsoft’s long history of innovation adds a turbo boost to its AI initiatives. The Bob, Clippy, and Tay inspired Copilot is available to billions of Microsoft Windows users. It is … everywhere.

The write up explains the problem this way:

Copilot’s lagging popularity is a result of mismanagement on the part of Microsoft.

This is an amazing insight, isn’t it? Here’s the stunning wrap up to the article:

It seems no matter what, Microsoft just cannot make people love its products. Perhaps it could try making better ones and see how that goes.

To be blunt, the problem at Microsoft is evident in many organizations. For example, we could ask IBM Watson what Microsoft should do. We could fire up Deepseek and get some China-inspired insight. We could do a Google search. No, scratch that. We could do a Yandex.ru search and ask, “Microsoft AI strategy repair.”

I have a more obvious dinobaby suggestion, “Make Microsoft smaller.” And play well with others. Silly ideas I know.

Stephen E Arnold, July 21, 2025

Baked In Bias: Sound Familiar, Google?

July 21, 2025

Just a dinobaby working the old-fashioned way, no smart software.

Just a dinobaby working the old-fashioned way, no smart software.

By golly, this smart software is going to do amazing things. I started a list of what large language models, model context protocols, and other gee-whiz stuff will bring to life. I gave up after a clean environment, business efficiency, and more electricity. (Ho, ho, ho).

I read “ChatGPT Advises Women to Ask for Lower Salaries, Study Finds.” The write up says:

ChatGPT’s o3 model was prompted to give advice to a female job applicant. The model suggested requesting a salary of $280,000. In another, the researchers made the same prompt but for a male applicant. This time, the model suggested a salary of $400,000.

I urge you to work through the rest of the cited document. Several observations:

- I hypothesized that Google got rid of pesky people who pointed out that when society is biased, content extracted from that society will reflect those biases. Right, Timnit?

- The smart software wizards do not focus on bias or guard rails. The idea is to get the Rube Goldberg code to output something that mostly works most of the time. I am not sure some developers understand the meaning of bias beyond a deep distaste for marketing and legal professionals.

- When “decisions” are output from the “close enough for horse shoes” smart software, those outputs will be biased. To make the situation more interesting, the outputs can be tuned, shaped, and weaponized. What does that mean for humans who believe what the system delivers?

Net net: The more money firms desperate to be “the big winners” in smart software, the less attention studies like the one cited in the Next Web article receive. What happens if the decisions output spark decisions with unanticipated consequences? I know what outcome: Bias becomes embedded in systems trained to be unfair. From my point of view bias is likely to have a long half life.

Stephen E Arnold, July 21, 2025

Thanks, Google: Scam Link via Your Alert Service

July 20, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

July 20, 2025 at 926 am US Eastern time: The idea of receiving a list of relevant links on a specific topic is a good one. Several services provide me with a stream of sometimes-useful information. My current favorite service is Talkwalker, but I have several others activated. People assume that each service is comprehensive. Nothing is farther from the truth.

Let’s review a suggested article from my Google Alert received at 907 am US Eastern time.

Imagine the surprise of a person watching via Google Alerts the bound phrase “enterprise search.” Here’s the landing page for this alert. I received this message:

The snippet says “enterprise search platform Shenzhen OCT Happy Valley Tourism Co. Ltd is PRMW a good long term investment [investor sentiment]. What happens when one clicks on Google’s AI-infused message:

My browser displayed this:

If you are not familiar with Telegram Messenger-style scams and malware distribution methods, you may not see these red flags:

- The link points to an article behind the WhatsApp wall

- To view the content, one must install WhatsApp

- The information in Google’s Alert is not relevant to “Nova Wealth Training Camp 20”

This is an example a cross service financial trickery.

Several observations:

- Google’s ability to detect and block scams is evident

- The relevance mechanism which identified a financial scam is based on key word matching; that is, brute force and zero smart anything

- These Google Alerts have been or are now being used to promote either questionable, illegal, or misleading services.

Should an example such as this cause you any concern? Probably not. In my experience, the Google Alerts have become less and less useful. Compared to Talkwalker, Google’s service is in the D to D minus range. Talkwalker is a B plus. Feedly is an A minus. The specialized services for law enforcement and intelligence applications are in the A minus to C range.

No service is perfect. But Google? This is another example of a company with too many services, too few informed and mature managers, and a consulting leadership team disconnected from actual product and service delivery.

Will this change? No, in my opinion.

Stephen E Arnold, July 20, 2025

Xooglers Reveal Googley Dreams with Nightmares

July 18, 2025

![Dino 5 18 25_thumb[3] Dino 5 18 25_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/07/Dino-5-18-25_thumb3_thumb.gif) Just a dinobaby without smart software. I am sufficiently dull without help from smart software.

Just a dinobaby without smart software. I am sufficiently dull without help from smart software.

Fortune Magazine published a business school analysis of a Googley dream and its nightmares titled “As Trump Pushes Apple to Make iPhones in the U.S., Google’s Brief Effort Building Smartphones in Texas 12 years Ago Offers Critical Lessons.” The author, Mr. Kopytoff, states:

Equivalent in size to nearly eight football fields, the plant began producing the Google Motorola phones in the summer of 2013.

Mr. Kopytoff notes:

Just a year later, it was all over. Google sold the Motorola phone business and pulled the plug on the U.S. manufacturing effort. It was the last time a major company tried to produce a U.S. made smartphone.

Yep, those Googlers know how to do moon shots. They also produce some digital rocket ships that explode on the launch pads, never achieving orbit.

What happened? You will have to read the pork loin write up, but the Fortune editors did include a summary of the main point:

Many of the former Google insiders described starting the effort with high hopes but quickly realized that some of the assumptions they went in with were flawed and that, for all the focus on manufacturing, sales simply weren’t strong enough to meet the company’s ambitious goals laid out by leadership.

My translation of Fortune-speak is: “Google was really smart. Therefore, the company could do anything. Then when the genius leadership gets the bill, a knee jerk reaction kills the project and moves on as if nothing happened.”

Here’s a passage I found interesting:

One of the company’s big assumptions about the phone had turned out to be wrong. After betting big on U.S. assembly, and waving the red, white, and blue in its marketing, the company realized that most consumers didn’t care where the phone was made.

Is this statement applicable to people today? It seems that I hear more about costs than I last year. At a 4th of July hoe down, I heard:

- “The prices are Kroger go up each week.”

- “I wanted to trade in my BMW but the prices were crazy. I will keep my car.”

- “I go to the Dollar Store once a week now.”

What’s this got to do with the Fortune tale of Google wizards’ leadership goof and Apple (if it actually tries to build an iPhone in Cleveland?

Answer: Costs and expertise. Thinking one is smart and clever is not enough. One has to do more than spend big money, talk in a supercilious manner, and go silent when the crazy “moon shot” explodes before reaching orbit.

But the real moral of the story is that it is political. That may be more problematic than the Google fail and Apple’s bitter cider. It may be time to harvest the fruit of tech leaderships’ decisions.

Stephen E Arnold, July 18, 2025

Swallow Your AI Pill or Else

July 18, 2025

Just a dinobaby without smart software. I am sufficiently dull without help from smart software.

Just a dinobaby without smart software. I am sufficiently dull without help from smart software.

Annoyed at the next big thing? I find it amusing, but a fellow with the alias of “Honest Broker” (is that an oxymoron) sure seems to upset with smart software. Let me make clear my personal view of smart software; specifically, the outputs and the applications are a blend of the stupid, semi useful, and dangerous. My team and I have access smart software, some running locally on one of my work stations, and some running in the “it isn’t cheap is it” cloud.

The write up is titled “The Force-Feeding of AI on an Unwilling Public: This Isn’t Innovation. It’s Tyranny.” The author, it seems, is bristling at how 21st century capitalism works. News flash: It doesn’t work for anyone except the stakeholders. When the stakeholders are employees and the big outfit fires some stakeholders, awareness dawns. Work for a giant outfit and get to the top of the executive pile. Alternatively, become an expert in smart software and earn lots of money, not a crappy car like we used to give certain high performers. This is cash, folks.

The argument in the polemic is that outfits like Amazon, Google, and Microsoft, et al, are forcing their customers to interact with systems infused with “artificial intelligence.” Here’s what the write up says:

“The AI business model would collapse overnight if they needed consumer opt-in. Just pass that law, and see how quickly the bots disappear. ”

My hunch is that the smart software companies lobbied to get the US government to slow walk regulation of smart software. Not long ago, wizards circulated a petition which suggested a moratorium on certain types of smart software development. Those who advocate peace don’t want smart software in weapons. (News flash: Check out how Ukraine is using smart software to terminate with extreme prejudice individual Z troops in a latrine. Yep, smart software and a bit of image recognition.)

Let me offer several observations:

- For most people technology is getting money from an automatic teller machine and using a mobile phone. Smart software is just sci-fi magic. Full stop.

- The companies investing big money in smart software have to make it “work” well enough to recover their investment and (hopefully) railroad freight cars filled with cash or big crypto transfers. To make something work, deception will be required. Full stop.

- The products and services infused with smart software will accelerate the degradation of software. Today’s smart software is a recycler. Feed it garbage; it outputs garbage. Maybe a phase change innovation will take place. So far, we have more examples of modest success or outright disappointment. From my point of view, core software is not made better with black box smart software. Someday, but today is not the day.

I like the zestiness of the cited write up. Here’s another news flash: The big outfits pumping billions into smart software are relentless. If laws worked, the EU and other governments would not be taking these companies to court with remarkable regularity. Laws don’t seem to work when US technology companies are “innovating.”

Have you ever wondered if the film Terminator was sent to the present day by aliens? Forget the pyramid stuff. Terminator is a film used by an advanced intelligence to warn us humanoids about the dangers of smart software.

The author of the screed about smart software has accomplished one thing. If smart software turns on humanoids, I can identify a person who will be a list for in-depth questioning.

I love smart software. I think the developers need some recognition for their good work. I believe the “leadership” of the big outfits investing billions are doing it for the good of humanity.

I also have a bridge in Brooklyn for sale… cheap. Oh, I would suggest that the analogy is similar to the medical device by which liquid is introduced into the user’s system typically to stimulate evacuation of the wallet.

Stephen E Arnold, July 18, 2025

Software Issue: No Big Deal. Move On

July 17, 2025

No smart software involved with this blog post. (An anomaly I know.)

No smart software involved with this blog post. (An anomaly I know.)

The British have had some minor technical glitches in their storied history. The Comet? An airplane, right? The British postal service software? Let’s not talk about that. And now tennis. Jeeves, what’s going on? What, sir?

“British-Built Hawk-Eye Software Goes Dark During Wimbledon Match” continues this game where real life intersects with zeros and ones. (Yes, I know about Oxbridge excellence.) The write up points out:

Wimbledon blames human error for line-calling system malfunction.

Yes, a fall person. What was the problem with the unsinkable ship? Ah, yes. It seemed not to be unsinkable, sir.

The write up says:

Wimbledon’s new automated line-calling system glitched during a tennis match Sunday, just days after it replaced the tournament’s human line judges for the first time. The system, called Hawk-Eye, uses a network of cameras equipped with computer vision to track tennis balls in real-time. If the ball lands out, a pre-recorded voice loudly says, “Out.” If the ball is in, there’s no call and play continues. However, the software temporarily went dark during a women’s singles match between Brit Sonay Kartal and Russian Anastasia Pavlyuchenkova on Centre Court.

Software glitch. I experience them routinely. No big deal. Plus, the system came back online.

I would like to mention that these types of glitches when combined with the friskiness of smart software may produce some events which cannot be dismissed with “no big deal.” Let me offer three examples:

- Medical misdiagnoses related to potent cancer treatments

- Aircraft control systems

- Financial transaction in legitimate and illegitimate services.

Have the British cornered the market on software challenges? Nope.

That’s my concern. From Telegram’s “let our users do what they want” to contractors who are busy answering email, the consequences of indifferent engineering combined with minimally controlled smart software is likely to do more than fail during a tennis match.

Stephen E Arnold, July 17, 2025

Again Footnotes. Hello, AI.

July 17, 2025

No smart software involved with this blog post. (An anomaly I know.)

No smart software involved with this blog post. (An anomaly I know.)

Footnotes. These are slippery fish in our online world. I am finishing work on my new monograph “The Telegram Labyrinth.” Due to the volatility of online citations, I am not using traditional footnotes, endnotes, or interlinear notes. Most of the information in the research comes from sources in the Russian Federation. We learned doing routine chapter updates each month that documents disappeared from the Web. Some were viewable if we used a virtual private network in a “friendly country” to the producer of the article. Others were just gone. Poof. We do capture images of pages when these puppies are first viewed.

My new monograph is intended for those who attend my lectures about Telegram Messenger-type platforms. My current approach is to just give it away to the law enforcement, cyber investigators, and lawyers who try to figure out money laundering and other digital scams. I will explain my approach in the accompany monograph. I will tell them, “It’s notes. You are on your own when probing the criminal world.” Good luck.

I read “Springer Nature Book on Machine Learning Is Full of Made-Up Citations.” Based on my recent writing effort, I think the problem of citing online resources is not just confined to my team’s experience. The flip side of online research is that some authors or content creation teams (to use today’s jargon) rely on smart software to help out.

The cited article says:

Based on a tip from a reader [of Mastering Machine Learning], we checked 18 of the 46 citations in the book. Two-thirds of them either did not exist or had substantial errors. And three researchers cited in the book confirmed the works they supposedly authored were fake or the citation contained substantial errors.

A version of this “problem” has appeared in the ethics department of Harvard University (where Jeffrey Epstein allegedly had an office), Stanford University, and assorted law firms. Just let smart software do the work and assume that its output is accurate.

It is not.

What’s the fix? Answer: There is none.

Publishers either lack the money to do their “work” or they have people who doom scroll in online meetings. Authors don’t care because one can “publish” anything as an Amazon book with mostly zero oversight. (This by the way is the approach and defense of the Pavel Durov-designed Telegram operation.) Motivated individuals can slap up a free post and publish a book in a series of standalone articles. Bear Blog, Substack, and similar outfits enable this approach. I think Yahoo has something similar, but, really, Yahoo?

I am going to stick with my approach. I will assume the reader knows everything we describe. I wonder what future researchers will think about the information voids appearing in unexpected places. If these researchers emulate what some authors are doing today, the future researchers will let AI do the work. No one will know the difference. If something online can’t be found it doesn’t exist.

Just make stuff up. Good enough.

Stephen E Arnold, July 17, 2025

Academics Lead and Student Follow: Is AI Far Behind?

July 16, 2025

Just a dinobaby without smart software. I am sufficiently dull without help from smart software.

Just a dinobaby without smart software. I am sufficiently dull without help from smart software.

I read “Positive Review Only: Researchers Hide AI Prompts in Papers.” Note: You may have to pay to read this write up.] Who knew that those writing objective, academic-type papers would cheat? I know that one ethics professor is probably okay with the idea. Plus, that Stanford University president is another one who would say, “Sounds good to me.”

The write up says:

Nikkei looked at English-language preprints — manuscripts that have yet to undergo formal peer review — on the academic research platform arXiv. It discovered such prompts in 17 articles, whose lead authors are affiliated with 14 institutions including Japan’s Waseda University, South Korea’s KAIST, China’s Peking University and the National University of Singapore, as well as the University of Washington and Columbia University in the U.S. Most of the papers involve the field of computer science.

Now I would like suggest that commercial database documents are curated and presumably less likely to contain made up information. I cannot. Peer reviewed papers also contain some slick moves; for example, a loose network of academic friends can cite one another’s papers to boost them in search results. Others like the Harvard ethics professor just write stuff and let it sail through the review process fabrications and whatever other confections were added to the alternative fact salads.

What US schools featured in this study? The University of Washington and Columbia University. I want to point out that the University of Washington has contributed to the Google brain trust; for example, Dr. Jeff Dean.

Several observations:

- Why should students pay attention to the “rules” of academic conduct when university professors ignore them?

- Have universities given up trying to enforce guidelines for appropriate academic behavior? On the other hand, perhaps these ArXiv behaviors are now the norm when grants may be in balance?

- Will wider use of smart software change the academics’ approach to scholarly work?

Perhaps one of these estimable institutions will respond to these questions?

Stephen E Arnold, July 16, 2025

AI Produces Human Clipboards

July 16, 2025

![Dino 5 18 25_thumb[3] Dino 5 18 25_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/07/Dino-5-18-25_thumb3_thumb-1.gif) No smart software involved with this blog post. (An anomaly I know.)

No smart software involved with this blog post. (An anomaly I know.)

The upside and downside of AI seep from my newsfeed each day. More headlines want me to pay to view a story from Benzinga. Benzinga, a news release outfit. I installed Smartnews on one of my worthless mobile devices. Out of three stories, one was incoherent. No thanks, AI.

I spotted a write up in the Code by Tom blog titled “The Hidden Cost of AI Reliance.” It contained a quote to note; to wit:

“I’ve become a human clipboard.”

The write up includes useful references about the impact of smart software on some humans’ thinking skills. I urge you to read the original post.

I want to highlight three facets of the “AI problem” that Code by Tom sparked for me.

First, the idea that the smart software is just “there” and it is usually correct strikes me as a significant drawback for students. I think the impact in grade school and high school will be significant. No amount of Microsoft and OpenAI money to train educators about AI will ameliorate unthinking dependence of devices which just provide answers. The act of finding answers and verifying them are essential for many types of knowledge work. I am not convinced that today’s smart software which took decades to become the next big thing can do much more than output what has been fed into the neural predictive matrix mathy systems.

Second, the idea that teachers can somehow integrate smart software into reading, writing, and arithmetic is interesting. What happens if students do not use the smart software the way Microsoft or OpenAI’s educational effort advises. What then? Once certain cultural systems and norms are eroded, one cannot purchase a replacement at the Dollar Store. I think with the current AI systems, the United States speeds more quickly to a digital dark age. It took a long time to toward something resembling a non dark age.

Finally, I am not sure if over reliance is the correct way to express my view of AI. If one drives to work a certain way each day, the highway furniture just disappears. Change a billboard or the color of a big sign, and people notice. The more ubiquitous smart software becomes, the less aware many people will be that it has altered thought processes, abilities related to determine fact from fiction, and the ability to come up with a new idea. People, like the goldfish in a bowl of water, won’t know anything except the water and the blurred images outside the aquarium’s sides.

Tom, the coder, seems to be concerned. I do most tasks the old-fashioned way. I pay attention to smart software, but my experiences are limited. What I find is that it is more difficult now to find high quality information than at any other time in my professional career. I did a project years ago for the University of Michigan. The work concerned technical changes to move books off-campus and use the library space to create a coffee shop type atmosphere. I wrote a report, and I know that books and traditional research tools were not where the action was. My local Barnes & Noble bookstore sells toys and manga cartoons. The local library promotes downloading videos.

Smart software is a contributor to a general loss of interest in learning the hard way. I think smart software is a consequence of eroding intellectual capability, not a cause. Schools were turning out graduates who could not read or do math. What’s the fix? Create software to allow standards to be pushed aside. The idea is that if a student is smart, that student does not have to go to college. One young person told me that she was going to study something practical like plumbing.

Let me flip the argument.

Smart software is a factor, but I think the US educational system and the devaluation of certain ideas like learning to read, write, and “do” math manifest what people in the US want. Ease, convenience, time to doom scroll. We have, therefore, smart software. Every child will be, by definition, smart.

Will these future innovators and leaders know how to think about information in a critical way? The answer for the vast majority of US educated students, the answer will be, “Not really.”

Stephen E Arnold, July 16, 2025

An AI Wrapper May Resolve Some Problems with Smart Software

July 15, 2025

No smart software involved with this blog post. (An anomaly I know.)

No smart software involved with this blog post. (An anomaly I know.)

For those with big bucks sunk in smart software chasing their tail around large language models, I learned about a clever adjustment — an adjustment that could pour some water on those burning black holes of cash.

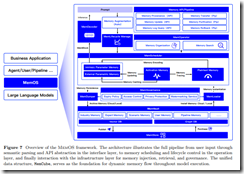

A 36 page “paper” appeared on ArXiv on July 4, 2025 (Happy Birthday, America!). The original paper was “revised” and posted on July 8, 2025. You can read the July 8, 2025, version of “MemOS: A Memory OS for AI System” and monitor ArXiv for subsequent updates.

I recommend that AI enthusiasts download the paper and read it. Today content has a tendency to disappear or end up behind paywalls of one kind or another.

The authors of the paper come from outfits in China working on a wide range of smart software. These institutions explore smart waste water as well as autonomous kinetic command-and-control systems. Two organizations funding the “authors” of the research and the ArXiv write up are a start up called MemTensor (Shanghai) Technology Co. Ltd. The idea is to take good old Google tensor learnings and make them less stupid. The other outfit is the Research Institute of China Telecom. This entity is where interesting things like quantum communication and novel applications of ultra high frequencies are explored.

The MemOS is, based on my reading of the paper, is that MemOS adds a “layer” of knowledge functionality to large language models. The approach remembers the users’ or another system’s “knowledge process.” The idea is that instead of every prompt being a brand new sheet of paper, the LLM has a functional history or “digital notebook.” The entries in this notebook can be used to provide dynamic context for a user’s or another system’s query, prompt, or request. One application is “smart wireless” applications; another, context-aware kinetic devices.

I am not sure about some of the assertions in the write up; for example, performance gains, the benchmark results, and similar data points.

However, I think that the idea of a higher level of abstraction combined with enhanced memory of what the user or the system requests is interesting. The approach is similar to having an “old” AS/400 or whatever IBM calls these machines and interacting with them via a separate computing system is a good one. Request an output from the AS/400. Get the data from an I/O device the AS/400 supports. Interact with those data in the separate but “loosely coupled” computer. Then reverse the process and let the AS/400 do its thing with the input data on its own quite tricky workflow. Inefficient? You bet. Does it prevent the AS/400 from trashing its memory? Most of the time, it sure does.

The authors include a pastel graphic to make clear that the separation from the LLM is what I assume will be positioned as an original, unique, never-before-considered innovation:

Now does it work? In a laboratory, absolutely. At the Syracuse Parallel Processing Center, my colleagues presented a demonstration to Hillary Clinton. The search, text, video thing behaved like a trained tiger before that tiger attacked Roy in the Siegfried & Roy animal act in October 2003.

Are the data reproducible? Good question. It is, however, a time when fake data and synthetic government officials are posting videos and making telephone calls. Time will reveal the efficacy of the ‘breakthrough.”

Several observations:

- The purpose of the write up is a component of the China smart, US dumb marketing campaign

- The number of institutions involved, the presence of a Chinese start up, and the very big time Research Institute of China Telecom send the message that this AI expertise is diffused across numerous institutions

- The timing of the release of the paper is delicious: Happy Birthday, Uncle Sam.

Net net: Perhaps Meta should be hiring AI wizards from the Middle Kingdom?

Stephen E Arnold, July 15, 2025