Telegram Notes: AI Slop Analyzes TON Strategy and Demonstrates Shortcomings

January 1, 2026

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/12/green-dino_thumb_thumb3_thumb-2.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


If you want to get a glimpse of AI financial analysis, navigate to “My Take on TON Strategy’s NASDAQ Listing Warning.” The video is ascribed to Corporate Decoder. But there is no “my” in the old-fashioned humanoid sense. Click on the “more” link in the YouTube video, and you will see this statement:

The highlight points to the phrase, “All videos are AI generated…”

The six-minute video does not point out some of the interesting facets of the Telegram / TON Foundation-inspired “beep beep zoom” approach to getting a “rules observing listing” on the US NASDAQ. Yep, beep beep. A Road Runner-inspired public listing with an alleged decade of history. I find this fascinating.

The video calls attention to superficial aspects of the beep beep Road Runner company spun up in a matter of weeks in late 2025. The company is TON Strategy, captained by Manny Stotz, formerly the president or CEO or temporary top dog at the TON Foundation. That’s the outfit Pavel Durov thought would be a great way to convert GRAMcoin into TONcoin, gift the Foundation with the TON blockchain technology, and allow the Foundation to handle the marketing. (Telegram itself does no marketing except to leverage the image of Pavel Durov, the self-proclaimed GOAT of Russian technology culture. In addition to being the GOAT, Mr. Durov is reporting to his nanny in the French judiciary as he awaits a criminal trial on nine or ten serious offenses. But who is counting?)

What does the AI video do other than demonstrate that YouTube does not make its AI labels easy to spot?

The video does not provide any insight into some questions my team and I had about TON Strategy Company, its executive chairperson Manuel “Manny” Stotz, or the $400 million plus raised to get the outfit afloat. The video does not call attention to the presence of some big, legitimate brands in the world of crypto like Blockchain.com.

The video tries to explain that the firm is trying to become an asset outfit like Michael Saylor’s Strategy Company. But the key difference is not pointed out; that is, Mr. Saylor bet on Bitcoin. Mr. Stotz is all in on TONcoin. He believes in TONcoin. He wanted to move fast. He is going to have to work hard to overcome what might be some modest potholes as his crypto vehicle chugs along the Information Highway.

The first crack in the asphalt is the TONcoin itself. Mr. Stotz “bought” TONcoins at about a value point of $5.00. That was several weeks ago. Those same TONcoins could be had for $1.62 at about noon on December 31, 2025. Source, you ask? Okay, here’s the service I consulted: https://www.tradingview.com/symbols/TONUSD/

The second little dent in the road is the price of the TON Strategy Company’s NASDAQ stock. At about noon on December 31, 2025, it was going for $2.00 a share. What did the TONX stock cost in September 2025? According to Google Finance it was in the $21.00 range. Is this a problem? Probably not for Mr. Stotz because his Kingsway Capital is separate from TON Strategy Company. Plus, Kingsway Capital is separate from its “owner” Koenigsweg Holdings. Will someone care if TONX gets delisted? Yep, but I am not prepared to talk about the outfits who have an interest in Koenigsweg and Kingsway. My hunch is that a couple of these outfits may want to take a ride with Manny to talk, to share ideas, and to make sure everyone is on the same page. In Russian, Google Translate says this sequence of words might pop up during the ride: ?? ?????????, ??????
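For readers who want the size of those two potholes in percentage terms, here is a rough worked calculation. It uses only the approximate prices quoted above (about $5.00 and $1.62 for TONcoin; about $21.00 and $2.00 for TONX). Because the September TONX figure is only “in the $21.00 range,” the second result is approximate.

```latex
\begin{aligned}
\text{TONcoin decline} &= \frac{5.00 - 1.62}{5.00} = \frac{3.38}{5.00} \approx 67.6\% \\
\text{TONX decline} &= \frac{21.00 - 2.00}{21.00} = \frac{19.00}{21.00} \approx 90.5\%
\end{aligned}
```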

What are some questions the AI system did not address? Here are a few:

- How does the current market value of TONcoin affect the information in the SEC document the AI analyzed?

- Where do these companies fit into the TON Strategy outfit? Why the beep beep approach when two established outfits like Kingsway Capital and Koenigsweg Holdings could have handled the deal?

- What connections exist between the TON Foundation and Mr. Stotz? What connection does the deal have to the TON Foundation’s ardent supporter, DWF Labs (a crypto market maker of repute)?

- Who is president of the TON Strategy Company? What is the address of the company for the SEC document? Where does Veronika Kapustina reside in the United States? Why do Rory Cutaia, Veronika Kapustina, and TON Strategy Company share a residential address in Las Vegas?

- What role does Sarah Olsen, a former Rockefeller financial analyst, play in the company from her home, possibly in Miami, Florida?

- What is the Plan B if the VERB-to-TON Strategy Company continues to suffer from the down market in crypto? What will outfits like DWF Labs do? What will the TON Foundation do? What will Pavel Durov, the GOAT, do besides wait for the big, sluggish wheels of the French judicial system to grind forward?

The AI did not probe the way an overachieving MBA at an investment firm would to keep her job. Nope. Hit the pause switch and use whatever the AI system generates. Good enough, right?

What does this AI generated video reveal about smart software playing the role of a human analyst? Our view is:

- Quick and sloppy content

- Failure to chase obvious managerial and financial loose ends

- Ignoring obvious questions about how a “sophisticated” pivot can garner two notes from Mother SEC in a couple of weeks.

Net net: AI is not ready for some types of intellectual work.

Want more Telegram Notes’ content? Watch for more information about our Telegram-related information service in the new year.

Stephen E Arnold, January 1, 2026

Software in 2026: Whoa, Nellie! You Lame, Girl?

December 31, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


When there were Baby Bells, I got an email from a person who had worked with me on a book. That person told me that one of the Baby Bells wanted to figure out how to do their Yellow Pages as an online service. The problem, as I recall my contact’s saying, was “not to screw up the ad sales in the dead tree version of the Yellow Pages.” I won’t go into the details about the complexities of this project. However, my knowledge of how the pre- and post-Judge Greene telephone world was supposed to work and some on-site experience creating software for what did business as Bell Communications Research gave me some basic information about the land of Bell Heads. (If you don’t get the reference, that’s okay. It’s an insider metaphor but less memorable than Achilles’ heel.)

A young whiz kid from a big-name technology school has a real-life learning experience. Programming the IBM MVS TSO setup is different from vibe coding an app from a college dorm room. Thanks, Qwen, good enough.

The point of my reference to a Baby Bell was that a newly minted stand-alone telecommunications company ran on what was a pretty standard lineup of IBM and B4s (designed by Bell Labs, the precursor to Bellcore) plus some DECs, Wangs, and other machines. The big stuff ran on the IBM machines, with proprietary, AT&T-specific applications on the B4s. If you are following along, you might have concluded that slapping a Yellow Pages Web application into the Baby Bell system was easy to talk about but difficult to do. We did the job using my old trick. I am a wrapper guy. Figure out what’s needed to run a Yellow Pages site online, what data are needed, where to get them, and where to put them. Then build a nice little Web setup and pass data back and forth via what I call wrapper scripts and code. The benefit of the approach was that I did not have to screw around with the software used to make a Baby Bell actually work. When the Web site went down, no meetings were needed with the Regional Manager who had many eyeballs trained on my small team. Nope, we just fixed the Web site and kept on doing Yellow Pages things. The solution worked. The print sales people could use the Web site to place orders or allow the customer to place orders. Open the valve to the IBM and B4s, push in data just the way these systems wanted it, and close the valve. Hooray.
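The wrapper idea can be sketched in a few lines of Python. This is a hypothetical illustration under stated assumptions, not the original Yellow Pages code: the `WebOrder` fields, the fixed-width `LEGACY_LAYOUT`, and the `send` callback are invented stand-ins for whatever the IBM and B4 systems actually expected. What it tries to show is the shape of the approach: the Web side never touches the legacy software; it formats every record first, refuses anything that does not fit, and only then opens the valve.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class WebOrder:
    """A Yellow Pages order captured by the Web front end (hypothetical fields)."""
    customer_id: str
    heading: str  # directory category, e.g. "Plumbers"
    ad_text: str


# Hypothetical fixed-width layout the legacy side is assumed to expect: (field, width).
LEGACY_LAYOUT = [("customer_id", 10), ("heading", 20), ("ad_text", 40)]


def to_legacy_record(order: WebOrder) -> str:
    """Translate one Web order into one fixed-width, upper-case ASCII record.

    Anything that does not fit is rejected rather than silently truncated.
    """
    parts = []
    for field, width in LEGACY_LAYOUT:
        value = getattr(order, field).upper()
        if not value.isascii():
            raise ValueError(f"{field} contains non-ASCII characters")
        if len(value) > width:
            raise ValueError(f"{field} exceeds {width} characters")
        parts.append(value.ljust(width))  # pad with spaces to the fixed width
    return "".join(parts)


def push_batch(orders: Iterable[WebOrder], send: Callable[[str], None]) -> int:
    """Open the valve, push records in the legacy format, close the valve.

    Every order is formatted first, so one bad record stops the whole batch
    before anything reaches the legacy system.
    """
    records = [to_legacy_record(order) for order in orders]
    for record in records:
        send(record)
    return len(records)
```

Validating the whole batch before sending anything is a design choice in this sketch: a single bad character is caught on the Web side instead of inside the system that keeps the phone company running.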

Why didn’t my team just code up the Web stuff and run it on one of those IBM MVS TSO gizmos? The answer appears, quite surprisingly, in a blog post published December 24, 2025. I never wrote about the “why” of my approach. I assumed everyone with some Young Pioneer T-shirts knew the answer. Guess not. “Nobody Knows How Large Software Products Work” provides the information that I believed every 23-year-old computer whiz kid knew.

The write up says:

Software is hard. Large software products are prohibitively complicated.

I know that the folks at Google now understand why I made cautious observations about the complexity of building interlocking systems without the type of management controls that existed at the pre-breakup AT&T. Google was very proud of its indexing, its 150-plus signal scores for Web sites, and yada yada. I just said, “Those new initiatives may be difficult to manage.” No one cared. I was an old person and a rental. Who really cares about a dinobaby living in rural Kentucky? Google is the new AT&T, but it lacks the old AT&T’s discipline.

Back to the write up. The cited article says:

Why can’t you just document the interactions once when you’re building each new feature? I think this could work in theory, with a lot of effort and top-down support, but in practice it’s just really hard….The core problem is that the system is rapidly changing as you try to document it.

This is an accurate statement. AT&T’s technical regional managers demanded commented code. Were the comments helpful? Sometimes. The reality is that one learns about the cute little workarounds required for software that can spit out the PIX (plant information exchange data) for a specific range of dialing codes. Google does some fancy things with ads. AT&T in the pre-Judge Greene era did some fancy things for the classified and unclassified telephone systems for every US government entity, commercial enterprises, and individual phones and devices for the US and international “partners.”

What does this mean? In simple terms, one does not dive into a B4 running the proprietary Yellow Page data management system and start trying to read and write in real time from a dial up modem in some long lost corner of a US state with a couple of mega cities, some military bases, and the national laboratory.

One uses wrappers. Period. Screw up with a single character and bad things happen. One does not try to reconstruct what the original programming team actually did to make the PIX system “work.”

The write up says something that few realize in this era of vibe coding and AI output from some wonderful system like Claude:

It’s easier to write software than to explain it.

Yep, this is actual factual. The write up states:

Large software systems are very poorly understood, even by the people most in a position to understand them. Even really basic questions about what the software does often require research to answer. And once you do have a solid answer, it may not be solid for long – each change to a codebase can introduce nuances and exceptions, so you’ve often got to go research the same question multiple times. Because of all this, the ability to accurately answer questions about large software systems is extremely valuable.

Several observations are warranted:

- One gets a “feel” for how certain large, complex systems work. Before retiring, I had numerous interactions with young wizards. Most were job hoppers or little entrepreneurs eager to poke their noses out of the cocoon of a regular job. I am not sure these people can develop a “feel” for a large complex of code like the one the old AT&T had assembled. These folks know their own code, I assume. But the code running on a machine lost in the mists of time? Probably not. I am not sure AI will be much help either.

- The people running some of the companies creating fancy new systems are even more divorced from the reality of making something work and how to keep it going. Hence, the problems with computer systems at airlines, hospitals, and — I hate to say it — government agencies. These problems will only increase, and I don’t see an easy fix. One sure can’t rely on ChatGPT, Gemini, or Grok.

- The push to make AI your coding partner is sort of okay. But the old-fashioned way involved a young person like myself working side by side with expert DEC people or IBM professionals, not AI. What one learns is not how to do something but how not to do something. Anyone, including a software robot, can look up an instruction in a manual. But, so far, only a human can get a “sense” or “hunch” or “intuition” about a box with some flashing lights running something called CB Unix. There is, in my opinion, a one-way ticket down the sliding board to system failure with the 2025 approach to most software. Think about that the next time you board an airplane or head to the hospital for heart surgery.

Net net: Software excitement ahead. And that’s a prediction for 2026. I have a high level of confidence in this peek at the horizon.

Stephen E Arnold, December 31, 2025

Consultants, the Sky Is Falling. The Sky Is Falling But Billing Continues

December 30, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


Have you ever heard of a person with minimal social media footprints named Veronika Kapustina? I sure hadn’t. She popped up as a chief executive officer of an instant mashed potato-type of company named TON Strategy Company. I was listening to her Russian-accented presentation at a crypto conference via YouTube. My mobile buzzed and I saw a link from one of my team to this story: “Why the McKinsey Layoffs Are a Warning Signal for Consulting in the AI Age.”

Yep, the sky is falling, the sky is falling. As an 81 year old dinobaby, I recall my mother reading me a book in which a cartoon character was saying, “The sky is falling.” News flash: The sky is still there, and I would wager a devalued US$1.00 that the sky will be “there” tomorrow.

This sort of strange write up asserts:

While the digital age reduced information asymmetry, the AI age goes further. It increasingly equalizes analytical and recommendation capabilities.

The author is allegedly a former blue chip consultant. Therefore, this person knows whereof he opines. The main point is that consulting firms may not need to hire freshly credentialed MBAs. The grunt work can be performed by an AI system. True, most AI systems make up information; estimates put the made-up share of outputs at between 10 and 33 percent. I suppose that’s about par for an MBA whose father is a United States senator or the CEO of a client of the blue chip firm. Maybe the progeny of the powerful are just smarter than an AI. I don’t know. I am a dinobaby, and it has been decades since I worked at a blue chip outfit.

What pulled me away from looking for information about the glib Russian, Veronika K? That’s an easy question. I think the write up misses the entire point of a blue chip consulting firm and of some quite specialized consulting firms like the nuclear outfit that employed me before I jumped to the blue chip’s carpetland.

The cited write up states as actual factual:

As the center of gravity shifts toward execution depth and the ability to drive continuous change, success will depend on how effectively firms rewire their DNA—building the operating model and talent engine required to implement and scale tech-led transformation.

Yep, this sounds wise. Success depends on clients “rewiring their DNA.” Okay, but what do consulting firms have to do? Those outfits have to rewire as well and that spells T-R-O-U-B-L-E for big outfits whether they do big think stuff like business strategy or financial engineering or for outfits that do semi-strategy and plumbing.

The hybrid teams from a blue chip consultant are poised to start work immediately. Rates are the same, but fewer humans means more profits. It helps that the intern’s father is the owner of the business devastated by a natural disaster. Thanks, Qwen, good enough.

Let me provide a different view of the sky is falling notion.

- As stated, the sky is not falling. Change happens. Some outfits adapt; others don’t. Hasta la vista, Arthur Andersen.

- Blue chip consulting firms (strategy or financial engineering) are like comfort food. The vast majority of engagements are follow-on or repeat business. There are tactics in place to make this happen. Clients like to eat burgers and pizza. Consultants sell the knowledge-processed goodies.

- New hires don’t always do “real” work. New hires provide (hopefully) connections, knowledge not readily available to the firm, and the equivalent of Russia’s meat assaults. Hey, someone has to work on that study of world economic change due in 14 days.

- Clients hire consultants for many reasons; for example, to help get a colleague fired or sent to the office in Nome, Alaska; to have the prestige halo at the country club; to add respectability to what is a criminal enterprise (hello, Arthur Andersen. Remember Enron’s special purpose vehicles? No just-graduated MBA thinks those up at a fraternity party, do they?)

Translating this to real world consulting impact means:

- Old line dinobaby consultants like me will grouse that AI is wrong and must be checked by someone who knows when the AI system is in hallucination mode and can fix the error before something bad like fentanyl happens

- Good enough AI will diffuse because AI is cheaper than humans who have to be managed (who wants to do that?), given health care, and provided with undeserved and unpredictable vacation requests, and a retirement account (who really wants to retire after 30 years at a blue chip consultant? I sure didn’t. Get the experience and get out of Dodge).

- Consulting is like love, truth (really popular these days), justice, and the American way. These reference points mean that as more actual hands-on work becomes a service, consulting, not software, consumes every revenue generating function. If some big firms disappear, that’s life.

Consulting is forever and someone will show up to buy up a number of firms in a niche and become the new big winner. The dinobaby blue chips will just coalesce into one big firm. At the outfit which employed me, we joked about how similar our counterparts were at other firms. We weren’t individuals. We were people who could snap in, learn quickly, and output words that made clients believe they had just learned something like e=mc^2. Others were happy to have that idiot in the Pittsburgh office sent to learn the many names of snow in Nome.

The sky is not falling. The sun is rising. A billable day arrives for the foreseeable future.

Stephen E Arnold, December 30, 2025

Is This Correct? Google Sues to Protect Copyright

December 30, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


This headline stopped me in my tracks: “Google Lawsuit Says Data Scraping Company Uses Fake Searches to Steal Web Content.” The way my dinobaby brain works, I expect to see a publisher taking the world’s largest online advertising outfit in the crosshairs. But I trust Thomson Reuters because they tell me they are the trust outfit.

Google apparently cannot stop a third party from scraping content from its Web site. Is this SEO outfit operating at a level of sophistication beyond the ken of Mandiant, Gemini, and the third-party cyber tools the online giant has? Must be, I guess. Thanks, Venice.ai. Good enough.

The alleged copyright violator in this case seems to be one of those estimable, highly professional firms engaged in search engine optimization. Those are the folks Google once saw as helpful to the sale of advertising. After all, if a Web site is not in a Google search result, that Web site does not exist. Therefore, to get traffic or clicks, the Web site “owner” can buy Google ads and, of course, make the Web site Google compliant. Maybe the estimable SEO professional will continue to fiddle and doctor words in a tireless quest to eliminate the notion of relevance in Google search results.

Now an SEO outfit is on the wrong side of what Google sees as the law. The write up says:

Google on Friday [December 19, 2025] sued a Texas company that “scrapes” data from online search results, alleging it uses hundreds of millions of fake Google search requests to access copyrighted material and “take it for free at an astonishing scale.” The lawsuit against SerpApi, filed in federal court in California, said the company bypassed Google’s data protections to steal the content and sell it to third parties.

To be honest the phrase “astonishing scale” struck me as somewhat amusing. Google itself operates on “astonishing scale.” But what is good for the goose is obviously not good for the gander.

I asked You.com to provide some examples of people suing Google for alleged copyright violations. The AI spit out a laundry list. Here are four I sort of remembered:

- News Outlets & Authors v. Google (AI Training Copyright Cases)

- Google Users v. Google LLC (Privacy/Data Class Action with Copyright Claims)

- Advertisers v. Google LLC (Advertising Content Class Action)

- Oracle America, Inc. v. Google LLC

My thought is that with some experience in copyright litigation, Google is probably confident that the SEO outfit broke the law. I wanted to word it “broke the law which suits Google” but I am not sure that is clear.

Okay, which company will “win”? An SEO firm with resources slightly less robust than Google’s, or Google?

Place your bet on one of the online gambling sites advertising everywhere at this time. Oh, Google permits online gambling ads in areas allowing gambling and with appropriate certifications, licenses, and compliance functions.

I am not sure what to make of this because Google’s ability to filter, its smart software, and its security procedures obviously are either insufficient, don’t work, or are full of exploitable gaps.

Stephen E Arnold, December 30, 2025

Telegram Notes: Occasional Observations

December 29, 2025

![green-dino_thumb_thumb[3]_thumb green-dino_thumb_thumb[3]_thumb](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/12/green-dino_thumb_thumb3_thumb_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


My new Telegram Notes’ service is coming along. Watch Beyond Search for a link to the stories. We will post a short summary of a story in Beyond Search, which I have been producing since 2008. The longer Telegram Notes’ essays will appear in the new service. We are testing a couple of options. We have a masthead. The art was produced by Google and Venice smart software. If you know nothing about Telegram, its Messenger Service, or its new financial services, the illustration won’t make much sense. If you know a bit about Telegram, you will know about the GOAT. The references to crypto and other content are references to allegations about the content on the platform.

The working version of the Telegram Notes’ masthead. Thanks, AI services. You are good enough.

Beyond Search will continue to host new articles in our traditional format. However, as we figure out how to best use our time, the flow of stories is likely to be uneven. (Personally I love the GOAT picture. But what’s that on the information highway beneath the GOAT? Probably nothing.)

Stephen E Arnold, December 29, 2025

Salesforce: Winning or Losing?

December 29, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/12/green-dino_thumb_thumb3_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


One of the people on my team told me at our holiday staff lunch (I had a coupon, thank you) that Salesforce signed up thousands of new customers because of AI. Lunch included one holiday beverage, so I dismissed this statement as one of those wild and crazy end-of-year statements from a person who had just consumed one burrito deluxe and a chicken fajita. Let’s assume the number is correct.

That means whatever the leadership of Salesforce is doing, that team is winning. AI may be one reason for the sales success if true. On the other hand, I had the small chicken burrito and an iced tea. I said, “Maybe Microsoft is screwing up with AI so much, commercial outfits are turning to Salesforce?”

The answer for many in Silicon Valley is, “Go in.” Forget the Chinese focus on practical applications of smart software. Go in, win big. Thanks, Venice.ai. Good enough.

Of course, no one agreed. The conversation turned to the hopelessness of catching flights home and the lack of people in my favorite coupon issuing Mexican themed restaurant in rural Kentucky. I stopped thinking about Salesforce until…

I read “Salesforce Regrets Firing 4000 Experienced Staff and Replacing Them with AI.” The write up is interesting. If the statements in the document are accurate, AI may become a bit of a problem for some outfits in 2026. Be sure to read the original essay by an entity identified as Maarthandam. I am only going to highlight a couple of sentences.

The first passage I noted is:

You cannot put a bot where a human is supposed to be. “We assumed the technology was further along than it actually was.”

Well, that makes sense. High technology folks read science fiction instead of Freddy the Pig books when in middle school. The efforts to build smart software, data centers in space, ray guns, etc. etc. are works in progress. Plus the old admonition of high school teachers, “Never assume, or you will make an ‘ass’ of ‘u’ and ‘me,’” seems to have been ignored. Yeah. Wizards.

The second passage of interest to me was:

Executives now concede that removing large numbers of trained support staff created gaps that AI could not immediately fill, forcing teams to reassign remaining employees and increase human oversight of automated systems.

The main idea for me is that humans have to do extra work to keep AI from creating more problems. These are problems unique to a situation. Therefore, something more than today’s AI systems is needed. The “something” is humans who can keep the smart software from wandering into the no man’s land of losing a customer or having a smart self driving car kill the Mission District’s most loved stray cat. Yeah. More humans, expensive ones at that.

The final passage I circled was:

Salesforce has now begun reframing its AI strategy, shifting away from the language of replacement toward what executives call “rebalancing.”

This passage means to this dinobaby, “We screwed up, have paid a consultant to craft some smarmy words, and are trying to contain the damage.” I may be wrong, but the Salesforce situation seems to be a mess. Yeah, leadership Silicon Valley style.

Net net: Is that a blimp labeled “Enron” circling the Salesforce offices?

Stephen E Arnold, December 29, 2025

Way to Go, Waymo: The Non-Googley Drivers Are Breaking the Law

December 26, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


To be honest, I like to capture Googley moments in the real world. Forget the outputs of Google’s wonderful Web search engine. (Hey, where did those pages of links go?) The Google-reality interface is a heck of a lot more fun.

Consider this article, which I assume like everything I read on the Internet, to be rock solid capital T truth. “Waymo Spotted Driving Wrong Way Down Busy Street.” The write up states as actual factual:

This week, one of Waymo’s fully driverless cabs was spotted blundering down the wrong side of a street in Austin, Texas, causing the human motorists driving in the correct direction to cautiously come to a halt, not unlike hikers encountering a bear.

That was no bear. That was a little Googzilla. These creatures, regardless of physical manifestation, operate by a set of rules and cultural traditions understandable only to those who have been in the Google environment.

Thanks to none of the AI image generators. I had to use three smart software systems to create a pink car driving the wrong way on a one-way street. Great proof of a tiny problem with today’s best: ChatGPT, Venice, and MidJourney. Keep up the meh work.

The cited article continues:

The incident was captured in footage uploaded to Reddit. For a split second, it shows the Waymo flash its emergency signal, before switching to its turn signal. The robotaxi then turns in the opposite direction indicated by its blinker and pulls into a gas station, taking its sweet time.

I beg to differ. Google does not operate on “sweet time.” Google time is a unique way to move toward its ultimate goal: Humans realizing that they are in the path of a little Googzilla. Therefore, adapt to the Googleplex. The Googleplex does not adapt to humanoids. Humanoids click and buy things. Google facilitates this by allowing humanoids to ride in little Googzilla vehicles and absorb Google advertisements.

The write up illustrates that it fails to grasp the brilliance of the Googzilla’s smart software; to wit:

Waymo recalled a software patch after its robotaxis were caught blowing past stopped school buses with active warning lights and stop signs, including at least one incident where a Waymo drove right by students who were disembarking. Twenty of these incidents were reported in Austin alone, MySA noted, prompting the National Highway Traffic Safety Administration to open an investigation into the company. It’s not just school buses: the cabs don’t always stop for law enforcement, either. Earlier this month, a Waymo careened into an active police standoff, driving just a few feet away from a suspect who was lying facedown in the asphalt while cops had their guns trained on him.

These examples point out the low level of understanding that exists among the humanoids who consume advertising. Googzilla would replace humanoids if it could, but — for now — big and little Googzillas have to tolerate the inefficient friction humanoids introduce to the Google systems.

Let’s recap:

- Humans fail to understand Google rules

- Examples of Waymo “failures” identify the specific weaknesses Gemini can correct

- Little Googzillas define traffic rules.

So what if a bodega cat goes to the big urban area with dark alleys in the sky. Study Google and learn.

Stephen E Arnold, December 26, 2025

Yep, Making the AI Hype Real Will Be Expensive. Hundreds of Billions, Probably More, Says Microsoft

December 26, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


I really don’t want to write another “if you think it, it will become real.” But here goes. I read “Microsoft AI CEO Mustafa Suleyman Says It Will Cost Hundreds of Billions to Keep Up with Frontier AI in the Next Decade.”

What’s the pitch? The write up says:

Artificial general intelligence, or AGI, refers to AI systems that can match human intelligence across most tasks. Superintelligence goes a step further — systems that surpass human abilities.

So what’s the cost? Allegedly Mr. AI at Microsoft (aka Microsoft AI CEO Mustafa Suleyman) asserts:

it’s going to cost “hundreds of billions of dollars” to compete at the frontier of AI over the next five to 10 years….Not to mention the prices that we’re paying for individual researchers or members of technical staff.

Microsoft seems to have some “we must win” DNA. The company appears to be willing to ignore users’ requests for less of that Copilot goodness.

The vice president of AI finance seems shocked by an AI wizard’s request for additional funds… right now. Thanks, Qwen. Good enough.

Several observations:

- The assumption is that more money will produce results. When? Who knows?

- The mental orientation is that outfits like Microsoft are smart enough to convert dreams into reality. That is a certain type of confidence. A failure is a stepping stone, a learning experience. No big deal.

- The hype has triggered some non-AI consequences. The lack of entry level jobs (AI is supposed to do that work) is likely to derail careers. Remember the baloney that online learning was better than sitting in a classroom. Real world engagement is work. Short circuiting that work is, in my opinion, a problem not easily corrected.

Let’s step back. What’s Microsoft doing? First, the company caught Google by surprise in 2022. Now Google is allegedly as good as or better than OpenAI’s technology. Microsoft, therefore, is the follower instead of the pace setter. The result is mild concern with a chance of fear tomorrow. The company’s “leadership” is not stabilizing the company, its messages, and its technology offerings. Wobble wobble. Not good.

Second, Microsoft has demonstrated its “certain blindness” to two corporate activities. The first is the amount of money Microsoft has spent and apparently will continue to spend. With inputs from the financially adept Mr. Suleyman, the bean counters don’t have a chance. Sure, Microsoft can back out of some data center deals and it can turn some knobs and dials to keep the company’s finances sparkling in the sun… for a while. How long? Who knows?

Third, even Microsoft fan boys are criticizing the idea of shifting from software that a user uses for a purpose to an intelligent operating system that uses its users. My hunch is that this bulldozing of user requests, preferences, and needs may be what some folks call a “moment.” Google’s Waymo killed a cat in the Mission District. Microsoft may be running over its customers. Is this risky? Who knows.

Fourth, can Microsoft deliver AI that is not like AI from other services; namely, the open source solutions that are available and the customer-facing apps built on Qwen, for example? AI is a utility and not without errors. Some reports suggest that smart software is wrong two thirds of the time. It doesn’t matter what the “real” percentage is. People now associate smart software with mistakes, not a rock solid tool like a digital tire pressure gauge.

Net net: Mr. Suleyman will have an opportunity to deliver. For how long? Who knows?

Stephen E Arnold, December 26, 2025

Forget AI AI AI. Think Enron Enron Enron

December 25, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Happy holidays AI industry. The Financial Times seems to be suggesting that lignite coal may be in your imitative socks hanging on your mantels. In the nightmare before Christmas edition of the orange newspaper, the story “Tech Groups Shift $120bn of AI Data Centre Debt Off Balance Sheets” says:

Creative financing helps insulate Big Tech while binding Wall Street to a future boom or bust.

What’s this mean? The short answer in my opinion is, “Enron Enron Enron.” That was the online oil information short cake that was inedible, choking a big accounting firm and lots of normal employees and investors. Some died. Houston and Wall Street had a problem. For years after the event, the smell of burning credibility could be detected by those with sensitive noses.

Thanks, Venice.ai. Good enough.

The FT, however, is not into Enron Enron Enron. The FT is into AI AI AI.

The write up says:

Financial institutions including Pimco, BlackRock, Apollo, Blue Owl Capital and US banks such as JPMorgan have supplied at least $120bn in debt and equity for these tech groups’ computing infrastructure, according to a Financial Times analysis.

So what? The FT says:

That money is channeled through special purpose holding companies known as SPVs. The rush of financings, which do not show up on the tech companies’ balance sheets, may be obscuring the risks that these groups are running — and who will be on the hook if AI demand disappoints. SPV structures also increase the danger that financial stress for AI operators in the future could cascade across Wall Street in unpredictable ways.

These sentences struck me as a little too limp. First, everyone knows what happens if AI works and creates the Big Rock Candy Mountain the tech bros will own. That’s okay. Lots of money. No worries. Second, the more likely outcome is [a] rain pours over the sweet treat and it melts gradually or [b] a huge thundercloud perches over the fragile peak and it goes away in a short time. One day a mountain and the next a sticky mess.

How is this possible? The FT states:

Data center construction has become largely reliant on deep-pocketed private credit markets, a rapidly inflating $1.7tn industry that has itself prompted concerns due to steep rises in asset valuations, illiquidity and concentration of borrowers.

The FT does not mention the fact that there may be insufficient power, water, and people to pull off the data center boom. But that’s okay, the FT wants to make clear that “risky lending” seems to be the go-to approach for some of the hopefuls in the AI AI AI hoped-for boom.

What can make the use of financial engineering to do Enron Enron Enron maneuvers more tricky? How about this play:

A number of tech bankers said they had even seen securitization deals on AI debt in recent months, where lenders pool loans and sell slices of them, known as asset-backed securities, to investors. Two bankers estimated these deals currently numbered in the single-digit billions of dollars. These deals spread the risk of the data center loans to a much wider pool of investors, including asset managers and pension funds.

When playing Enron Enron Enron games, the idea is that “special purpose vehicles” or SPVs reduce financial risk. Just create a separate legal entity with its own balance sheet. If the SPV burns up (salute to Enron), the parent company’s assets are in theory protected. Enron’s money people cooked up some chrome trim for their SPVs; for example, just fund the SPVs with Enron stock. What could go wrong? Nothing, unless the stock tanked. It did. Bingo, another big flame out. Great idea as long as the rain clouds did not park over Big Rock Candy Mountain. But the rains came and stayed.

The result is that the use of these financial fancy dance moves suggests that some AI AI AI outfits are learning the steps to the Enron Enron Enron boogie.

Several observations:

- The “think it and it will work” folks in the AI AI AI business have some doubters among their troops

- The push back about AI leads to wild and crazy policies like those promulgated by Einstein’s old school. See ETH’s AI Policies. These indicate no one is exactly sure what to do with AI.

- Companies like Microsoft are experiencing what might be called post-AI AI AI digital Covid. If the disease spreads, trouble looms until herd immunity kicks in. Time costs money. Sick AI AI AI could be fatal.

Net net: The FT has sent an interesting holiday greeting to the AI AI AI financial engineers. 2026 will be exciting and perhaps a bit stressful for some in my opinion. AI AI AI.

Stephen E Arnold, December 25, 2025

Google Web Indexing: Some Think It Is Degrading. Impossible

December 25, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I think Google’s Web indexing is the cat’s pajamas. It is the best. It has the most Web pages. It digs deep into sites few people visit like the Inter-American Foundation. Searches are quick, especially if you don’t use Gemini which seems to hang in dataspace today (December 12, 2025).

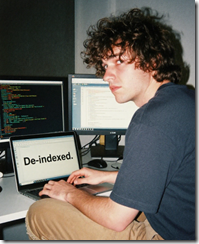

Imagine my reaction when I read that a person using a third-party blog publishing service was de-indexed. Now the pointer is still there, but it is no longer displayed by the esteemed Google system. You can read the human’s version of the issue he encountered in “Google De-Indexed My Entire Bear Blog and I Don’t Know Why.”

Google has brightened the day of a blogger. Google understands advertising. Some other things are bafflers to Googzilla. Thanks, Qwen. Good enough, but you spelled “de-indexed” correctly.

The write up reveals the issue. The human clicked on something and Google just followed its rules. Here’s the “why” from the source document:

On Oct 14, as I was digging around GSC, I noticed that it was telling me that one of the URLs weren’t indexed. I thought that was weird, and not being very familiar with GSC, I went ahead and clicked the “Validate” button. Only after did I realized that URL was the RSS feed subscribe link, https://blog.james-zhan.com/feed/?type=rss, which wasn’t even a page so it made sense that it hadn’t been indexed, but it was too late and there was no way for me to stop the validation.

The essay explains how Google’s well crafted system responded to this signal to index an invalid URL. Google could have taken time to add a message like “Are you sure?” or maybe a statement saying, “Clicking okay will cause de-indexing of the content at the root URL.” But Google, with its massive amounts of user behavior data, knows that its interfaces are crystal clear. The vast majority of human Googlers understand what happens when they click on options to delete images from the Google Cloud. Or, when a Gmail user tries to delete old email using the familiar from: command.

But the basic issue is that a human caused the de-indexing.

What’s interesting about the human’s work around is that those actions could be interpreted as a gray or black hat effort to fiddle with Google’s exceptional approach to indexing. Here’s what the human did:

I copied my blog over to a different subdomain (you are on it right now), moved my domain from GoDaddy to Porkbun for URL forwarding, and set up URL forwarding with paths so any blog post URLs I posted online will automatically be redirected to the corresponding blog post on this new blog. I also avoided submitting the sitemap of the new blog to GSC. I’m just gonna let Google naturally index the blog this time. Hopefully, this new blog won’t run into the same issue.

I would point out that “hope” is not often an operative concept at the Google.

What’s interesting about this essay about a human error is that it touched a nerve amongst the readers of Hacker News. Here are a few comments about this human error:

- PrairieFire offers this gentle observation: “Whether or not this specific author’s blog was de-indexed or de-prioritized, the issue this surfaces is real and genuine. The real issue at hand here is that it’s difficult to impossible to discover why, or raise an effective appeal, when one runs afoul of Google, or suspects they have. I shudder to use this word as I do think in some contexts it’s being overused, I think it’s the best word to use here though: the issue is really that Google is a Gatekeeper.”

- FuturisticLover is a bit more direct: “Google search results have gone sh*t. I am facing some deindexing issues where Google is citing a content duplicate and picking a canonical URL itself, despite no similar content. Just the open is similar, but the intent is totally different, and so is the focus keyword. Not facing this issue in Bing and other search engines.”

- Aldipower raises a question about excellence and domination of Web search technology: “Yeah, Google search results are almost useless. How could they have neglected their core competence so badly?”

Several observations are warranted:

- Don’t click on any Google button unless one does one’s homework. Interpreting Google speak without having fluency in the lingo can result in some interesting downstream consequences

- Google is unlikely to change due to its incentive programs. One does not get promoted for fixing up a statement that could lead to a site being removed from public view. One gets the brass ring for doing AI which hopefully works more reliably than Gemini today (December 12, 2025)

- Quite a few people posting to this Hacker News item don’t have the same level of affection I have for the scintillating Google search experience.

Net net: Get with the program. The courts have spoken in the US. The EU just collects money. Users consume ads. Adapt. My suggestion is to not screw around too much; otherwise, Bear Blogs might be de-indexed by an annoyed search administrator in Switzerland.

Stephen E Arnold, December 25, 2025