Japan Alleges Google Is a Monopoly Doing Monopolistic Things. What?

April 28, 2025

No AI, just the dinobaby himself.

The Google has been around a couple of decades or more. The company caught my attention, and I wrote three monographs for a now-defunct publisher in a very damp part of England. These are now out of print, but their titles illustrate my perception of what I affectionately call Googzilla:

- The Google Legacy. I tried to explain why Google’s approach was going to define how future online companies built their technical plumbing. Yep, OpenAI in all its charm is a descendant of those smart lads, Messrs. Brin and Page.

- Google Version 2.0. I attempted to document the shift in technical focus from search relevance to a more invasive approach to using user data to generate revenue. The subtitle, I thought at the time, gave away the theme of the book: “The Calculating Predator.”

- Google: The Digital Gutenberg. I presented information about how Google’s “outputs,” ranging from search results to more sophisticated content structures like profiles of people, places, and things, were preparing Google to reinvent publishing. I was correct because the new head of search (Prabhakar Version 2.0) is making little reports the big thing in search results. This will doom many small publications because Google just tells you what it wants you to know.

I wrote these monographs between 2002 and 2008. I must admit that my 300-page Enterprise Search Report sold more copies than my Google work. But I think my Google trilogy explained what Googzilla was doing. No one cared.

Now I learn “Japan orders Google to stop pushing smartphone makers to install its apps.”* Okay, a little slow on the trigger, but government officials in the land of the rising sun figured out that Google is doing what Google has been doing for decades.

Enlightenment arrives!

The article reports:

Japan has issued a cease-and-desist order telling Google to stop pressuring smartphone makers to preinstall its search services on Android phones. The Japan Fair Trade Commission said on Tuesday Google had unfairly hindered competition by asking for preferential treatment for its search and browser from smartphone makers in violation of the country’s anti-monopoly law. The antitrust watchdog said Google, as far back as July 2020, had asked at least six Android smartphone manufacturers to preinstall its apps when they signed the license for the American tech giant’s app store…

Google has been to this rodeo before. At the end of a legal process, Google will apologize, write a check, and move on down the road.

The question for me is, “How many other countries will see Google as a check writing machine?”

Quite a few in my opinion. The only problem is that these actions have taken many years to move from the thrill of getting a Google mouse pad to actual governmental action. (The best Google freebie was its flashing LED pin. Mine corroded and no longer flashed. I dumped it.)

Note for the *: Links to Microsoft “news” stories often go dead. Suck it up and run a query for the title using Google, of course.

Stephen E Arnold, April 28, 2025

6G: The Promise of 5G Actually Fulfilled?

January 20, 2025

Here is an interesting philosophical question: At what point does virtual reality cross over into teleportation? For some of us, the answer is a clear “never.” For Light Reading, however, “6G Could Be the World’s First Teleportation Tech.” Would a device that accurately reproduces all five senses be the same as being there? International Editor Iain Morris writes:

“As far-fetched as this might all sound, it is the vision of several academics at the UK’s University of Surrey. Professors Rahim Tafazolli, David Hendon and Ian Corden volunteer it as an example of how a future 6G standard could be far more revolutionary than its predecessors. ‘We are turning the science fiction of teleportation into science fact,’ Tafazolli told Light Reading in a bold pitch.”

So, 6G won’t just mean another speed jump and latency drop? Not if this team has its way. The differences between 5G and 6G are still very much up in the air. Tafazolli believes it is time for a grander vision (and bigger profits). We learn:

“While the idea of virtual teleportation is an obvious attention grabber, there is much more to the vision. In a white paper published last year, the University of Surrey recognized that without progress in areas such as time synchronization, slashing latency to a level inconceivable on even the most sophisticated 5G network, virtual reality will continue to have limits. That same paper notes the importance of foundational technologies, including massive MIMO (for more advanced antennas), more intelligent core networks and even open RAN, an in-vogue radio system designed to improve interoperability between vendors. Many of them figure in today’s early 5G networks. Indeed, Tafazolli’s reference to a ‘network of networks’ is reminiscent of language used to describe older technology ecosystems. He envisages a mixture of short range, wide area and satellite networks as the basis for 6G, implying it will build heavily on existing infrastructure.”

Skeptics point to certain experimental technologies required to make such “teleportation” a reality. Just how far are we from commercially available virtual taste buds or electronic skin? Close enough for this team of academics to stand firm in their conviction, apparently. Just one request: Do us Star Trek fans a favor and come up with a different name for this ultra realistic VR. “Teleportation” is taken.

Cynthia Murrell, January 20, 2025

AI the New Battlefield in Cyberattack and Defense

October 19, 2020

It was inevitable: in the struggle between cybercrime and security, each side constantly strives to stay a step ahead of the other. Now, both bad actors and protectors are turning to AI tools. Darktrace’s Max Heinemeyer describes the escalation in “War of the Algorithms: The Next Evolution of Cyber Attacks,” posted at Information Age. He explains:

“In recent years, thousands of organizations have embraced AI to understand what is ‘normal’ for their digital environment and identify behavior that is anomalous and potentially threatening. Many have even entrusted machine algorithms to autonomously interrupt fast-moving attacks. This active, defensive use of AI has changed the role of security teams fundamentally, freeing up humans to focus on higher level tasks. … In what is the attack landscape’s next evolution, hackers are taking advantage of machine learning themselves to deploy malicious algorithms that can adapt, learn, and continuously improve in order to evade detection, signaling the next paradigm shift in the cyber security landscape: AI-powered attacks. We can expect Offensive AI to be used throughout the attack life cycle – be it to use natural language processing to understand written language and to craft contextualized spear-phishing emails at scale or image classification to speed up the exfiltration of sensitive documents once an environment is compromised and the attackers are on the hunt for material they can profit from.”

Forrester recently found that nearly 90% of the security pros it surveyed expect AI attacks to become common within the year. Tools already exist that can, for example, assess an organization’s juiciest targets based on their social media presence and then tailor phishing expeditions for the highest chance of success. On the other hand, defensive AI tools track what counts as normal activity on an organization’s network and work to block suspicious activity as soon as it begins. As each side in this digital arms race works to pull ahead of the other, the battles continue.
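The defensive idea described here, learning what is “normal” and flagging what is not, can be sketched in a few lines. This is a minimal sketch under stated assumptions, not Darktrace’s method: it uses a simple z-score against a baseline window, and the hourly connection counts are made up for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs from observed whose z-score against baseline exceeds threshold."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least two baseline points
    if sigma == 0:
        # Flat baseline: treat any deviation at all as anomalous.
        return [(i, x) for i, x in enumerate(observed) if x != mu]
    return [(i, x) for i, x in enumerate(observed) if abs(x - mu) / sigma > threshold]


# Assumption: hypothetical outbound connections per hour for one host.
baseline = [40, 42, 38, 41, 39, 43, 40, 37, 42, 41]
observed = [41, 39, 180, 40]

print(flag_anomalies(baseline, observed))  # → [(2, 180)]
```

Commercial systems model many signals at once; a single-number threshold like this one simply shows the “baseline, then deviation” logic.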

Cynthia Murrell, October 19, 2020

Dark Web Search: Specialized Services Are Still Better

March 26, 2020

Free Dark Web search is a hit-and-miss solution. In fact, “free” Dark Web search is often useless. Some experts do not agree with DarkCyber’s view, however. The reason is that these experts may not be aware of the specialized services available to government agencies and qualified licensees.

Here’s a recent example of cheerleading for a limited Dark Web search system.

Digital Shadows, in the article “Dark Web Search Engine Kilos: Tipping The Scales In Favor Of Cybercrime,” says that the Dark Web lacked a search engine until now. Until 2017, a search engine dubbed Grams specialized in searching the Dark Web. It was taken down; its creator, Larry Harmon, is the alleged operator of Helix, a Bitcoin tumbling service. The Dark Web then had no search engine until Kilos debuted in November 2019.

Kilos piggybacks on the same concept as Grams: using a Google-like search structure to locate illegal goods and services, bad actors, and cybercriminal marketplaces. Kilos indexes more platforms, offers more search functions, and includes many ways to ensure that users remain anonymous. Judging by their names, both units of measure, Grams and Kilos are clearly linked.

Grams was the leading Dark Web search engine because it searched everywhere, including Dream Market, Hansa, and AlphaBay, and users could also hide their Bitcoin transactions via Helix. However, Grams lacked a powerful infrastructure for crawling and indexing the Internet, and it was expensive to maintain. These weaknesses led to its going dark in 2017.

The argument is that Kilos, with its more robust and powerful crawler and indexer, now dominates the Dark Web search scene. It has already indexed Samsara, Versus, Cannazon, CannaHome, and Cryptonia. It also offers far more functions for filtering search results. Kilos indexes more of the Dark Web’s content every day, and it has a feature Grams lacked:

“Since the site’s creation in November 2019, the Kilos administrator has not only focused on increasing the site’s index but has also implemented updates and added new features and services to the site. These updates and features ensure the security and anonymity of its users but have also added a human element to the site not previously seen on dark web-based search engines, by allowing direct communication between the administrator and the users, and also between the users themselves.”

Kilos is adding more services to keep its users happy and anonymous. Among the upgrades are a CAPTCHA ranking system, faster search algorithm, a new Bitcoin mixer service, live chat, and ways to directly communicate with the administration.

Kilos sounds like an impressive search startup, but wipe away the technology and it’s another tool to help bad actors hurt and break the system.

So what’s the issue? Kilos focuses on Dark Web storefronts, not on the higher-value content in other, difficult-to-index Dark Web content pools.

But PR is PR, even in the Dark Web world.

Whitney Grace, March 26, 2020

Into R? A List for You

May 12, 2019

Computerworld, which runs some pretty unusual stories, published “Great R Packages for Data Import, Wrangling and Visualization.” “Great” is an interesting word. In the lingo of Computerworld, a real journalist did some searching, talked to some people, and created a list. As it turns out, the effort is useful. Looking at the Computerworld table is quite a bit easier than trying to dig information out of assorted online sources. Plus, people are not too keen on the phone and email thing now.

The listing includes a mixture of different tools, software, and utilities. There are more than 80 listings. I wasn’t sure what to make of XML’s inclusion in the list, but the source is Computerworld, and I assume that the “real” journalist knows much more than I.

Three observations:

- Earthworm lists without classification or alphabetization are less useful to me than listings which are sorted by tags and alphabetized within categories. Excel does perform this helpful trick.

- Some items in the earthworm list have links and others do not. Consistency, I suppose, is the hobgoblin of some types of intellectual work.

- An indication of which item is free or for fee would be useful too.
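The tag-then-alphabetize listing I prefer is easy to produce outside Excel too. Here is a minimal Python sketch, assuming each entry carries a hypothetical tag, a free/fee flag, and an optional link; the entries are illustrative R packages, not rows copied from the Computerworld table.

```python
from collections import defaultdict


def group_and_sort(entries):
    """Group entries by tag (tags sorted) and alphabetize names case-insensitively within each tag."""
    groups = defaultdict(list)
    for entry in entries:
        groups[entry["tag"]].append(entry)
    return {
        tag: sorted(items, key=lambda e: e["name"].lower())
        for tag, items in sorted(groups.items())
    }


# Assumption: illustrative entries; the tags, "free" flags, and missing links are hypothetical.
entries = [
    {"name": "tidyr", "tag": "wrangling", "free": True, "url": None},
    {"name": "ggplot2", "tag": "visualization", "free": True, "url": "https://ggplot2.tidyverse.org"},
    {"name": "readr", "tag": "import", "free": True, "url": None},
    {"name": "dplyr", "tag": "wrangling", "free": True, "url": "https://dplyr.tidyverse.org"},
    {"name": "rio", "tag": "import", "free": True, "url": None},
]

for tag, items in group_and_sort(entries).items():
    print(tag)
    for e in items:
        cost = "free" if e["free"] else "fee"
        link = e["url"] or "(no link)"  # make missing links visible instead of silent
        print(f"  {e['name']} [{cost}] {link}")
```

Printing “(no link)” and “free”/“fee” explicitly addresses the other two gripes: inconsistency becomes visible rather than hidden.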

Despite these shortcomings, you may want to download the list and tuck it into your “Things I love about R” folder.

Stephen E Arnold, May 12, 2019

Newspapers and Google Grants

March 4, 2016

I read “Google Continuing Effort to Win Allies Amid Europe Antitrust Tax Probes.” (You may have to pay to view this article and its companion “European newspapers Get Google Grants.” Hey, Mr. Murdoch has bills to pay.)

As you may know, Google has had an on again off again relationship with “real” publishers. Also, Alphabet Google finds itself snared in some income tax matters.

The write up points out:

Alphabet Inc.’s Google … awarded a set of grants to European newspapers and offered to help protect them from cyber attacks, continuing an effort to win allies while it faces both antitrust and tax probes.

I find this interesting. Has Alphabet Google “trumped” some of its early activities? I like that protection against cyber attacks too. Does that mean that Alphabet Google does not protect other folks against cyber attacks?

Stephen E Arnold, March 4, 2016

CyberOSINT Study Findings Video Available

May 5, 2015

The third video summarizing Stephen E Arnold’s “CyberOSINT: Next Generation Information Access” is available. Grab your popcorn. The video is at this link.

Kenny Toth, May 5, 2015

Recorded Future: The Threat Detection Leader

April 29, 2015

The Exclusive Interview with Jason Hines, Global Vice President at Recorded Future

My analyses of Google technology found that, despite the search giant’s significant technical achievements, Google has a weakness. That “issue” is the company’s comparatively weak time capabilities. Identifying the specific time at which an event took place or is taking place is a very difficult computing problem. Time is essential to understanding the context of an event.

This point becomes clear in the answers to my questions in the Xenky Cyber Wizards Speak interview, conducted on April 25, 2015, with Jason Hines, one of the leaders in Recorded Future’s threat detection efforts. You can read the full interview with Hines on the Xenky.com Cyber Wizards Speak site at the Recorded Future Threat Intelligence Blog.

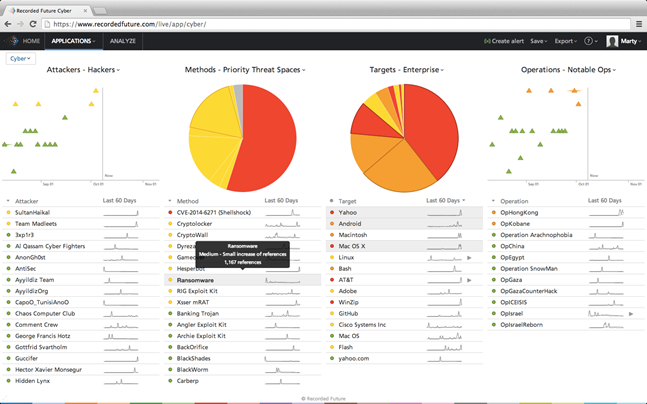

Recorded Future is a rapidly growing, highly influential startup spawned by a team of computer scientists responsible for the Spotfire content analytics system. The team set out in 2010 to use time as one of the linchpins in a predictive analytics service. The idea was simple: Identify the time of actions, apply numerical analyses to events related by semantics or entities, and flag important developments likely to result from signals in the content stream. The goal was to use time as the foundation of a next generation analysis system, complete with visual representations of otherwise unfathomable data from the Web, including forums, content hosting sites like Pastebin, social media, and so on.

A Recorded Future data dashboard makes it easy for law enforcement or intelligence professionals to identify important events and, with a mouse click, zoom to the specific data of importance to an investigation. (Used with the permission of Recorded Future, 2015.)
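The time-centric idea described above (time-stamp the actions, relate events by entity, flag unusual developments) can be illustrated with a toy spike detector. This is a minimal sketch, not Recorded Future’s engine: it assumes events arrive as hypothetical (date, entity) pairs and flags an entity when its daily mention count jumps well above its trailing average.

```python
from collections import Counter
from datetime import date, timedelta


def flag_spikes(events, window=3, factor=3.0, min_count=3):
    """Flag (entity, day, count) where an entity's mentions on a day are at least
    min_count and exceed factor times its average over the previous `window` days."""
    counts = Counter(events)  # (day, entity) -> number of mentions that day
    flagged = []
    for (day, entity), n in sorted(counts.items()):
        prior = [counts.get((day - timedelta(days=k), entity), 0) for k in range(1, window + 1)]
        trailing_avg = sum(prior) / window
        if n >= min_count and n > factor * trailing_avg:
            flagged.append((entity, day, n))
    return flagged


# Assumption: a hypothetical event stream, one (date, entity) pair per mention.
d0 = date(2015, 4, 1)
events = (
    [(d0, "acme-bank")] * 1
    + [(d0 + timedelta(days=1), "acme-bank")] * 2
    + [(d0 + timedelta(days=2), "acme-bank")] * 1
    + [(d0 + timedelta(days=3), "acme-bank")] * 9
    + [(d0 + timedelta(days=3), "other-co")] * 1
)

print(flag_spikes(events))
```

The `min_count` floor keeps quiet early days from being flagged merely because their trailing average is zero.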

Five years ago, the tools for threat detection did not exist. Components like distributed content acquisition and visualization provided significant benefits to enterprise and consumer applications. Google, for example, built a multi-billion-dollar business using distributed processes for Web searching. Salesforce.com integrated visualization into its cloud services to allow its customers to “get insight faster.”

According to Jason Hines, one of the founders of Recorded Future and a former Google engineer, “When our team set out about five years ago, we took on the big challenge of indexing the Web in real time for analysis, and in doing so developed unique technology that allows users to unlock new analytic value from the Web.”

Recorded Future attracted attention almost immediately. In what was an industry first, Google and In-Q-Tel (the investment arm of the US government) invested in the Boston-based company. Threat intelligence is a field defined by Recorded Future. The ability to process massive real time content flows and then identify hot spots and items of interest to a matter allows an authorized user to identify threats and take appropriate action quickly. Fueled by events like the security breach at Sony and cyber attacks on the White House, threat detection is now a core business concern.

The impact of Recorded Future’s innovations on threat detection was immediate. Traditional methods relied on human analysts. These methods worked but were and are slow and expensive. The use of Google-scale content processing combined with “smart mathematics” opened the door to a radically new approach to threat detection. Security, law enforcement, and intelligence professionals understood that sophisticated mathematical procedures combined with a real-time content processing capability would deliver a new and sophisticated approach to reducing risk, which is the central focus of threat detection.

In the exclusive interview with Xenky.com, the law enforcement and intelligence information service, Hines told me:

Recorded Future provides information security analysts with real-time threat intelligence to proactively defend their organization from cyber attacks. Our patented Web Intelligence Engine indexes and analyzes the open and Deep Web to provide you actionable insights and real-time alerts into emerging and direct threats. Four of the top five companies in the world rely on Recorded Future.

Although its blue-ribbon technology has the support of organizations widely recognized as the most sophisticated in the technology sector, Recorded Future’s technology is a response to customer needs in the financial, defense, and security sectors. Hines said:

When it comes to security professionals we really enable them to become more proactive and intelligence-driven, improve threat response effectiveness, and help them inform the leadership and board on the organization’s threat environment. Recorded Future has beautiful interactive visualizations, and it’s something that we hear security administrators love to put in front of top management.

As the first mover in the threat intelligence sector, Recorded Future makes it possible for an authorized user to identify high risk situations. The company’s ability to help forecast and spotlight threats likely to signal a potential problem has obvious benefits. For security applications, Recorded Future identifies threats and provides data which allow adaptive perimeter systems like intelligent firewalls to proactively respond to threats from hackers and cyber criminals. For law enforcement, Recorded Future can flag trends so that investigators can better allocate their resources when dealing with a specific surveillance task.

Hines told me that financial and other consumer-centric firms can tap Recorded Future’s threat intelligence solutions. He said:

We are increasingly looking outside our enterprise and attempt to better anticipate emerging threats. With tools like Recorded Future we can assess huge swaths of behavior at a high level across the network and surface things that are very pertinent to your interests or business activities across the globe. Cyber security is about proactively knowing potential threats, and much of that is previewed on IRC channels, social media postings, and so on.

In my new monograph CyberOSINT: Next Generation Information Access, Recorded Future emerged as the leader in threat intelligence among the 22 companies offering NGIA services. To learn more about Recorded Future, navigate to the firm’s Web site at www.recordedfuture.com.

Stephen E Arnold, April 29, 2015

Medical Search: A Long Road to Travel

April 13, 2015

Do you want a way to search medical information without false drops, without having to learn specialized vocabularies, and without Boolean? Apparently the purveyors of medical search systems have left users scratching an itch without an antihistamine within reach.

Navigate to Slideshare (yep, LinkedIn) and flip through “Current Advances to Bridge the Usability Expressivity Gap in biomedical Semantic Search.” Before reading the 51-slide deck, you may want to refresh yourself with Quertle, PubMed, MedNar, or one of the other splendiferous medical information resources for researchers.

The slide deck identifies the problems with the existing search approaches. I can relate to these points. For example, those who tout question-answering systems ignore the difficulty of passing a question from medicine to a domain consisting of math content. With math serving as the plumbing in many advanced medical processes, the weakness is a bit of a problem and has been for decades.

The “fix” is semantic search. Well, that’s the theory. I interpreted the slide deck as communicating how a medical search system called ReVeaLD would crack this somewhat difficult nut. As an aside: I don’t like the wonky spelling that some researchers and marketers are foisting on the unsuspecting.

I admit that I am skeptical about many NGIA or next generation information access systems. One reason medical research works as well as it does is its body of generally standardized controlled terms. Learn MeSH and you have a fighting chance of figuring out if the drug the doctor prescribed is going to kill off your liver as it remediates your indigestion. Controlled vocabularies in scientific, technology, engineering, and medical domains address the annoying ambiguity problems encountered when one mixes colloquial words with quasi-consultant speak. A technical buzzword is part of a technical education. It works, maybe not too well, but it works better than some of the wild and crazy systems which I have explored over the years.
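To make the controlled vocabulary point concrete, here is a minimal sketch of mapping colloquial query words to controlled headings. The tiny entry-term table is hand-made for illustration; a real system would load the full MeSH descriptor and entry-term data published by the National Library of Medicine rather than rely on a dictionary like this.

```python
import re

# Assumption: a tiny hand-made entry-term table for illustration, not loaded from MeSH files.
ENTRY_TERMS = {
    "indigestion": "Dyspepsia",
    "dyspepsia": "Dyspepsia",
    "heart attack": "Myocardial Infarction",
    "myocardial infarction": "Myocardial Infarction",
    "high blood pressure": "Hypertension",
    "hypertension": "Hypertension",
}


def to_controlled_terms(query):
    """Return the sorted controlled headings whose entry terms appear as whole words in the query."""
    text = query.lower()
    return sorted({
        heading
        for term, heading in ENTRY_TERMS.items()
        if re.search(r"\b" + re.escape(term) + r"\b", text)
    })


print(to_controlled_terms("Will my indigestion pills raise my high blood pressure?"))
# → ['Dyspepsia', 'Hypertension']
```

The colloquial “indigestion” and the technical “dyspepsia” land on the same heading, which is exactly the ambiguity-reducing job a controlled vocabulary does.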

You will have to dig through old jargon and new jargon such as entity reconciliation. In the law enforcement and intelligence fields, an entity from one language has to be “reconciled” with versions of the “entity” in other languages and from other domains. The technology is easier to market than make work. The ReVeaLD system is making progress as I understand the information in the slide deck.
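Entity reconciliation, at its simplest, maps different surface forms of a name to one canonical entity. The sketch below is a toy under loud assumptions: a hypothetical alias table, diacritic stripping with Python’s `unicodedata` module, and fuzzy matching via `difflib` as a fallback. Production systems in law enforcement and intelligence work are far more elaborate.

```python
import difflib
import unicodedata

# Assumption: hypothetical alias table, canonical entity -> known variants across languages.
ALIASES = {
    "Munich": ["munich", "munchen", "monaco di baviera"],
    "Moscow": ["moscow", "moskva", "moscou"],
}


def normalize(name):
    """Strip diacritics, casefold, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return " ".join(no_marks.casefold().split())


# Reverse index: normalized variant -> canonical entity.
VARIANT_TO_ENTITY = {normalize(v): canon for canon, variants in ALIASES.items() for v in variants}


def reconcile(name, cutoff=0.8):
    """Map a surface form to a canonical entity: exact variant match first, then fuzzy match."""
    key = normalize(name)
    if key in VARIANT_TO_ENTITY:
        return VARIANT_TO_ENTITY[key]
    close = difflib.get_close_matches(key, VARIANT_TO_ENTITY.keys(), n=1, cutoff=cutoff)
    return VARIANT_TO_ENTITY[close[0]] if close else None


for surface in ["MÜNCHEN", "Munchenn", "Moskva", "Berlin"]:
    print(surface, "->", reconcile(surface))
```

The fuzzy fallback catches typos but not true translations; “Monaco di Baviera” only resolves because it sits in the alias table, which is why the technology is easier to market than make work.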

Like other advanced information access systems, ReVeaLD has a fair number of moving parts, as the diagram on Slide 27 of the deck shows.

There is also a video available at this link. The video explains that the Granatum Project uses a constrained domain specific language. So much for cross-domain queries, gentle reader. What is interesting to me is the similarity between the ReVeaLD system and some of the cyber OSINT next generation information access systems profiled in my new monograph. There is a visual query builder, a browser for structured data, visualization, and a number of other bells and whistles.

Several observations:

- Finding relevant technical information requires effort. NGIA systems also require the user to exert effort. Finding the specific information required to solve a time critical problem remains a hurdle for broader deployment of some systems and methods.

- The computational load for sophisticated content processing is significant. The ReVeaLD system is likely to suck up its share of machine resources.

- Maintaining a system with many moving parts when deployed outside of a research demonstration presents another series of technical challenges.

I am encouraged, but I want to make certain that my one or two readers understand this point: Demos and marketing are much easier to roll out than a hardened, commercial system. Like the EC’s Promise program, ReVeaLD may have to communicate its achievements to the outside world. A long road must be followed before this particular NGIA system becomes available in Harrod’s Creek, Kentucky.

Stephen E Arnold, April 13, 2015

Lexmark Jumps into Intelligence Work

March 25, 2015

I read “Lexmark Buys Software Maker Kofax at 47% Premium in $1B Deal.” The write up focuses on Kofax’s content management services. Largely overlooked is Kofax’s Kapow Tech unit. This company provides specialized services to intelligence, law enforcement, and security entities. How will a printer company in Lexington manage the ageing Kofax technology and the more promising Kapow entity? This should be interesting. Lexmark already owns the Brainware technology and the ISYS Search Software system. Lexmark is starting to look a bit like IBM and OpenText. These companies have rolled up promising firms, only to lose their focus. Will Lexmark follow in IBM’s footsteps and cook up a Watson? I think there is still some IBM DNA in the pale blue veins of the Lexmark outfit. On the other hand, Lexmark seems to be emulating some of the dance steps emerging from the Hewlett Packard ballroom as well. Fascinating. The mid-tier consultants with waves, quadrants, and paid-for webinars will have to find a way to shoehorn hardware, health care, intelligence, and document scanning into one overview. Confused? Just wait.

Stephen E Arnold, March 25, 2015