Can You Guess What Is Making Everyone Stupid?

November 17, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I read an article in “The Stupid Issue” of New York Magazine’s Intelligencer section. Is that a dumb set of metadata for an article about stupid? That’s evidence in my book.

The write up is “A Theory of Dumb: It’s Not Just Screens or COVID or Too-Strong Weed. Maybe the Culprit of Our Cognitive Decline Is Unfettered Access to Each Other.” [sic] Did anyone notice that a question mark was omitted? Of course not. It is a demonstration of dumb, not a theory.

This is a long write up, about 4,000 words. Based on the information in the essay, I am not sure most Americans will know the meaning of the words in the article, nor will they be able to make sense of it. According to Wordcalc.com, the author hits an eighth grade level of readability. I would wager that few eighth graders in rural Kentucky know the meaning of “unproctored” or “renormalized”. I suppose some students could ask their parents, but that may not produce particularly reliable definitions in my opinion.
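Wordcalc.com does not say which formula produced its grade level, so what follows is only an illustration. One widely used grade-level measure is the Flesch-Kincaid formula, which combines average sentence length with average syllables per word. Here is a minimal Python sketch. It assumes the word, sentence, and syllable counts are already in hand, because counting syllables reliably is the hard part and is not attempted here:

```python
def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Return the Flesch-Kincaid grade level for a text.

    Assumes the caller supplies the counts; this sketch does not
    tokenize text or count syllables itself.
    """
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    words_per_sentence = words / sentences
    syllables_per_word = syllables / words
    # Standard Flesch-Kincaid grade-level coefficients.
    return 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59


if __name__ == "__main__":
    # Hypothetical counts for a 4,000-word essay; not measured from the article.
    print(round(flesch_kincaid_grade(4000, 200, 5600), 2))
```

The sample counts are made up: 20 words per sentence and 1.4 syllables per word. With them, the score lands near 8.7, roughly eighth grade. A handful of long words such as "unproctored" barely moves the averages, so a text can score "eighth grade" and still contain words many eighth graders do not know.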

Thanks, Venice.ai. Good enough, the new standard of excellence today.

Please, read the complete essay. I think it is excellent. I do want to pounce on one passage for my trademarked approach to analysis. The article states:

a lot of today’s thinking on our digitally addled state leans heavily on Marshall McLuhan and Neil Postman, the hepcat media theorists who taught us, in the decades before the internet, that every new medium changes the way we think. They weren’t wrong — and it’s a shame neither of them lived long enough to warn society about video podcasts — but they were operating in a world where the big leap was from books to TV, a gentle transition compared to what came later. As a result, much of the current commentary still fixates on devices and apps, as if the physical delivery mechanism were the whole story. But the deepest transformation might be less technological than social: the volume of human noise we’re now wired into.

This passage sets up the point about too much social connectedness. I mostly agree, but my concern is that references to Messrs. McLuhan and Postman and to the social media / mobile symbiosis miss the most significant point.

Those of you who attended my Eagleton Lecture, delivered in 1986 at Rutgers University, heard me say, “Online information tears down structures.” The idea is not that the telegraph made decisions faster. The telegraph eliminated established methods of sending urgent messages and tilled the ground for “improvements” in communications. The lesson from the telegraph, radio, and other electronic technologies was that these eroded existing structures and enabled follow-ons. If we shift to the clunky computers from the Atomic Age, the acceleration is more remarkable than what followed the wireless. My point, therefore, is that as information flows in electronic and digital form, structures like the brain are eroded. One can say, “There are smart people at Google.” I respond, “That’s true. The supply, however, is limited. There are lots of people in the world, but as the cited article points out, there is more stupid than ever.”

I liked the comment about “nutritional information.” My concern is that as “information bullets” fly about, they compound the damage the digital flows create. With lots of shots, some hit home and take out essential capabilities. Useful Web sites go dark. Important companies become the walking wounded. Firms that once relied entirely upon finding, training, and selling access to smart people want software to replace these individuals. For some tasks, sure, smart software is capable. For other tasks, even Mark Zuckerberg looks lost when he realizes his top AI wizard is jumping the good ship Facebook. Will smart software replace Yann LeCun? Not for a few years and a dozen IPOs.

One final comment. Here’s a statement from the Theory of Dumb essay:

Despite what I just finished saying, there is one compressionary artifact from the internet that may perfectly encapsulate everything about our present moment: the “midwit” meme. It’s a three-panel bell curve in which a simpleton on the left makes a facile, confident claim and a serene, galaxy-brained monk on the right makes a distilled version of the same claim — while the anxious try-hard in the middle ties himself in knots pedantically explaining why the simple version is actually wrong. Who wants to be that guy?

I want to point out that I am not sure how many people in the fine Commonwealth in which I reside know what a “compressionary artifact” is. I am not confident that most people could wrangle a definition they could understand from a Google Gemini output. The midwit concept is very real. As farmers lose the ability to fix their tractors, skills are not lost; they are never developed. When curious teens want to take apart an old iPad to see how it works, they learn how to pick glass from their fingers and possibly cause a battery leak. When a high school shop class “works” on an old car to repair it, they learn about engine control units and intermediary software on a mobile phone. An oil leak? What’s that?

I want to close with the reminder that when one immerses oneself or a society in digital data flows, the information erodes the structures. Thus, in today’s datasphere, stupid is emergent. Get used to it. PS. Put the question mark in your New York Magazine headline. You are providing evidence that my assertion about online is accurate.

Stephen E Arnold, November 17, 2025

Starlink: Are You the Only Game in Town? Nope

October 23, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “SpaceX Disables More Than 2,000 Starlink Devices Used in Myanmar Scam Compounds.” Interesting from a quite narrow Musk-centric focus. I wonder if this is a PR play or the result of some cooperative government action. The write up says:

Lauren Dreyer, the vice-president of Starlink’s business operations, said in a post on X Tuesday night that the company “proactively identified and disabled over 2,500 Starlink Kits in the vicinity of suspected ‘scam centers’” in Myanmar. She cited the takedowns as an example of how the company takes action when it identifies a violation of its policies, “including working with law enforcement agencies around the world.”

The cyber outfit added:

Myanmar has recently experienced a handful of high-profile raids at scam compounds which have garnered headlines and resulted in the arrest, and in some cases release, of thousands of workers. A crackdown earlier this year at another center near Mandalay resulted in the rescue of 7,000 people. Nonetheless, construction is booming within the compounds around Mandalay, even after raids, Agence France-Presse reported last week. Following a China-led crackdown on scam hubs in the Kokang region in 2023, a Chinese court in September sentenced 11 members of the Ming crime family to death for running operations.

Thanks, Venice.ai. Good enough.

Just one Chinese crime family. Even more interesting.

I want to point out that the write up did not take a tiny extra step; for example, answering this question: “What will prevent the firms listed below from filling the Starlink void (if one actually exists)?” Here are some alternatives to Starlink. These may be more expensive, but some surplus cash is spun off from pig butchering, human trafficking, drug brokering, and money laundering. Here’s the list from my files. Remember, please, that I am a dinobaby in a hollow in rural Kentucky. Are my resources more comprehensive than a big cyber security firm’s?

- AST

- EchoStar

- Eutelsat

- HughesNet

- Inmarsat

- NBN Sky Muster

- SES S.A.

- Telstra

- Telesat

- Viasat

With access to money, cut outs, front companies, and compensated government officials, will a Starlink “action” make a substantive difference? Again, this is a question not addressed in the original write up. Myanmar is just one country operating in gray zones where government controls are ineffective or do not exist.

Starlink seems to be a pivot point for the write up. What about Starlinks in other “countries” like Lao PDR? What about a Starlink customer carrying his or her Starlink into Cambodia? I wonder if some US cyber security firms keep up with current actions, not those with some dust on the end tables in the marketing living room.

Stephen E Arnold, October 23, 2025

Hey, Pew, Wanna Bet?

October 16, 2025

This essay is the work of a dumb dinobaby. No smart software required.

My Telegram Labyrinth book is almost over the finish line. I include some discussion of online gambling in Telegram. Of particular interest to me and my research team were kiddie games. A number of these reward the young child with crypto tokens. Get enough tokens and the game provides the player with options. A couple of these options point the kiddie directly to an online casino running in Telegram Messenger. What happens next? A few players win. Others lose. The approach is structured and intentional. The goal of some of these fun games is to addict youngsters to online gambling via crypto.

Nifty. Telegram has been up and running since 2013. In the last few years, online gambling has become a part of the organization’s strategic vision. Anyone, including a child with a mobile device, can gamble online on Telegram. From Telegram’s point of view, this is freedom. For a parent who discovers a financial downside from their child’s play, this is stressful.

I read “Americans Increasingly See Legal Sports Betting As a Bad Thing for Society and Sports.” The Pew research outfit dug into online gambling. What did the number crunchers learn? Here are a handful of findings:

- More Americans view legal sports betting as bad for society and sports. (Hey, addiction is a problem. Who knew?)

- One-fifth of Americans bet online. The good news is that sports betting is not growing. (Is that why advertising for online gaming seems to be more prevalent?)

- 47 percent of men under 30 say legal sports betting is a bad thing, up from 22 percent who said this in 2022.

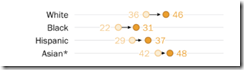

Now check out this tough-to-read graphic:

Views of online gambling vary within the demographic groups in the sample. I noted that old people (dinobabies like me) do not wager as frequently as those between the ages of 18 and 29. I wonder if the VCs pumping money into AI come from this demographic. Betting seems okay to more of them. Ask people over 65, and only 12 percent of those you query will say, “Great idea.”

I would argue that online gambling is readily available. More services are emulating the Telegram model. The Pew study seemed to ignore the target demographic for the users of the Telegram kiddie gambling games. That is a whiff to me. But will anyone care? Only the parents, and it may take years for the research firms to figure out where the key change is taking place.

Stephen E Arnold, October 16, 2025

Parenting 100: A Remedial Guide to Raising Children

October 13, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I am not sure what’s up this week (October 6 to 10, 2025). I am seeing more articles about the impact of mobile devices, social media, doom scrolling, and related cheerful subjects in my newsfeed. A representative article is “Lazy Parents Are Giving Their Toddlers ChatGPT on Voice Mode to Keep Them Entertained for Hours.”

Let’s take a look at a couple of passages that I thought were interesting:

with the rise of human-like AI chatbots, a generation of “iPad babies” could seem almost quaint: some parents are now encouraging their kids to talk with AI models, sometimes for hours on end…

I get it. Parents are busy today. If they are lucky enough to have jobs, automatic meeting services keep them hopping. Then there is the administrivia of life. Children just add to the burden. Why not stick the kiddie in a playpen with an iPad? Tim Apple will be happy.

What’s the harm? How about this factoid (maybe an assertion from smart software?) from the write up:

AI chatbots have been implicated in the suicides of several teenagers, while a wave of reports detail how even grown adults have become so entranced by their interactions with sycophantic AI interlocutors that they develop severe delusions and suffer breaks with reality — sometimes with deadly consequences.

Okay, bummer. The write up includes a hint of risk for parents about these chat-sitters; to wit:

Andrew McStay, a professor of technology and society at Bangor University, isn’t against letting children use AI — with the right safeguards and supervision. But he was unequivocal about the major risks involved, and pointed to how AI instills a false impression of empathy.

Several observations seem warranted:

- Which is better? Mom and dad interacting with the kiddo? Maybe grandma as a stand-in? Or letting the kid tune in and drop out?

- Imagine sending a chat surfer to school. Human interaction is not going to be as smooth and stress free as a chat session. Someone will take the kiddo’s animal crackers and milk, or the kiddo will pout until he or she can log on again.

- Visualize the future: Is this chat surfer going to be a great employee and colleague? Answer: No.

I find it amazing that decades after these tools became available, people do not understand the damage flowing bits do to thinking, self esteem, and social conventions. Empathy? Sure, just like those luminaries at Silicon Valley type AI companies. Warm, caring, trustworthy.

Stephen E Arnold, October 13, 2025

Screaming at the Cloud, Algorithms, and AI: Helpful or Lost Cause?

October 2, 2025

![Dino 5 18 25_thumb[3] Dino 5 18 25_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/09/Dino-5-18-25_thumb3_thumb-1.gif) Written by an unteachable dinobaby. Live with it.

One of my team sent me a link to a write up called “We Traded Blogs for Black Boxes. Now We’re Paying for It.” The essay is interesting because it [a] states, to a dinobaby-type of person, the obvious and [b] evidences what I would characterize as authenticity.

The main idea is the good, old Internet is gone. The culprits are algorithms, the quest for clicks, and the loss of a mechanism to reach people who share an interest. Keep in mind that I am summarizing my view of the original essay. The cited document includes nuances that I have ignored.

The reason I found the essay interesting is that it includes a concept I had not seen applied to the current world of online and a “fix” to the problem. I am not sure I agree with the essay’s suggestions, but the ideas warrant comment.

The first is the idea of “context collapse.” I don’t want too many YouTube philosophy or big idea videos. I do like the big chunks of classical music, however. Context collapse is a nifty way of saying, “Yo, you are bowling alone.” Hanging out with people has given way to mobile phone social media interactions.

The write up says:

algorithmic media platforms bring out (usually) negative reactions from unrelated audiences.

The essay does not talk about echo chambers of messaging, but I get the idea. When people have no idea about a topic, there is no shared context. The comments are fragmented and driven by emotion. I will appropriate this bound phrase.

The second point is the fix. The write up urges the reader to use open source software. Now this is an idea much loved by some big thinkers. From my point of view, poisoned open source software can disseminate malware or cause some other “harm.” I am somewhat cautious when it comes to open source, and I don’t think the model works. Think ransomware, phishing, and back doors.

I like the essay. Without that link from my team member to me, I would have been unaware of the essay. The problem is that no service indexes deeply across a wide scope of content objects. Without finding tools, information is ineffectual. Does any organization index and make findable content like this “We Traded Blogs for Black Boxes”? Nope. None has, and none will.

That’s the ball being dropped by national libraries and not-for-profit organizations.

Stephen E Arnold, October 2, 2025

Is Reading Necessary, Easy, and Fun? Sure

August 25, 2025

No AI. Just a dinobaby working the old-fashioned way.

The GenAI service person answered my questions this way:

- Is reading necessary? Answer: Not really.

- Is reading easy? No, not for me.

- Is reading fun? For me, no.

Was I shocked? No. I almost understand. Note: I said “almost.” For my sample of one, the mental involvement associated with reading is not on the radar.

“Reading for Pleasure in Freefall: Research Finds 40% Drop Over Two Decades” presents information that caught my attention for two reasons:

- The decline appears to be gradual; that is, not a freefall. In terms of my dinobaby years, the time period is wildly inaccurate.

- The inclusion of a fat round number like 40 percent strikes me as an understatement.

On what basis do I make these two observations about the headline? I have what I call a Barnes & Noble toy ratio. A bookstore is now filled with toys, knick-knacks and Temu-type products. That’s it. Book stores are tough to find. When one does locate a book store, it often is a toy store.

The write up is much more scientific than my toy algorithm. I noted this passage from a study conducted by two universities I view as anchors of opposite ends of the academic spectrum: The University of Florida and University College London. Here’s the passage:

the study analyzed data from over 236,000 Americans who participated in the American Time Use Survey between 2003 and 2023. The findings suggest a fundamental cultural shift: fewer people are carving out time in their day to read for enjoyment. This is not just a small dip—it’s a sustained, steady decline of about 3% per year…

I don’t want to be someone who criticizes the analysis of two esteemed institutions. I would suggest that the decrease is going to take much less time than a couple of centuries if the three percent erosion continues. I acknowledge that in the US, print book sales in 2024 reached about 700 million (depending on whom one believes), an increase over 2023. These data do not reflect books generated by smart software.

But book sales do not mean more people are reading material that requires attention. Old people read more books than a grade school student or a young person who is not in what a dinobaby would call a school. Hats off to the missionary who teaches one young person in a tough spot to read and provides the individual with access to books.
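The “about 3% per year” figure quoted from the study can be read two ways, and the excerpt does not say which. Here is a minimal Python sketch of both readings. The first assumes a compounded decline, where each year loses 3 percent of the previous year’s level. The second assumes a linear decline, where each year loses 3 percent of the starting level. The function names are illustrative only:

```python
import math


def remaining_compounded(rate: float, years: int) -> float:
    """Fraction left after `years` of a compounded annual decline of `rate`."""
    return (1.0 - rate) ** years


def years_to_fraction_compounded(rate: float, fraction: float) -> float:
    """Years until a compounded decline of `rate` per year reaches `fraction` of the start."""
    return math.log(fraction) / math.log(1.0 - rate)


def years_to_zero_linear(rate: float) -> float:
    """Years until zero if each year removes `rate` of the *original* level."""
    return 1.0 / rate


if __name__ == "__main__":
    rate = 0.03  # "about 3% per year" from the study excerpt
    print(f"left after 20 years (compounded): {remaining_compounded(rate, 20):.2f}")
    print(f"years to halve (compounded): {years_to_fraction_compounded(rate, 0.5):.1f}")
    print(f"years to 1% of start (compounded): {years_to_fraction_compounded(rate, 0.01):.0f}")
    print(f"years to zero (linear): {years_to_zero_linear(rate):.1f}")
```

Under either assumption the answer is well under two centuries. A linear 3 percent loss reaches zero in about 33 years. A compounded 3 percent decline halves reading for pleasure in about 23 years and reaches 1 percent of its starting level in about 151 years. The same arithmetic shows the published figures are approximate: 3 percent compounded over 20 years leaves about 54 percent, a drop closer to 46 percent than the headline’s 40 percent.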

I want to acknowledge this statement in the write up:

The researchers also noted some more promising findings, including that reading with children did not change over the last 20 years. However, reading with children was a lot less common than reading for pleasure, which is concerning given that this activity is tied to early literacy development, academic success and family bonding….

I interpreted the two stellar institutions as mostly getting at these points:

- Some people read and read voraciously. Pleasure or psychological problem? Who knows. It happens.

- Many people don’t value books, don’t read books, and, by choice or mental setup, won’t or can’t read books.

- The distractions of just existing today make reading a lower priority for some than other considerations; for example, not getting hit by a kinetic in a war zone, watching TikTok, creating YouTube videos about big thoughts, fiddling with a mobile phone, etc.

Let’s go back to the book store and toys. The existence of toys in a book store makes clear that selling books is a very tough business. To stay open, Temu-type stuff has to be pushed. If reading were “fun,” the book stores would be as plentiful as unsold bourbon in Kentucky.

Net net: We have reached a point at which the number of readers is equivalent to the count of snow leopards. Reading for fun marks an individual as one at risk of becoming a fur coat.

Stephen E Arnold, August 29, 2025

Self-Appointed Gatekeepers and AI Wizards Clash

August 11, 2025

No AI. Just a dinobaby being a dinobaby.

Cloudflare wants to protect those with content. Perplexity wants content. Cloudflare sees an opportunity to put up a Google-type toll booth on the Information Highway. Perplexity sees traffic stops of any type the way a soccer mom perceives an 80 year old driving at the speed limit.

Perplexity has responded to Cloudflare’s words about Perplexity allegedly using techniques to crawl sites which may not want to be indexed.

“Agents or Bots? Making Sense of AI on the Open Web” states:

Cloudflare’s recent blog post managed to get almost everything wrong about how modern AI assistants actually work.

In addition to misunderstanding 20-25M user agent requests are not scrapers, Cloudflare claimed that Perplexity was engaging in “stealth crawling,” using hidden bots and impersonation tactics to bypass website restrictions. But the technical facts tell a different story.

It appears Cloudflare confused Perplexity with 3-6M daily requests of unrelated traffic from BrowserBase, a third-party cloud browser service that Perplexity only occasionally uses for highly specialized tasks (less than 45,000 daily requests).

Because Cloudflare has conveniently obfuscated their methodology and declined to answer questions helping our teams understand, we can only narrow this down to two possible explanations.

- Cloudflare needed a clever publicity moment and we–their own customer–happened to be a useful name to get them one.

- Cloudflare fundamentally misattributed 3-6M daily requests from BrowserBase’s automated browser service to Perplexity, a basic traffic analysis failure that’s particularly embarrassing for a company whose core business is understanding and categorizing web traffic.

The idea is to provide two choices, a technique much loved by vaudeville comedians on the Paul Whiteman circuit decades ago; for example, “Have you stopped stealing office supplies?”

I find this situation interesting for several reasons:

- Smart software outfits have been sucking down data

- The legal dust ups, the license fees, even the posture of the US government seem dynamic; that is, uncertain

- Clever people often find themselves tripped by their own clever lines.

My view is that when tech companies squabble, the only winners are the lawyers, and the users lose.

Stephen E Arnold, August 11, 2025

Decentralization: Nope, a Fantasy It Seems

July 25, 2025

Just a dinobaby working the old-fashioned way, no smart software.

Web 3, decentralization, graceful fail over, alternative routing. Are these concepts baloney? I think the distributed approach to online systems is definitely not bullet proof.

Why would I, an online person, make such a statement? I read “Cloudflare 1.1.1.1 Incident on July 14, 2025.” I know a number of people who know zero about Cloudflare. One can argue that AT&T, Google, Microsoft, et al. are the gate keepers of the online world. Okay, that sounds great. It is sort of true.

I quote from the write up:

For many users, not being able to resolve names using the 1.1.1.1 Resolver meant that basically all Internet services were unavailable.

The operative word is “all.”

What can one conclude if a failure of “legacy” systems can be pinned on a “configuration error”? Some observations:

- A bad actor able to replicate this can kill the Internet or at least Cloudflare’s functionality

- The baloney about decentralization is just that… baloney. Cheap words packed in a PR tube and “sold” as something good

- The fail over and resilience assertions? Three-day old fish. Remember Ben Franklin’s aphorism: Three-day old fish smell. Badly.

Net net: We have evidence that the reality of today’s Internet rests in the semi capable hands of certain large companies. Without real “innovation,” the centralization of certain functions will have widespread and unexpected impacts. Yep, “all,” including the bad actors who make use of these points of concentration. The Cloudflare incident may motivate other technically adept groups to find a better way. Perhaps something in the sky like satellites or on the ground like device-to-device wireless? I wonder if adversaries of the US have noticed this incident?

Stephen E Arnold, July 25, 2025

Curation and Editorial Policies: Useful and Are Net Positives

July 8, 2025

No AI, just the dinobaby expressing his opinions to Zillennials.

I read the write up “I Deleted My Second Brain.” The author is Joan Westenberg. I had to look her up. She is a writer and entrepreneur. She sells subscriptions to certain essays. Okay, that’s the who. Now what does the essay address?

It takes a moment to convert “Zettelkasten slip” into a physical notecard, but I remembered learning this from the fellows who were pitching a note card retrieval system via a company called Remac. (No, I have no idea what happened to that firm. But I understand note cards. My high school debate coach in 1958 explained the concept to me.)

Ms. Westenberg says:

For years, I had been building what technologists and lifehackers call a “second brain.” The premise: capture everything, forget nothing. Store your thinking in a networked archive so vast and recursive it can answer questions before you know to ask them. It promises clarity. Control. Mental leverage. But over time, my second brain became a mausoleum. A dusty collection of old selves, old interests, old compulsions, piled on top of each other like geological strata. Instead of accelerating my thinking, it began to replace it. Instead of aiding memory, it froze my curiosity into static categories. And so… Well, I killed the whole thing.

I assume Ms. Westenberg is not engaged in a legal matter. The intentional deletion could lead to some interesting questions. On the other hand, for a person who does public relations and product positioning, deletion may not present a problem.

I liked the reference to Jorge Luis Borges (1899 to 1986), a writer with some interesting views about the nature of reality. As Ms. Westenberg notes:

But Borges understood the cost of total systems. In “The Library of Babel,” he imagines an infinite library containing every possible book. Among its volumes are both perfect truth and perfect gibberish. The inhabitants of the library, cursed to wander it forever, descend into despair, madness, and nihilism. The map swallows the territory. PKM systems promise coherence, but they often deliver a kind of abstracted confusion. The more I wrote into my vault, the less I felt. A quote would spark an insight, I’d clip it, tag it, link it – and move on. But the insight was never lived. It was stored. Like food vacuum-sealed and never eaten, while any nutritional value slips away. Worse, the architecture began to shape my attention. I started reading to extract. Listening to summarize. Thinking in formats I could file. Every experience became fodder. I stopped wondering and started processing.

I think the idea of too much information causing mental torpor is interesting for two reasons: [a] digital information has a mass of sorts and [b] information can disrupt the functioning of an information processing organ; that is, the human brain.

The fix? Just delete everything. Ms. Westenberg calls this “destruction by design.” She is now (presumably) lighter and more interested in taking notes. I think this is the modern equivalent of throwing out junk from the garage. My mother would say, after piling my ball bat, scuffed shoes, and fossils into the garbage can, “There. That feels better.” I would think, but not say because I did not want to suffer the wrath of mom, “No, mother, you are destroying objects which are meaningful to me. You are trashing a chunk of my self with each spring cleaning.” Destruction by design may harm other people. In the case of a legal matter, destruction by design can cost the person hitting delete big time.

What’s interesting is that the essay reveals something about Ms. Westenberg; for example, [a] A person who can destroy information can destroy other intangible “stuff” as well. How does that work in an organization? [b] The sudden realization that one has created a problem leads to a knee jerk reaction. What does that say about measured judgment? [c] The psychological boost from hitting the delete key clears the path to begin the collecting again. Is hoarding an addiction? What’s the recidivism rate for an addict who does the rehabilitation journey?

My major takeaway may surprise you. Here it is: Ms. Westenberg learned by trial and error over many years that curation is a key activity in knowledge work. Google began taking steps to winnow non-compliant Web sites from its advertising program. The decision reduces lousy content and advances Google’s agenda to control digital Gutenberg machines. Like Ms. Westenberg, Google is realizing that indexing and saving “everything” is a bubbling volcano of problems.

Librarians know about curation. Entities like Ms. Westenberg and Google are just now realizing why informed editorial policies are helpful. I suppose it is good news that Ms. Westenberg and Google have come to the same conclusion. Too bad it took years to accept something one could learn at any library in five minutes.

Stephen E Arnold, July 8, 2025

Worthless College Degrees. Hey, Where Is Mine?

July 4, 2025

Smart software involved in the graphic, otherwise just an addled dinobaby.

This write up is not about going “beyond search.” Heck, search has just changed adjectives and remains mostly a frustrating and confusing experience for employees. I want to highlight the information (which I assume to be 100 percent dead accurate like other free data on the Internet) about the “17 Most Useless College Degrees Employers Don’t Want Today.” Okay, high school seniors, pay attention. According to the estimable Finance Buzz, do not study these subjects and, heaven forbid, expect to get a job when you graduate from an online school, the local college, or a big-time, big-bucks university. I have grouped the write up’s earthworm list into some categories; to wit:

Do gooder work

- Criminal justice

- Education (Who needs an education when there is YouTube?)

Entertainment

- Fashion design

- Film, video, and photographic arts

- Music

- Performing arts

Information

- Advertising

- Creative writing (like Finance Buzz research articles?)

- Communications

- Computer science

- Languages (Emojis and some English are what is needed I assume)

Real losers

- Anthropology and archaeology (I thought these were different until Finance Buzz cleared up my confusion)

- Exercise science

- Religious studies

Waiting tables and working the midnight check in desk

- Culinary arts (Fry cook until the robots arrive)

- Hospitality (Smile and show people their table)

- Tourism (Do not fall into the volcano)

Assume the write up is providing verifiable facts. (I know, I know, this is the era of alternative facts.) If we flash forward five years, the already stretched resources for law enforcement and education will be in an even smaller pickle barrel. Good for the bad actors and the people who don’t want to learn. Perhaps less beneficial to others in society. I assume that one can make TikTok-type videos and generate a really bigly income until the Googlers change the compensation rules or TikTok is banned from the US. With the world awash in information and open source software available, who needs to learn anything? AI will do this work. Who in the heck gets a job in archaeology when one can learn from UnchartedX and Brothers of the Serpent? Exercise. Play football and get a contract when you are in middle school like talented kids in Brazil. And the cruise or specialty restaurant business? Those contracts are for six months for a reason. Plus cruise lines have started enforcing no-video rules on the staff who were trying to make day-in-my-life videos about the wonderful cruise ship environment. (Weren’t these vessels once called “prison ships”?) My hunch is that whoever assembled this stellar research at Finance Buzz was actually but indirectly writing about smart software and robots. These will decimate many jobs in the identified categories.

What should a person study? Nuclear physics, mathematics (applied and theoretical maybe), chemistry, biogenetics, materials science, modern financial management, law (aren’t there enough lawyers?), medicine, and psychology until the DRG codes are restricted.

Excellent way to get a job. And in what field was my degree? Medieval religious literature. Perfect for life-long employment as a dinobaby essayist.

Stephen E Arnold, July 4, 2025