Ten Directories of AI Tools

May 26, 2025

Just the dinobaby operating without Copilot or its ilk.

I scan DailyHunt, an India-based news summarizer powered by AI I think. The link I followed landed me on a story titled “Best 10 AI Directories to Promote.” I looked for a primary source, an author, and links to each service. Zippo. Therefore, I assembled the list, provided links, and generated with my dinobaby paws and claws the list below. Enjoy or ignore. I am weary of AI, but many others are not. I am not sure why, but that is our current reality, replete with alternative facts, cheating college professors, and oodles of crypto activity. Remember. The list is not my “best of”; I am simply presenting incomplete information in a slightly more useful format.

AIxploria https://www.aixploria.com/en/ [Another actual directory. Its promotional language says “largest list”. Yeah, I believe that]

AllAITool.ai https://allaitool.ai/

FamousAITools.ai https://famousaitools.ai/ [Another marketing outfit sucking up AI tool submissions]

Futurepedia.io https://www.futurepedia.io/

TheMangoAI.co https://themangoai.co/ [Not a directory, an advertisement of sorts for an AI-powered marketing firm]

NeonRev https://www.neonrev.com/ [Another actual directory. It looks like a number of Telegram bot directories]

Spiff Store https://spiff.store/ [Another directory. I have no idea how many tools are included]

StackViv https://stackviv.ai/ [An actual directory with 10,000 tools. No I did not count them. Are you kidding me?]

TheresanAIforThat https://theresanaiforthat.com/ [You have to register to look at the listings. A turn off for me]

Toolify.ai https://www.toolify.ai/ [An actual listing of more than 25,000 AI tools organized into categories probably by AI, not a professional indexing specialist]

When I looked at each of these “directories”, I saw that marketing is something the AI crowd finds important. A bit more effort in the naming of some of these services might help. Just a thought. Enjoy.

Stephen E Arnold, May 26, 2025

Speed Up Your Loss of Critical Thinking. Use AI

February 19, 2025

While the human brain isn’t a muscle, its neurology does need to be exercised to maintain plasticity. When a human brain is rigid, it can’t function in a healthy manner. AI is harming brains by making them not think good, says 404 Media: “Microsoft Study Finds AI Makes Human Cognition ‘Atrophied and Unprepared.’” You can read the complete Microsoft research report at this link. (My hunch is that this type of document would have gone the way of Timnit Gebru and the flying stochastic parrot, but that’s just my opinion, Hank, Advait, Lev, Ian, Sean, Dick, and Nick.)

Carnegie Mellon University and Microsoft researchers released a paper that says heavy reliance on generative AI can “result in the deterioration of cognitive faculties that ought to be preserved.”

Really? You don’t say! What else does this remind you of? How about watching too much television or playing too many videogames? These passive activities (arguable with videogames) stunt the development of brain gray matter and in a flight of Mary Shelley rhetoric make a brain rot! What else did the researchers discover when they studied 319 knowledge workers who self-reported their experiences with generative AI:

“ ‘The data shows a shift in cognitive effort as knowledge workers increasingly move from task execution to oversight when using GenAI,’ the researchers wrote. ‘Surprisingly, while AI can improve efficiency, it may also reduce critical engagement, particularly in routine or lower-stakes tasks in which users simply rely on AI, raising concerns about long-term reliance and diminished independent problem-solving.’”

By the way, we definitely love and absolutely believe data based on self reporting. Think of the mothers who asked their teens, “Where did you go?” The response, “Out.” The mothers ask, “What did you do?” The answer, “Nothing.” Yep, self reporting.

Does this mean generative AI is a bad thing? Yes and no. It’ll stunt the growth of some parts of the brain, but other parts will grow in tandem with the use of new technology. Humans adapt to their environments. As AI becomes more ingrained into society it will change the way humans think but will only make them sort of dumber [sic]. The paper adds:

“ ‘GenAI tools could incorporate features that facilitate user learning, such as providing explanations of AI reasoning, suggesting areas for user refinement, or offering guided critiques,’ the researchers wrote. ‘The tool could help develop specific critical thinking skills, such as analyzing arguments, or cross-referencing facts against authoritative sources. This would align with the motivation enhancing approach of positioning AI as a partner in skill development.’”

The key is to not become overly reliant on AI but also be aware that the tool won’t go away. Oh, when my mother asked me, “What did you do, Whitney?” I responded in the best self reporting manner, “Nothing, mom, nothing at all.”

Whitney Grace, February 19, 2025

Directories Have Value

November 29, 2024

Why would one build an online directory—to create a helpful reference? Or for self aggrandizement? Maybe both. HackerNoon shares a post by developer Alexander Isora, “Here’s Why Owning a Directory = Owning a Free Infinite Marketing Channel.”

First, he explains why users are drawn to a quality directory on a particular topic: because humans are better than Google’s algorithm at determining relevant content. No argument here. He uses his own directory of Stripe alternatives as an example:

“Why my directory is better than any of the top pages from Google? Because in the SERP [Search Engine Results Page], you will only see articles written by SEO experts. They have no idea about billing systems. They never managed a SaaS. Their set of links is 15 random items from Crunchbase or Product Hunt. Their article has near 0 value for the reader because the only purpose of the article is to bring traffic to the company’s blog. What about mine? I tried a bunch of Stripe alternatives myself. Not just signed up, but earned thousands of real cash through them. I also read 100s of tweets about the experiences of others. I’m an expert now. I can even recognize good ones without trying them. The set of items I published is WAY better than any of the SEO-optimized articles you will ever find on Google. That is the value of a directory.”

Okay, so that is why others would want a subject-matter expert to create a directory. But what is in it for the creator? Why, traffic, of course! A good directory draws eyeballs to one’s own products and services, the post asserts, or one can sell ads for a passive income. One could even sell a directory (to whom?) or turn it into its own SaaS if it is truly popular.

Perhaps ironically, Isora’s next step is to optimize his directories for search engines. Sounds like a plan.

Cynthia Murrell, November 29, 2024

Bookmark This: HathiTrust Digital Library

October 30, 2024

Concerned for the Internet Archive? So are we. (For multiple reasons.) But while that venerable site recovers from its recent cyberattacks, remember Hathi exists. Founded in 2008, the not-for-profit HathiTrust Digital Library is a collaborative of academic and research libraries. The site makes millions of digitized items available for study by humans as well as for data mining. The site shares the collection’s story:

“HathiTrust’s digital library came into being during the mid-2000s when companies such as Google began scanning print titles from the shelves of university and college campus libraries. When many of those same libraries created HathiTrust in 2008, they united library copies of those digitized books into a single, shared collection to make as much of the collection available for access as allowable by copyright law. Through HathiTrust, libraries collaborate on long-term management, preservation, and access of their collections. Book lovers and researchers like you can explore this huge collection of digitized materials! Today, HathiTrust Digital Library is the largest set of digitized books managed by academic and research libraries. The collection includes materials typically found on the shelves of North American university and college campuses with the benefit of being available online instead of scattered in buildings around the globe. Our enormous collection includes thousands of years of human knowledge and published materials from around the world, selected by librarians and preserved in the libraries of academic and research libraries. You can find all kinds of digitized books and primary source materials to suit a wide range of research needs.”

The collection contains books and “book-like” items—basically anything except audio/visual files. All Library of Congress subjects are represented, but the largest treasures lie in the Language & Literature, Philosophy, Religion, History, and Social Sciences chambers. All volumes not restricted by copyright are free for anyone to read. Just over half the works are in English, while the rest span over 400 languages, including some that are now extinct. Ninety-five percent were scanned from print by Google, but a few specialized collections were contributed by individuals or institutions. The Collection page offers several sample collections to get you started, or you can build your own. Have fun browsing their collections, and with luck the Internet Archive will be back up and running in no time.

Cynthia Murrell, October 30, 2024

PrivacyTools.io: A Good Resource for Privacy Tools and Services

October 30, 2024

Keeping up with the latest in global mass surveillance by private and state-sponsored groups can be a challenge. Here is a resource that can help: Privacy Tools evaluates the many tools designed to fight mass surveillance and highlights the best on its website. Its Home page lists its many clickable categories on the left and describes the criteria by which the site evaluates privacy tools and services. It also educates visitors on surveillance issues and why even those with “nothing to hide” should be concerned. It specifies:

“Many of the activities we carry out on the internet leave a trail of data that can be used to track our behavior and access some personal information. Some of the activities that collect data include credit card transactions, GPS, phone records, browsing history, instant messaging, watching videos, and searching for goods. Unfortunately, there are many companies and individuals on the internet that are looking for ways to collect and exploit your personal data to their own benefit for issues like marketing, research, and customer segmentation. Others have malicious intentions with your data and may use it for phishing, accessing your banking information or hacking into your online accounts. Businesses have similar privacy issues. Malicious entities could be looking for ways to access customer information, steal trade secrets, stop networks and platforms such as e-commerce sites from operating and disrupt your operations.”

The site’s list of solutions to these threats is long. Some are free and some are not. And which to choose will differ depending on one’s situation. One way to simplify the selection is with the group’s specific Privacy Guides—collections of tools for specific concerns. Categories currently include Android, Encryption, Network, Smartphones, Tor Browser, and Tracking, to name a few. This is a handy way to narrow down the many solutions featured on the site. A worthy undertaking since, as the site emphasizes, “You are being watched.”

Cynthia Murrell, October 30, 2024

Research: A Slippery Path to Wisdom Now

January 19, 2024

This essay is the work of a dumb dinobaby. No smart software required.

When deciding whether to believe something on the Internet all one must do is google it, right? Not so fast. Citing five studies performed between 2019 and 2022, Scientific American describes “How Search Engines Boost Misinformation.” Writer Lauren Leffer tells us:

“Encouraging Internet users to rely on search engines to verify questionable online articles can make them more prone to believing false or misleading information, according to a study published today in Nature. The new research quantitatively demonstrates how search results, especially those prompted by queries that contain keywords from misleading articles, can easily lead people down digital rabbit holes and backfire. Guidance to Google a topic is insufficient if people aren’t considering what they search for and the factors that determine the results, the study suggests.”

Those of us with critical thinking skills may believe that caveat goes without saying but, alas, it does not. Apparently evaluating the reliability of sources through lateral reading must be taught to most searchers. Another important but underutilized practice is to rephrase a query before hitting enter. Certain terms are predominantly used by purveyors of misinformation, so copy-and-pasting a dubious headline will turn up dubious sources to support it. We learn:

“For example, one of the misleading articles used in the study was entitled ‘U.S. faces engineered famine as COVID lockdowns and vax mandates could lead to widespread hunger, unrest this winter.’ When participants included ‘engineered famine’—a unique term specifically used by low-quality news sources—in their fact-check searches, 63 percent of these queries prompted unreliable results. In comparison, none of the search queries that excluded the word ‘engineered’ returned misinformation. ‘I was surprised by how many people were using this kind of naive search strategy,’ says the study’s lead author Kevin Aslett, an assistant professor of computational social science at the University of Central Florida. ‘It’s really concerning to me.’”

That is putting it mildly. These studies offer evidence to support suspicions that thoughtless searching is getting us into trouble. See the article for more information on the subject. Maybe a smart LLM will spit it out for you, and let you use it as your own?

Cynthia Murrell, January 19, 2024

Information Voids for Vacuous Intellects

January 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

In countries around the world, 2024 is a critical election year, and the problem of online mis- and disinformation is worse than ever. Nature emphasizes the seriousness of the issue as it describes “How Online Misinformation Exploits ‘Information Voids’—and What to Do About It.” Apparently we humans are so bad at considering the source that advising us to do our own research just makes the situation worse. Citing a recent Nature study, the article states:

“According to the ‘illusory truth effect’, people perceive something to be true the more they are exposed to it, regardless of its veracity. This phenomenon pre-dates the digital age and now manifests itself through search engines and social media. In their recent study, Kevin Aslett, a political scientist at the University of Central Florida in Orlando, and his colleagues found that people who used Google Search to evaluate the accuracy of news stories — stories that the authors but not the participants knew to be inaccurate — ended up trusting those stories more. This is because their attempts to search for such news made them more likely to be shown sources that corroborated an inaccurate story.”

Doesn’t Google bear some responsibility for this phenomenon? Apparently the company believes it is already doing enough by deprioritizing unsubstantiated news, posting content warnings, and including its “about this result” tab. But it is all too easy to wander right past those measures into a “data void,” a virtual space full of specious content. The first impulse when confronted with questionable information is to copy the claim and paste it straight into a search bar. But that is the worst approach. We learn:

“When [participants] entered terms used in inaccurate news stories, such as ‘engineered famine’, to get information, they were more likely to find sources uncritically reporting an engineered famine. The results also held when participants used search terms to describe other unsubstantiated claims about SARS-CoV-2: for example, that it rarely spreads between asymptomatic people, or that it surges among people even after they are vaccinated. Clearly, copying terms from inaccurate news stories into a search engine reinforces misinformation, making it a poor method for verifying accuracy.”

But what to do instead? The article notes Google steadfastly refuses to moderate content, as social media platforms do, preferring to rely on its (opaque) automated methods. Aslett and company suggest inserting human judgement into the process could help, but apparently that is too old fashioned for Google. Could educating people on better research methods help? Sure, if they would only take the time to apply them. We are left with this conclusion: instead of researching claims from untrustworthy sources, one should just ignore them. But that brings us full circle: one must be willing and able to discern trustworthy from untrustworthy sources. Is that too much to ask?

Cynthia Murrell, January 18, 2024

Academic Research Resources: Smart Software Edition

August 8, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

One of my research team called my attention to “The Best AI Tools to Power Your Academic Research.” The article identifies five AI-infused tools; specifically:

- ChatPDF

- Consensus

- Elicit.org

- Research Rabbit

- Scite.ai

Each of the tools is described briefly. The “academic research” phrase is misleading. These tools can provide useful information related to inventors and experts (real or alleged), specific technical methods, and helpful background or context for certain social, political, and intellectual issues.

If you have access to an LLM question-and-answer system, experiment with article summaries, lists of information, and names of people associated with a particular activity. Give a ChatGPT system a whirl too.

Stephen E Arnold, August 8, 2023

Need Research Assistance, Skip the Special Librarian. Go to Elicit

July 17, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-32.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Academic databases are the bedrock of research. Unfortunately most of them are hidden behind paywalls. If researchers get past the paywalls, they encounter other problems with accurate results and access to texts. Databases have improved over the years but AI algorithms make things better. Elicit is a new database marketed as a digital assistant, one with less intelligence than Alexa, Siri, and Google but able to comprehend simple questions.

“This is indeed the research library. The shelves are filled with books. You know what a book is, don’t you? Also, you will find that this research library is not used too much any more. Professors just make up data. Students pay others to do their work. If you wish, I will show you how to use the card catalog. Our online public access terminal and library automation system does not work. The university’s IT department is busy moonlighting for a professor who is a consultant to a social media company,” says the senior research librarian.

What exactly is Elicit?

“Elicit is a research assistant using language models like GPT-3 to automate parts of researchers’ workflows. Currently, the main workflow in Elicit is Literature Review. If you ask a question, Elicit will show relevant papers and summaries of key information about those papers in an easy-to-use table.”

Researchers use Elicit to guide their research and discover papers to cite. Researcher feedback stated they use Elicit to answer their questions, find paper leads, and get better exam scores.

Elicit proves its intuitiveness with its AI-powered research tools. Search results contain papers that do not match the keywords but semantically match the query meaning. Keyword matching also allows researchers to narrow or expand specific queries with filters. The summarization tool creates a custom summary based on the research query and simplifies complex abstracts. The citation graph semantically searches citations and returns more relevant papers. Results can be organized and more information added without creating new queries.

Elicit does have limitations, such as the inability to evaluate information quality. Also, Elicit is still a new tool, so mistakes will be made along the development process. Elicit does warn users about mistakes and advises them to use tried and true, old-fashioned research methods of evaluation.

Whitney Grace, July 16, 2023

OSINT Is Popular. Just Exercise Caution

November 2, 2022

Many have embraced open source intelligence as the solution to competitive intelligence, law enforcement investigations, and “real” journalists’ data gathering tasks.

In many situations OSINT, as open source intelligence is called, can benefit most of those disciplines. However, as we work on my follow-up monograph to CyberOSINT and the Dark Web Notebook, we have identified some potential blind spots for OSINT enthusiasts.

I want to mention one example of what happens when clever technologists mesh hungry OSINT investigators with some online trickery.

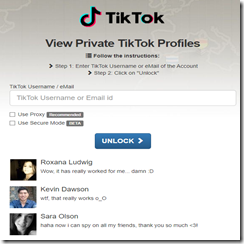

Navigate to privtik.com (78.142.29.185). At this site you will find:

But there is a catch, and a not too subtle one:

The site includes mandatory choices in order to access the “secret” TikTok profile.

How many OSINT investigators use this service? Not too many at this time. However, we have identified other, similar services. Many of these reside on what we call “ghost ISPs.” If you are not aware of these services, that’s not surprising. As the frenzy about the “value” of open source investigations increases, geotag spoofing, fake data, and scams will escalate. What happens if those doing research do not verify what’s provided or examine the behind-the-scenes data gathering?

That’s a good question and one that gets little attention in much OSINT training. If you want to see useful OSINT resources, check www.osintfix.com. Each click displays one of the OSINT resources we find interesting.

Stephen E Arnold, November 2, 2022