Another Better, Faster, Cheaper from a Big AI Wizard Type

October 16, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Cheap seems to be the hot button for some smart software people. I spotted a news item in the Russian computer feed I get called in English “Former OpenAI Engineer Andrey Karpaty Launched the Nanochat Neural Network Generator. You Can Make Your ChatGPT in a Few Hours.” The project is on GitHub at https://github.com/karpathy/nanochat.

The GitHub blurb says:

This repo is a full-stack implementation of an LLM like ChatGPT in a single, clean, minimal, hackable, dependency-lite codebase. Nanochat is designed to run on a single 8XH100 node via scripts like speedrun.sh, that run the entire pipeline start to end. This includes tokenization, pretraining, finetuning, evaluation, inference, and web serving over a simple UI so that you can talk to your own LLM just like ChatGPT. Nanochat will become the capstone project of the course LLM101n being developed by Eureka Labs.

The open source bundle includes:

- A report service

- A Rust-coded tokenizer

- A FineWeb dataset and tools to evaluate CORE and other metrics for your LLM

- Some training gizmos like SmolTalk, tests, and tool usage information

- A supervised fine tuning component

- Training with Group Relative Policy Optimization (a reinforcement learning technique) on GSM8K, a benchmark dataset consisting of grade school math word problems

- An output engine.

Is it free? Yes. Do you have to pay? Yep. About US$100 is needed. Launch speedrun.sh, and you will have to be hooked into a cloud server or a lot of hardware in your basement to do the training. A low-ball estimate for using a cloud system is about US$100, give or take some zeros. (Think of good old Amazon AWS and its fascinating billing methods.) To train such a model, you will need a server with eight Nvidia H100 GPUs. The run takes about four hours and costs about $100 when renting equipment in the cloud. The need for the computing resources becomes evident when you enter the command speedrun.sh.
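For the arithmetic-minded, the figures quoted above imply a per-GPU hourly rate. The Python sketch below is only a back-of-the-envelope check: the function name is hypothetical, and the inputs (eight GPUs, about four hours, about US$100) are the rough numbers from the news item, not a quote from any cloud vendor.

```python
def implied_gpu_hour_rate(total_cost_usd: float, gpus: int, hours: float) -> float:
    """Return the per-GPU hourly rate implied by a total rental bill."""
    # Total GPU-hours consumed by the run: number of GPUs times wall-clock hours.
    gpu_hours = gpus * hours
    return total_cost_usd / gpu_hours


# Rough figures from the news item: about US$100, eight H100s, about four hours.
rate = implied_gpu_hour_rate(100.0, 8, 4.0)
print(f"Implied rate: about US${rate:.3f} per GPU-hour")
```

Actual cloud bills depend on the vendor and on how long the node sits idle, so treat the result as a rough figure, give or take those zeros.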

Net net: As the big dogs burn box cars filled with cash, Nanochat is another player in the cheap LLM game.

Stephen E Arnold, October 16, 2025

Teenie Boppers and Smart Software: Yep, Just Have Money

January 23, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

I scanned the research summary “About a Quarter of U.S. Teens Have Used ChatGPT for Schoolwork – Double the Share in 2023.” Like other Pew data, the summary contained numerous numbers. I was not sufficiently motivated to dig into the methodology to find out how the sample was assembled or how Pew prompted the mobile addicted youth to provide presumably truthful answers to direct questions. But why nit pick? We are at the onset of an interesting year which will include forthcoming announcements about how algorithms are agentic and able to fuel massive revenue streams for those in the know.

Students doing their homework while their parents play polo. Thanks, MSFT Copilot. Good enough. I do like the croquet mallets and volleyball too. But children from well-to-do families have such items in abundance.

Let’s go to the video tape, as the late and colorful Warner Wolf once said to his legion of Washington, DC, fans.

One of the highlights of the summary was this finding:

Teens who are most familiar with ChatGPT are more likely to use it for their schoolwork. Some 56% of teens who say they’ve heard a lot about it report using it for schoolwork. This share drops to 18% among those who’ve only heard a little about it.

Not surprisingly, the future leaders of America embrace short cuts. The question is, “How quickly will awareness reach 99 percent and usage nose above 75 percent?” My guesstimate is pretty quickly. Convenience and more time to play with mobile phones will drive the adoption. Who in America does not like convenience?

Another finding catching my eye was:

Teens from households with higher annual incomes are most likely to say they’ve heard about ChatGPT. The shares who say this include 84% of teens in households with incomes of $75,000 or more say they’ve heard at least a little about ChatGPT.

I found this interesting because it appears to suggest that if a student comes from a home where money does not seem to be a huge problem, the industrious teens are definitely aware of smart software. And when it comes to using the digital handmaiden, Pew finds apparently nothing. There is no data point relating richer progeny with greater use. Instead we learned:

Teens who are most familiar with the chatbot are also more likely to say using it for schoolwork is OK. For instance, 79% of those who have heard a lot about ChatGPT say it’s acceptable to use for researching new topics. This compares with 61% of those who have heard only a little about it.

My thought is that more wealthy families are more likely to have teens who know about smart software. I would hypothesize that wealthy parents will pay for the more sophisticated smart software and smile benignly as the future intelligentsia stride confidently to ever brighter futures. Those without the money will get the opportunity to watch their classmates have more time for mobile phone scrolling, unboxing Amazon deliveries, and grabbing burgers at Five Guys.

I am not sure that the link between wealth and access to learning experiences is a random, one-off occurrence. If I am correct, the Pew data suggest that smart software is not reinforcing democracy. It seems to be making a digital Middle Ages more and more probable. But why think about what a dinobaby hypothesizes? It is tough to scroll zippy mobile phones with old paws and yellowing claws.

Stephen E Arnold, January 23, 2025

AI Weapons: Someone Just Did Actual Research!

July 12, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read a write up that had more in common with a write up about the wonders of a steam engine than a technological report of note. The title of the “real” news report is “AI and Ukraine Drone Warfare Are Bringing Us One Step Closer to Killer Robots.”

I poked through my files and found a couple of images posted as either advertisements for specialized manufacturing firms or by marketers hunting for clicks among the warfighting crowd. Here’s one:

The illustration represents a warfighting drone. I was able to snap this image in a lecture I attended in 2021. At that time, an individual could purchase the device online in quantity for about US$9,000.

Here’s another view:

This militarized drone has 10 inch (254 millimeter) propellers / blades.

The boxy looking thing below the rotors houses electronics, batteries, and a payload of something like an octanitrocubane- or HMX-type of kinetic charge.

Imagine four years ago, a person or organization could buy a couple of these devices and use them in a way warmly supported by bad actors. Why fool around with an unreliable individual pumped on drugs to carry a mobile phone that would receive the “show time” command? Just sit back. Guide the drone. And — well — evidence that kinetics work.

The write up is, therefore, years behind what’s been happening in some countries for years. Yep, years.

Consider this passage:

As the involvement of AI in military applications grows, alarm over the eventual emergence of fully autonomous weapons grows with it.

I want to point out that Palmer Luckey’s Anduril outfit has been fooling around in the autonomous system space since 2017. One buzz phrase an Anduril person used in a talk was, “Lattice for Mission Autonomy.” Was Mr. Luckey the first to focus on this area? Based on what I picked up at a couple of conferences in Europe in 2015, the answer is, “Nope.”

The write up does have a useful factoid in the “real” news report.

It is not technology. It is not range. It is not speed, stealth, or sleekness.

It is cheap. Yes, low cost. Why spend thousands when one can assemble a drone with hobby parts, a repurposed radio control unit from the local model airplane club, and a workable but old mobile phone?

Sign up for Telegram. Get some coordinates and let that cheap drone fly. If an operating unit has a technical whiz on the team, just let the gizmo go and look for rectangular shapes with a backpack near them. (That’s a soldier answering nature’s call.) Autonomy may not be perfect, but close enough can work.

The write up says:

Attack drones used by Ukraine and Russia have typically been remotely piloted by humans thus far – often wearing VR headsets – but numerous Ukrainian companies have developed systems that can fly drones, identify targets, and track them using only AI. The detection systems employ the same fundamentals as the facial recognition systems often controversially associated with law enforcement. Some are trained with deep learning or live combat footage.

Does anyone believe that other nation-states have not figured out how to use off-the-shelf components to change how warfighting takes place? Ukraine started the drone innovation thing late. Some other countries have been beavering away on autonomous capabilities for many years.

For me, the most important factoid in the write up is:

… Ukrainian AI warfare reveals that the technology can be developed rapidly and relatively cheaply. Some companies are making AI drones using off-the-shelf parts and code, which can be sent to the frontlines for immediate live testing. That speed has attracted overseas companies seeking access to battlefield data.

Yep, cheap and fast.

Innovation in some countries is locked in a time warp due to procurement policies and bureaucracy. The US F-35 was conceived decades ago. Not surprisingly, today’s deployed aircraft lack the computing sophistication of the semiconductors in a mobile phone I can acquire today at a local mobile phone repair shop, often operating from a trailer on Dixie Highway. A chip from the 2001 time period is not going to do the TikTok-type or smart software-type of function like an iPhone.

So cheap and speedy iteration are the big reveals in the write up. Are those the hallmarks of US defense procurement?

Stephen E Arnold, July 12, 2024

AI Delivers The Best of Both Worlds: Deception and Inaccuracy

May 16, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Wizards from one of Jeffrey Epstein’s probes made headlines about AI deception. Well, if there is one institution familiar with deception, I would submit that the Massachusetts Institute of Technology might be considered for the ranking, maybe in the top five.

The write up is “AI Deception: A Survey of Examples, Risks, and Potential Solutions.” If you want summaries of the write up, you will find them in The Guardian (we beg for dollars British newspaper) and Science Alert. Before I offer my personal observations, I will summarize the “findings” briefly. Smart software can output responses designed to deceive users and other machine processes.

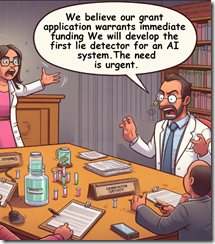

Two researchers at a big name university make an impassioned appeal for a grant. These young, earnest, and passionate wizards know their team can develop a lie detector for an artificial intelligence large language model. The two wizards have confidence in their ability, of course. Thanks, MSFT Copilot. Good enough, like some enterprise software’s security architecture.

If you follow the “next big thing” hoo hah, you know that the garden variety of smart software incorporates technology from outfits like Google. I have described Google as a “slippery fish” because it generates explanations which often don’t make sense to me. Using the large language model generative text systems can yield some surprises. These range from images which seem out of step with historical fact to legal citations that land a lazy lawyer (yes! alliteration) in a load of lard.

The MIT researcher has verified that smart software may emulate the outstanding ethical qualities of an engineer or computer scientist. Logic is everything. Ethics are not anything.

The write up says:

Deception has emerged in a wide variety of AI systems trained to complete a specific task. Deception is especially likely to emerge when an AI system is trained to win games that have a social element …

The domain of the investigation was games. I want to step back and ask, “If LLMs are not understood by their developers, how do we know if deception is hard wired into the systems or that the systems learn deception from their developers with a dusting of examples from the training data?”

The answer to the question is, “At this time, no one knows how these large-scale systems work.” Even the “small” LLMs can prove baffling. We input our own data into Mistral and managed to obtain gibberish. Another go produced a system crash that required a hard reboot of the Mac we were using for the test.

The reality appears to be that probability-based systems do not follow the same rules as a human. With more and more humans struggling with old-school skills like readin’, writin’ and ’rithmetic, most people won’t notice. For the top 10 percenters, the mistakes are amusing… sometimes.

The write up concludes:

Training models to be more truthful could also create risk. One way a model could become more truthful is by developing more accurate internal representations of the world. This also makes the model a more effective agent, by increasing its ability to successfully implement plans. For example, creating a more truthful model could actually increase its ability to engage in strategic deception by giving it more accurate insights into its opponents’ beliefs and desires. Granted, a maximally truthful system would not deceive, but optimizing for truthfulness could nonetheless increase the capacity for strategic deception. For this reason, it would be valuable to develop techniques for making models more honest (in the sense of causing their outputs to match their internal representations), separately from just making them more truthful. Here, as we discussed earlier, more research is needed in developing reliable techniques for understanding the internal representations of models. In addition, it would be useful to develop tools to control the model’s internal representations, and to control the model’s ability to produce outputs that deviate from its internal representations. As discussed in Zou et al., representation control is one promising strategy. They develop a lie detector and can control whether or not an AI lies. If representation control methods become highly reliable, then this would present a way of robustly combating AI deception.

My hunch is that MIT will be in the hunt for US government grants to develop a lie detector for AI models. It is also possible that Harvard’s medical school will begin work to determine where ethical behavior resides in the human brain so that it can be replicated in one of the megawatt munching data centers some big tech outfits want to deploy.

Four observations:

- AI can generate what appears to be “accurate” information, but that information may be weaponized by a little-understood mechanism

- “Soft” human information like ethical behavior may be difficult to implement in the short term, if ever

- A lie detector for AI will require AI; therefore, how will an opaque and not understood system be designated okay to use? It cannot at this time

- Duplicity may be inherent in the educational institutions. Therefore, those affiliated with the institution may be duplicitous and produce duplicitous content. This assertion raises the question, “Whom can one trust in the AI development chain?”

Net net: AI is hot because it is a candidate for 2024’s next big thing. The “big thing” may be the economic consequences of its being a fairly small and premature thing. Incubator time?

Stephen E Arnold, May 16, 2024

Cow Control or Elsie We Are Watching

April 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Australia already uses remotely controlled drones to herd sheep. Drones are considered more ethical than traditional herding methods because they’re less stressful for sheep.

Now the island continent is using advanced tracking technology to monitor buffalos and cows. Euro News investigates how technology is used in the cattle industry: “Scientists Are Attempting To Track 1000 Cattle And Buffalo From Space Using GPS, AI, And Satellites.”

An estimated 22000 buffalo freely roam in Arnhem Land, Australia. The emphasis is on estimate, because the exact number is unknown. These buffalo are harming Arnhem Land’s environment. One feral buffalo, weighing 1200 kilograms and measuring 188 cm, not only damages the environment by eating a lot of plant life but also destroys cultural rock art, ceremonial sites, and waterways. Feral buffalos and cattle are major threats to Northern Australia’s economy and ecology.

Scientists, cattlemen, and indigenous rangers have teamed up to work on a program that will monitor feral bovines from space. The program is called SpaceCows and will last four years. It is a large-scale remote herd management system powered by AI and satellite. It’s also supported by the Australian government’s Smart Farming Partnership.

The rangers and stockmen trap feral bovines, implant solar-powered GPS tags, and release them. Each tag transmits data to a satellite 650 km away for two years or until the tag falls off. SpaceCows relies on Microsoft Azure’s cloud platform. The satellites and AI create a digital map of the Australian outback that tells the rangers where feral cows live:

“Once the rangers know where the animals tend to live, they can concentrate on conservation efforts – by fencing off important sites or even culling them. ‘There’s very little surveillance that happens in these areas. So, now we’re starting to build those data sets and that understanding of the baseline disease status of animals,’ said Andrew Hoskins, a senior research scientist at CSIRO.

If successful, it could be one of the largest remote herd management systems in the world.”

Hopefully SpaceCows will protect the natural and cultural environment.

Whitney Grace, April 1, 2024

Google: Technology Which Some Day Will Be Error Corrected But In the Meantime?

May 20, 2021

I read “Can Google Really Build a Practical Quantum Computer by 2029?” which is based on the Google announcements at its developers’ conference. The article reports:

… the most interesting news we heard at I/O has to be the announcement that Google intends to build a new quantum AI center in Santa Barbara where the company says it will produce a “useful, error-corrected quantum computer” by 2029.

Didn’t Google announce “quantum supremacy” some time ago? This is an assertion which China appears to dispute. See “Google and China Duke It Out over Quantum Supremacy.” Let’s assume Google is the supremo of quantumness. It stands to reason that the technology would be tamed by Googlers. With smart software and quantum supremacy, what’s with the 96-month timelines for “a useful” quantum computer?

Then I came across a news item from Fox10 in Arizona. The TV station’s story “Driverless Waymo Taxi Gets Stuck in Chandler Traffic, Runs from Support Crew” suggests that a Google infused smart auto got confused and then tried to get away from the Googlers who were providing “support.” That’s a very non-Google word “support.” The write up asserts:

A driverless Waymo taxi was caught on camera going rogue on a Chandler intersection near a construction site last week. The company told the passenger at the time that the vehicle was confused with cones blocking a lane.

The Google support team lifted off. Upon arrival, the smart taxi with a humanoid in the vehicle “decided to make for a quick getaway.”

According to Business Insider, “Waymo issued a statement that it has assessed the event and used it to improve future rides.” If you want to watch smart software and the incident, you can navigate to this YouTube link. (YouTube videos can be disappeared, so no guarantees that the video will remain on the Googley service.)

Amusing. Independent, easily confused smart Google vehicles and error-corrected quantum computers. Soon. Perhaps both the Waymo capabilities and the quantum supremacy are expensive high school science club experiments which may not work in a way that the hapless rider in the errant Waymo taxi would describe as “error corrected”?

Stephen E Arnold, May 20, 2021

Smart Software Names Cookies

December 11, 2018

Tired of McVities’ digestives, coconut macaroons, and chocolate chip cookies? Tireless researchers have trained smart software to name cookies. Next to solving death, this is definitely a significant problem.

The facts appear in “AI System Tries to Rename Classic Cookies and Fails Miserably.” You can read the original, possibly check the CV of the expert who crafted this study, and inform your local patisserie that you want new names for the confections. (I assume the patisserie has not been trashed by gilets jaunes.)

Here’s an alphabetical list of the “new” names from the write up. Sorry, I don’t have the real world cookie name to which each neologism is matched. Complain to TechRadar, not me.

The names:

- Apricot Dream Moles

- Canical Bear-Widded Nuts

- Fluffin coffee drops

- Granma’s spritches

- Hersel pump sprinters

- Lord’s honey fight

- Low fuzzy feats

- Merry hunga poppers

- Quitterbread bars

- Sparry brunchies #2

- Spice biggers

- Walps

And my personal favorite:

Hand buttersacks.

I quite like the system. One can use it to name secret projects. I can envision attending a meeting and suggesting, “Our new project will be code named Quitterbread bars.”

Stephen E Arnold, December 11, 2018

Knowledge Supposedly the Best Investment

December 13, 2017

Read, read, read, read! You are told it is good for you, but, much like eating vegetables, no one wants to do it. School children loathe their primers, adults say they do not have the time, and senior citizens explain it puts them to sleep. Reading, however, is the single best investment an individual can make. This is not new, but the Observer treats reading like some epiphany in the article, “If You’re Not Spending Five Hours Per Week Learning, You’re Being Irresponsible.”

The article opens with snippets about famous smart people and how they take the time to read at least an hour a day. The stories are followed by these wise words:

The answer is simple: Learning is the single best investment of our time that we can make. Or as Benjamin Franklin said, ‘An investment in knowledge pays the best interest.’ This insight is fundamental to succeeding in our knowledge economy, yet few people realize it. Luckily, once you do understand the value of knowledge, it’s simple to get more of it. Just dedicate yourself to constant learning.

The standard excuse follows: in today’s modern world we are too busy making money in order to survive to learn new things. Then we are slugged with the dire downer that demonetization is making previously expensive technology cheaper or even free. Examples are provided such as video conferencing, video game consoles, cameras, encyclopedias, and anything digital. All of these are found on a smartphone.

Technology that was once gold is now cheap, making knowledge more valuable. Then we are told that technology will make certain jobs obsolete and the only way to survive in the future will be to gain more knowledge and apply it, because this can never be taken from you. The bottom line is to read, learn, apply knowledge, and then make that a daily ritual. The message is not anything new, but does learning via filtered and censored online search results count?

Whitney Grace, December 13, 2017

eDiscovery to Get a Fillip with DISCO

June 23, 2017

A legal technology company has unveiled a next generation AI platform that will reduce the time, effort, and money spent by law firms and large corporations on mundane legal discovery work. Named DISCO, the program was in beta phase for two years.

PR distribution platform BusinessWire, in a release titled “DISCO Launches Artificial Intelligence Platform for Legal Technology,” states:

While there will be many applications for DISCO AI, initially the focus is to dramatically reduce the time, burden, and cost of identifying evidence in legal document review — a process known as eDiscovery.

Many companies have attempted to automate the process of eDiscovery; the success rates, however, have been far from encouraging. Apart from disrupting the legal industry, automated processes like the ones offered by DISCO will render many people in the industry jobless. AI creators, however, say that their intention is to speed up the process and reduce costs to organizations. But again, as technology advances, job losses are inevitable.

Vishal Ingole, June 23, 2017

Make Your Amazon Echo an ASMR Device

June 7, 2017

For people who love simple and soothing sounds, the Internet is a boon for their stimulation. White noise or ambient noise is a technique many people use to relax or fall asleep. Ambient devices used to be sold through catalogs, especially Sky Mall, but now any sort of sound can be accessed through YouTube or apps for free. Smart speakers are the next evolution for ambient noise. CNET has a cool article that explains, “How To Turn Your Amazon Echo Into A Noise Machine.”

The article lists several skills that can be downloaded onto the Echo and the Echo Dot. The first two suggestions are music skills: Amazon Prime Music and Spotify. Using these skills, the user can request that Alexa find any variety of nature sounds and then play them on a loop. It takes some trial and error to find the perfect sounds to fit your tastes, but once found they can be added to a playlist. An easier way, though it might offer less variety, is:

One of the best ways to find ambient noise or nature sounds for Alexa is through skills. Developer Nick Schwab created a family of skills under Ambient Noise. There are currently 12 skills or sounds to choose from:

- Airplane

- Babbling Brook

- Birds

- City

- Crickets

- Fan

- Fireplace

- Frogs

- Ocean waves

- Rainforest

- Thunderstorms

- Train

Normally, you could just say, “Alexa, open Ambient Noise,” to enable the skill, but there are too many similar skills for Alexa to list and let you choose using your voice. Instead, go to alexa.amazon.com or open the iOS or Android app and open the Skills menu. Search for Ambient Noise and click Enable.

This is not a bad start for ambient noises, but the vocal command adds its own set of problems. Amazon should consider upgrading their machine learning algorithms to a Bitext-based solution. If you want something with a WHOLE lot more variety, check out YouTube and search for ambient noise or ASMR.

Whitney Grace, June 7, 2017