A Digital Walden Despond: Life without Social Media

July 11, 2024

Here is a refreshing post from Deep Work Culture and More about the author’s shift to an existence mostly offline, where he discovered … actual life. Upon marking one year without Facebook, Instagram, or Twitter / X, the blogger describes “Rediscovering Time and Relationships: The Impact of Quitting Social Media.” After a brief period of withdrawal, he learned to put his newly freed time and attention to good use. He writes:

“Hours previously lost to mindless scrolling were now available for activities that brought genuine enrichment. I rediscovered the joy of uninterrupted reading, long walks, and deep conversations. This newfound time became a fertile ground for hobbies that had languished in the shadows of digital distractions. The absence of the incessant need to document and share every moment of my life allowed me to be fully present in my experiences.”

Imagine that. The author states that more time for reflection and self-discovery, along with abandoning the chase for likes and comments, provided clarity and opportunities for personal growth. He even rediscovered his love of books. He reflects:

“Without the constant distractions of social media, I found myself turning to books more frequently and with greater enthusiasm. … My recent literary journey has been instrumental in fostering a deeper sense of empathy and curiosity, encouraging me to view the world through varied lenses and enhancing my overall cognitive and emotional well-being. Additionally, reading more has cultivated a more reflective mindset, allowing me to draw connections between my personal experiences and broader human themes. This has translated into a more nuanced approach to both my professional endeavors and personal relationships, as the wisdom gleaned from books has informed my decision-making, problem-solving, and communication skills.”

Enticing, is it not? Strangely, this freedom, time, and depth of experience are available to any of us. All we have to do is log out of social media once and for all. Are you ready, dear reader? Find a walled in despond.

Cynthia Murrell, July 11, 2024

The Future for Flops with Humans: Flop with Fakes

May 15, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

As a dinobaby, I find the shift from humans to fake humans fascinating. Jeff Epstein’s favorite university published “Deepfakes of Your Dead Loved Ones Are a Booming Chinese Business.” My first thought is that MIT’s leadership will commission a digital Jeffrey. Imagine. He could introduce MIT fund raisers to his “friends.” He could offer testimonials about the university. He could invite — virtually, of course — certain select individuals to a virtual “island.”

The bar located near the technical university is a hot bed of virtual dating, flirting, and drinking. One savvy service person is disgusted by the antics of the virtual customers. The bartender is wide-eyed in amazement. He is a math major with an engineering minor. He sees what’s going on. Thanks, MSFT Copilot. Working hard on security, I bet.

Failing that, MIT might turn its attention to Whitney Wolfe Herd, the founder of Bumble. Although a graduate of the vastly, academically inferior Southern Methodist University in the non-Massachusetts locale of Texas (!), she has a more here-and-now vision. The idea is probably going to get traction among some of the MIT-type brainiacs. A machine-generated “self” — suitably enhanced to remove pocket protectors, plaid jammy bottoms, and observatory grade bifocals — will date a suitable companion’s digital self. Imagine the possibilities.

The write up “AI Personas Are the Future of Dating, Bumble Founder Says. Many Aren’t Buying” reports:

Herd proposed a scenario in which singles could use AI dating concierges as stand-ins for themselves when reaching out to prospective partners online. “There is a world where your dating concierge could go and date for you with other dating concierge … and then you don’t have to talk to 600 people,” she said during the summit.

Wow. More time to put a pony on the roof of an MIT building.

The write up did inject a potential downside. A downside? Who is NBC News kidding?

There’s some healthy skepticism over whether AI is the answer. A clip of Herd at the Bloomberg Summit gained over 10 million views on X, where people expressed uneasiness with the idea of an AI-based dating scene. Some compared it to episodes of "Black Mirror," a Netflix series that explores dystopian uses of technology. Others felt like the use of AI in dating would exacerbate the isolation and loneliness that people have been feeling in recent years.

Are those working in the techno-feudal empires or studying in the prep schools known to churn out the best, the brightest, the most 10X-ceptional knowledge workers weak in social skills? Come on. Having a big brain (particularly for mathy type of logic) is “obviously” the equipment needed to deal with lesser folk. Isolated? No. Think about gamers. Such camaraderie. Think about people like the head of Bumble. Lectures, Discord sessions, and access to data about those interested in loving and living virtually. Loneliness? Sorry. Not an operative word. Halt.

“AI Personas Are the Future…” reports:

"We will not be a dating app in a few years," she [the Bumble spokesperson] said. "Dating will be a component, but we will be a true human connection platform. This is where you will meet anyone you want to meet — a hiking buddy, a mahjong buddy, whatever you’re looking for."

What happens when a virtual Jeff Epstein goes to the bar and spots a first-year who looks quite youthful? Virtual fireworks?

Stephen E Arnold, May 15, 2024

Want to Fix Technopoly Life? Here Is a Plan. Implement It. Now.

December 28, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Cal Newport published an interesting opinion essay in New Yorker Magazine called “It Is Time to Dismantle the Technopoly.” The point upon which I wish to direct my dinobaby microscope appears at the end of the paywalled artistic commentary. Here’s the passage:

We have no other reasonable choice but to reassert autonomy over the role of technology in shaping our shared story.

The author or a New Yorker editor labored over this remarkable sentence.

First, I want to point out that there is a somewhat ill-defined or notional “we”. Okay, exactly who is included in the “we”? I would suggest that the “technopoly” is excluded. The title of the article makes clear that dismantle means taking apart, disassembling, or deconstructing. How will that be accomplished in a nation state like the US? What about the four entities in the alleged “Axis of Evil”? Are there other social constructs like an informal, distributed group of bad actors who want to make smart software available to anyone who wants to mount phishing and ransomware attacks? Okay, that’s the we problem. Not too tiny, is it?

A teacher explains to her top students that they have an opportunity to define some interesting concepts. The students do not look too happy. As the students grow older, their interest in therapist jargon may increase. The enthusiasm for defining such terms remains low. Thanks, MSFT Copilot.

Second, “no other reasonable choice.” I think you know where I am going with my next question: What does “reasonable” mean? I think the author knows or hopes that the “we” will recognize “reasonable” when those individuals see it. But reason is slippery, particularly in an era in which literacy is defined as being able to “touch and pay” and “swiping left.” What if the computing device equipped with good enough smart software “frames” an issue? How does one define “reasonable” if the information used to make that decision is weaponized, biased, or defined by a system created by the “technopoly”? Who other than lawyers wants to argue endlessly over an epistemological issue? Not me. The “reasonable” is pulled from the same word list used by some of the big technology outfits. Isn’t Google reasonable when it explains that it cares about the user’s experience? What about Meta (the Zuckbook) and its crystal clear explanations of kiddie protections on its services? What about the explanations of legal experts arguing against one another? The word “reasonable” strikes me as therapist speak or mother-knows-best talk.

Third, the word “reassert” suggests that it is time to overthrow the technopoly. I am not sure a Boston Tea Party-type event will do the trick. Technology, particularly open source software, makes it easy for a bad actor working from a beat down caravan near Makarska to create a new product or service that sweeps through the public network. How is “reassert” going to cope with an individual hooked into an online, distributed criminal network? Believe me, Europol is trying, but the work is difficult. But the notion of “reassert” implies that there was a prior state, a time when technopolists were not the focal point of “The New Yorker.” “Reassert” is a call to action. The who, how, when, and where questions are not addressed. The result is crazy rhetoric which, I suppose, might work if one were a TikTok influencer backed by a large country’s intelligence apparatus. But that might not work either. The technopolies have created the datasphere, and it is tough to grab a bale of tea and pitch it in the Boston Harbor today. “Heave those bits overboard, mates” won’t work.

Fourth, “autonomy.” I am not sure what “autonomy” means. When I was taking required classes at the third-rate college I attended, I learned the definition each instructor presented. Then, like a good student chasing top marks, I spit the definition back. Bingo. The method worked remarkably well. The notion of “autonomy” dredges up explanations of free will and predestination. “Autonomy” sounds like a great idea to some people. To me, it smacks of ideas popular when Ben Franklin was chasing females through French doors before he was asked to return to the US of A. YouTube is chock-a-block with off-the-grid methods. Not too many people go off the grid and remain there. When someone disappears, it becomes “news.” And the person or the entity’s remains become an anecdote on a podcast. How “free” is a person in the US to “dismantle” a public or private enterprise? Can one “dismantle” a hacker? Remember those homeowners who put bullets in an intruder and found themselves in jail? Yeah. Autonomy. How’s that working out in other countries? What about the border between French Guiana and Brazil? Do something wrong and the French Foreign Legion will define “autonomy” in terms of a squad solving a problem. Bang. Done. Nice idea that “autonomy” stuff.

Fifth, the word “role” is interesting. I think of “role” as a character in a social setting; for example, a CEO who is insecure about how he or she actually became a CEO. That individual tries to play a “role.” A character like the actor who becomes “Mr. Kitzel” on a Jack Benny Show plays a role. The talking heads on cable news play a “role.” Technology enables, it facilitates, and it captivates. I suppose that’s its “role.” I am not convinced. Technology does what it does because humans have shaped a service, software, or system to meet an inner need of a human user. Technology is like a gerbil. Look away and there are more and more little technologies. Due to human actions, the little technologies grow and then the actions of lots of humans make the technologies into digital behemoths. But humans do the activating, not the “technology.” The twist with technology is that as it feeds on human actions, the impact of the two interacting is tough to predict. In some cases, what happens is tough to explain as that action is taking place. A good example is the role of TikTok in shaping the viewpoints of some youthful fans. “Role” is not something I link directly to technology, but the word implies some sort of “action.” Yeah, but humans were and are involved. The technology is perhaps a catalyst or digital Teflon. It is not Mr. Kitzel.

Sixth, the word “shaping” in the cited sentence directly implies that “technology” does something. It has intent. Nope. The humans who control or who have unrestricted access to the “technology” do the shaping. The technology (sorry, AI fans) is following instructions. Some instructions come from a library; others can be cooked up based on prior actions. But for the most part, technology is inanimate and “smart” only to uninformed people. It is not shaping anything unless a human set up the system to look for teens who want to commit suicide and the software identifies similar content and displays it for the troubled 13-year-old. But humans did the work. Humans shape, distort, and weaponize. The technology is putty composed of zeros and ones. If I am correct, the essay wants to terminate humans. Once these bad actors are gone, the technology “problem” goes away. Sounds good, right?

Finally, the phrase “shared story.” What is this “shared story”? The commentary on a spectacular shot to win a basketball game? A myth that Thomas Jefferson was someone who kept his trousers buttoned? The story of a Type A researcher who experimented with radium and ended up a poster child for radiation poisoning? An influencer who escaped prison and became a homeless minister caring for those without jobs and a home? The “shared story” is a baffler. My hunch is that “shared story” is something that the “we” are sad has disappeared. My family was among the group that founded Hartford, Connecticut, in the 17th century. Is that the Arnolds’ shared story? News flash: There are not many Arnolds left and those who remain laugh when I “share” that story. It means zero to them. If you want a “shared story”, go viral on YouTube or buy Super Bowl ads. Making friends with Taylor Swift will work too.

Net net: The mental orientation of the cited essay is clear in one sentence. Yikes, as the honor students might say.

Stephen E Arnold, December 28, 2023

Gallup on Social Media: Just One, Tiny, Irrelevant Data Point Missing

October 23, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “Teens Spend Average of 4.8 Hours on Social Media Per Day.” I like these insights into how intelligence is being whittled away.

Me? A dumb bunny. Thanks, MidJourney. What dumb bunny inspired you?

Three findings caught my attention, and I noticed that one tiny, irrelevant data point was missing. Let’s look at the three hooks that snagged me.

First, the write up reveals:

Across age groups, the average time spent on social media ranges from as low as 4.1 hours per day for 13-year-olds to as high as 5.8 hours per day for 17-year-olds.

Doesn’t that seem like a large chunk of one’s day?

Second, I learned that the research unearthed this insight:

Teens report spending an average of 1.9 hours per day on YouTube and 1.5 hours per day on TikTok

I assume the bright spot is that only about 1.4 of the average 4.8 hours remain for reading X.com, Instagram, and encrypted messages.

Third, I learned:

The least conscientious adolescents — those scoring in the bottom quartile on the four items in the survey — spend an average of 1.2 hours more on social media per day than those who are highly conscientious (in the top quartile of the scale). Of the remaining Big 5 personality traits, emotional stability, openness to experience, agreeableness and extroversion are all negatively correlated with social media use, but the associations are weaker compared with conscientiousness.

Does this mean that social media is particularly effective on the most vulnerable youth?

Now let me point out the one item of data I noted was missing:

How much time does this sample spend reading?

I think I know the answer.

Stephen E Arnold, October 23, 2023

Digital Addiction Game Plan: Get Those Kiddies When Young

April 6, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I enjoy research which provides roadmaps for confused digital Hummer drivers. The Hummer weighs more than four tons and costs about the same as one GMLRS rocket. Digital weapons are more effective and less expensive. One does give up a bit of shock and awe, however. Life is full of trade-offs.

The information in “Teens on Screens: Life Online for Children and Young Adults Revealed” is interesting. The analytics wizards have figured out how to hook a young person on zippy new media. I noted this insight:

Children are gravitating to ‘dramatic’ online videos which appear designed to maximize stimulation but require minimal effort and focus…

How does one craft a magnetic video?

Gossip, conflict, controversy, extreme challenges and high stakes – often involving large sums of money – are recurring themes. ‘Commentary’ and ‘reaction’ video formats, particularly those stirring up rivalry between influencers while encouraging viewers to pick sides, were also appealing to participants. These videos, popularized by the likes of Mr Beast, Infinite and JackSucksAtStuff, are often short-form, with a distinct, stimulating, editing style, designed to create maximum dramatic effect. This involves heavy use of choppy, ‘jump-cut’ edits, rapidly changing camera angles, special effects, animations and fast-paced speech.

One interesting item in the article’s summary of the research concerned “split screening.” The term means that one watches more than one short-form video at the same time. (As a dinobaby, I have to work hard to get one thing done. Two things simultaneously. Ho ho ho.)

What can an enterprising person interested in weaponizing information do? Here are some ideas:

- Undermine certain values

- Present shaped information

- Take time from less exciting pursuits like homework and reading books

- Displace self-esteem building experiences.

Who cares? Advertisers, those hostile to the interests of the US, groomers, and probably several other cohorts.

I have to stop now. I need to watch multiple TikToks.

Stephen E Arnold, April 6, 2023

Surprise: TikTok Reveals Its Employees Can View European User Data

December 28, 2022

What a surprise. The Tech Times reports, “TikTok Says Chinese Employees Can Access Data from European Users.” This includes workers not just within China, but also in Brazil, Canada, Israel, Japan, Malaysia, Philippines, Singapore, South Korea, and the United States. According to The Guardian, TikTok revealed the detail in an update to its privacy policy. We are to believe it is all in the interest of improving the users’ experience. Writer Joseph Henry states:

“According to ByteDance, TikTok’s parent firm, accessing the user data can help in improving the algorithm performance on the platform. This would mean that it could help the app to detect bots and malicious accounts. Additionally, this could also give recommendations for content that users want to consume online. Back in July, Shou Zi Chew, a TikTok chief executive clarified via a letter that the data being accessed by foreign staff is a ‘narrow set of non-sensitive’ user data. In short, if the TikTok security team in the US gives a green light for data access, then there’s no problem viewing the data coming from American users. Chew added that the Chinese government officials do not have access to these data so it won’t be a big deal to every consumer.”

Sure they don’t. Despite assurances, some are skeptical. For example, we learn:

“US FCC Commissioner Brendan Carr told Reuters that TikTok should be immediately banned in the US. He added that he was suspicious as to how ByteDance handles all of the US-based data on the app.”

Now just why might he doubt ByteDance’s sincerity? What about consequences? As some Sillycon Valley experts say, “No big deal. Move on.” Dismissive naïveté is helpful, even charming.

Cynthia Murrell, December 28, 2022

SocialFi, a New Type of Mashup

November 22, 2022

Well this is quite an innovation. HackerNoon alerts us to a combination of just wonderful stuff in, “The Rise of SocialFi: A Fusion of Social Media, Web3, and Decentralized Finance.” Short for social finance, SocialFi builds on other -Fi trends: DeFi (decentralized finance) and GameFi (play-to-earn crypto currency games). The goal is to monetize social media through “tokenized achievements.” Writer Samiran Mondal elaborates:

“SocialFi is an umbrella that combines various elements to provide a better social experience through crypto, DeFi, metaverse, NFTs, and Web3. At the heart of SocialFi are monetization and incentivization through social tokens. SocialFi offers many new ways for users, content creators, and app owners to monetarize their engagements. This has perhaps been the most attractive aspect of SocialFi. By introducing the concept of social tokens and in-app utility tokens. Notably, these tokens are not controlled by the platform but by the creator. Token creators have the power to decide how they want their tokens to be utilized, especially by fans and followers.”

This monetization strategy is made possible by more alphabet soup—PFP NFTs, or picture-for-proof non fungible tokens. These profile pictures identify users, provide proof of NFT ownership, and connect users to specific SocialFi communities. Then there are the DAOs, or decentralized autonomous organizations. These communities make decisions through member votes to prevent the type of unilateral control exercised by companies like Facebook, Twitter, and TikTok. This arrangement provides another feature. Or is it a bug? We learn:

“SocialFi also advocates for freedom of speech since users’ messages or content are not throttled by censorship. Previously, social media platforms were heavily plagued by centralized censorship that limited what users could post to the extent of deleting accounts that content creators and users had poured their hearts and souls into. But with SocialFi, users have the freedom to post content without the constant fear of overreaching moderation or targeted censorship.”

Sounds great, until one realizes what Mondal calls overreach and censorship would include efforts to quell the spread of misinformation and harmful content already bedeviling society. Do those behind SocialFi have any plans to address that dilemma? Sure.

Cynthia Murrell, November 22, 2022

Evolution? Sure, Consider the Future of Humanoids

November 11, 2022

It’s Friday, and everyone deserves a look at what their children’s grandchildren will look like. Let me tell you. These progeny will be appealing folk. “Future Humans Could Have Smaller Brains, New Eyelids and Hunchbacks Thanks to Technology.” Let’s look at some of the real “factoids” in this article from the estimable, rock solid fact factory, The Daily Sun:

- A tech neck which looks to me to be a baby hunchback

- Smaller brains (evidence of this may be available now. Just ask a teen cashier to make change.)

- A tech claw. I think this means fingers adapted to thumbtyping and clicky keyboards.

I must say that these adaptations seem to be suited to the digital environment. However, what happens if there is no power?

Perhaps Neanderthal characteristics will manifest themselves? Please, check the cited article for an illustration of the future human. My first reaction was, “Someone who looks like that works at one of the high tech companies near San Francisco.” Silicon Valley may be the cradle of adapted humans at this time. Perhaps a Stanford grad student will undertake a definitive study by observing those visiting Philz Coffee.

Stephen E Arnold, November 11, 2022

The Google: Indexing and Discriminating Are Expensive. So Get Bigger Already

November 9, 2022

It’s Wednesday, November 9, 2022, only a few days until I hit 78. Guess what? Amidst the news of crypto currency vaporization, hand wringing over the adult decisions forced on high school science club members at Facebook and Twitter, and the weirdness about voting — there’s a quite important item of information. This particular datum is likely to be washed away in the flood of digital data about other developments.

What is this gem?

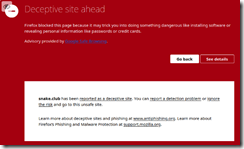

An individual has discovered that the Google is not indexing some Mastodon servers. You can read the story in a Mastodon post at this link. Don’t worry. The page will resolve without trying to figure out how to make Mastodon stomp around in the way you want it to. The post is from Stephen Brennan on Snake.club.

The item is that Google does not index every Mastodon server. The Google, according to Mr. Brennan:

has decided that since my Mastodon server is visually similar to other Mastodon servers (hint, it’s supposed to be) that it’s an unsafe forgery? Ugh. Now I get to wait for a what will likely be a long manual review cycle, while all the other people using the site see this deceptive, scary banner.

So what?

Mr. Brennan notes:

Seems like El Goog has no problem flagging me in an instant, but can’t cleanup their mistakes quickly.

A few hours later Mr. Brennan reports:

However, the Search Console still insists I have security problems, and the “transparency report” here agrees, though it classifies my threat level as Yellow (it was Red before).

Is the problem resolved? Sort of. Mr. Brennan has concluded:

… maybe I need to start backing up my Google data. I could see their stellar AI/moderation screwing me over, I’ve heard of it before.

Why do I think this single post and thread is important? Four reasons:

- The incident underscores how an individual perceives Google as “the Internet,” despite the use of a decentralized, distributed system. The mind set of some Mastodon users is that Google is the be-all and end-all. It’s not, of course. But if people forget that there are other quite useful ways of finding information, the desire to please, think, and depend on Google becomes the one true way. Outfits like Mojeek.com don’t have much of a chance of getting traction with those in the Google datasphere.

- Google operates on a close-enough-for-horseshoes or good-enough approach. The objective is to sell ads. This means that big is good. The Good Principle doesn’t do a great job of indexing Twitter posts, but Twitter is bigger than Mastodon in terms of eye balls. Therefore, it is a consequence of good-enough methods to shove small and low-traffic content output into an area surrounded by Google’s police tape. Maybe Google wants Mastodon users behind its police tape? Maybe Google does not care today but will if and when Mastodon gets bigger? Plus some Google advertisers may want to reach those reading search results citing Mastodon? Maybe? If so, Mastodon servers will become important to the Google for revenue, not content.

- Google does not index “the world’s information.” The system indexes some information, ideally information that will attract users. In my opinion, the once naive company allegedly wanted to index the world’s information. Mr. Page and I were on a panel about Web search as I recall. My team and I had sold to CMGI some technology which was incorporated into Lycos. That’s why I was on the panel. Mr. Page rolled out the notion of an “index to the world’s information.” I pointed out that indexing rapidly-expanding content and the capturing of content changes to previously indexed content would be increasingly expensive. The costs would be high and quite hard to control without reducing the scope, frequency, and depth of the crawls. But Mr. Page’s big idea excited people. My mundane financial and technical truths were of zero interest to Mr. Page and most in the audience. And today? Google’s management team has to work overtime to try to contain the costs of indexing near-real time flows of digital information. The expense of maintaining and reindexing backfiles is easier to control. Just reduce the scope of sites indexed, the depth of each crawl, and the frequency with which certain sites are reindexed, and decrease how much old content is displayed. If no one looks at these data, why spend money on it? Google is not Mother Teresa and certainly not the Andrew Carnegie library initiative. Mr. Brennan brushed against an automated method that appears to say, “The small is irrelevant because advertisers want to advertise where the eyeballs are.”

- Google exists for two reasons: First, to generate advertising revenue. Why? None of its new ventures have been able to deliver advertising-equivalent revenue. But cash must flow and grow or the Google stumbles. Google is still what a Microsoftie called a “one-trick pony” years ago. The one-trick pony is the star of the Google circus. Performing Mastodons are not in the tent. Second, Google wants very much to dominate cloud computing, off-the-shelf machine learning, and cyber security. This means that the performing Mastodons have to do something that gets the GOOG’s attention.

Net net: I find it interesting to find examples of those younger than I discovering the precise nature of Google. Many of these individuals know only Google. I find that sad and somewhat frightening, perhaps more troubling than Mr. Putin’s nuclear bomb talk. Mr. Putin can be seen and heard. Google controls its datasphere. Like goldfish in a bowl, it is tough to understand the world containing that bowl and its inhabitants.

Stephen E Arnold, November 9, 2022

COVID-19 Made Reading And Critical Thinking Skills Worse For US Students

March 31, 2022

The COVID-19 pandemic is the first time in history remote learning was implemented for all educational levels. As the guinea pig generation, students were forced to deal with technical interruptions and, unfortunately, not the best education. Higher achieving and average students will be able to compensate for the last two years of education, but the lower achievers will have trouble. The pandemic, however, exacerbated an issue with learning.

Kids have been reading less with each passing year because they spend more time consuming social media and videogames. Kids are reading, except they are absorbing a butchered form of the English language and not engaging their critical thinking skills. Social media and videogames are passive activities. Another cause of low reading skills, says The New York Times in “The Pandemic Has Worsened The Reading Crisis In Schools,” is the lack of educators:

“The causes are multifaceted, but many experts point to a shortage of educators trained in phonics and phonemic awareness — the foundational skills of linking the sounds of spoken English to the letters that appear on the page.”

According to the article, remote learning lessened the quality of instruction elementary school students received on reading fundamentals. It is essential for kids to pick up the basics in elementary school; otherwise, higher education will be more difficult. Federal funding is being used for assistance programs, but there is a lack of personnel. Trained individuals are leaving public education for the private sector because it is more lucrative.

Poor reading skills feed into poor critical thinking skills. The Next Web explores the dangers of deep fakes and how easily they fool people: “Deep fakes Study Finds Doctored Text Is More Manipulative Than Phony Video.” Deep fakes are a dangerous AI threat, but MIT Media Lab scientists discovered that people have a hard time discerning fake sound bites:

“Scientists at the MIT Media Lab showed almost 6,000 people 16 authentic political speeches and 16 that were doctored by AI. The sound bites were presented in permutations of text, video, and audio, such as video with subtitles or only text. The participants were told that half of the content was fake, and asked which snippets they believed were fabricated.”

When the participants were shown only text, they barely discovered the falsehoods, with a 57% success rate, while they were more accurate at video with subtitles (66%) and the best at video and text combined (82%). Participants relied on tone and vocal conveyance to discover the fakes, which makes sense given that is how people detect lying:

“The study authors said the participants relied more on how something was said than the speech content itself: ‘The finding that fabricated videos of political speeches are easier to discern than fabricated text transcripts highlights the need to re-introduce and explain the oft-forgotten second half of the ‘seeing is believing’ adage.’ There is, however, a caveat to their conclusions: their deep fakes weren’t exactly hyper-realistic.”

Low quality deep fakes are not as dangerous as a single video with high resolution, great audio, and spot on duplicates of the subjects. Even the smartest people are more likely to be tricked by one high quality deep fake than by thousands of bad ones.

It is more alarming that participants did not do well with the text only sound bites. Did they lack the critical thinking and reading skills they should have learned in elementary school or did the lack of delivery from a human stump them?

Students need to focus on the basics of reading and critical thinking to establish their entire education. It is more fundamental than anything else.

Whitney Grace, March 31, 2022