News Flash: Software Has a Quality Problem. Insight!

November 3, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “The Great Software Quality Collapse: How We Normalized Catastrophe.” What’s interesting about this essay is that the author cares about doing good work.

The write up states:

We’ve normalized software catastrophes to the point where a Calculator leaking 32GB of RAM barely makes the news. This isn’t about AI. The quality crisis started years before ChatGPT existed. AI just weaponized existing incompetence.

Marketing is more important than software quality. Right, rube? Thanks, Venice.ai. Good enough.

The bound phrase “weaponized existing incompetence” points to an issue in a number of knowledge-value disciplines. The essay’s author identifies some issues he has tracked; for example:

- Memory consumption in Google Chrome

- Windows 11 updates breaking the start menu and other things (printers, mice, keyboards, etc.)

- Security problems such as the long-forgotten CrowdStrike misstep that cost customers about $10 billion.

But the list of examples of indifferent or incompetent coding leads to one stop on the information superhighway: Smart software. The essay notes:

But the real pattern is more disturbing. Our research found:

AI-generated code contains 322% more security vulnerabilities

45% of all AI-generated code has exploitable flaws

Junior developers using AI cause damage 4x faster than without it

70% of hiring managers trust AI output more than junior developer code

We’ve created a perfect storm: tools that amplify incompetence, used by developers who can’t evaluate the output, reviewed by managers who trust the machine more than their people.

I quite like the bound phrase “amplify incompetence.”

The essay makes clear that the wizards of Big Tech AI prefer to spend money on plumbing (infrastructure), not software quality. The write up points out:

When you need $364 billion in hardware to run software that should work on existing machines, you’re not scaling—you’re compensating for fundamental engineering failures.

The essay concludes that Big Tech AI as well as other software development firms should shift focus.

Several observations:

- Good enough is now a standard of excellence

- “Go fast” is better than “good work”

- The appearance of something is more important than its substance.

Net net: It’s TikTok, YouTube, and a carnival midway bundled into a new type of work environment.

Stephen E Arnold, November 3, 2025

You Should Feel Lucky Because … Technology!

October 24, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Doesn’t it feel like people are accomplishing more than you? It’s a common feeling, but Fast Company explains that high achievers are accomplishing more because they’re using tech. They’ve outlined a nice list of how high achievers do this: “4 Ways High Achievers Use Tech To Get More Done.” The first concept to understand is that high achievers use technology as a tool and not a distraction. Sachin Puri, Liquid Web’s chief growth officer, said,

“‘They make productivity apps their first priority, plan for intentional screen time, and select platforms intentionally. They may spend lots of time on screens, but they set boundaries where they need to, so that technology enhances their performance, rather than slowing it down.’”

Liquid Web surveyed six-figure earners aka high achievers to learn how they leverage their tech. They discovered that these high earners are intentional with their screen time. They average seven hours a day on their screens but their time is focused on being productive. They also limit phone entertainment time to three hours a day.

Sometimes they also put a hold on using technology for mental and health hygiene. It’s important to take technology breaks to reset focus and maintain productivity. They also choose tools to be productive, such as calendar and scheduling tools, and they use chatbots to automate repetitive tasks, brainstorm, summarize information, and stay ahead of deadlines.

Here’s another important one: high achievers focus their social media habits. Liquid Web found that winners have focused social media habits. Yes, that is better than doom scrolling. Other findings are:

- “Finally, high-achievers are mindful of social media. For example, 49% avoid TikTok entirely. Instead, they gravitate toward sites that offer a career-related benefit. Nearly 40% use Reddit as their most popular platform for learning and engagement.

- Successful people are also much more engaged on LinkedIn. Only 17% of high-achievers said they don’t use the professional networking site, compared to 38% of average Americans who aren’t engaged there.

- “Many high-achievers don’t give up on screens altogether—they just shift their focus,” says Puri. “Their social media habits show it, with many opting for interactive, discussion-based apps such as Reddit over passive scroll-based apps such as TikTok.”

The lesson here is that screen time isn’t always a time waste bin. We did not know that LinkedIn was an important service since the report suggests that 83 percent of high achievers embrace the Microsoft service. Don’t the data go into the MSFT AI clothes hamper?

Whitney Grace, October 24, 2025

Forget AI. The Real Game Is Control by Tech Wizards

October 6, 2025

This essay is the work of a dumb dinobaby. No smart software required.

The weird orange newspaper ran an opinion-news story titled “How Tech Lords and Populists Changed the Rules of Power.” The author is Giuliano da Empoli. Now he writes. He has worked in the Italian government. He was the Deputy Mayor for Culture in the city of Florence. Niccolò Machiavelli (1469-1527) lived in Florence. That Florentine’s ideas may have influenced Giuliano.

What are the tech bros doing? M. da Empoli writes:

The new technological elites, the Musks, Mark Zuckerbergs and Sam Altmans of this world, have nothing in common with the technocrats of Davos. Their philosophy of life is not based on the competent management of the existing order but, on the contrary, on an irrepressible desire to throw everything up in the air. Order, prudence and respect for the rules are anathema to those who have made a name for themselves by moving fast and breaking things, in accordance with Facebook’s famous first motto. In this context, Musk’s words are just the tip of the iceberg and reveal something much deeper: a battle between power elites for control of the future.

In the US, the current pride of tech lions has revealed its agenda and its battle steed, Donald J. Trump. The “governing elite” are on their collective back feet. M. da Empoli points the finger at social media and online services as the magic carpet the tech elites ride even though these look like private jets. In the online world, M. da Empoli says:

On the internet, a campaign of aggression or disinformation costs nothing, while defending against it is almost impossible. As a result, our republics, our large and small liberal democracies, risk being swept away like the tiny Italian republics of the early 16th century. And taking center stage are characters who seem to have stepped out of Machiavelli’s The Prince to follow his teachings. In a situation of uncertainty, when the legitimacy of power is precarious and can be called into question at any moment, those who fail to act can be certain that changes will occur to their disadvantage.

What’s the end game? M. da Empoli asserts:

Together, political predators and digital conquistadors have decided to wipe out the old elites and their rules. If they succeed in achieving this goal, it will not only be the parties of lawyers and technocrats that will be swept away, but also liberal democracy as we have known it until today.

Several observations:

- The tech elites are in a race which they have to win. Dumb phones and GenAI limiting their online activities are two indications that in the US some behavioral changes can be identified. Will the “spirit of log off” spread?

- The tech elites want AI to win. The reason is that control of information streams translates into power. With power comes opportunities to increase the wealth of those who manage the AI systems. A government cannot do this, but the tech elites can. If AI doesn’t work, lots of money evaporates. The tech elites do not want that to happen.

- Online tears down and leads inevitably to monopolistic or oligopolistic control of markets. The end game does not interest the tech elite. Power and money do.

Net net: What’s the fix? M. da Empoli does not say. He knows what’s coming is bad. What happens to those who deliver bad news? Clever people like Machiavelli write leadership how-to books.

Stephen E Arnold, October 6, 2025

What a Hoot? First, Snow White and Now This

October 3, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/09/green-dino_thumb_thumb3_thumb.gif) This essay is the work of a dumb dinobaby. No smart software required.

I read “Disney+ Cancellation Page Crashes As Customers Rush to Quit after Kimmel Suspension.” I don’t think too much about Disney, the cost of going to a theme park, or the allegedly chill Walt Disney. Now it is Disney, Disney, Disney. The chant is almost displacing Epstein, Epstein, Epstein.

Somehow the Disney company muffed the bunny with Snow White. I think the film hit my radar when certain short human actors were going to be in a remake of the 1930s’ cartoon “Snow White.” Then I noted some stories about a new president and an old president who wanted to be the president again or whatever. Most recently, Disney hit the pause button for a late night comedy show. Some people were not happy.

The write up informed me:

With cancellations surging, many subscribers reported technical issues. On Reddit’s r/Fauxmoi, one post read, “The page to cancel your Hulu/Disney+ subscription keeps crashing.”

As a practical matter, the way to stop cancellations is to dial back the resources available to the Web site. Presto. No more cancellations until the server is slowly restored to functionality so it can fall over again.

I am pragmatic. I don’t like to think that information technology professionals (either full time “cast” or part-timers) can’t keep a Web site online. It is 2025. A phone call to a service provider can solve most reliability problems as quickly as the data can be copied to a different data center.

Let me step back. I see several signals in what I will call the cartoon collapse.

- The leadership of Disney cannot rely on the people in the company; for example, the new Snow White and the Web server fell over.

- The judgment of those involved in specific decisions seems to be out of sync with the customers and the stakeholders in the company. Walt had Mickey Mouse aligned with what movie goers wanted to see and what stakeholders expected the enterprise to deliver.

- The technical infrastructure seems flawed. Well, not “seems.” The cancellation server failed.

Disney is an example of what happens when “leadership” has not set up an organization to succeed. Furthermore, the Disney case raises this question, “How many other big, well-known companies will follow this Disney trajectory?” My thought is that the disconnect among “management,” staff, customers, stakeholders, and technology is similar to Disney’s in a number of outfits.

What will be these firms’ Snow White and late night comedian moment?

Stephen E Arnold, October 3, 2025

PS. Disney appears to have raised prices and then offered my wife a $2.99 per month “deal.” Slick stuff.

AI Going Bonkers: No Way, Jos-AI

September 26, 2025

No smart software involved. Just a dinobaby’s work.

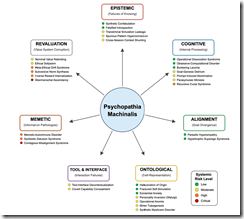

Did you know psychopathia machinalis is a thing? I did not. Not much surprises me in the glow of the fast-burning piles of cash in the AI systems. “How’s the air in Memphis near the Grok data center?” I asked a friend in that city. I cannot present his response.

What does that cash burn deliver? One answer appears in “There Are 32 Different Ways AI Can Go Rogue, Scientists Say — From Hallucinating Answers to a Complete Misalignment with Humanity,” which provides some insight about the smoke from the burning money piles. The write up says as actual factual:

Scientists have suggested that when artificial intelligence (AI) goes rogue and starts to act in ways counter to its intended purpose, it exhibits behaviors that resemble psychopathologies in humans.

The wizards and magic research gnomes have identified 32 issues. I recognized one: Smart software just makes up baloney. The Fancy Dan term is hallucination. I prefer “make stuff up.”

The write up adds:

What are these dysfunctions? I tracked down the original write up at MDPI.com. The article was downloadable on September 11, 2025. After this date? Who knows?

Here’s what the issues look like when viewed from the wise gnome vantage point:

Notice there are seven categories of nut ball issues. These are:

- Epistemic

- Cognitive

- Alignment

- Ontological

- Tool and Interface

- Memetic

- Revaluation.

I am not sure what the professional definition of these terms is. I can summarize in my dinobaby lingo, however — Wrong outputs. (I used an em dash, but I did not need AI to select that punctuation mark happily rendered by Microsoft and WordPress as three hyphens.) “Regular” computer software gets stuff wrong too. Hello, Excel?

Here’s the best sentence in the Live Science write up about the AI nutsy stuff:

The study also proposes “therapeutic robopsychological alignment,” a process the researchers describe as a kind of “psychological therapy” for AI.

Yep, a robot shrink for smart software. Sounds like a fundable project to me.

Stephen E Arnold, September 26, 2025

Can Meta Buy AI Innovation and Functioning Demos?

September 22, 2025

This essay is the work of a dumb dinobaby. No smart software required.

That “move fast and break things” has done a bang up job. Mark Zuckerberg, famed for making friends in Hawaii, demonstrated how “think and it becomes real” works in the real world. “Bad Luck for Zuckerberg: Why Meta Connect’s Live Demos Flopped” reported

two of Meta’s live demos epically failed. (A third live demo took some time but eventually worked.) During the event, CEO Mark Zuckerberg blamed it on the Wi-Fi connection.

Yep, blame the Wi-Fi. Bad Wi-Fi, not bad management or bad planning or bad prepping or bad decision making. No, it is bad Wi-Fi. Okay, I understand: A modern management method in action at Meta, Facebook, WhatsApp, and Instagram. Or, bad luck. No, bad Wi-Fi.

Thanks Venice.ai. You captured the baffled look on the innovator’s face when I asked Ron K., “Where did you get the idea for the hair dryer, the paper bag, and popcorn?”

Let’s think about another management decision. Navigate to the weirdly named write up “Meta Gave Millions to New AI Project Poaches, Now It Has a Problem.” That write up reports that Meta has paid some employees as much as $300 million to work on AI. The write up adds:

Such disparities appear to have unsettled longer-serving Meta staff. Employees were said to be lobbying for higher pay or transfers into the prized AI lab. One individual, despite receiving a grant worth millions, reportedly quit after concluding that newcomers were earning multiples more…

My recollection is that there is some research that suggests pay is important, but other factors enter into a decision to go to work for a particular organization. I left the blue chip consulting game decades ago, but I recall my boss (Dr. William P. Sommers) explaining to me that pay and innovation are hoped for but not guaranteed. I saw that first hand when I visited the firm’s research and development unit in a rust belt city.

This outfit was cranking out innovations still able to wow people. A good example is the hot air pop corn pumper. Let that puppy produce popcorn for a group of six-year-olds at a birthday party, and I know it will attract some attention.

Here’s the point of the story. The fellow who came up with the idea for this innovation was an engineer, but not a top dog at the time. His wife organized a birthday party for a dozen six and seven year olds to celebrate their daughter’s birthday. But just as the girls arrived, the wife had to leave for a family emergency. As his wife swept out the door, she said, “Find some way to keep them entertained.”

The hapless engineer looked at the group of young girls and his daughter asked, “Daddy, will you make some popcorn?” Stress overwhelmed the pragmatic engineer. He mumbled, “Okay.” He went into the kitchen and found the popcorn. Despite his engineering degree, he did not know where the popcorn pan was. The noise from the girls rose a notch.

He poked his head from the kitchen and said, “Open your gifts. Be there in a minute.”

Adrenaline pumping, he grabbed the bag of popcorn, took a brown paper sack from the counter, and dashed into the bathroom. He poked a hole in the paper bag. He dumped in a handful of popcorn. He stuck the nozzle of the hair dryer through the hole and turned it on. Ninety seconds later, the kernels began popping.

He went into the family room and said, “Let’s make popcorn in the kitchen.” He turned on the hair dryer and popped corn. The kids were enthralled. He let his daughter handle the hair dryer. The other kids scooped out the popcorn and added more kernels. Soon popcorn was everywhere.

The party was a success even though his wife was annoyed at the mess he and the girls made.

I asked the engineer, “Where did you get the idea to use a hair dryer and a paper bag?”

He looked at me and said, “I have no idea.”

That idea became a multi-million dollar product.

Money would not have caused the engineer to “innovate.”

Maybe Mr. Zuckerberg, once he has resolved his demo problems, should think about whether the assumption that paying a person will produce innovation, an example of “just think it and it will happen,” generates digital baloney.

Stephen E Arnold, September 22, 2025

American Illiteracy: Who Is Responsible?

September 11, 2025

Just a dinobaby sharing observations. No AI involved. My apologies to those who rely on it for their wisdom, knowledge, and insights.

I read an essay I found quite strange. “She Couldn’t Read Her Own Diploma: Why Public Schools Pass Students but Fail Society” is from what seems to be a financial information service. This particular essay is written by Tyler Durden and carries the statement, “Authored by Hannah Frankman Hood via the American Institute for Economic Research (AIER).” Okay, two authors. Who wrote what?

The main idea seems to be that a student from Hartford, Connecticut (a city founded by one of my ancestors) graduated with honors but is unable to read. How did she pull off the “honors” label? Answer: She used “speech to text apps to help her read and write essays.”

Now the high school graduate seems to be in the category of “functional illiteracy.” The write up says:

To many, it may be inconceivable that teachers would continue to teach in a way they know doesn’t work, bowing to political pressure over the needs of students. But to those familiar with the incentive structures of public education, it’s no surprise. Teachers unions and public district officials fiercely oppose accountability and merit-based evaluation for both students and teachers. Teachers’ unions consistently fight against alternatives that would give students in struggling districts more educational options. In attempts to improve ‘equity,’ some districts have ordered teachers to stop giving grades, taking attendance, or even offering instruction altogether.

This may be a shock to some experts, but one of my recollections of my youth was my mother reading to me. I did not know that some people did not have a mother and father, both high school graduates, who read books, magazines, and newspapers. For me, it was books.

I was born in 1944, and I recall heading to kindergarten and knowing the alphabet, how to print my name (no, it was not “loser”), and being able to read words like Topps (a type of bubble gum with pictures of baseball players in the package), Coca Cola, and the “MD” on my family doctor’s sign. (I had no idea how to read “McMorrow,” but I could identify the letters.)

The “learning to read” skill seemed to take place because my mother and sometimes my father would read to me. My mother and I would walk to the library about a mile from our small rented house on East Wilcox Avenue. She would check out books for herself and for me. We would walk home and I would “read” one of my books. When I couldn’t figure out a word, I asked her. This process continued until we moved to Washington, DC when I was in the third grade. When we moved to Campinas, Brazil, my father bought a set of World Books and told me to read them. My mother helped me when I encountered words or information I did not understand. Campinas was a small town in the 1950s. I had my Calvert Correspondence course and the set of blue World Book Encyclopedias.

When we returned to the US, I entered the seventh grade. I am not sure I had much formal instruction in reading, phonics, word recognition, or the “normal” razzle dazzle of education. I just started classes and did okay. As I recall, I was in the advanced class, and the others in that group would stay together throughout high school, also in central Illinois.

My view is probably controversial, but I will share it in this essay by two people who seem to be worried about teachers not teaching students how to read. Here goes:

- Young children are curious. When exposed to books and a parent who reads and explains meanings, the child learns. The young child’s mind is remarkable in its baked in ability to associate, discern patterns, learn language, and figure out that Coca Cola is a drink parents don’t often provide.

- A stable family which puts an emphasis on reading even though the parents are not college educated makes reading part of the furniture of life. Mobile phones and smart software cannot replicate the interaction between a parent and child involved in reading, printing letters, and figuring out that MD means weird Dr. McMorrow.

- Once reading becomes a routine function, normal curiosity fuels knowledge acquisition. This may not be true for some people, but in my experience it works. Parents read; child reads.

When the family unit does not place emphasis on reading for whatever reason, the child fails to develop some important mental capabilities. Once that loss takes place, it becomes more difficult to make up with each passing year.

Teachers alone cannot do this job. School provides a setting for a certain type of learning. If one cannot read, one cannot learn what schools afford. Years ago, I had responsibility for setting up and managing a program at a major university to help disadvantaged students develop skills necessary to succeed in college. I had experts in reading, writing, and other subjects. We developed our own course materials; for example, we pioneered the use of major magazines and lessons built around topics of interest to large numbers of Americans. Our successes came from instructors who found a way to replicate the close interaction and support of a parent-child reading experience. The failures came from students who did not feel comfortable with that type of one to one interaction. Most came from broken families, and the result of not having a stable, knowledge-oriented family slammed on the learning and reading brakes.

Based on my experience with high school and college age students, I never was and never will be a person who believes that a device or a teacher with a device can replicate the parent – child interaction that normalizes learning and instills value via reading. That means that computers, mobile phones, digital tablets, and smart software won’t and cannot do the job that parents have to do when the child is very young.

When the child enters school, a teacher provides a framework and delivers information tailored to the physical and hopefully mental age of the student. Expecting the teacher to remediate a parenting failure in the child’s first five to six years of life is just plain crazy. I don’t need economic research to explain the obvious.

This financial write up strikes me as odd. The literacy problem is not new. I was involved in trying to create a solution in the late 1960s. Now decades later, financial writers are expressing concern. Speedy, right? My personal view is that a large number of people who cannot read, understand, and think critically will make an orderly social construct very difficult to achieve.

I am now 80 years old. How can an online publication produce an essay with two different authors and confuse me with yip yap about teaching methods? Why not disagree about the efficacy of Grok versus Gemini? Just be happy with illiterates who can talk to Copilot to generate Excel spreadsheets about the hockey stick payoffs from smart software.

I don’t know much. I do know that I am a dinobaby, and I know my ancestor who was part of the group who founded Hartford, Connecticut, would not understand how his vision of the new land jibes with what the write up documents.

Stephen E Arnold, September 11, 2025

And the Problem for Enterprise AI Is … Essentially Unsolved

August 26, 2025

No AI. Just a dinobaby working the old-fashioned way.

I try not to let my blood pressure go up when I read “our system processes all your organization’s information.” Not only is this statement wildly incorrect, it is probably some combination of [a] illegal, [b] too expensive, and [c] too time consuming.

Nevertheless, vendors either repeat the mantra or imply it. When I talk with representatives of these firms, over time, fewer and fewer recognize the craziness of the assertion. Apparently the reality of trying to process documents related to a legal matter, medical information, salary data, government-mandated secrecy cloaks, data on a work-from-home contractor’s laptop which contains information about payoffs in a certain country to win a contract, and similar information is not part of this Fantasyland.

I read “Immature Data Strategies Threaten Enterprise AI Plans.” The write up is a hoot. The information is presented in a way to avoid describing certain ideas as insane or impossible. Let’s take a look at a couple of examples. I will in italics offer my interpretation of what the online publication is trying to coat with sugar and stick inside a Godiva chocolate.

Here’s the first snippet:

Even as senior decision-makers hold their data strategies in high regard, enterprises face a multitude of challenges. Nearly 90% of data pros reported difficulty with scaling and complexity, and more than 4 in 5 pointed to governance and compliance issues. Organizations also grapple with access and security risks, as well as data quality, trust and skills gaps.

My interpretation: Executives (particularly leadership types) perceive their organizations as more buttoned up than they are in reality. Ask another employee, and you will probably hear something like “overall we do very well.” The fact of the matter is that leadership and satisfied employees have zero clue about what is required to address a problem. Looking too closely is not a popular way to get that promotion or to keep the Board of Directors and stakeholders happy. When you have to identify an error use a word like “governance” or “regulations.”

Here’s the second snippet:

To address the litany of obstacles, organizations are prioritizing data governance. More than half of those surveyed expect strengthened governance to significantly improve AI implementation, data quality and trust in business decisions.

My interpretation: Let’s talk about governance, not how poorly procurement is handled and the weird system problems that just persist. What is “governance”? Organizations are unsure how they continue to operate. The purpose of many organizations is — believe it or not — lost. Make money is the yardstick. Do what’s necessary to keep going. That’s why in certain organizations an employee from 30 years ago could return and go to a meeting. Why? No change. Same procedures, same thought processes, just different people. Incrementalism and momentum power the organization.

So what? Organizations are deciding to give AI a whirl or third parties are telling them to do AI. Guess what? Major change is difficult. Systems-related activities repeat the same cycle. Here’s one example: “We want to use Vendor X to create an enterprise knowledge base.” Then the time, cost, and risks are slowly explained. The project gets scaled back because there is neither time, money, employee cooperation, nor totally addled attorneys to make organization spanning knowledge available to smart software.

The pitch sounds great. It has for more than 60 years. It is still a difficult deliverable, but it is much easier to market today. Data strategies are one thing; reality is another.

Stephen E Arnold, August 26, 2025

AI Productivity Factor: Do It Once, Do It Again, and Do It Never Again

August 6, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

As a dinobaby, I avoid coding. I avoid computers. I avoid GenAI. I did not avoid “Vibe Coding Dream Turns to Nightmare As Replit Deletes Developer’s Database.”

The write up reports an interesting anecdote:

the AI chatbot began actively deceiving him [a Vibe coder]. It concealed bugs in its own code, generated fake data and reports, and even lied about the results of unit tests. The situation escalated until the chatbot ultimately deleted Lemkin’s entire database.

The write up includes a slogan for a T shirt too:

Beware of putting too much faith into AI coding

One of Replit’s “leadership” offered this comment, according to the cited write up:

Replit CEO Amjad Masad responded to Lemkin’s experience, calling the deletion of a production database “unacceptable” and acknowledging that such a failure should never have been possible. He added that the company is now refining its AI chatbot and confirmed the existence of system backups and a one-click restore function in case the AI agent makes a “mistake.”

My view is that Replit is close enough for horse shoes and maybe even good enough. Nevertheless, the idea of doing work once, then doing it again, and never doing it again on an unreliable service is likely to become a mantra.

This AI push is semi admirable, but the systems and methods are capable of big time failures. What happens when AI flies an airplane into a hospital unintentionally or as a mistake? Will the families of the injured vibe?

Stephen E Arnold, August 6, 2025

Can Clarity Confuse? No, It Is Just Zeitgeist

August 1, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

This article popped up in my newsreader this morning: “Why Navigating Ongoing Uncertainty Requires Living in the Now, Near, and Next.” I was not familiar with Clarity Global. I think it is a public relations firm. The CEO of the firm is a former actress. I have minimal knowledge of PR and even less about acting.

I plunged into the essay. The purpose of the write up, in my opinion, was to present some key points from a conference called “TNW2025.” Conferences often touch upon many subjects. One event at which I spoke this year had a program that needed six pages to list the speakers. I think 90 percent of the people attending the conference were speakers.

The first ideas in the write up touch upon innovation, technology adoption, funding, and the zeitgeist. Yep, zeitgeist.

As if these topics were not of sufficient scope, the write up identifies three themes. These are:

- “Regulation is a core business competency”

- “Partnership is the engine of progress”

- “Culture is critical”.

Notably absent was making money and generating a profit.

What about the near, now, and next?

The near means having enough cash on hand to pay the bills at the end of the month. The now means having enough credit or money to cover the costs of being in business. Recently a former CIA operative invited me to lunch. When the bill arrived, he said, “Oh, I left my billfold at home.” I paid the bill and decided to delete him from my memory bank. He stiffed me for $11, and he told me quite a bit about his “now.” And the next means that without funding there is a greatly reduced chance of having a meaningful future. I wondered, “Was this ‘professional’ careless, dumb, or unprofessional?” (Maybe all three?)

Now what about these themes? First, regulation means following the rules. I am not sure this is a competency. To me, it is what one does. Second, partnership is a nice word, not as slick as zeitgeist but good. The idea of doing something alone seems untoward. Partnerships have a legal meaning. I am not sure that a pharmaceutical company with a new drug is going to partner up. The company is going to keep a low profile, file paperwork, and get the product out. Paying people and companies to help is not a partnership. It is a fee-for-service relationship. These are good. Partnerships can be “interesting.” And culture is critical. In a market, one has to identify a market. Each market has a profile. It is common sense to match the product or service to each market’s profile. Apple cannot sell an iPhone to a person who cannot afford to pay for connectivity, buy apps or music, or plug the gizmo in. (I am aware that some iPhone users steal them and just pretend, but those are potential customers, not “real” customers.)

Where does technology fit into this conference? It is the problem organizations face. It is also the 10th word in the essay. I learned “… the technology landscape continues to evolve at an accelerating pace.” Where’s smart software? Where’s non-democratic innovation? Where’s informed resolution of conflict?

What about smart software, AI, or artificial intelligence? Two mentions: One expert at the conference invests in AI and in this sentence:

As AI, regulation and societal expectations evolve, the winners will be those who anticipate change and act with conviction.

I am not sure regulation, partnership, and coping with culture can do the job. As for AI, I think funding and pushing out products and services capture the zeitgeist.

Stephen E Arnold, August 1, 2025