Google and X: Shall We Again Love These Bad Dogs?

November 30, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Two stories popped out of my blah newsfeed this morning (Thursday, November 30, 2023). I want to highlight each and offer a handful of observations. Why? I am a dinobaby, and I remember the adults who influenced me telling me to behave, use common sense, and follow the rules of “good” behavior. Dull? Yes. A license to cut corners and do crazy stuff? No.

The first story, if it is indeed accurate, is startling. “Google Caught Placing Big-Brand Ads on Hardcore Porn Sites, Report Says” includes a number of statements about the Google which make me uncomfortable. For instance:

advertisers who feel there’s no way to truly know if Google is meeting their brand safety standards are demanding more transparency from Google. Ideally, moving forward, they’d like access to data confirming where exactly their search ads have been displayed.

Where are big brand ads allegedly appearing? How about “undesirable sites.” What comes to mind for me is adult content. There are some quite sporty ads on certain sites that would make a Methodist Sunday school teacher blush.

These two big dogs are having a heck of a time ruining the living room sofa. Neither dog knows that the family will not be happy. These are dogs, not the mental heirs of Immanuel Kant. Thanks, MSFT Copilot. The stuffing looks like soap bubbles, but you are “good enough,” the benchmark for excellence today.

But the shocking factoid is that Google does not provide a way for advertisers to know where their ads have been displayed. Also, there is a possibility that Google shared ad revenue with entities which may be hostile to the interests of the US. Let’s hope that the assertions reported in the article are inaccurate. But if big brand ads are displayed on sites with content which could conceivably erode brand value, what exactly is Google’s system doing? I will return to this question in the observations section of this essay.

The second article is equally shocking to me.

“Elon Musk Tells Advertisers: ‘Go F*** Yourself’” reports that the EV and rocket man with a big hole digging machine allegedly said about advertisers who purchase promotions on X.com (Twitter?):

“Don’t advertise,” … “If somebody is going to try to blackmail me with advertising, blackmail me with money, go fuck yourself. Go f*** yourself. Is that clear? I hope it is.” … If advertisers don’t return, Musk said, “what this advertising boycott is gonna do is it’s gonna kill the company.”

The cited story concludes with this statement:

The full interview was meandering and at times devolved into stream of consciousness responses; Musk spoke for triple the time most other interviewees did. But the questions around Musk’s own actions, and the resulting advertiser exodus — the things that could materially impact X — seemed to garner the most nonchalant answers. He doesn’t seem to care.

Two stories. Two large and successful companies. What can a person like myself conclude, recognizing that there is a possibility that both stories may have some gaps and flaws:

- There is a disdain for old-fashioned “values” related to acceptable business practices

- The thread of pornography and foul language runs through the reports. The notion of well-crafted statements and behaviors is not part of the Google and X game plan in my view

- The indifference of the senior managers at both companies, which seeps through the descriptions of how Google and X operate, strikes me as intentional.

Now why?

I think that both companies are pushing the edge of business behavior. Google obviously is distributing ad inventory anywhere it can to try and create a market for more ads. Instead of telling advertisers where their ads are displayed or giving an advertiser control over where ads should appear, Google just displays the ads. The staggering irrelevance of the ads I see when I view a YouTube video is evidence that Google knows zero about me despite my being logged in and using some Google services. I don’t need feminine undergarments, concealed weapons products, or bogus health products.

With X.com the dismissive attitude of the firm’s senior management reeks of disdain. Why would someone advertise on a system which promotes behaviors that are detrimental to one’s mental set up?

The two companies are different, but in a way they are similar in their approach to users, customers, and advertisers. Something has gone off the rails in my opinion at both companies. It is generally a good idea to avoid riding trains which are known to run on bad tracks, ignore safety signals, and demonstrate remarkably questionable behavior.

What if the write ups are incorrect? Wow, both companies are paragons. What if both write ups are dead accurate? Wow, wow, the big dogs are tearing up the living room sofa. More than “bad dog” is needed to repair the furniture for living.

Stephen E Arnold, November 30, 2023

A Musky Odor Thwarts X Academicians

November 15, 2023

This essay is the work of a dumb humanoid. No smart software required.

How does a tech mogul stamp out research? The American way, of course! Ars Technica reveals, “100+ Researchers Say they Stopped Studying X, Fearing Elon Musk Might Sue Them.” A recent survey conducted for Reuters by the Coalition for Independent Technology Research found that a fear of litigation and jacked-up data-access fees are hampering independent researchers. All while X (formerly Twitter) is under threat of EU fines for allowing Israel/Hamas falsehoods. Meanwhile, the usual hate speech, misinformation, and disinformation continue. The company insists its own, internal mechanisms are doing a fine job, thank you very much, but it is getting harder and harder to test that claim. Writer Ashley Belanger tells us:

“Although X’s API fees and legal threats seemingly have silenced some researchers, X has found some other partners to support its own research. In a blog last month, Yaccarino named the Technology Coalition, Anti-Defamation League (another group Musk threatened to sue), American Jewish Committee, and Global Internet Forum to Counter Terrorism (GIFCT) among groups helping X ‘keep up to date with potential risks’ and supporting X safety measures. GIFCT, for example, recently helped X identify and remove newly created Hamas accounts. But X partnering with outside researchers isn’t a substitute for external research, as it seemingly leaves X in complete control of spinning how X research findings are characterized to users. Unbiased research will likely become increasingly harder to come by, Reuters’ survey suggested.”

Indeed. And there is good reason to believe the company is being less than transparent about its efforts. We learn:

“For example, in July, X claimed that a software company that helps brands track customer experiences, Sprinklr, supplied X with some data that X Safety used to claim that ‘more than 99 percent of content users and advertisers see on Twitter is healthy.’ But a Sprinklr spokesperson this week told Reuters that the company could not confirm X’s figures, explaining that ‘any recent external reporting prepared by Twitter/X has been done without Sprinklr’s involvement.’”

Musk is famously a “free speech absolutist,” but only when it comes to speech he approves of. Decreasing transparency will render X more dangerous, unless and until its decline renders it irrelevant. Fear the musk ox.

Cynthia Murrell, November 15, 2023

The EU and the Tweeter Thing

December 16, 2022

Most of the folks who live in Harrod’s Creek, Kentucky, are not frequent tweeters. I am not certain if those in the city could name the countries wrapped in European Union goodness. The information in “Twitter Threatened with EU Sanctions over Journalists’ Ban” is of little interest. Some in the carpetland of Twitter may find the write up suggestive.

Here’s an illustrative statement from the BBC write up:

EU commissioner Vera Jourova warned that the EU’s Digital Services Act requires respect of media freedom. “Elon Musk should be aware of that. There are red lines. And sanctions, soon,” she tweeted. She said: “News about arbitrary suspension of journalists on Twitter is worrying. [The] EU’s Digital Services Act requires respect of media freedom and fundamental rights. This is reinforced under our Media Freedom Act.”

One quick fix would be to ban EU officials from Twitter. My hunch is that might poke the hornet’s nest stuffed full of well-fed and easily awakened officials.

There are several interesting shoes waiting to fall in one of the nice hotels in Brussels; for example:

- Ringing the Twitter cash register. Fines have a delayed effect. After months of legal wrangling, the targeted offenders pay something. That’s what I call the ka-ching factor.

- Creating more work for government officials in the US. The tweeter thing may not be pivotal to the economic well being of EU member states, but grousing about US regulatory laxness creates headaches for those who have to go to meetings, write memos, and keep interactions reasonably pleasant.

- Allowing certain information to flow; for example, data about the special action in Ukraine or information useful to law enforcement and certain intelligence agencies.

Excitement will ensue. I am waiting for certain Silicon Valley real news professionals to find themselves without a free info and opinion streaming service. The cries of the recently banned are, however, unlikely to distract the EU officials from their goal: Ka-ching.

Stephen E Arnold, December 16, 2022

Elephants Recognize One Another and When They Stomp Around, Grass Gets Trampled

December 1, 2022

I find the coverage of the Twitter, Apple, and Facebook hoe down a good example of self serving and possibly dysfunctional behavior.

What caught my attention in the midst of news about a Tim Apple and the Musker was this story “Zuckerberg Says Apple’s Policies Not Sustainable.” The write up reports as actual factual:

Meta CEO Mark Zuckerberg on Wednesday (November 30, 2022) added to the growing chorus of concerns about Apple, arguing that it’s “problematic that one company controls what happens on the device.” … Zuckerberg has been one of the loudest critics of Apple in Silicon Valley for the past two years. In the wake of Elon Musk’s attacks on Apple this week (last week of November 2022), his concerns are being echoed more broadly by other industry leaders and Republican lawmakers…. “I think the problem is that you get into it with the platform control, is that Apple obviously has their own interests…”

Ah, Facebook with its interesting financial performance, partially a result of Apple’s unilateral actions, is probably not an objective observer. What about the Facebook Cambridge Analytica matter? Ancient history.

Much criticism is directed at the elected officials in the European Union for questioning the business methods of American companies. The interaction of Apple, Facebook, and Twitter will draw more attention to the management methods, the business procedures, and the motivation behind some words and deeds.

If I step back from the flood of tweets, Silicon Valley “real” news, and oracular (possibly self congratulatory) write ups from conference organizers, what do I see?

- Activities illustrating what happens in a Wild West business environment

- Personalities looming larger than the ethical issues intertwined with their revenue generation methods

- Regulatory authorities’ inaction creating genuine concern among users, business partners, and employees.

Elephants can stomp around. Even when the beasts mean well, their sheer size puts smaller entities at risk. The shenanigans of big creatures are interesting. Are these creatures of magnitude sustainable or a positive for the datasphere? My view? Nope.

Stephen E Arnold, December 1, 2022

Clever Twitter Write Up: The Muskrat!

November 21, 2022

I am not into the tweeter thing. I do find glancing at the flood of Twitter mine run off interesting. Because I live in a hollow with its very own toxic lake of assorted man-made compounds, I know drainage when I smell it.

The article which caught my attention is “I Don’t Want to Go Back to Social Media.” What is interesting is that the author is a software developer and former Twitter user.

The write up makes what might be a statement of interest to a legal eagle; to wit:

Musk is an obvious fraud.

The highlight of the write up is the use of the neologism muskrat. The idea is that estimable Elon Musk is either a metaphorical rat (Rattus norvegicus) or muskrat (Ondatra zibethicus). The figure of speech is ambiguous, and disambiguation is a great deal of work. (I think that is the reason super duper free Web search systems are unable to provide consistent on point results for a query.)

Now back to the Muskrat (article style, not the furry, four legged variety known to present some challenges when living in proximity to humanoids).

The write up asserts:

…Twitter brought out the worst in me. I struggled to be “my best self” on Twitter. Admittedly, I struggle to be my best self almost everywhere, but Twitter was the worst situation for that. The incentives on Twitter are perverse: the short character limits, the statistical counts of retweets and likes, the unknown followers and readers, the platform and publicity all conspire to corrupt you, to push you toward superficial tweets that incite the crowd.

The write up ends with a call to action; specifically:

Consider the alternative of avoiding social media altogether. You can live without it. I would argue that you can live better. You’ll get nothing, and like it!

I want to end this short blog post with a quote from Captain and Tennille (Willis Alan Ramsey and O/BO Capasso who probably love the tweeter thing):

And they whirl and they twirled and they tango

Singin’ and jinglin’ a jango

Floatin’ like the heavens above

Looks like muskrat love

Do, do, do, do, do

Do, do-e, do

Yep, do do.

Stephen E Arnold, November 21, 2022

With Mass Firings, Here Is a Sketchy Factoid to Give One Pause

November 17, 2022

In the midst of the Twitter turmoil and the mea culpae of the Zuck and the Zen master (Jack Dorsey), the idea about organizational vulnerability is not getting much attention. One facet of layoffs or RIFs (reductions in force) is captured in the article “Only a Quarter of Businesses Have Confidence Ex-Employees Can No Longer Access Infrastructure.” True to content marketing form, the details of the methodology are not disclosed.

Who among the thousands terminated via email or a Slack message is going to figure out that selling “insider information” is a good way to make money? Are those executive recruitment firms vetting their customers? Is that jewelry store in Athens on the up and up, or is it operated by a friend of everyone’s favorite leader, Vlad the Assailer? What mischief might a tech-savvy former employee undertake as a contractor on Fiverr or a disgruntled person in a coffee shop?

The write up states:

Only 24 percent of respondents to a new survey are fully confident that ex-employees no longer have access to their company’s infrastructure, while almost half of organizations are less than 50 percent confident that former employees no longer have access.

An outfit called Teleport did the study. A few other factoids which I found suggestive are:

- … Organizations [are] using on average 5.7 different tools to manage access policy, making it complicated and time-consuming to completely shut off access.

- “62 percent of respondents cite privacy concerns as a leading challenge when replacing passwords with biometric authentication.”

- “55 percent point to a lack of devices capable of biometric authentication.”

Let’s assume that these data are off by 10 or 15 percent. There’s room for excitement in my opinion.

Stephen E Arnold, November 17, 2022

A Digital Tweet with More Power Than a Status-6 Torpedo

November 14, 2022

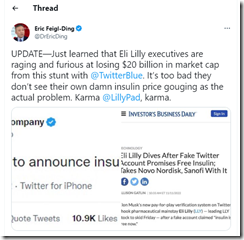

I have no idea if the information captured in this screenshot of a Tweet on the Elon thing is correct. Figure it out seems to be the optimal way to deal with fake information.

My hunch is that the text is tough to read. In a nutshell, a “stunt” tweet knocked $20 billion off the market cap of the ethical and estimable firm Eli Lilly.

The item I spotted was attributed to an entity identified as Eric Feigl-Ding [blue check]. I am not sure what a blue check means.

One of my Arnold Laws of Online says, “Information has impact.” My thought is that the link among the $20 billion, the alleged lawsuit, and the power of a digital message seems obvious.

Does this suggest that the dinobaby approach to curated information within an editorial structure is a useful business process? Elon, what’s your take?

Stephen E Arnold, November 14, 2022

What Will the Twitter Dependent Do Now?

November 7, 2022

Here’s a question comparable to Roger Penrose’s, Michio Kaku’s, and Sabine Hossenfelder’s discussion of the multiverse. (One would think that the Institute of Art and Ideas could figure out sound, but that puts high-flying discussions in a context, doesn’t it?)

What will the Twitter dependent do now?

Since I am not Twitter dependent nor Twitter curious (twi-curious, perhaps?), I find the artifacts of Muskism interesting to examine. Let’s take one example; specifically, “Twitter, Cut in Half.” Yikes, castration by email! Not quite like the real thing, but for some, the imagery of chopping off the essence of the tweeter thing is psychologically disturbing.

Consider this statement:

After the layoffs, we asked some of the employees who had been cut what they made of the process. They told us that they had been struck by the cruelty: of ordering people to work around the clock for a week, never speaking to them, then firing them in the middle of the night, no matter what it might mean for an employee’s pregnancy or work visa or basic emotional state. More than anything they were struck by the fact that the world’s richest man, who seems to revel in attention on the platform they had made for him, had not once deigned to speak to them.

Knife cutting a quite vulnerable finger as collateral damage to major carrot chopping. Image by https://www.craiyon.com/

Cruelty. Interesting word. Perhaps it reflects on the author who sees the free amplifier of his thoughts ripped from his warm fingers? The word cut keeps the metaphor consistent: Cutting the cord, cutting the umbilical, and cutting the unmentionables. Ouch! No wonder some babies scream when slicing and cleaving ensue. Ouch ouch.

Then the law:

whether they were laid off or not, several employees we’ve spoken to say they are hiring attorneys. They anticipate difficulties getting their full severance payments, among other issues. Tensions are running high.

The flocking of the legal eagles will cut off the bright white light of twitterdom. The shadows flicker awaiting the legal LEDs to shine and light the path to justice in free and easy short messages to one’s followers. Yes, the law versus the Elon.

So what’s left of the Fail Whale’s short messaging system and its functions designed to make “real” information available on a wide range of subjects? The write up reports:

It was grim. It was also, in any number of ways, pointless: there had been no reason to do any of this, to do it this way, to trample so carelessly over the lives and livelihoods of so many people.

Was it pointless? I am hopeful that Twitter goes away. The alternatives could spit out a comparable outfit. Time will reveal if those who must tweet will find another easy, cheap way to promote specific ideas, build a rock star like following, and provide a stage for performers who do more than tell jokes and chirp.

Several observations:

- A scramble for other ways to find, build, and keep a loyal following is underway. Will it be the China-linked TikTok? Will it be the gamer-centric Discord? Will it be a ghost web service following the Telegram model?

- Fear is perched on the shoulder of the Twitter dependent celebrity. What worked for Kim has worked for less well known “stars.” Those stars may wonder how the Elon volcano could ruin more of their digital constructs.

- Fame chasers find that the information highway now offers smaller, less well traveled digital paths. Forget the two roads in the datasphere. The choices are difficult, time consuming to master, and may lead to dead ends or crashes on the information highway’s collector lanes.

Net net: Change is afoot. Just watch out for smart automobiles with some Elon inside.

Stephen E Arnold, November 7, 2022

Will the Musker Keep Amplification in Mind?

November 4, 2022

In its ongoing examination of misinformation online, the New York Times tells us about the Integrity Institute’s quest to measure just how much social media contributes to the problem in, “How Social Media Amplifies Misinformation More than Information.” Reporter Steven Lee Myers writes:

“It is well known that social media amplifies misinformation and other harmful content. The Integrity Institute, an advocacy group, is now trying to measure exactly how much — and on Thursday [October 13] it began publishing results that it plans to update each week through the midterm elections on Nov. 8. The institute’s initial report, posted online, found that a ‘well-crafted lie’ will get more engagements than typical, truthful content and that some features of social media sites and their algorithms contribute to the spread of misinformation.”

In its ongoing investigation, the researchers compare the circulation of posts flagged as false by the International Fact-Checking Network to that of other posts from the same accounts. We learn:

“Twitter, the analysis showed, has what the institute called the great misinformation amplification factor, in large part because of its feature allowing people to share, or ‘retweet,’ posts easily. It was followed by TikTok, the Chinese-owned video site, which uses machine-learning models to predict engagement and make recommendations to users. … Facebook, according to the sample that the institute has studied so far, had the most instances of misinformation but amplified such claims to a lesser degree, in part because sharing posts requires more steps. But some of its newer features are more prone to amplify misinformation, the institute found.”

Facebook’s video content spread lies faster than the rest of the platform, we learn, because its features lean more heavily on recommendation algorithms. Instagram showed the lowest amplification rate, while the team did not yet have enough data on YouTube to draw a conclusion. It will be interesting to see how these amplifications do or do not change as the midterms approach. The Integrity Institute shares its findings here.

Cynthia Murrell, November 4, 2022

Threats of a Digital Death: Legal Eagles, Pay Attention

November 1, 2022

I read “Twitter Users Plot Revenge on Elon Musk by Killing the Platform.” I don’t have a dog (real or a digital Zuck confection) in this fight. Frankly I never thought that individuals would admit that their actions harmed a commercial enterprise. Nor did I think these individuals would use their Twitter handles and the tweeter system to organize a group action to harm the Fail Whale. Plus, these people displayed their photographs and probably have profiles available online. (How difficult will it be for some to identify these actors and provide that information to a legal eagle known to chase ambulances?)

The write up states:

…tweeters are conspiring to help Twitter suffer a similar fate by sh*tposting and furry-frying Musk.

“Furry-frying?” Sounds interesting. Will there be a TikTok video?

There are illustrative tweets about what to do and how to accomplish the goal of causing the tweeter to die while trying to get aloft.

Remarkable information if accurate. I wonder if social media analytics systems can pinpoint these actors and take action; for example, emailing tweets and “personas” to a musky law firm?

My hunch is that this idea may occur to someone on the Musk team.

Stephen E Arnold, November 1, 2022