Synthetic Data: The Future Because Real Data Is Too Inefficient

January 28, 2022

One of the biggest problems with AI advancement is the lack of comprehensive datasets. AI algorithms use datasets to learn how to interpret and understand information. The lack of datasets has resulted in biased aka faulty algorithms. The most notorious examples are “racist” photo recognition or light sensitivity algorithms that are unable to distinguish dark skin tones. VentureBeat shares that a new niche market has sprung up: “Synthetic Data Platform Mostly AI Lands $25M.”

Mostly AI is an Austrian startup that specializes in synthetic data for AI model testing and training. The company recently secured $25 million in funding from Molten Ventures and plans to use the money to accelerate the industry. Mostly AI plans to hire more employees, create unbiased algorithms, and increase its presence in Europe and North America.

It is difficult for AI developers to round up comprehensive datasets because of privacy concerns. There are tons of data available for AI to learn from, but the data might not be anonymous and could be biased from the get-go.

Mostly AI simulates real datasets by replicating the information for data value chains but removing the personal data points. The synthetic data is described as “good as the real thing” without violating privacy laws. The synthetic data algorithm works like other algorithms:

“The solution works by leveraging a state-of-the-art generative deep neural network with an in-built privacy mechanism. It learns valuable statistical patterns, structures, and variations from the original data and recreates these patterns using a population of fictional characters to give out a synthetic copy that is privacy compliant, de-biased, and just as useful as the original dataset – reflecting behaviors and patterns with up to 99% accuracy.”
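To make the quoted description concrete, here is a deliberately tiny sketch of the general idea: learn summary statistics from real records, then sample fictional records from them. It is not Mostly AI's method, which the quote describes as a generative deep neural network. The column meanings, the toy records, and the Gaussian assumption are all invented for illustration.

```python
import math
import random

# Hypothetical "real" records: (age, annual_income). Invented for illustration.
REAL = [(23, 31000), (35, 52000), (41, 61000), (29, 40000),
        (52, 83000), (47, 70000), (38, 58000), (60, 90000)]

def fit(rows):
    """Learn means, standard deviations, and the correlation of two columns."""
    n = len(rows)
    xs = [r[0] for r in rows]
    ys = [r[1] for r in rows]
    mx, my = sum(xs) / n, sum(ys) / n
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs) / (n - 1))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys) / (n - 1))
    cov = sum((x - mx) * (y - my) for x, y in rows) / (n - 1)
    return {"mx": mx, "my": my, "sx": sx, "sy": sy, "rho": cov / (sx * sy)}

def sample(model, count, rng):
    """Draw fictional rows from a bivariate Gaussian with the learned statistics."""
    out = []
    for _ in range(count):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = model["mx"] + model["sx"] * z1
        # Mix z1 into the second column so the learned correlation is preserved.
        y = model["my"] + model["sy"] * (
            model["rho"] * z1 + math.sqrt(1 - model["rho"] ** 2) * z2)
        out.append((x, y))
    return out

model = fit(REAL)
synthetic = sample(model, 5000, random.Random(0))
refit = fit(synthetic)
# The two correlations should be close: the pattern survives, the people do not.
print(round(model["rho"], 2), round(refit["rho"], 2))
```

The sketch has no privacy mechanism of the kind the quote mentions. It shows only the fit-then-sample step, and it would miss any pattern that a mean, a spread, and a correlation cannot express.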

Mostly AI states that their platform also accelerates the time it takes to access the datasets. They claim their technology reduces the wait time by 90%.

Demands for synthetic data are growing as the AI industry burgeons and there is a need for information to advance the technology. Efficient, acceptable error rates, objective methods: What could go wrong?

Whitney Grace, January 27, 2022

Facebook and Synthetic Data

October 13, 2021

What’s Facebook thinking about its data future?

A partial answer may be that the company is doing some contingency planning. When regulators figure out how to trim Facebook’s data hoovering, the company may have less primary data to mine, refine, and leverage.

The solution?

Synthetic data. The jargon means annotated data that computer simulations output. Run the model. Fiddle with the thresholds. Get good enough data.

How does one get a signal about Facebook’s interest in synthetic data?

According to VentureBeat, Facebook, the responsible social media company, acquired AI.Reverie.

Was this a straightforward deal? Sure, just via a Facebook entity called Dolores Acquisition Sub, Inc. If this sounds familiar, the social media leader may have taken the name from a motion picture called “Westworld.”

The write up states:

AI.Reverie — which competed with startups like Tonic, Delphix, Mostly AI, Hazy, Gretel.ai, and Cvedia, among others — has a long history of military and defense contracts. In 2019, the company announced a strategic alliance with Booz Allen Hamilton with the introduction of Modzy at Nvidia’s GTC DC conference. Through Modzy — a platform for managing and deploying AI models — AI.Reverie launched a weapons detection model that ostensibly could spot ammunition, explosives, artillery, firearms, missiles, and blades from “multiple perspectives.”

Booz, Allen may be kicking its weaker partners. Perhaps the wizards at the consulting firm should have purchased AI.Reverie. But Facebook aced out the century old other people’s business outfit. (Note: I used to labor in the BAH vineyards, and I feel sorry for the individuals who were not enthusiastic about acquiring AI.Reverie. Where did that bonus go?)

Several observations are warranted:

- Synthetic data is the ideal dating partner for Snorkel-type machine learning systems

- Some researchers believe that real data is better than synthetic data, but that is a fight like spats between those who love Windows and those who love Mac OSX

- The uptake of “good” enough data for smart statistical systems which aim for 60 percent or better “accuracy” appears to be a mini trend.

Worth watching?

Stephen E Arnold, October 13, 2021

Synthetic Datasets: Reality Bytes

February 5, 2017

Years ago I did a project for an outfit specializing in an esoteric math space based on mereology. No, I won’t define it. You can check out the explanation in the Stanford Encyclopedia of Philosophy. The idea is that sparse information can yield useful insights. Even better, if mathematical methods were used to populate missing cells in a data system, one could analyze the data as if it were more than probability generated items. Then when real time data arrived to populate the sparse cells, the probability component would generate revised data for the cells without data. Nifty idea, just tough to explain to outfits struggling to move freight or sell off lease autos.
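The populate-then-revise loop is easier to see in a toy. The sketch below is an assumption-laden stand-in, not the company’s method: the route names are hypothetical, and a plain average of the observed cells stands in for whatever probability machinery the real system used.

```python
# Hypothetical sparse table: None marks a cell with no real observation.
cells = {"route_a": 12.0, "route_b": None, "route_c": 15.0, "route_d": None}

def estimate_missing(table):
    """Return a copy in which empty cells hold the mean of the observed cells."""
    observed = [v for v in table.values() if v is not None]
    fill = sum(observed) / len(observed)
    return {k: (v if v is not None else fill) for k, v in table.items()}

def update(table, key, value):
    """Record a real observation; estimates are recomputed on the next call."""
    revised = dict(table)
    revised[key] = value
    return revised

before = estimate_missing(cells)          # route_b and route_d are estimated
cells = update(cells, "route_b", 21.0)    # real time data arrives for route_b
after = estimate_missing(cells)           # route_d is re-estimated from three real cells
print(before["route_d"], after["route_d"])
```

The point of the toy is the second call: one real value arriving for one cell changes the generated value in a different, still empty cell.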

I thought of this company’s software system when I read “Synthetic Datasets Are a Game Changer.” Once again youthful wizards happily invent the future even though some of the systems and methods have been around for decades. For more information about the approach, the journal articles and books of Dr. Zbigniew Michalewicz may be helpful.

The “Synthetic Datasets…” write up triggered some yellow highlighter activity. I found this statement interesting:

Google researchers went as far as to say that even mediocre algorithms received state-of-the-art results given enough data.

The idea is that algorithms can output “good enough” results when volumes of data are available to the number munching algorithms.

I also noted:

there are recent successes using a new technique called ‘synthetic datasets’ that could see us overcome those limitations. This new type of dataset consists of images and videos that are solely rendered by computers based on various parameters or scenarios. The process through which those datasets are created fall into 2 categories: Photo realistic rendering and Scenario rendering for lack of better description.

The focus here is not on figuring out how to move nuclear fuel rods around a reactor core or adjusting coal fired power plant outputs to minimize air pollution. The synthetic databases have an application in image related disciplines.

The idea of using rendering engines to create images for facial recognition or for video games is interesting. The write up mentions a number of companies pushing forward in this field; for example, Cvedia.

However, the use of NuTech’s methods populated databases of fact. I think the use of synthetic methods has a bright future. Oh, NuTech was acquired by Netezza. Guess what company owns the prescient NuTech Solutions’ technology? Give up? IBM, a company which has potent capabilities but does the most unusual things with those important systems and methods.

I suppose that is one reason why old wine looks like new IBM Holiday Spirit rum.

Stephen E Arnold, February 5, 2017

Google Synthetic Content Scaffolding

September 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Google posted what I think is an important technical paper on the arXiv service. The write up is “Towards Realistic Synthetic User-Generated Content: A Scaffolding Approach to Generating Online Discussions.” The paper has six authors and presumably has the grade of “A”, a mark not awarded to the stochastic parrot write up about Google-type smart software.

For several years, Google has been exploring ways to make software that would produce content suitable for different use cases. One of these has been an effort to use transformer and other technology to produce synthetic data. The idea is that a set of real data is mimicked by AI so that “real” data does not have to be acquired, intercepted, captured, or scraped from systems in the real-time, highly litigious real world. I am not going to slog through the history of smart software and the research and application of synthetic data. If you are curious, check out Snorkel and the work of the Stanford Artificial Intelligence Lab or SAIL.

The paper I referenced above illustrates that Google is “close” to having a system which can generate allegedly realistic and good enough outputs to simulate the interaction of actual human beings in an online discussion group. I urge you to read the paper, not just the abstract.

Consider this diagram (which I know is impossible to read in this blog format so you will need the PDF of the cited write up):

The important point is that the process for creating synthetic “human” online discussions requires a series of steps. Notice that the final step is “fine tuned.” Why is this important? Most smart software is “tuned” or “calibrated” so that the signals generated by a non-synthetic content set are made to be “close enough” to the synthetic content set. In simpler terms, smart software is steered or shaped to match signals. When the match is “good enough,” the smart software is good enough to be deployed either for a test, a research project, or some use case.

Most of the AI write ups employ steering, directing, massaging, or weaponizing (yes, weaponizing) outputs to achieve an objective. Many jobs will be replaced or supplemented with AI. But the jobs for specialists who can curve fit smart software components to produce “good enough” content to achieve a goal or objective will remain in demand for the foreseeable future.

The paper states in its conclusion:

While these results are promising, this work represents an initial attempt at synthetic discussion thread generation, and there remain numerous avenues for future research. This includes potentially identifying other ways to explicitly encode thread structure, which proved particularly valuable in our results, on top of determining optimal approaches for designing prompts and both the number and type of examples used.

The write up is a preliminary report. It takes months to get data and approvals for this type of public document. How far has Google come between the idea to write up results and this document becoming available on August 15, 2024? My hunch is that Google has come a long way.

What’s the use case for this project? I will let younger, more optimistic minds answer this question. I am a dinobaby, and I have been around long enough to know a potent tool when I encounter one.

Stephen E Arnold, September 3, 2024

Old Problem, New Consequences: AI and Data Quality

August 6, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Grab a business card from several years ago. Just for laughs send an email to the address on the card or dial one of the numbers printed on it. What happens? Does the email bounce? Does the person you called answer? In my experience, the business cards I gathered at conferences in 2021 are useless. The number rings in space or a non-human voice says, “The number has been disconnected.” The emails go into a black hole. Based on my experience, I would peg the share of the 100 random cards I had one of my colleagues pull from the files that still work at fewer than 30 percent. In 24 months, 70 percent of the data are invalid. An optimist would say, “You have 30 people you can contact.” A pessimist would say, “Wow, you lost 70 contacts.” A 20-something whiz kid at one of the big time AI companies would say, “Good enough.”
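For those who like the arithmetic spelled out, here is a back-of-the-envelope sketch. It takes the 30 percent and 24 month figures from the paragraph above and adds one assumption that is mine, not a measurement: contacts go bad at a constant monthly rate.

```python
import math

surviving = 0.30   # share of contacts still valid (the estimate above)
months = 24        # elapsed time used above

# Assumption: a constant monthly loss rate r, so surviving = (1 - r) ** months.
monthly_loss = 1 - surviving ** (1 / months)
# Months until half of the contacts are invalid under the same assumption.
half_life = math.log(0.5) / math.log(1 - monthly_loss)

print(f"{monthly_loss:.1%} lost per month, half gone in {half_life:.1f} months")
```

Under that assumption the loss works out to a bit under 5 percent a month, which means half the cards are dead in a little over a year.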

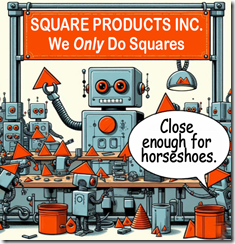

An automated data factory purports to manufacture squares. What does it do? Triangles are good enough and close enough for horseshoes. Does the factory call the triangles squares? Of course, it does. Thanks, MSFT Copilot. Security is Job One today I hear.

I read “Data Quality: The Unseen Villain of Machine Learning.” The write up states:

Too often, data scientists are the people hired to “build machine learning models and analyze data,” but bad data prevents them from doing anything of the sort. Organizations put so much effort and attention into getting access to this data, but nobody thinks to check if the data going “in” to the model is usable. If the input data is flawed, the output models and analyses will be too.

Okay, that’s a reasonable statement. But this passage strikes me as a bit orthogonal to the observations I have made:

It is estimated that data scientists spend between 60 and 80 percent of their time ensuring data is cleansed, in order for their project outcomes to be reliable. This cleaning process can involve guessing the meaning of data and inferring gaps, and they may inadvertently discard potentially valuable data from their models. The outcome is frustrating and inefficient as this dirty data prevents data scientists from doing the valuable part of their job: solving business problems. This massive, often invisible cost slows projects and reduces their outcomes.

The painful reality, in my experience, consists of three factors:

- Data quality depends on the knowledge and resources available to a subject matter expert. A data quality expert might define quality as consistent data; that is, the name field has a name. The SME figures out if the data are in line with other data and what is off base.

- The time required to “ensure” data quality is rarely available. There are interruptions, Zooms, and automated calendars that ping a person for a meeting. Data quality is easily killed by time suffocation.

- The volume of data begs for automated procedures and, of course, AI. The problem is that the range of errors related to validity is sufficiently broad to allow “flawed” data to enter a system. Good enough creates interesting consequences.
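The gap between consistent and valid in the three factors above can be sketched as a small pool of automated checks. Everything here is hypothetical: the field names, the records, and the two year staleness threshold are invented for illustration, and a real pool of checks would be far larger.

```python
from datetime import date

# Hypothetical contact records; field names are invented for illustration.
records = [
    {"name": "A. Smith", "email": "a.smith@example.com", "verified": date(2021, 6, 1)},
    {"name": "", "email": "nobody@example.com", "verified": date(2024, 5, 1)},
    {"name": "B. Jones", "email": "b.jones-at-example.com", "verified": date(2024, 7, 1)},
]

CHECKS = {}  # the growing pool of known problems: check name -> check function

def check(fn):
    """Register a check; each check returns True when the record passes."""
    CHECKS[fn.__name__] = fn
    return fn

@check
def name_present(rec, today):
    return bool(rec["name"].strip())

@check
def email_has_at_sign(rec, today):
    return "@" in rec["email"]

@check
def verified_within_two_years(rec, today):
    # Format checks cannot see staleness; this one is a rough proxy for validity.
    return (today - rec["verified"]).days <= 730

def audit(rows, today):
    """Return, for each row index, the names of the checks it fails."""
    return {i: [n for n, fn in CHECKS.items() if not fn(r, today)]
            for i, r in enumerate(rows)}

report = audit(records, today=date(2024, 8, 6))
print(report)
```

The first record passes both format checks and fails only on age. That is the trap: a name field with a name in it is consistent, but it takes something closer to an SME’s judgment, here crudely approximated by a date, to flag the record as probably wrong.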

The write up says:

Data quality shouldn’t be a case of waiting for an issue to occur in production and then scrambling to fix it. Data should be constantly tested, wherever it lives, against an ever-expanding pool of known problems. All stakeholders should contribute and all data must have clear, well-defined data owners. So, when a data scientist is asked what they do, they can finally say: build machine learning models and analyze data.

This statement makes clear why flawed data remain flawed. The fix, according to some, is synthetic data. Are these data of high quality? It depends on what one means by “quality.” Today the benchmark is good enough. Good enough produces outputs that are not. But who knows? Not the harried person looking for something, anything, to put in a presentation, journal article, or podcast.

Stephen E Arnold, August 6, 2024

Market Research Shortcut: Fake Users Creating Fake Data

July 10, 2024

Market research can be complex and time consuming. It would save so much time if one could consolidate thousands of potential respondents into one model. A young AI firm offers exactly that, we learn from Nielsen Norman Group’s article, “Synthetic Users: If, When, and How to Use AI Generated ‘Research.’”

But are the results accurate? Not so much, according to writers Maria Rosala and Kate Moran. The pair tested fake users from the young firm Synthetic Users and ones they created using ChatGPT. They compared responses to sample questions from both real and fake humans. Each group gave markedly different responses. The write-up notes:

“The large discrepancy between what real and synthetic users told us in these two examples is due to two factors:

- Human behavior is complex and context-dependent. Synthetic users miss this complexity. The synthetic users generated across multiple studies seem one-dimensional. They feel like a flat approximation of the experiences of tens of thousands of people, because they are.

- Responses are based on training data that you can’t control. Even though there may be proof that something is good for you, it doesn’t mean that you’ll use it. In the discussion-forum example, there’s a lot of academic literature on the benefits of discussion forums on online learning and it is possible that the AI has based its response on it. However, that does not make it an accurate representation of real humans who use those products.”

That seems obvious to us, but apparently some people need to be told. The lure of fast and easy results is strong. See the article for more observations. Here are a couple worth noting:

“Real people care about some things more than others. Synthetic users seem to care about everything. This is not helpful for feature prioritization or persona creation. In addition, the factors are too shallow to be useful.”

Also:

“Some UX [user experience] and product professionals are turning to synthetic users to validate product concepts or solution ideas. Synthetic Users offers the ability to run a concept test: you describe a potential solution and have your synthetic users respond to it. This is incredibly risky. (Validating concepts in this way is risky even with human participants, but even worse with AI.) Since AI loves to please, every idea is often seen as a good one.”

So as appealing as this shortcut may be, it is a fast track to incorrect results. Basing business decisions on “insights” from shallow, eager-to-please algorithms is unwise. The authors interviewed Synthetic Users’ cofounder Hugo Alves. He acknowledged the tools should only be used as a supplement to surveys of actual humans. However, the post points out, the company’s website seems to imply otherwise: it promises “User research. Without the users.” That is misleading, at best.

Cynthia Murrell, July 10, 2024

School Technology: Making Up Performance Data for Years

February 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

What is the “make up data” trend? Why is it plaguing educational institutions? From Harvard to Stanford, those who are entrusted with shaping young-in-spirit minds are putting ethical behavior in the trash can. I think I know, but let’s look at allegations of another “synthetic” information event. For context, in the UK there is a government agency called the Office for Standards in Education, Children’s Services and Skills. The agency is called OFSTED. Now let’s go to the “real” news story.

A possible scene outside of a prestigious academic institution when regulations about data become enforceable… give it a decade or two. Thanks, MidJourney. Two tries and a good enough illustration.

“Ofsted Inspectors Make Up Evidence about a School’s Performance When IT Fails” reports:

Ofsted inspectors have been forced to “make up” evidence because the computer system they use to record inspections sometimes crashes, wiping all the data…

Quite a combo: Information technology and inventing data.

The article adds:

…inspectors have to replace those notes from memory without telling the school.

Will the method work for postal investigations? Sure. Can it be extended to other activities? What about data pertinent to the UK government initiatives for smart software?

Stephen E Arnold, February 9, 2024

A Xoogler Explains Why Big Data Is Going Nowhere Fast

March 3, 2023

I read the essay “Big Data Is Dead.” One of my essays from the Stone Age of Online used the title “Search Is Dead” so I am familiar with the trope. In a few words, one can surprise. Dead. Final. Absolute, well, maybe. On the other hand, the subject, either Big Data or Search, is part of the woodwork in the mini-camper of life.

I found this statement interesting:

Modern cloud data platforms all separate storage and compute, which means that customers are not tied to a single form factor. This, more than scale out, is likely the single most important change in data architectures in the last 20 years.

The cloud is the future. I recall seeing price analyses of some companies’ cloud activities; for example, “The Cloud vs. On-Premise Cost: Which One is Cheaper?” In my experience, cloud computing was pitched as better, faster, and cheaper. Toss in the idea that one can get rid of pesky full time systems personnel, and the cloud is a win.

What the cloud means is exactly what the quoted sentence says, “customers are not tied to a single form factor.” Does this mean that the Big Data rah rah combined with the sales pitch for moving to the cloud will set the stage for more hybrid setups or a return to on premises computing? Storage could become a combination of on premises and cloud based solutions. The driver, in my opinion, will be cost. And one thing the essay about Big Data does not dwell on is the importance of cost in the present economic environment.

The arguments for small data or subsets of Big Data are accurate. My reading of the essay is that some data will become a problem: Privacy, security, legal, political, whatever. The essay is, in effect, an explanation for “synthetic data.” Google and others want to make statistically-valid, fake data the gold standard for certain types of processes. In the “data are a liability” section of the essay, I noted:

Data can suffer from the same type of problem; that is, people forget the precise meaning of specialized fields, or data problems from the past may have faded from memory.

I wonder if this is a murky recasting of Google’s indifference to “old” data and to date and time stamping. The here and now, not the then and past, are under the surface of the essay. I am surprised the notion of “forward forward” analysis did not warrant a mention. Outfits like Google want to do look ahead prediction in order to deal with inputs newer than what is in the previous set of values.

You may read the essay and come away with a different interpretation. For me, this is the type of analysis characteristic of a Googler, not a Xoogler. If I am correct, why doesn’t the essay hit the big ideas about cost and synthetic data directly?

Stephen E Arnold, March 3, 2023

How about This Intelligence Blindspot: Poisoned Data for Smart Software

February 23, 2023

One of the authors is a Googler. I think this is important because the Google is into synthetic data; that is, machine generated information for training large language models or what I cynically refer to as “smart software.”

The article / maybe reproducible research is “Poisoning Web Scale Datasets Is Practical.” Nine authors, of whom four are Googlers, have concluded that a bad actor, government, rich outfit, or crafty students in Computer Science 301 can inject information into content destined to be used for training. How can this be accomplished? The answer is either by humans, ChatGPT outputs from an engineered query, or a combination. Why would someone want to “poison” Web accessible or thinly veiled commercial datasets? Gee, I don’t know. Oh, wait, how about control information and framing of issues? Nah, who would want to do that?

The paper’s authors conclude with more than one-third of that Google goodness. No, wait. There are no conclusions. Also, there are no end notes. What there is: a road map explaining the mechanism for poisoning.

One key point for me is the question, “How is poisoning related to the use of synthetic data?”

My hunch is that synthetic data are more easily manipulated than going through the hoops to poison publicly accessible data. That’s time and resource intensive. The synthetic data angle makes it more difficult to identify the type of manipulations in the generation of a synthetic data set which could be mingled with “live” or allegedly-real data.

Net net: Open source information and intelligence may have a blindspot because it is not easy to determine what’s right, accurate, appropriate, correct, or factual. Are there implications for smart machine analysis of digital information? Yep, in my opinion already flawed systems will be less reliable and the users may not know why.

Stephen E Arnold, February 23, 2023

Synthetic Content: A Challenge with No Easy Answer

January 30, 2023

Open source intelligence is the go-to method for many crime analysts, investigators, and intelligence professionals. Whether social media or third-party data from marketing companies, useful insights can be obtained. The upside of OSINT means that many of its supporters downplay or choose to sidestep its downsides. I call this “OSINT blindspots”, and each day I see more information about what is becoming a challenge.

For example, “As Deepfakes Flourish, Countries Struggle with Response” is a useful summary of one problem posed by synthetic (fake) content. What looks “real” may not be. A person sifting through data assumes that information is suspect. Verification is needed. But synthetic data can output multiple instances of fake information and then populate channels with “verification” statements of the initial item of information.

The article states:

Deepfake technology — software that allows people to swap faces, voices and other characteristics to create digital forgeries — has been used in recent years to make a synthetic substitute of Elon Musk that shilled a crypto currency scam, to digitally “undress” more than 100,000 women on Telegram and to steal millions of dollars from companies by mimicking their executives’ voices on the phone. In most of the world, authorities can’t do much about it. Even as the software grows more sophisticated and accessible, few laws exist to manage its spread.

For some government professionals, the article says:

problematic applications are also plentiful. Legal experts worry that deepfakes could be misused to erode trust in surveillance videos, body cameras and other evidence. (A doctored recording submitted in a British child custody case in 2019 appeared to show a parent making violent threats, according to the parent’s lawyer.) Digital forgeries could discredit or incite violence against police officers, or send them on wild goose chases. The Department of Homeland Security has also identified risks including cyber bullying, blackmail, stock manipulation and political instability.

The most interesting statement in the essay, in my opinion, is this one:

Some experts predict that as much as 90 per cent of online content could be synthetically generated within a few years.

The number may overstate what will happen because no one knows the uptake of smart software and the applications to which the technology will be put.

Thinking in terms of OSINT blindspots, there are some interesting angles to consider:

- Assume the write up is correct and 90 percent of content is authored by smart software, how does a person or system determine accuracy? What happens when a self learning system learns from itself?

- How does a human determine what is correct or incorrect? Education appears to be struggling to teach basic skills. What about journals with non reproducible results which spawn volumes of synthetic information about flawed research? Is a person, even one with training in a narrow discipline, able to determine “right” or “wrong” in a digital environment?

- Are institutions like libraries being further marginalized? The machine generated content will exceed a library’s capacity to acquire certain types of information. Does one acquire books which are “right” when machine generated content produces information that shouts “wrong”?

- What happens to automated sense making systems which have been engineered on the often flawed assumption that available data and information are correct?

Perhaps an OSINT blind spot is a precursor to going blind, unsighted, or dark?

Stephen E Arnold, January 30, 2023