You Do Not Search. You Insight.

April 12, 2017

I am delighted, thrilled. I read “Coveo, Microsoft, Sinequa Lead Insight Engine Market.” What a transformation is captured in what looks to me like a content marketing write up. Key word search morphs into “insight.” For folks who do not follow the history of enterprise search with the fanaticism of those involved in baseball statistics, the use of the word “insight” to describe locating a document is irrelevant. Do you search or insight?

For me, hunkered down in rural Kentucky, with my monitors flickering in the intellectual darkness of Kentucky, the use of the word “insight” is a linguistic singularity. Maybe not on the scale of an earthquake in Italy or a banker leaping from his apartment to the Manhattan asphalt, but a historical moment nevertheless.

Let me recap some of my perceptions of the three companies mentioned in the headline to this tsunami of jargon in the Datanami story:

- Coveo is a company which developed a search and retrieval system focused on Windows. With some marketing magic, the company explained keyword search as customer support, then Big data, and now this new thing, “insight”. For those who track vendor history, the roots of Coveo reach back to a consumer interface which was designed to make search easy. Remember Copernic. Yep, Coveo has been around a long while.

- Sinequa also was a search vendor. Like Exalead and Polyspot and other French search vendors, the company wanted manage data, provide federation, and enable workflows. After a president change and some executive shuffling, Sinequa emerged as a Big Data outfit with a core competency in analytics. Quite a change. How similar is Sinequa to enterprise search? Pretty similar.

- Microsoft. I enjoyed the “saved by the bell” deal in 2008 which delivered the “work in progress” Fast Search & Transfer enterprise search system to Redmond. Fast Search was one of the first search vendors to combine fast-flying jargon with a bit of sales magic. Despite the financial meltdown and an investigation of the Fast Search financials, Microsoft ponied up $1.2 billion and reinvented SharePoint search. Well, not exactly reinvented, but SharePoint is a giant hairball of content management, collaboration, business “intelligence” and, of course, search. Here’s a user friendly chart to help you grasp SharePoint search.

Flash forward to this Datanami article and what do I learn? Here’s a paragraph I noted with a smiley face and an exclamation point:

Among the areas where natural language processing is making inroads is so-called “insight engines” that are projected to account for half of analytic queries by 2019. Indeed, enterprise search is being supplanted by voice and automated voice commands, according to Gartner Inc. The market analyst released it latest “Magic Quadrant” rankings in late March that include a trio of “market leaders” along with a growing list of challengers that includes established vendors moving into the nascent market along with a batch of dedicated startups.

There you go. A trio like ZZTop with number one hits? Hardly. A consulting firm’s “magic” plucks these three companies from a chicken farm and gives each a blue ribbon. Even though we have chickens in our backyard, I cannot tell one from another. Subjectivity, not objectivity, applies to picking good chickens, and it seems to be what New York consulting firms do too.

Are the “scores” for the objective evaluations based on company revenue? No.

Return on investment? No.

Patents? No.

IRR? No. No. No.

Number of flagship customers like Amazon, Apple, and Google type companies? No.

The ranking is based on “vision.” And another key factor is “the ability to execute its “strategy.” There you go. A vision is what I want to help me make my way through Kabul. I need a strategy beyond stay alive.

What would I do if I have to index content in an enterprise? My answer may surprise you. I would take out my check book and license these systems.

- Palantir Technologies or Centrifuge Systems

- Bitext’s Deep Linguistic Analysis platform

- Recorded Future.

With these three systems I would have:

- The ability to locate an entity, concept, event, or document

- The capability to process content in more than 40 languages, perform subject verb object parsing and entity extraction in near real time

- Point-and-click predictive analytics

- Point-and-click visualization for financial, business, and military warfighting actions

- Numerous programming hooks for integrating other nifty things that I need to achieve an objective such as IBM’s Cybertap capability.

Why is there a logical and factual disconnect between what I would do to deliver real world, high value outputs to my employees and what the New York-Datanami folks recommend?

Well, “disconnect” may not be the right word. Have some search vendors and third party experts embraced the concept of “fake news” or embraced the know how explained in Propaganda, Father Ellul’s important book? Is the idea something along the lines of “we just say anything and people will believe our software will work this way”?

Many vendors stick reasonably close to the factual performance of their software and systems. Let me highlight three examples.

First, Darktrace, a company crafted by Dr. Michael Lynch, is a stickler for explaining what the smart software does. In a recent exchange with Darktrace, I learned that Darktrace’s senior staff bristle when a descriptive write up strays from the actual, verified technical functions of the software system. Anyone who has worked with Dr. Lynch and his senior managers knows that these people can be very persuasive. But when it comes to Darktrace, it is “facts R us”, thank you.

Second, Recorded Future takes a similar hard stand when explaining what the Recorded Future system can and cannot do. Anyone who suggests that Recorded Future predictive analytics can identify the winner of the Kentucky Derby a day before the race will be disabused of that notion by Recorded Future’s engineers. Accuracy is the name of the game at Recorded Future, but accuracy relates to the use of numerical recipes to identify likely events and assign a probability to some events. Even though the company deals with statistical probabilities, adding marketing spice to the predictive system’s capabilities is a no-go zone.

Third, Bitext, the company that offers a Deep Linguistics Analysis platform to improve the performance of a range of artificial intelligence functions, is anchored in facts. On a recent trip to Spain, we interviewed a number of the senior developers at this company and learned that Bitext software works. Furthermore, the professionals are enthusiastic about working for this linguistics-centric outfit because it avoid marketing hyperbole. “Our system works,” said one computational linguist. This person added, “We do magic with computational linguistics and deep linguistic analysis.” I like that—magic. Oh, Bitext does sales too with the likes of Porsche, Volkswagen, and the world’s leading vendor of mobile systems and services, among others. And from Madrid, Spain, no less. And without marketing hyperbole.

Why then are companies based on keyword indexing with a sprinkle of semantics and basic math repositioning themselves by chasing each new spun sugar-encrusted trend?

I have given a tiny bit of thought to this question.

In my monograph “The New Landscape of Search” I made the point that search had become devalued, a free download in open source repositories, and a utility like cat or dir. Most enterprise search systems have failed to deliver results painted in Technicolor in sales presentations and marketing collateral.

Today, if I want search and retrieval, I just use Lucene. In fact, Lucene is more than good enough; it is comparable to most proprietary enterprise search systems. If I need support, I can ring up Elastic or one of many vendors eager to gild the open source lily.

The extreme value and reliability of open source search and retrieval software has, in my opinion, gutted the market for proprietary search and retrieval software. The financial follies of Fast Search & Transfer reminded some investors of the costly failures of Convera, Delphes, Entopia, among others I documented on my Xenky.com site at this link.

Recently most of the news I see on my coal fired computer in Harrod’s Creek about enterprise search has been about repositioning, not innovation. What’s up?

The answer seems to be that the myth cherished by was that enterprise search was the one, true way make sense of digital information. What many organizations learned was that good enough search does the basic blocking and tackling of finding a document but precious little else without massive infusions of time, effort, and resources.

But do enterprise search systems–no matter how many sparkly buzzwords–work? Not too many, no matter what publicly traded consulting firms tell me to believe.

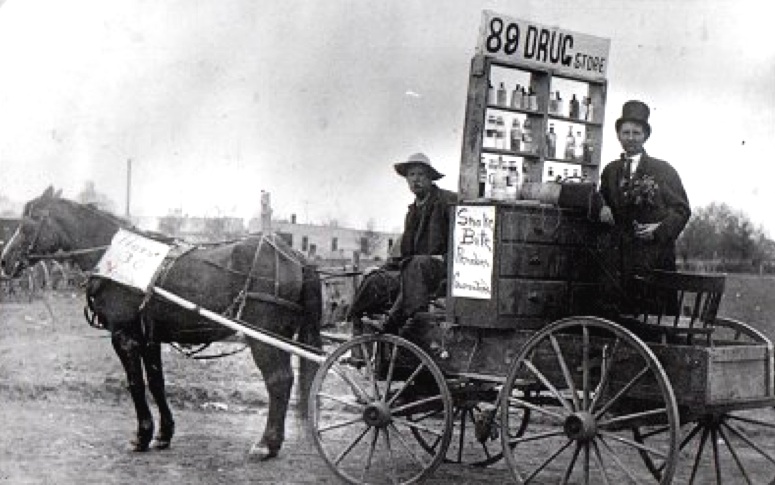

Snake oil? I don’t know. I just know my own experience, and after 45 years of trying to make digital information findable, I avoid fast talkers with covered wagons adorned with slogans.

What happens when an enterprise search system is fed videos, podcasts, telephone intercepts, flows of GPS data, and a couple of proprietary file formats?

Answer: Not much.

The search system has to be equipped with extra cost connectors, assorted oddments, and shimware to deal with a recorded webinar and a companion deck of PowerPoint slides used by the corporate speaker.

What happens when the content stream includes email and documents in six, 12, or 24 different languages?

Answer: Mad scrambling until the proud licensee of an enterprise search system can locate a vendor able to support multiple language inputs. The real life needs of an enterprise are often different from what the proprietary enterprise search system can deal with.

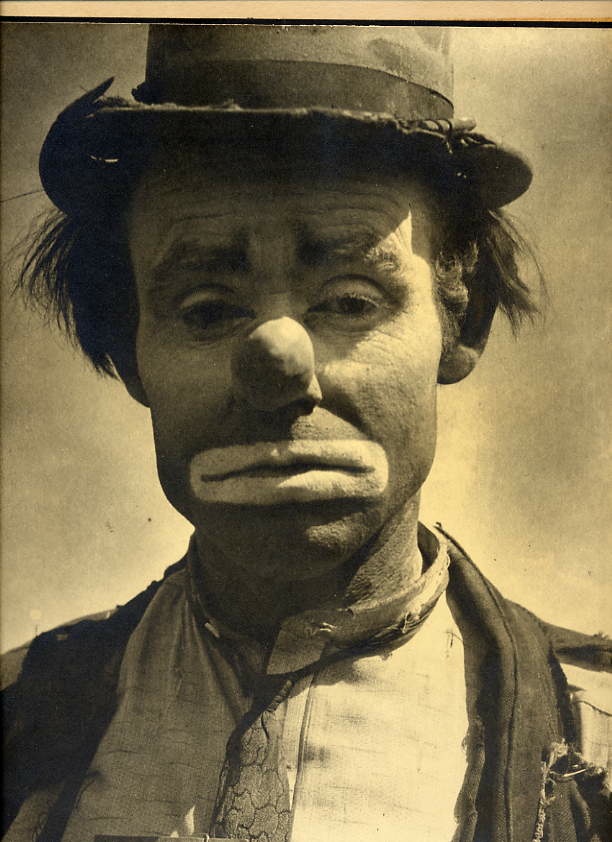

That’s why I find the repositioning of enterprise search technology a bit like a clown with a sad face. The clown is no longer funny. The unconvincing efforts to become something else clash with the sad face, the red nose, and worn shoes still popular in Harrod’s Creek, Kentucky.

When it comes to enterprise search, my litmus test is simple: If a system is keyword centric, it isn’t going to work for some of the real world applications I have encountered.

Oh, and don’t believe me, please.

Find a US special operations professional who relies on Palantir Gotham or IBM Analyst’s Notebook to determine a route through a hostile area. Ask whether a keyword search system or Palantir is more useful. Listen carefully to the answer.

No matter what keyword enthusiasts and quasi-slick New York consultants assert, enterprise search systems are not well suited for a great many real world applications. Heck, enterprise search often has trouble displaying documents which match the user’s query.

And why? Sluggish index updating, lousy indexing, wonky metadata, flawed set up, updates that kill a system, or interfaces that baffle users.

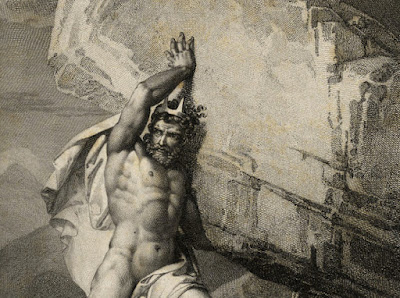

Personally I love to browse results lists. I like old fashioned high school type research too. I like to open documents and Easter egg hunt my way to a document that answers my question. But I am in the minority. Most users expect their finding systems to work without the query-read-click-scan-read-scan-read-scan Sisyphus-emulating slog.

Ah, you are thinking I have offered no court admissible evidence to support my argument, right? Well, just license a proprietary enterprise search system and let me know how your career is progressing. Remember when you look for a new job. You won’t search; you will insight.

Stephen E Arnold, April 12, 2017

Alphabet Google Falls on Its Algorithms

March 24, 2017

Here in Harrod’s Creek, advertising is mostly hand painted signs nailed to telephone poles in front of trailer parks.

Real Advertising in Big Cities Does This

In the LED illuminated big cities, people advertise by:

- Cooking up some keywords that are used to locate products and services like mesothelioma or cheap tickets

- Paying money to the “do no evil” outfit Alphabet Google to put those ads in front of people who are searching (sometimes cluelessly) for a topic related to lung disease or flying to the land of milk and honey for a couple of hundred bucks

- Alphabet Google putting the ads in front of humans (or software robots as the case may be) who will click on the displayed message, banner, or video snippet

- The GOOG collects the money

- The advertiser gets leads

- Repeat the process.

The notion, like digital currencies, is based on trust. Advertisers trust or “believe” that the GOOG’s smart software will recognize a search for Madrid will require an airplane ticket and maybe a hotel. The GOOG’s smart software consults the ads germane to travel and displays a relevant ad in front of the human (or software robot as the case may be).

What happens when the GOOG’s smart software does everything except the relevance part?

The reaction in the non Sillycon Valley business world is easy to spot; for example, here are some examples of the consequences of the reality of what the GOOG does versus what advertisers and other true believers in the gospel of Google collides with faith, trust, and hope:

- USA Today: “AT&T, Other US Advertisers Quit Google, Yo8uiTube over Extremist Videos”. Yikes, outrage and a signal that the online advertising juggernaut has hit a pothole

- Bloomberg: “Google Ad Crisis Spreads as Biggest Marketers Halt Spending.” The word “crisis” is not one usually associated with the Alphabet Google outfit, is it?

- Daily Mail (a fountain of truth): “Google’s Head of Europe Apologizes for Ads on Extremist Content but Furious MP Says Sorry Is Not Enough.” After more than 15 years of doing and apologizing, someone has finally noticed the tactics of the GOOG. Progress.

I could list more stories about this sudden discovery that matching ads to queries is not exactly what some people have believed.

Diffeo Incorporates Meta Search Technology

March 24, 2017

Will search-and-discovery firm Diffeo’s recent acquisition give it the edge? Yahoo Finance shares, “Diffeo Acquires Meta Search and Launches New Offering.” Startup Meta Search developed a local computer and cloud search system that uses smart indexing to assign index terms and keep the terms consistent. Diffeo provides a range of advanced content processing services based on collaborative machine intelligence. The press release specifies:

Diffeo’s content discovery platform accelerates research analysts by applying text analytics and machine intelligence algorithms to users’ in-progress files, so that it can recommend content that fills in knowledge gaps — often before the user thinks of searching. Diffeo acts as a personal research assistant that scours both the user’s files and the Internet. The company describes its technology as collaborative machine intelligence.

Diffeo and Meta’s services complement each other. Meta provides unified search across the content on all of a user’s cloud platforms and devices. Diffeo’s Advanced Discovery Toolbox displays recommendations alongside in-progress documents to accelerate the work of research analysts by uncovering key connections.

Meta’s platform integrates cloud environments into a single keyword search interface, enabling users to search their files on all cloud drives, such as Dropbox, Google Drive, Slack and Evernote all at once. Meta also improves search quality by intelligently analyzing each document, determining the most important concepts, and automatically applying those concepts as ‘Smart Tags’ to the user’s documents.

This seems like a promising combination. Founded in 2012, Diffeo made Meta Search its first acquisition on January 10 of this year. The company is currently hiring. Meta Search, now called Diffeo Cloud Search, is based in Boston.

Cynthia Murrell, March 24, 2017

Is This Our Beloved Google? Ads and Consumer Scams?

March 20, 2017

I admit it. I want to believe everything I read on the Internet. I take this approach to be more in tune with today’s talking heads on US cable TV and the millennials who seem to cross my path like deer unfamiliar with four lane highways.

I read what must be an early April Fool’s joke. The write up’s headline struck me as orthogonal to my perception of the company I know, love, and trust: “Google to Revamp Ad Policies after U.K., Big Brands Boycott.”

The main idea is that someone believes that Google has been indexing terror-related content and placing ads next to those result pages and videos. I learned:

The U.S. company said in a blog post Friday it would give clients more control over where their ads appear on both YouTube, the video-sharing service it owns, and the Google Display Network, which posts advertising to third-party websites. The announcement came after the U.K. government and the Guardian newspaper pulled ads from the video site, stepping up pressure on YouTube to police content on its platform.

Interesting. I thought Google / DeepMind had the hate speech, fake news, and offensive content issue killed, cooked, and eaten.

The notion that Google would buckle under to mere advertisers strikes me as ludicrous. For years, Google has pointed out that confused individuals at Foundem, the government of France, and other information sites misunderstand Google’s squeaky clean approach to figuring out what’s important.

The other item which suggests that the Google in my mind is not the Google in the real world is “Facebook, Twitter, and Google Must Remove Scams or Risk Legal Action, Says EU.”

What’s up? Smart software understands content in context. Algorithms developed by the wizards at Google and other outfits chug along without the silly errors humans make. Google and other companies have to become net nannies. (Hey, that software worked great, didn’t it?)

I learned:

The EU also ordered these social networks to remove fraudulent posts that can mislead consumers.

If these write ups are indeed accurate, I will take down my “Do no evil” poster. Is there a “We do evil” version available? I will check those advertisements on Google.

Stephen E Arnold, March 20, 2017

Search Like Star Trek: The Next Frontier

February 28, 2017

I enjoy the “next frontier”-type article about search and retrieval. Consider “The Next Frontier of Internet and Search,” a write up in the estimable “real” journalism site Huffington Post. As I read the article, I heard “Scotty, give me more power.” I thought I heard 20 somethings shouting, “Aye, aye, captain.”

The write up told me, “Search is an ev3ryday part of our lives.” Yeah, maybe in some demographics and geo-political areas. In others, search is associated with finding food and water. But I get the idea. The author, Gianpiero Lotito of FacilityLive is talking about people with computing devices, an interest in information like finding a pizza, and the wherewithal to pay the fees for zip zip connectivity.

And the future? I learned:

he future of search appears to be in the algorithms behind the technology.

I understand algorithms applied to search and content processing. Since humans are expensive beasties, numerical recipes are definitely the go to way to perform many tasks. For indexing, humans fact checking, curating, and indexing textual information. The math does not work the way some expect when algorithms are applied to images and other rich media. Hey, sorry about that false drop in the face recognition program used by Interpol.

I loved this explanation of keyword search:

The difference among the search types is that: the keyword search only picks out the words that it thinks are relevant; the natural language search is closer to how the human brain processes information; the human language search that we practice is the exact matching between questions and answers as it happens in interactions between human beings.

This is as fascinating as the fake information about Boolean being a probabilistic method. What happened to string matching and good old truncation? The truism about people asking questions is intriguing as well. I wonder how many mobile users ask questions like, “Do manifolds apply to information spaces?” or “What is the chemistry allowing multi-layer ion deposition to take place?”

Yeah, right.

The write up drags in the Internet of Things. Talk to one’s Alexa or one’s thermostat via Google Home. That’s sort of natural language; for example, Alexa, play Elvis.

Here’s the paragraph I highlighted in NLP crazy red:

Ultimately, what the future holds is unknown, as the amount of time that we spend online increases, and technology becomes an innate part of our lives. It is expected that the desktop versions of search engines that we have become accustomed to will start to copy their mobile counterparts by embracing new methods and techniques like the human language search approach, thus providing accurate results. Fortunately these shifts are already being witnessed within the business sphere, and we can expect to see them being offered to the rest of society within a number of years, if not sooner.

Okay. No one knows the future. But we do know the past. There is little indication that mobile search will “copy” desktop search. Desktop search is a bit like digging in an archeological pit on Cyprus: Fun, particularly for the students and maybe a professor or two. For the locals, there often is a different perception of the diggers.

There are shifts in “the business sphere.” Those shifts are toward monopolistic, choice limited solutions. Users of these search systems are unaware of content filtering and lack the training to work around the advertising centric systems.

I will just sit here in Harrod’s Creek and let the future arrive courtesy of a company like FacilityLive, an outfit engaged in changing Internet searching so I can find exactly what I need. Yeah, right.

Stephen E Arnold, February 28, 2017

Forecasting Methods: Detail without Informed Guidance

February 27, 2017

Let’s create a scenario. You are a person trying to figure out how to index a chunk of content. You are working with cancer information sucked down from PubMed or a similar source. You run an extraction process and push the text through an indexing system. You use a system like Leximancer and look at the results. Hmmm.

Next you take a corpus of blog posts dealing with medical information. You suck down the content and run it through your extractor, your indexing system, and your Leximancer set up. You look at the results. Hmmm.

How do you figure out what terms are going to be important for your next batch of mixed content?

You might navigate to “Selecting Forecasting Methods in Data Science.” The write up does a good job of outlining some of the numerical recipes taught in university courses and discussed in textbooks. For example, you can get an overview in this nifty graphic:

And you can review outputs from the different methods identified like this:

Useful.

What’s missing? For the person floundering away like one government agency’s employee at which I worked years ago, you pick the trend line you want. Then you try to plug in the numbers and generate some useful data. If that is too tough, you hire your friendly GSA schedule consultant to do the work for you. Yep, that’s how I ended up looking at:

- Manually selected data

- Lousy controls

- Outputs from different systems

- Misindexed text

- Entities which were not really entities

- A confused government employee.

Here’s the takeaway. Just because software is available to output stuff in a log file and Excel makes it easy to wrangle most of the data into rows and columns, none of the information may be useful, valid, or even in the same ball game.

When one then applies without understanding different forecasting methods, we have an example of how an individual can create a pretty exciting data analysis.

Descriptions of algorithms do not correlate with high value outputs. Data quality, sampling, understanding why curves are “different”, and other annoying details don’t fit into some busy work lives.

Stephen E Arnold, February 27, 2017

Intellisophic / Linkapedia

February 24, 2017

Intellisophic identifies itself as a Linkapedia company. Poking around Linkapedia’s ownership revealed some interesting factoids:

- Linkapedia is funded in part by GITP Ventures and SEMMX (possible a Semper fund)

- The company operates in Hawaii and Pennsylvania

- One of the founders is a monk / Zen master. (Calm is a useful characteristic when trying to spin money from a search machine.)

First, Intellisophic. The company describes itself this way at this link:

Intellisophic is the world’s largest provider of taxonomic content. Unlike other methods for taxonomy development that are limited by the expense of corporate librarians and subject matter experts, Intellisophic content is machine developed, leveraging knowledge from respected reference works. The taxonomies are unbounded by subject coverage and cost significantly less to create. The taxonomy library covers five million topic areas defined by hundreds of millions of terms. Our taxonomy library is constantly growing with the addition of new titles and publishing partners.

In addition, Intellisophic’s technology—Orthogonal Corpus Indexing—can identify concepts in large collections of text. The system can be sued to enrich an existing technology, business intelligence, and search. One angle Intellisophic exploits is its use of reference and educational books. The company is in the “content intelligence” market.

Second, the “parent” of Intellisophic is Linkapedia. This public facing Web site allows a user to run a query and see factoids, links about a topic. Plus, Linkapedia has specialist collections of content bundles; for example, lifestyle, pets, and spirituality. I did some clicking around and found that certain topics were not populated; for instance, Lifestyle, Cars, and Brands. No brand information appeared for me. I stumbled into a lengthy explanation of the privacy policy related to a mathematics discussion group. I backtracked, trying to get access the actual group and failed. I think the idea is an interesting one, but more work is needed. My test query for “enterprise search” presented links to Convera and a number of obscure search related Web sites.

The company is described this way in Crunchbase:

Linkapedia is an interest based advertising platform that enables publishers and advertisers to monetize their traffic, and distribute their content to engaged audiences. As opposed to a plain search engine which delivers what users already know, Linkapedia’s AI algorithms understand the interests of users and helps them discover something new they may like even if they don’t already know to look for it. With Linkapedia content marketers can now add Discovery as a new powerful marketing channel like Search and Social.

Like other search related services, Linkapedia uses smart software. Crunchbase states:

What makes Linkapedia stand out is its AI discovery engine that understands every facet of human knowledge. “There’s always something for you on Linkapedia”. The way the platform works is simple: people discover information by exploring a knowledge directory (map) to find what interests them. Our algorithms show content and native ads precisely tailored to their interests. Linkapedia currently has hundreds of million interest headlines or posts from the worlds most popular sources. The significance of a post is that “someone thought something related to your interest was good enough to be saved or shared at a later time.” The potential of a post is that it is extremely specific to user interests and has been extracted from recognized authorities on millions of topics.

Interesting. Search positioned as indexing, discovery, social, and advertising.

Stephen E Arnold, February 24, 2017

A Famed Author Talks about Semantic Search

February 24, 2017

I read “An Interview with Semantic Search and SEO Expert David Amerland.” Darned fascinating. I enjoyed the content marketing aspect of the write up. I also found the explanation of semantic search intriguing as well.

This is the famed author. Note the biceps and the wrist gizmos.

The background of the “famed author” is, according to the write up:

David Amerland, a chemical engineer turned semantic search and SEO expert, is a famed author, speaker and business journalist. He has been instrumental in helping startups as well as multinational brands like Microsoft, Johnson & Johnson, BOSCH, etc. create their SMM and SEO strategies. Davis writes for high-profile magazines and media organizations such as Forbes, Social Media Today, Imassera and journalism.co.uk. He is also part of the faculty in Rutgers University, and is a strategic advisor for Darebee.com.

Darebee.com is a workout site. Since I don’t workout, I was unaware of the site. You can explore it at Darebee.com. I think the name means that a person can “dare to be muscular” or “date to be physically imposing.” I ran a query for Darebee.com on Giburu, Mojeek, and Unbubble. I learned that the name “Darebee” does come up in the index. However, the pointers in Unbubble are interesting because the links identify other sites which are using the “darebee” string to get traffic. Here’s the Unbubble results screen for my query “darebee.”

What I found interesting is the system administrator for Darebee.com is none other than David Amerland, whose email is listed in the Whois record as david@amerland.co.uk. Darebee is apparently a part of Amerland Enterprises Ltd. in Hertfordshire, UK. The traffic graph for Darebee.com is listed by Alexa. It shows about 26,000 “visitors” per month which is at variance with the monthly traffic data of 3.2 million on W3Snoop.com.

When I see this type of search result, I wonder if the sites have been working overtime to spoof the relevance components of Web search and retrieval systems.

I noted these points in the interview which appeared in the prestigious site Kamkash.com.

On relevance: Data makes zero sense if you can’t find what you want very quickly and then understand what you are looking for.

On semantic search’s definition: Semantic search essentially is trying to understand at a very nuanced level, and then it is trying to give us the best possible answer to our query at that nuanced level of our demands or our intent.

On Boolean search: Boolean search essentially looks at something probabilistically.

On Google’s RankBrain: [Google RankBrain] has nothing to do with ranking.

On participating in Google Plus: Google+ actually allows you to be pervasively enough very real in a very digital environment where we are synchronously connected with lot of people from all over the world and yet the connection feels very…very real in terms of that.

I find these statements interesting.

Tips for Finding Information on Reddit.com

February 23, 2017

I noted “The Right Way to Search Posts on Reddit.” I find it interesting that the Reddit content is not comprehensively indexed by Google. One does stumble across this type of results list in the Google if one knows how to use Google’s less than obvious search syntax. Where’s bad stuff on Reddit? Google will reveal some links of interest to law enforcement professionals. For example:

Bing does a little better with certain Reddit content. To be fair, neither service is doing a bang up job indexing social media content but lists a fraction of the Google index pointers. For example:

So how does one search Reddit.com the “right way.” I noted this paragraph:

As of 2015, Reddit had accumulated over 190 million posts across 850,000 different subreddits (or communities), plus an additional 1.7 billion comments across all of those posts. That’s an incredible amount of content, and all of it can still be accessed on Reddit.

I would point out that the “all” is not accurate. There is a body of content deleted by moderators, including some of Reddit.com’s top dogs, which has been removed from the site.

Reddit offers some search syntax to help the researcher locate what is indexed by Reddit.com’s search system. The write up pointed to these strings:

- title:[text] searches only post titles.

- author:[username] searches only posts by the given username.

- selftext:[text] searches only the body of posts that were made as self-posts.

- subreddit:[name] searches only posts that were submitted to the given subreddit community.

- url:[text] searches only the URL of non-self-post posts.

- site:[text] searches only the domain name of non-self-post posts.

- nsfw:yes or nsfw:no to filter results based on whether they were marked as NSFW or not.

- self:yes or self:no to filter results based on whether they were self-posts or not.

The article contains a handful of other search commands; for example, Boolean and and or. How does one NOT out certain words. Use the minus sign. The word not is apparently minus sign appropriate for the discerning Reddit.com searcher.

Stephen E Arnold, February 23, 2017

Mondeca: Tweaking Its Market Position

February 22, 2017

One of the Beyond Search goslings noticed a repositioning of the taxonomy capabilities of Mondeca. Instead of pitching indexing, the company has embraced ElasticSearch (based on Lucene) and Solr. The idea is that if an organization is using either of these systems for search and retrieval, Mondeca can provide “augmented” indexing. The idea is that keywords are not enough. Mondeca can index the content using concepts.

Of course, the approach is semantic, permits exploration, and enables content discovery. Mondeca’s Web site describes search as “find” and explains:

Initial results are refined, annotated and easy to explore. Sorted by relevancy, important terms are highlighted: easy to decide which one are relevant. Sophisticated facet based filters. Refining results set: more like this, this one, statistical and semantic methods, more like these: graph based activation ranking. Suggestions to help refine results set: new queries based on inferred or combined tags. Related searches and queries.

This is a similar marketing move to the one that Intrafind, a German search vendor, implemented several years ago. Mondeca continues to offer its taxonomy management system. Human subject matter experts do have a role in the world of indexing. Like other taxonomy systems and services vendors, the hook is that content indexed with concepts is smart. I love it when indexing makes content intelligent.

The buzzword is used by outfits ranging from MarkLogic’s merry band of XML and XQuery professionals to the library-centric outfits like Smartlogic. Isn’t smart logic better than logic?

Stephen E Arnold, February 22, 2017