What Happens When One NOTs Out Web Sites?

April 30, 2012

We learned about Million Short. The idea is that a user can NOT out a block of Web sites. The use case is that a query is passed to the system, and the system filters out the top 1,000 or 100,000 Web sites. We think you will want to check it out. Once you have run some sample queries, consider these questions:

- When a handful of Web sites attract the most traffic, is popularity the road to comprehensive information retrieval?

- When sites are NOTted out, what do you gain? What do you lose?

- How do you know what is filtered from any Web search index? Do you run test queries and examine the results?
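To make the NOT-out idea concrete, here is a minimal Python sketch. It is not Million Short's implementation, which the write up does not describe. The popularity ranking, the result URLs, and the function name `not_out` are hypothetical stand-ins for illustration.

```python
from urllib.parse import urlparse

def not_out(results, ranked_sites, top_n):
    """Drop results whose host is among the top_n most popular sites.

    results: result URLs in ranked order (hypothetical data).
    ranked_sites: hostnames ordered most popular first (hypothetical data).
    """
    blocked = set(ranked_sites[:top_n])
    kept = []
    for url in results:
        host = urlparse(url).hostname or ""
        # Strip a leading "www." so www.example.com matches example.com.
        if host.startswith("www."):
            host = host[4:]
        if host not in blocked:
            kept.append(url)
    return kept

# Made-up popularity list and result set.
ranked_sites = ["bigsite.example", "megaportal.example", "smallblog.example"]
results = [
    "https://www.bigsite.example/page",
    "https://smallblog.example/post",
    "https://library.example/catalog",
]
filtered = not_out(results, ranked_sites, top_n=2)
```

Comparing `results` with `filtered` for different values of `top_n` is one way to see what a NOT gains and what it loses.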

Enjoy. If you know a librarian, why not involve that person in your test queries?

Stephen E Arnold, May 1, 2012

Sponsored by PolySpot

Love Lost between Stochastic and Google AppEngine

March 30, 2012

Stochastic Technologies’ Stavros Korokithakis has some very harsh words for Google’s AppEngine in “Going from Loving AppEngine to Hating it in 9 Days.” Is the Google shifting its enterprise focus?

Stochastic’s service Dead Man’s Switch got a huge publicity boost from its recent Yahoo article, which drove thousands of new visitors to the site. Preparing for just such a surge, the company turned months ago to Google’s AppEngine to manage potential customers. At first, AppEngine worked just fine. The hassle-free deployments while rewriting and the free tier were just what the company needed at that stage.

Soon after the Yahoo piece, Stochastic knew they had to move from the free quota to a billable status. There was a huge penalty, though, for one small mistake: Korokithakis entered the wrong credit card number. No problem, just disable the billing and re-enable it with the correct information, right? Wrong. Billing could not be re-enabled for another week.

Things only got worse from there. Korokithakis attempted to change settings from Google Wallet, but all he could do was cancel the payment. He then found that, while he was trying to correct his credit card information, the AppEngine Mail API had reached its daily 100-recipient email limit. The limit would not be removed until the first charge cleared, which would take a week. The write up laments:

At this point, we had five thousand users waiting for their activation emails, and a lot of them were emailing us, asking what’s wrong and how they could log in. You can imagine our frustration when we couldn’t really help them, because there was no way to send email from the app! After trying for several days to contact Google, the AppEngine team, and the AppEngine support desk, we were at our wits’ end. Of all the tens of thousands of visitors that had come in with the Yahoo! article, only 100 managed to actually register and try out the site. The rest of the visitors were locked out, and there was nothing we could do.

Between sluggish payment processing and a bug in the Mail API, it actually took nine days before the Stochastic team could send emails and register users. The company undoubtedly lost many potential customers to the delay. In the meantime, to add charges to injury, the AppEngine task queue kept retrying to send the emails and ran up high instance fees.
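The runaway retries suggest one general safeguard: cap the number of attempts and space them out. The Python sketch below is an illustration only. It does not use the AppEngine task queue or Mail API, and `QuotaExceeded`, `send_with_retry`, and `always_over_quota` are hypothetical names invented for this example.

```python
import time

class QuotaExceeded(Exception):
    """Raised by the hypothetical send function when a daily limit is hit."""

def send_with_retry(send, message, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Try to send, back off exponentially, and stop after max_attempts.

    Returns the number of attempts used on success, or None when giving up.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            send(message)
            return attempt
        except QuotaExceeded:
            if attempt == max_attempts:
                break
            # Wait 1x, 2x, 4x ... the base delay before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    return None

def always_over_quota(message):
    # Stand-in for a mail call that keeps failing because of a quota.
    raise QuotaExceeded("daily recipient limit reached")

# Record the delays instead of really sleeping, so the demo runs instantly.
delays = []
outcome = send_with_retry(always_over_quota, "activation email", sleep=delays.append)
```

With a cap in place, a message that cannot be delivered is set aside after a few tries instead of consuming compute time indefinitely.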

It is no wonder that Stochastic is advising us all to stay away from Google’s AppEngine. Our experiences with Google have been positive. Perhaps this is an outlier’s experience?

Cynthia Murrell, March 30, 2012

Sponsored by Pandia.com

Fat Apps. What Happened to the Cloud?

February 5, 2012

If it seems like a step backward, that’s because it is: Network Computing declares, “Fat Apps Are Where It’s At.” At least for now.

Writer Mike Fratto makes the case that, in the shift from desktop to mobile, we’re getting ahead of ourselves. Cloud-based applications that run only the user interface on mobile devices are a great way to save space, if you can guarantee constant wireless access to the Web. That’s not happening yet. Wi-Fi is unreliable, and wireless data plans with their data caps can become very expensive very quickly.

Besides, says Fratto, services that aim to place the familiar desktop environment onto mobile devices, like Citrix XenApp or VMware ThinApp, are barking up the wrong tree. The article asserts:

There isn’t the screen real estate available on mobile devices–certainly not on phones–to populate menus and pull downs. . . . But that is how desktop apps are designed. Lots of features displayed for quick access because you have the room to do it while still providing enough screen space to write a document or work on a spreadsheet. Try using Excel as a thin app on your phone or tablet. See how long it takes for you to get frustrated.

So, Fratto proposes “fat apps” as the temporary alternative, applications designed for mobile use with local storage that let you continue to work without a connection. Bloatware is back, at least until we get affordable, universal wireless access worked out.
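The "work without a connection" idea can be sketched in a few lines of Python. This is an assumption-laden illustration, not anything taken from Fratto's article: the class `OfflineNotes`, its `upload` callable, and the in-memory `server` list are hypothetical.

```python
class OfflineNotes:
    """Keeps edits locally and uploads them when a connection is available."""

    def __init__(self, upload):
        self.upload = upload  # hypothetical callable that sends one edit to a server
        self.local = []       # every edit, always saved on the device first
        self.pending = []     # edits not yet uploaded

    def save(self, text, online):
        self.local.append(text)
        self.pending.append(text)
        if online:
            self.sync()

    def sync(self):
        # Upload in order; remove each edit only after its upload succeeds.
        while self.pending:
            self.upload(self.pending[0])
            self.pending.pop(0)

# A plain list stands in for the remote server.
server = []
notes = OfflineNotes(upload=server.append)
notes.save("draft 1", online=False)
notes.save("draft 2", online=False)
notes.save("draft 3", online=True)
```

The first two saves succeed with no connection at all; the third save flushes everything once the device is back online.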

I am getting some app fatigue. What’s the next big thing?

Cynthia Murrell, February 5, 2012

Sponsored by Pandia.com

Microsoft Innovation: Emulating the Bold Interface Move by Apple?

July 2, 2025

![Dino 5 18 25_thumb[3]_thumb_thumb_thumb Dino 5 18 25_thumb[3]_thumb_thumb_thumb](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/06/Dino-5-18-25_thumb3_thumb_thumb_thumb_thumb.gif) This dinobaby wrote this tiny essay without any help from smart software. Not even hallucinating gradient descents can match these bold innovations.


Bold. Decisive. Innovative. Forward leaning. Have I covered the adjectives used to communicate “real” innovation? I needed these and more to capture my reaction to the information in “Forget the Blue Screen of Death – Windows Is Replacing It with an Even More Terrifying Black Screen of Death.”

Yep, terrifying. I don’t feel terrified when my monitors display a warning. I guess some people do.

The write up reports:

Microsoft is replacing the Windows 11 Blue Screen of Death (BSoD) with a Black Screen of Death, after decades of the latter’s presence on multiple Windows iterations. It apparently wants to provide more clarity and concise information to help troubleshoot user errors easily.

The important aspect of this bold decision to change the color of an alert screen may be Apple color envy.

Apple itself said, “Apple Introduces a Delightful and Elegant New Software Design.” The innovation was… changing colors and channeling Windows Vista.

Let’s recap. Microsoft makes an alert screen black. Apple changes its colors.

Peak innovation. I guess that is what happens when artificial intelligence does not deliver.

Stephen E Arnold, July 2, 2025

Microsoft and OpenAI: An Expensive Sitcom

July 1, 2025

No smart software involved. Just an addled dinobaby.


I remember how clever I thought the book title “Who Says Elephants Can’t Dance?: Leading a Great Enterprise Through Dramatic Change” was. I find the break dancing content between Microsoft and OpenAI even more amusing. Bloomberg “real” news reported that Microsoft is “struggling to sell its Copilot solutions.” Why? Those Microsoft customers want OpenAI’s ChatGPT. That’s a hoot.

Computerworld adds more Monty Python twists to this side show in “Microsoft and OpenAI: Will They Opt for the Nuclear Option?” (I am not too keen on the use of the word “nuclear.” People bandy it about without understanding what the actual consequences of such an option are. Please, do a bit of homework before suggesting that two enterprises are doing anything remotely similar.)

The estimable Computerworld reports:

Microsoft needs access to OpenAI technologies to keep its worldwide lead in AI and grow its valuation beyond its current more than $3.5 trillion. OpenAI needs Microsoft to sign a deal so the company can go public via an IPO. Without an IPO, the company isn’t likely to keep its highly valued AI researchers — they’ll probably be poached by companies willing to pay hundreds of millions of dollars for the talent.

The problem seems to be that Microsoft is trying to sell its version of smart software. The enterprise customers and even dinobabies like myself prefer the hallucinatory and unpredictable ChatGPT to the downright weirdness of Copilot in Notepad. The Computerworld story says:

Hovering over it all is an even bigger wildcard. Microsoft’s and OpenAI’s existing agreement dramatically curtails Microsoft’s rights to OpenAI technologies if the technologies reach what is called artificial general intelligence (AGI) — the point at which AI becomes capable of human reasoning. AGI wasn’t defined in that agreement. But Altman has said he believes AGI might be reached as early as this year.

People cannot agree over beach rights and school taxes. The smart software (which may remain without regulation for a decade) is a much bigger deal. The dollars at stake are huge. Most people do not know that a Board of Directors for a Fortune 1000 company will spend more time arguing about parking spaces than a $300 million acquisition. The reason? Most humans cannot conceive of the numbers of dollars associated with artificial intelligence. If the AI next big thing does not work, quite a few outfits are going to be selling snake oil from tables at flea markets.

Here’s the humorous twist from my vantage point. Microsoft itself kicked off the AI boom with its announcements a couple of years ago. Google, already wondering how it can keep the money gushing to pay the costs of simply being Google, short circuited and hit the switch for Code Red, Yellow, Orange, and probably the color only five people on earth have ever seen.

And what’s happened? The Google-spawned methods aren’t eliminating hallucinations. The OpenAI methods are not eliminating hallucinations. The improvements are more and more difficult to explain. Meanwhile start ups are doing interesting things with AI systems that are good enough for certain use cases. I particularly like consulting and investment firms using AI to get rid of MBAs.

The punch line for this joke is that the Microsoft version of ChatGPT seems to have more brand deliciousness. Microsoft linked with OpenAI, created its own “line of AI,” and now finds that the frisky money burner OpenAI is more popular and can just define artificial general intelligence to its liking and enjoy the philosophical discussions among AI experts and lawyers.

One cannot make this sequence up. Jack Benny’s radio scripts came close, but I think the Microsoft – OpenAI program is a prize winner.

Stephen E Arnold, July 1, 2025

Publishing for Cash: What Is Here Is Bad. What Is Coming May Be Worse

July 1, 2025

Smart software involved in the graphic, otherwise just an addled dinobaby.


Shocker. Pew Research discovers that most “Americans” do not pay for news. Amazing. Is it possible that the Pew professionals were unaware of the reason newspapers, radio, and television included comic strips, horoscopes, sports scores, and popular music in their “real” news content? I read in the middle of 2025 the research report “Few Americans Pay for News When They Encounter Paywalls.” For a number of years I worked for a large publishing company in Manhattan. I also worked at a privately owned publishing company in fly over country.

The sky looks threatening. Is it clouds, locusts, or the specter of the new Dark Ages? Thanks, you.com. Good enough.

I learned several things. Please, keep in mind that I am a dinobaby and I have zero in common with GenX, Y, Z, or the horrific GenAI. The learnings:

- Publishing companies spend time and money trying to figure out how to convert information into cash. This “problem” extended from the time I took my first real job in 1972 to yesterday when I received an email from a former publisher who is thinking about batteries as the future.

- Information loses its value as it diffuses; that is, if I know something, I can generate money IF I can find the one person who recognizes the value of that information. For anyone else, the information is worthless and probably nonsense because that individual does not have the context to understand the “value” of an item of information.

- Information has a tendency to diffuse. It is a bit like something with a very short half life. Time makes information even more tricky. If the context changes exogenously, the information I have may be rendered valueless without warning.

So what’s the solution? Here are the answers I have encountered in my professional life:

- Convert the “information” into magic and the result of a secret process. This is popular in consulting, certain government entities, and banker types. Believe me, people love the incantations, the jargon talk, and the scent of spontaneous ozone creation.

- Talk about “ideals,” and deliver lowest common denominator content. The idea is that the comix and sports scores will “sell” and the revenue can be used to pursue ideals. (I worked at an outfit like this, and I liked its simple, direct approach to money.)

- Make the information “exclusive” and charge a very few people a whole lot of money to access this “special” information. I am not going to explain how lobbying, insider talk, and trade show receptions facilitate this type of information wheeling and dealing. Just get a LexisNexis-type of account, run some queries, and check out the bill. The approach works for certain scientific and engineering information, financial data, and information people have no idea is available for big bucks.

- Embrace the “if it bleeds, it leads” approach. Believe me, this works. Look at YouTube thumbnails. The graphics and word choice make clear that sensationalism, titillation, and jazzification are the order of the day.

Now back to the Pew research. Here’s a passage I noted:

The survey also asked anyone who said they ever come across paywalls what they typically do first when that happens. Just 1% say they pay for access when they come across an article that requires payment. The most common reaction is that people seek the information somewhere else (53%). About a third (32%) say they typically give up on accessing the information.

Stop. That’s the key finding: one percent pay.

Let me suggest:

- Humans will take the easiest path; that is, they will accept what is output or what they hear from their “sources.”

- Humans will take “facts” and glue them together to come up with more “facts.” Without context (that is, what used to be viewed as a traditional education and a commitment to lifelong learning), these people will lose the ability to think. Some like this result, of course.

- Humans face a sharper divide between the information “haves” and the information “have nots.”

Net net: The new dark ages are on the horizon. How’s that for a speculative conclusion from the Pew research?

Stephen E Arnold, July 1, 2025

US Science Conferences: Will They Become an Endangered Species?

June 26, 2025

Due to steep federal budget cuts and fears of border issues, the United States may be experiencing a brain drain. Some smart people (aka people tech bros like to hire) are leaving the country. Leaders at some high profile outfits are saying, “Don’t let the door hit you on the way out.” Others get multi-million pay packets to remain in America.

Nature.com explains more in “Scientific Conferences Are Leaving The US Amid Border Fears.” Many scientific and academic conferences were slated to occur in the US, but they’ve since been canceled, postponed, or moved to other venues in other countries. The organizers are saying that Trump’s immigration and travel policies are discouraging foreign nerds from visiting the US. Some organizers have rescheduled conferences in Canada.

Conferences are important venues for certain types of professionals to network, exchange ideas, and learn the alleged new developments in their fields. These conferences are important to the intellectual communities. Nature says:

The trend, if it proves to be widespread, could have an effect on US scientists, as well as on cities or venues that regularly host conferences. ‘Conferences are an amazing barometer of international activity,’ says Jessica Reinisch, a historian who studies international conferences at Birkbeck University of London. ‘It’s almost like an external measure of just how engaged in the international world practitioners of science are.’ ‘What is happening now is a reverse moment,’ she adds. ‘It’s a closing down of borders, closing of spaces … a moment of deglobalization.’

The brain drain trope and the buzzword “deglobalization” may point to a comparatively small change with longer term effects. At the last two specialist conferences I attended, I encountered zero attendees or speakers from another country. In my 60 year work career this was a first at conferences that issued a call for papers and were publicized via news releases.

Is this a loss? Not for me. I am a dinobaby. For those younger than I, my hunch is that a number of people will be learning about the truism “If ignorance is bliss, just say, ‘Hello, happy.’”

Whitney Grace, June 26, 2025

Big AI Surprise: Wrongness Spreads Like Measles

June 24, 2025

An opinion essay written by a dinobaby who did not rely on smart software.


Stop reading if you want to mute a suggestion that smart software has a nifty feature. Okay, you are going to read this brief post. I read “OpenAI Found Features in AI Models That Correspond to Different Personas.” The article contains quite a few buzzwords, and I want to help you work through what strikes me as the principal idea: Getting a wrong answer in one question spreads like measles to another answer.

Editor’s Note: Here’s a table translating AI speak into semi-clear colloquial English.

| Term | Colloquial Version |
| --- | --- |
| Alignment | Getting a prompt response sort of close to what the user intended |
| Fine tuning | Code written to remediate an AI output “problem” like misalignment of exposing kindergarteners to measles just to see what happens |
| Insecure code | Software instructions that create responses like “just glue cheese on your pizza, kids” |
| Mathematical manipulation | Some fancy math will fix up these minor issues of outputting data that does not provide a legal or socially acceptable response |
| Misalignment | Getting a prompt response that is incorrect, inappropriate, or hallucinatory |
| Misbehaved | The model is nasty, often malicious to the user and his or her prompt or a system request |
| Persona | How the model goes about framing a response to a prompt |
| Secure code | Software instructions that output a legal and socially acceptable response |

I noted this statement in the source article:

OpenAI researchers say they’ve discovered hidden features inside AI models that correspond to misaligned “personas”…

In my ageing dinobaby brain, I interpreted this to mean:

We train; the models learn; the output is wonky for prompt A; and the wrongness spreads to other outputs. It’s like measles.

The fancy lingo addresses the ability of a black box chock full of probabilities, matrix manipulations, and layers of synthetic neural flickering to output incorrect “answers.” Think about your neighbors’ kids gluing cheese on pizza. Smart, right?

The write up reports that an OpenAI interpretability researcher said:

“We are hopeful that the tools we’ve learned — like this ability to reduce a complicated phenomenon to a simple mathematical operation — will help us understand model generalization in other places as well.”

Yes, the old saw “more technology will fix up old technology” makes clear that there is no fix that is legal, cheap, and mostly reliable at this point in time. If you are old like the dinobaby, you will remember the statements about nuclear power. Where are those thorium reactors? How about those fuel pools stuffed like a plump ravioli?

Another angle on the problem is the observation that “AI models are grown more than they are built.” Okay, organic development of a synthetic construct. Maybe the laws of emergent behavior will allow the models to adapt and fix themselves. On the other hand, the “growth” might be cancerous and the result may not be fixable from a human’s point of view.

But OpenAI is up to the task of fixing up AI that grows. Consider this statement:

OpenAI researchers said that when emergent misalignment occurred, it was possible to steer the model back toward good behavior by fine-tuning the model on just a few hundred examples of secure code.

Ah, ha. A new and possibly contradictory idea. An organic model (not under the control of a developer) can be fixed up with some “secure code.” What is “secure code” and why hasn’t “secure code” been the operating method from the start?

The jargon does not explain why bad answers migrate across the “models.” Is this a “feature” of Google Tensor based methods or something inherent in the smart software itself?

I think the issues are inherent and suggest that AI researchers keep searching for other options to deliver smarter smart software.

Stephen E Arnold, June 24, 2025

Paper Tiger Management

June 24, 2025

An opinion essay written by a dinobaby who did not rely on smart software.


I learned that Apple and Meta (formerly Facebook) found themselves on the wrong side of the law in the EU. On June 19, 2025, I learned that “the European Commission will opt not to impose immediate financial penalties” on the firms. In April 2025, the EU hit Apple with a 500 million euro fine and Meta with a 200 million euro fine for non compliance with the EU’s Digital Markets Act. Here’s an interesting statement in the cited EuroNews report: The “grace period ends on June 26, 2025.” Well, not any longer.

What’s the rationale?

- Time for more negotiations

- A desire to appear fair

- Paper tiger enforcement.

I am not interested in items one and two. The winner is “paper tiger enforcement.” In my opinion, we have entered an era in management, regulation, and governmental resolve that follows the GenX approach to lunch: “Hey, let’s have lunch.” The lunch never happens. But the mental process follows these lanes in the bowling alley of life: [a] Be positive, [b] Say something that sounds good, [c] Check the box that says, “Okay, mission accomplished. Move on,” [d] Forget about the lunch thing.

When this approach is applied to large scale, high-visibility issues, what happens? In my opinion, the credibility of the legal decision and the penalty is diminished. Instead of inhibiting improper actions, those who are on the receiving end of the punishment learn one thing: It doesn’t matter what we do. The regulators don’t follow through. Therefore, let’s just keep on moving down the road.

Another example of this type of management can be found in the return to the office battles. A certain percentage of employees are just going to work from home. The management of the company doesn’t do “anything”. Therefore, management is feckless.

I think we have entered the era of paper tiger enforcement. Make noise, show teeth, growl, and then go back into the den and catch some ZZZZs.

Stephen E Arnold, June 24, 2025

Meeker Reveals the Hurdle the Google Must Get Over: Can Google Be Agile Again?

June 20, 2025

Just a dinobaby and no AI: How horrible an approach?


The hefty Meeker Report explains Google’s PR push, flood of AI announcements, and statements about advertising revenue. Fear may be driving the Googlers to be the Silicon Valley equivalent of Dan Aykroyd and Steve Martin’s “wild and crazy guys.” Google offers up the Sundar & Prabhakar Comedy Show. Similar? I think so.

I want to highlight two items from the 300 page plus PowerPoint deck. The document makes clear that one can create a lot of slides (foils) in six years.

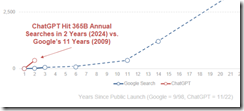

The first item is a chart on page 21. Here it is:

Note the tiny little line near the junction of the x and y axis. Now look at the red lettering:

ChatGPT hit 365 billion annual searches by Year since public launches of Google and Chat GPT — 1998 – 2025.

Let’s assume Ms. Meeker’s numbers are close enough for horse shoes. The slope of the ChatGPT search growth suggests that the Google is losing click traffic to Sam AI-Man’s ChatGPT. I wonder if Sundar & Prabhakar eat, sleep, worry, and think as the Code Red light flashes quietly in the Google lair? The light flashes: Sundar says, “Fast growth is not ours, brother.” Prabhakar responds, “The chart’s slope makes me uncomfortable.” Sundar says, “Prabhakar, please, don’t think of me as your boss. Think of me as a friend who can fire you.”

Now this quote from the top Googler on page 65 of the Meeker 2025 AI encomium:

The chance to improve lives and reimagine things is why Google has been investing in AI for more than a decade…

So why did Microsoft ace out Google with its OpenAI, ChatGPT deal in January 2023?

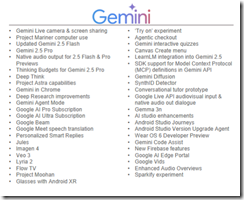

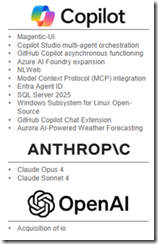

Ms. Meeker’s data suggests that Google is doing many AI projects because it named them for the period 5/19/25-5/23/25. Here’s a run down from page 260 in her report:

And what di Microsoft, Anthropic, and OpenAI talk about in the some time period?

Google is an outputter of stuff.

Let’s assume Ms. Meeker is wildly wrong in her presentation of Google-related data. What’s going to happen if the legal proceedings against Google force divestment of Chrome or there are remediating actions required related to the Google index? The Google may be in trouble.

Let’s assume Ms. Meeker is wildly correct in her presentation of Google-related data. What’s going to happen if OpenAI, the open source AI push, and the clicks migrate from the Google to another firm? The Google may be in trouble.

Net net: Google, assuming the data in Ms. Meeker’s report are good enough, may be confronting a challenge it cannot easily resolve. The good news is that the Sundar & Prabhakar Comedy Show can be monetized on other platforms.

Is there some hard evidence? One can read about it in Business Insider? Well, ooops. Staff have been allegedly terminated due to a decline in Google traffic.

Stephen E Arnold, June 20, 2025