Google Stomps into the Threat Intelligence Sector: AI and More

May 7, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Before commenting on Google’s threat services news, I want to remind you of the link to the list of Google initiatives which did not survive. You can find the list at Killed by Google. I want to mention this resource because Google’s product innovation and management methods are interesting to say the least. Operating in Code Red or Yellow Alert or whatever the Google crisis buzzword is, generating sustainable revenue beyond online advertising has proven to be a bit of a challenge. Google is more comfortable using such methods as [a] buying a firm and trying to scale it, [b] imitating another firm’s innovation, and [c] dumping big money into secret projects in the hopes that what comes out will not result in the firm’s getting its “glass” kicked to the curb.

Google makes a big entrance at the RSA Conference. Thanks, MSFT Copilot. Have you considered purchasing Google’s threat intelligence service?

With that as background, Google has introduced an “unmatched” cyber security service. The information was described at the RSA security conference and in a quite Googley blog post “Introducing Google Threat Intelligence: Actionable threat intelligence at Google Scale.” Please, note the operative word “scale.” If the service does not make money, Google will “not put wood behind” the effort. People won’t work on the project, and it will be left to dangle in the wind or just shot like Cricket, a now famous example of animal husbandry. (Google’s Cricket was the Google Appliance. Remember that? Take over the enterprise search market. Nope. Bang, hasta la vista.)

Google’s new service aims squarely at the comparatively well-established and now maturing cyber security market. I have to check to see who owns what. Venture firms and others with money have been buying promising cyber security firms. Google owned a piece of Recorded Future. Now Recorded Future is owned by a third party outfit called Insight. Darktrace has been or will be purchased by Thoma Bravo. Consolidation is underway. Thus, it makes sense to Google to enter the threat intelligence market, using its Mandiant unit as a springboard, one of those home diving boards, not the cliff in Acapulco diving platform.

The write up says:

we are announcing Google Threat Intelligence, a new offering that combines the unmatched depth of our Mandiant frontline expertise, the global reach of the VirusTotal community, and the breadth of visibility only Google can deliver, based on billions of signals across devices and emails. Google Threat Intelligence includes Gemini in Threat Intelligence, our AI-powered agent that provides conversational search across our vast repository of threat intelligence, enabling customers to gain insights and protect themselves from threats faster than ever before.

Google to its credit did not trot out the “quantum supremacy” lingo, but the marketers did assert that the service offers “unmatched visibility in threats.” I like the “unmatched.” Not supreme, just unmatched. The graphic below illustrates the elements of the unmatchedness:

Credit to the Google 2024

But where is artificial intelligence in the diagram? Don’t worry. The blog explains that Gemini (Google’s AI “system”) delivers

AI-driven operationalization

But the foundation of the new service is Gemini, which does not appear in the diagram. That does not matter, the Code Red crowd explains:

Gemini 1.5 Pro offers the world’s longest context window, with support for up to 1 million tokens. It can dramatically simplify the technical and labor-intensive process of reverse engineering malware — one of the most advanced malware-analysis techniques available to cybersecurity professionals. In fact, it was able to process the entire decompiled code of the malware file for WannaCry in a single pass, taking 34 seconds to deliver its analysis and identify the kill switch. We also offer a Gemini-driven entity extraction tool to automate data fusion and enrichment. It can automatically crawl the web for relevant open source intelligence (OSINT), and classify online industry threat reporting. It then converts this information to knowledge collections, with corresponding hunting and response packs pulled from motivations, targets, tactics, techniques, and procedures (TTPs), actors, toolkits, and Indicators of Compromise (IoCs). Google Threat Intelligence can distill more than a decade of threat reports to produce comprehensive, custom summaries in seconds.

I like the “indicators of compromise.”

Several observations:

- Will this service be another Google Appliance-type play for the enterprise market? It is too soon to tell, but with the pressure mounting from regulators, staff management issues, competitors, and savvy marketers in Redmond, “indicators” of success will be known in the next six to 12 months.

- Is this a business or just another item on a punch list? The answer to the question may be provided by what the established players in the threat intelligence market do and what actions Amazon and Microsoft take. Is a new round of big money acquisitions going to begin?

- Will enterprise customers “just buy Google”? Chief security officers have demonstrated that buying multiple security systems is a “safe” approach to a job which is difficult: Protecting their employers from deeply flawed software and years of ignoring online security.

Net net: In a maturing market, three factors may signal how the big, new Google service will develop. These are [a] price, [b] perceived efficacy, and [c] avoidance of a major issue like the SolarWinds matter. I am rooting for Googzilla, but I still wonder why Google shifted from Recorded Future to acquisitions and me-too methods. Oh, well. I am a dinobaby and cannot be expected to understand.

Stephen E Arnold, May 7, 2024

The Everything About AI Report

May 7, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read the Stanford Artificial Intelligence Report. If you have not seen the 500 page document, click here. I spotted an interesting summary of the document. “Things Everyone Should Understand About the Stanford AI Index Report” is the work of Logan Thorneloe, an author previously unknown to me. I want to highlight three points I carried away from Mr. Thorneloe’s essay. These may make more sense after you have worked through the beefy Stanford document, which, due to its size, makes clear that Stanford wants to be linked to the AI spaceship. (Does Stanford’s AI effort look like Mr. Musk’s or Mr. Bezos’ rocket? I am leaning toward the Bezos design.)

An amazed student absorbs information about the Stanford AI Index Report. Thanks, MSFT. Good enough.

The summary of the 500 page document makes clear that Stanford wants to track the progress of smart software, provide a policy document so that Stanford can obviously influence policy decisions made by people who are not AI experts, and then “highlight ethical considerations.” The assumption by Mr. Thorneloe and by the AI report itself is that Stanford is equipped to make ethical anything. The president of Stanford departed under a cloud for acting in an unethical manner. Plus some of the AI firms have a number of Stanford graduates on their AI teams. Are those teams responsible for depictions of inaccurate historical personages? Okay, that’s enough about ethics. My hunch is that Stanford wants to be perceived as a leader. Mr. Thorneloe seems to accept this idea as a-okay.

The second point for me in the summary is that Mr. Thorneloe goes along with the idea that the Stanford report is unbiased. Writing about AI is, in my opinion of course, inherently biased. That’s the reason there are AI cheerleaders and AI doomsayers. AI is probability. How the software gets smart is biased by [a] how the thresholds are rigged up when a smart system is built, [b] the humans who do the training of the system and then “fine tune” or “calibrate” the smart software to produce acceptable results, and [c] the information used to train the system. More recently, human developers have been creating wrappers which effectively prevent the smart software from generating pornography or other “improper” or “unacceptable” outputs. I think the “bias” angle needs some critical thinking. Stanford’s report wants to cover the AI waterfront as Stanford maps and presents the geography of AI.

The final point is the rundown of Mr. Thorneloe’s take-aways from the report. He presents ten. I think there may just be three. First, the AI work is very expensive. That leads to the conclusion that only certain firms can be in the AI game and expect to win and win big. To me, this means that Stanford wants the good old days of Silicon Valley to come back again. I am not sure that this approach to an important, yet immature technology, is a particularly good idea. One does not fix up problems with technology. Technology creates some problems, and like social media, what AI generates may have a dark side. With big money controlling the game, what’s that mean? That’s a tough question to answer. The US wants China and Russia to promise not to use AI in their nuclear weapons system. Yeah, that will work.

Another take-away which seems important is the assumption that workers will be more productive. This is an interesting assertion. I understand that one can use AI to eliminate call centers. However, has Stanford made a case that the benefits outweigh the drawbacks of AI? Mr. Thorneloe seems to be okay with the assumption underlying the good old consultant-type of magic.

The general take-away from the list of ten take-aways is that AI is fueled by “industry.” What happened to the Stanford Artificial Intelligence Lab, synthetic data, and the high-confidence outputs? Nothing has happened. AI hallucinates. AI gets facts wrong. AI is a collection of technologies looking for problems to solve.

Net net: Mr. Thorneloe’s summary is useful. The Stanford report is useful. Some AI is useful. Writing 500 pages about a fast moving collection of technologies is interesting. I cannot wait for the 2024 edition. I assume “everyone” will understand AI PR.

Stephen E Arnold, May 7, 2024

Microsoft Security Messaging: Which Is What?

May 6, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I am a dinobaby. I am easily confused. I read two “real” news items and came away confused. The first story is “Microsoft Overhaul Treats Security As Top Priority after a Series of Failures.” The subtitle is interesting too because it links “security” to monetary compensation. That’s an incentive, but why isn’t security just part of work at an alleged monopoly’s products and services? I surmise the answer is, “Because security costs money, a lot of money.” That article asserts:

After a scathing report from the US Cyber Safety Review Board recently concluded that “Microsoft’s security culture was inadequate and requires an overhaul,” it’s doing just that by outlining a set of security principles and goals that are tied to compensation packages for Microsoft’s senior leadership team.

Okay. But security emerges from basic engineering decisions; for instance, does a developer spend time figuring out and resolving security when dependencies are unknown or documented only by a grousing user in a comment posted on a technical forum? Or, does the developer include a new feature and move on to the next task, assuming that someone else or an automated process will make sure everything works without opening the door to the curious bad actor? I think that Microsoft assumes it deploys secure systems and that its customers have the responsibility to ensure their systems’ security.

The cyber racoons found the secure picnic basket was easily opened. The well-fed, previously content humans seem dismayed that their goodies were stolen. Thanks, MSFT Copilot. Definitely good enough.

The write up adds that Microsoft has three security principles and six security pillars. I won’t list these because the words chosen strike me like those produced by a lawyer, an MBA, and a large language model. Remember. I am a dinobaby. Six plus three is nine things. Some car executive said a long time ago, “Two objectives is no objective.” I would add nine generalizations are not a culture of security. Nine is like Microsoft Word features. No one can keep track of them because most users use Word to produce Words. The other stuff is usually confusing, in the way, or presented in a way that finding a specific feature is an exercise in frustration. Is Word secure? Sure, just download some nifty documents from a frisky Telegram group or the Dark Web.

The write up concludes with a weird statement. Let me quote it:

I reported last month that inside Microsoft there is concern that the recent security attacks could seriously undermine trust in the company. “Ultimately, Microsoft runs on trust and this trust must be earned and maintained,” says Bell. “As a global provider of software, infrastructure and cloud services, we feel a deep responsibility to do our part to keep the world safe and secure. Our promise is to continually improve and adapt to the evolving needs of cybersecurity. This is job #1 for us.”

First, there is the notion of trust. Perhaps Edge’s persistence and advertising in the start menu, SolarWinds, and the legions of Chinese and Russian bad actors undermine whatever trust exists. Most users are clueless about security issues baked into certain systems. They assume; they don’t trust. Cyber security professionals buy third party security solutions like shopping at a grocery store. Big companies’ senior executives don’t understand why the problem exists. Lawyers and accountants understand many things. Digital security is often not a core competency. “Let the cloud handle it,” sounds pretty good when the fourth IT manager or the third security officer quit this year.

Now the second write up. “Microsoft’s Responsible AI Chief Worries about the Open Web.” First, recall that Microsoft owns GitHub, a very convenient source for individuals looking to perform interesting tasks. Some are good tasks like snagging a script to perform a specific function for a church’s database. Other software does interesting things in order to help a user shore up security. Rapid7’s metasploit-framework is an interesting example. Almost anyone can find quite a bit of useful software on GitHub. When I lectured in a central European country’s main technical university, the students were familiar with GitHub. Oh, boy, were they.

In this second write up I learned that Microsoft has released a 39 page “report” which looks a lot like a PowerPoint presentation created by a blue-chip consulting firm. You can download the document at this link, at least you could as of May 6, 2024. “Security” appears 78 times in the document. There are “security reviews.” There is “cybersecurity development” and a reference to something called “Our Aether Security Engineering Guidance.” There is “red teaming” for biosecurity and cybersecurity. There is security in Azure AI. There are security reviews. There is the use of Copilot for security. There is something called PyRIT which “enables security professionals and machine learning engineers to proactively find risks in their generative applications.” There is partnering with MITRE for security guidance. And there are four footnotes to the document about security.

What strikes me is that security is definitely a popular concept in the document. But the principles and pillars apparently require AI context. As I worked through the PowerPoint, I formed the opinion that a committee worked with a small group of wordsmiths and crafted a rather elaborate word salad about going all in with Microsoft AI. Then the group added “security” the way my mother would chop up a red pepper and put it in a salad for color.

I want to offer several observations:

- Both documents suggest to me that Microsoft is now pushing “security” as Job One, a slogan used by the Ford Motor Co. (How are those Fords faring in the reliability ratings?) Saying words and doing are two different things.

- The rhetoric of the two documents reminds me of Gertrude’s statement, “The lady doth protest too much, methinks.” (Hamlet? Remember?)

- The US government, most large organizations, and many individuals “assume” that Microsoft has taken security seriously for decades. The jargon-and-blather PowerPoint makes clear that Microsoft is trying to find a nice way to say, “We are saying we will do better already. Just listen, people.”

Net net: Bandying about the word trust or the word security puts everyone on notice that Microsoft knows it has a security problem. But the key point is that bad actors know it, exploit the security issues, and believe that Microsoft software and services will be a reliable source of opportunity for mischief. Ransomware? Absolutely. Exposed data? You bet your life. Free hacking tools? Let’s go. Does Microsoft have a security problem? The word form is incorrect. Does Microsoft have security problems? You know the answer. Aether.

Stephen E Arnold, May 6, 2024

Generative AI Means Big Money…Maybe

May 6, 2024

Whenever new technology appears on the horizon, there are always optimistic venture capitalists who jump on the idea that it will be a gold mine. While this is occasionally true, other times it’s a bust. Anything can sound feasible on paper, but reality often proves that brilliant ideas don’t work. Medium published Ashish Kakran’s article, “Generative AI: A New Gold Rush For Software Engineering.”

Kakran opens his article asserting the brilliant simplicity of Einstein’s E=mc² formula to inspire readers. He suggests that generative AI will revolutionize industries the way Einstein’s formula changed physics. He also says that white collar jobs stand to be automated for the first time in history. In fact, white collar jobs have been automated or made obsolete for centuries.

Kakran then runs numbers complete with charts and explanations about how generative AI is going to change the world. His diagrams and explanations probably mean something, but they read like white paper gibberish. This part makes sense:

“If you rewind to the year 2008, you will suddenly hear a lot of skepticism about the cloud. Would it ever make sense to move your apps and data from private or colo [cated] data centers to cloud thereby losing fine-grained control. But the development of multi-cloud and devops technologies made it possible for enterprises to not only feel comfortable but accelerate their move to the cloud. Generative AI today might be comparable to cloud in 2008. It means a lot of innovative large companies are still to be founded. For founders, this is an enormous opportunity to create impactful products as the entire stack is currently getting built.”

The author is correct that there are business opportunities to leverage generative AI. Is it a California gold rush? Nobody knows. If you have the funding, expertise, and a good idea, then follow it. If not, maybe focusing on a more attainable career is better.

Whitney Grace, May 6, 2024

AI Versus People? That Is Easy. AI

April 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I don’t like to include management information in Beyond Search. I have noticed more stories about management decisions related to information technology. Here’s an example of my breaking my own editorial policies. Navigate to “SF Exec Defends Brutal Tech Trend: Lay Off Workers to Free Up Cash for AI.” I noted this passage:

Executives want fatter pockets for investing in artificial intelligence.

Okay, Mr. Efficiency and mobile phone betting addict, you have reached a logical decision. Why are there no pictures of friends, family, and achievements in your window office? Oh, that’s MSFT Copilot’s work. What’s that say?

I think this means that “people resources” can be dumped in order to free up cash to place bets on smart software. The write up explains the management decision making this way:

Dropbox’s layoff was largely aimed at freeing up cash to hire more engineers who are skilled in AI.

How expensive is AI for the big technology companies? The write up provides this factoid which comes from the masterful management bastion:

Google AI leader Demis Hassabis said the company would likely spend more than $100 billion developing AI.

Smart software is the next big thing. Big outfits like Amazon, Google, Facebook, and Microsoft believe it. Venture firms appear to be into AI. Software development outfits are beavering away with smart technology to make their already stellar “good enough” products even better.

Money buys innovation until it doesn’t. The reason is that the time from roll out to saturation can be difficult to predict. Look how long it has taken the smart phones to become marketing exercises, not technology demonstrations. How significant is saturation? Look at the machinations at Apple or CPUs that are increasingly difficult to differentiate for a person who wants to use a laptop for business.

There are benefits. These include:

- Those getting fired can say, “AI RIF’ed me.”

- Investments in AI can perk up investors.

- Jargon-savvy consultants can land new clients.

- Leadership teams can rise above termination because these wise professionals are the deciders.

A few downsides can be identified despite the immaturity of the sector:

- Outputs can be incorrect leading to what might be called poor decisions. (Sorry, Ms. Smith, your child died because the smart dosage system malfunctioned.)

- A large, no-man’s land is opening between the fast moving start ups who surf on cloud AI services and the behemoths providing access to expensive infrastructure. Who wants to operate in no-man’s land?

- The lack of controls on smart software guarantees that bad actors will have ample tools with which to innovate.

- Knock-on effects are difficult to predict.

Net net: AI may be diffusing more quickly and in ways some experts chose to ignore… until they are RIF’ed.

Stephen E Arnold, April 25, 2024

From the Cyber Security Irony Department: We Market and We Suffer Breaches. Hire Us!

April 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Irony, according to You.com, means:

Irony is a rhetorical device used to express an intended meaning by using language that conveys the opposite meaning when taken literally. It involves a noticeable, often humorous, difference between what is said and the intended meaning. The term “irony” can be used to describe a situation in which something which was intended to have a particular outcome turns out to have been incorrect all along. Irony can take various forms, such as verbal irony, dramatic irony, and situational irony. The word “irony” comes from the Greek “eironeia,” meaning “feigned ignorance”

I am not sure I understand the definition, but let’s see if these two “communications” capture the smart software’s definition.

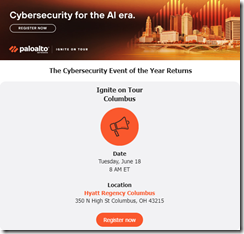

The first item is an email I received from the cyber security firm Palo Alto Networks. The name evokes the green swards of Stanford University, the wonky mall, and the softball games (co-ed, of course). Here’s the email solicitation I received on April 15, 2024:

The message is designed to ignite my enthusiasm because the program invites me to:

Join us to discover how you can harness next-generation, AI-powered security to:

- Solve for tomorrow’s security operations challenges today

- Enable cloud transformation and deployment

- Secure hybrid workforces consistently and at scale

- And much more.

I liked the much more. Most cyber outfits do road shows. Will I drive from outside Louisville, Kentucky, to Columbus, Ohio? I was thinking about it until I read:

“Major Palo Alto Security Flaw Is Being Exploited via Python Zero-Day Backdoor.”

Maybe it is another Palo Alto outfit. When I worked in Foster City (home of the original born-dead mall), I think there was a Palo Alto Pizza. But my memory is fuzzy and Plastic Fantastic Land does blend together. Let’s look at the write up:

For weeks now, unidentified threat actors have been leveraging a critical zero-day vulnerability in Palo Alto Networks’ PAN-OS software, running arbitrary code on vulnerable firewalls, with root privilege. Multiple security researchers have flagged the campaign, including Palo Alto Networks’ own Unit 42, noting a single threat actor group has been abusing a vulnerability called command injection, since at least March 26 2024.

Yep, seems to be the same outfit wanting me to “solve for tomorrow’s security operations challenges today.” The only issue is that the exploit was discovered a couple of weeks ago. If the write up is accurate, the exploit remains unfixed.

Perhaps this is an example of irony? However, I think it is a better example of the over-the-top yip yap about smart software and the efficacy of cyber security systems. Yes, I know it is a zero day, but it is a zero day only to Palo Alto. The bad actors who found the problem and exploited it already know the company has a security issue.

I mentioned in articles about some intelware that the developers do one thing, the software project manager does another, and the marketers output what amounts to hoo hah, baloney, and Marketing 101 hyperbole.

Yep, ironic.

Stephen E Arnold, April 24, 2024

Fake Books: Will AI Cause Harm or Do Good?

April 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read what I call a “howl” from a person who cares about “good” books. Now “good” is a tricky term to define. It is similar to “quality” or “love.” I am not going to try to define any of these terms. Instead I want to look at one example of smart software creating a problem for humans who create books. Then I want to focus attention on Amazon, the online bookstore. I think about two-thirds of American shoppers have some interaction with Amazon. That percentage is probably low if we narrow to top earners in the US. I want to wrap up with a reminder to those who think about smart software that the diffusion of technology chugs along and then — bang! — phase change. Spoiler: That’s what AI is doing now, and the pace is accelerating.

The Copilot image illustrates how smart software spreads. Cleaning up is a bit of a chore. The table cloth and the meeting may be ruined. But that’s progress of sorts, isn’t it?

The point of departure is an essay cum “real” news write up about fake books titled “Amazon Is Filled with Garbage Ebooks. Here’s How They Get Made.”

These books are generated by smart software and Fiverr-type labor. Dump the content in a word processor, slap on a title, and publish the work on Amazon. I write my books by hand, and I try to label that which I write or pay people to write as “the work of a dumb dinobaby.” Other authors do not follow my practice. Let many flowers bloom.

The write up states:

It’s so difficult for most authors to make a living from their writing that we sometimes lose track of how much money there is to be made from books, if only we could save costs on the laborious, time-consuming process of writing them. The internet, though, has always been a safe harbor for those with plans to innovate that pesky writing part out of the actual book publishing.

This passage explains exactly why fake books are created. The fact of fake books makes clear that AI technology is diffusing; that is, smart software is turning up in places and ways that the math people fiddling the numerical recipes or the engineers hooking up thousands of computing units never envisioned. Why would they? How many mathy types are able to remember their mother’s birthday?

The path for the “fake book” is easy money. The objective is not excellence, sophisticated demonstration of knowledge, or the mindlessness of writing a book “because.” The angst in the cited essay comes from the side of the coin that wants books created the old-fashioned way. Yeah, I get it. But today it is clear that the hand crafted books are going to face some challenges in the marketplace. I anticipate that “quality” fake books will convert the “real” book to the equivalent of a cuneiform tablet. Don’t like this? I am a dinobaby, and I call the trajectory as my experience and research warrants.

Now what about buying fake books on Amazon? Anyone can get an ISBN, but for Amazon, no ISBN is (based on our tests) no big deal. Amazon has zero incentive to block fake books. If someone wants a hard copy of a fake book, let Amazon’s own instant print service produce the copy. Amazon is set up to generate revenue, not be a grumpy grandmother forcing grandchildren to pick up after themselves. Amazon could invest to squelch fraudulent or suspect behaviors. But here’s a representative Amazon word salad explanation cited in the “Garbage Ebooks” essay:

In a statement, Amazon spokesperson Ashley Vanicek said, “We aim to provide the best possible shopping, reading, and publishing experience, and we are constantly evaluating developments that impact that experience, which includes the rapid evolution and expansion of generative AI tools.”

Yep, I suggest not holding one’s breath until Amazon spends money to address a pervasive issue within its service array.

Now the third topic: Slowly, slowly, then the frog dies. Smart software in one form or another has been around a half century or more. I can date smart software in the policeware / intelware sector to the late 1990s when commercial services were no longer subject to stealth operation or “don’t tell” business practices. For the ChatGPT-type services, NLP has been around longer, but it did not work largely due to computational costs and the hit-and-miss approaches of different research groups. Inference, DR-LINK, or one of the other notable early commercial attempts, anyone?

Okay, now the frog is dead, and everyone knows it. Better yet, navigate to any open source repository or respond to one of those posts on Pinboard or listings in Product Hunt, and you are good to go. Anthropic has released a cook book, just do-it-yourself ideas for building a start up with Anthropic tools. And if you write Microsoft Excel or Word macros for a living, you are already on the money road.

I am not sure Microsoft’s AI services work particularly well, but the stuff is everywhere. Microsoft is spending big to make sure it is not left out of any AI lunches in Dubai. I won’t describe the impact of the Manhattan chatbot. That’s a hoot. (We cover this slip up in the AItoAI video pod my son and I do once each month. You can find that information about NYC at this link.)

Net net: The tipping point has been reached. AI is tumbling and its impact will be continuous — at least for a while. And books? Sure, great books like those from Twitter luminaries will sell. To those without a self-promotion rail gun, cloudy days ahead. In fact, essays like “Garbage Ebooks” will be cranked out by smart software. Most people will be none the wiser. We are not facing a dead Internet; we are facing the death of high-value information. When data are synthetic, what’s original thinking got to do with making money?

Stephen E Arnold, April 24, 2024

So Much for Silicon Valley Solidarity

April 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.


I thought the entity called Benzinga was a press release service. Guess not. I received a link to what looked like a “real” news story written by a Benzinga Staff Writer named Jain Rounak. “Elon Musk Reacts As Marc Andreessen Says Google Is ‘Literally Run By Employee Mobs’ With ‘Chinese Spies’ Scooping Up AI Chip Designs.” The article is a short one, and it is not exactly what the title suggested to me. Nevertheless, let’s take a quick look at what seems to be some ripping of the Silicon Valley shibboleth of solidarity.

The members of the Happy Silicon Valley Social club are showing signs of dissension. Thanks, MSFT Copilot. How is your security today? Oh, really.

The hook for the story is another Google employee protest. The cause was a deal for Google to provide cloud services to Israel. I assume the Googlers split along ethno-political-religious lines: One group cheering for Hamas and another for Israel. (I don’t have any first-hand evidence, so I am leveraging the scant information in the Benzinga news story.)

Then what? Apparently Marc Andreessen of Netscape fame and AI polemics offered some thoughts. I am not sure where these assertions were made or if they are on the money. But, I grant to Benzinga, that the Andreessen emissions are intriguing. Let’s look at one:

“The company is literally overrun by employee mobs, Chinese spies are walking AI chip designs out the door, and they turn the Founding Fathers and the Nazis black.”

The idea that there are “Google mobs” running from Foosball court to vending machines and then to their quiet space and then to the parking lot is interesting. Where’s Charles Dickens of A Tale of Two Cities fame when you need an observer to document a revolution? Are Googlers building barricades in the passageways? Are Prius and Tesla vehicles being set on fire?

In the midst of this chaotic environment, there are Chinese spies. I am not sure one has to walk chip designs anywhere. Emailing them or copying them from one Apple device to another works reasonably well in my experience. The reference to the Google art is a reminder that the high school management club approach to running a potential trillion dollar, alleged monopoly needs some upgrades.

Where’s the Elon in this? I think I am supposed to realize that Elon and Andreessen are on the same mental wavelength. The Google is not. Therefore, the happy family notion is shattered. Okay, Benzinga. Whatever. Drop those names. The facts? Well, drop those too.

Stephen E Arnold, April 23, 2024

Google Gem: Arresting People Management

April 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.


I have worked for some well-managed outfits: Halliburton, Booz Allen, Ziff Communications, and others in my 55-year career. The idea that employees at Halliburton Nuclear (my assignment) would occupy the offices of a senior officer like Eugene Saltarelli was inconceivable. (Mr. Saltarelli sported a facial scar. When asked about the disfigurement, he would stare at the interlocutor and ask, “What scar?” Do you want to “take over” his office?) Another of my superiors at a firm in New York had a special method of shaping employee behavior. This professional did nothing to suppress rumors that two of his wives drowned during “storms” after falling off his sail boat. Did I entertain taking over his many-windowed office in Manhattan? Answer: Are you sure you internalized the anecdote?

Another Google management gem glitters in the public spot light.

But at the Google, life seems to be different, maybe a little more frisky absent psychological behavior controls. I read “Nine Google Workers Get Arrested After Sit-In Protest over $1.2B Cloud Deal with Israel.” The main idea seems to be that someone at Google sold cloud services to the Israeli government. Employees apparently viewed the contract as bad, wrong, stupid, or some combination of attributes. The fix involved a 1960s-style sit-in. After a period of time elapsed, someone at Google called the police. The employee-protesters were arrested.

I recall hearing years ago that Google faced a similar push back about a contract with the US government. To be honest, Google has generated so many human resource moments that I have a tough time recalling each. A few are Mt. Everests of excellence; for example, the termination of Dr. Timnit Gebru. This Googler had the nerve to question the bias of Google’s smart software. She departed. I assume she enjoyed the images of biased signers of documents related to America’s independence and multi-ethnic soldiers in the World War II German army. Bias? Google thinks not, I guess.

The protest occurs as the Google tries to cope with increased market pressure and the tough-to-control costs of smart software. The quick fix is to nuke or RIF employees. “Google Lays Off Workers As Part of Pretty Large-Scale Restructuring” reports by citing Business Insider:

Ruth Porat, Google’s chief financial officer, sent an email to employees announcing that the company would create “growth hubs” in India, Mexico and Ireland. The unspecified number of layoffs will affect teams in the company’s finance department, including its treasury, business services and revenue cash operations units.

That looks like off-shoring to me. The idea was a cookie cutter solution spun up by blue chip consulting companies 20, maybe 30 years ago. On paper, the math is more enticing than a new Land Rover and about as reliable. A state-side worker costs X fully loaded with G&A, benefits, etc. An off-shore worker costs X minus Y. If the delta means cost savings, go for it. What’s not to like?

According to a source cited in the New York Post:

“As we’ve said, we’re responsibly investing in our company’s biggest priorities and the significant opportunities ahead… To best position us for these opportunities, throughout the second half of 2023 and into 2024, a number of our teams made changes to become more efficient and work better, remove layers and align their resources to their biggest product priorities.”

Yep, align. That senior management team has a way with words.

Will those who are in fear of their jobs join in the increasingly routine Google employee protests? Will disgruntled staff sandbag products and code? Will those who are terminated write tell-alls about their experiences at an outfit operating under Code Red for more than a year?

Several observations:

- Microsoft’s quite effective push of its AI products and services continues. In certain key markets like New York City and the US government, Google is on the defensive. Hint: Microsoft has the advantage, and the Google is struggling to catch up.

- Google’s management of its personnel seems to create the wrong type of news. Example: Staff arrests. Is that part of Peter Drucker’s management advice?

- The Google leadership team appears to lack the ability to do its job in a quiet, effective, positive, and measured way.

Net net: The online ad money machine keeps running. But if the investigations into Google’s business practices get traction, Google will have additional challenges to face. The Sundar & Prabhakar Comedy team should make a TikTok-type, how-to video about human resource management. I would prefer a short video about the origin story for the online advertising method which allowed Google to become a fascinating outfit.

Stephen E Arnold, April 18, 2024

Will Google Fix Up On-the-Blink Israeli Intelligence Capability?

April 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.


Voyager Labs’ “value” may be slipping. The poster child for unwanted specialized software publicity (NSO Group) finds itself the focal point of some legal eagles. The specialized software systems that monitor, detect, and alert, quite frankly, seemed to be distracted before and during the October 2023 attack. What’s happening to Israel’s advanced intelligence capabilities with its secret units, mustered out wizards creating intelligence solutions, and doing the Madison Avenue thing at conferences? What’s happening is that the hyperbole seems to be a bit more advanced than some of the systems themselves.

Government leaders and military intelligence professionals listen raptly as the young wizard explains how the online advertising company can shore up a country’s intelligence capabilities. Thanks, MidJourney. You are good enough, and the modified free MSFT Copilot is not.

What’s the fix? Let me share one wild idea with you: Let Google do it. Time (once the stablemate of the AI-road kill Sports Illustrated) published this write up with this title:

Exclusive: Google Contract Shows Deal With Israel Defense Ministry

The write up says:

Google provides cloud computing services to the Israeli Ministry of Defense, and the tech giant has negotiated deepening its partnership during Israel’s war in Gaza, a company document viewed by TIME shows. The Israeli Ministry of Defense, according to the document, has its own “landing zone” into Google Cloud—a secure entry point to Google-provided computing infrastructure, which would allow the ministry to store and process data, and access AI services. [The wonky capitalization is part of the style manual I assume. Nice, shouting with capital letters.]

The article then includes this paragraph:

Google recently described its work for the Israeli government as largely for civilian purposes. “We have been very clear that the Nimbus contract is for workloads running on our commercial platform by Israeli government ministries such as finance, healthcare, transportation, and education,” a Google spokesperson told TIME for a story published on April 8. “Our work is not directed at highly sensitive or classified military workloads relevant to weapons or intelligence services.”

Does this mean that Google shaped or weaponized information about the work with Israel? Probably not: The intent strikes me as similar to the “Senator, thank you for the question” lingo offered at some US government hearings. That’s just the truth poorly understood by those who are not Googley.

I am not sure if the Time story has its “real” news lens in focus, but let’s look at this interesting statement:

The news comes after recent reports in the Israeli media have alleged the country’s military, controlled by the Ministry of Defense, is using an AI-powered system to select targets for air-strikes on Gaza. Such an AI system would likely require cloud computing infrastructure to function. The Google contract seen by TIME does not specify for what military applications, if any, the Ministry of Defense uses Google Cloud, and there is no evidence Google Cloud technology is being used for targeting purposes. But Google employees who spoke with TIME said the company has little ability to monitor what customers, especially sovereign nations like Israel, are doing on its cloud infrastructure.

The online story included an allegedly “real” photograph of a bunch of people who were allegedly unhappy with the Google deal with Israel. Google does have a cohort of wizards who seem to enjoy protesting Google’s work with a nation state. Are Google’s managers okay with this type of activity? Seems like it.

Net net: I think the core issue is that some of the Israeli intelligence capability is sputtering. Will Google fix it up? Sure, if one believes the intelware brochures and PowerPoints on display at specialized intelligence conferences, why not perceive Google as just what the country needs after the attack and amidst increasing tensions with other nation states not too far from Tel Aviv? Belief is good. Madison Avenue thinking is good. Cloud services are good. Failure is not just bad; it could mean zero warning for another action against Israel. Do brochures about intelware stop bullets and missiles?

Stephen E Arnold, April 18, 2024