Are Experts Misunderstanding Google Indexing?

April 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Google is not perfect. More and more people are learning that the mystics of Mountain View are working hard every day to deliver revenue. In order to produce more money and profit, one must use Rust to become twice as wonderful as a programmer who labors to make C++ sit up, bark, and roll over. This dispersal of the cloud of unknowing obfuscating the magic of the Google can be helpful. What’s puzzling to me is that what Google does catches people by surprise. For example, consider the “real” news presented in “Google Books Is Indexing AI-Generated Garbage.” The main idea strikes me as:

But one unintended outcome of Google Books indexing AI-generated text is its possible future inclusion in Google Ngram viewer. Google Ngram viewer is a search tool that charts the frequencies of words or phrases over the years in published books scanned by Google dating back to 1500 and up to 2019, the most recent update to the Google Books corpora. Google said that none of the AI-generated books I flagged are currently informing Ngram viewer results.
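Ngram viewer charts, for each year, how often a word or phrase appears relative to all phrases of the same length in that year’s books. Here is a minimal Python sketch of that ratio. It assumes a toy corpus of (year, text) pairs and naive tokenization, and the ngram_frequencies function is hypothetical, not Google’s code. It shows why a batch of machine-generated books added to one year’s corpus could bend the curve: both the numerator and the denominator move.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def ngram_frequencies(books: Iterable[Tuple[int, str]], phrase: str) -> Dict[int, float]:
    """Return {year: occurrences of phrase / all n-grams of the same length that year}.

    Tokenization is a naive lowercase whitespace split, an assumption for illustration.
    """
    target = tuple(phrase.lower().split())
    n = len(target)
    hits: Counter = Counter()
    totals: Counter = Counter()
    for year, text in books:
        tokens = text.lower().split()
        # Every run of n consecutive tokens in this book.
        grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        totals[year] += len(grams)
        hits[year] += sum(1 for g in grams if g == target)
    # Skip years with no n-grams of this length to avoid dividing by zero.
    return {year: hits[year] / totals[year] for year in sorted(totals) if totals[year]}


if __name__ == "__main__":
    corpus = [(2018, "the cat sat on the mat"), (2019, "the dog sat"), (2019, "a cat and a cat")]
    print(ngram_frequencies(corpus, "cat"))
```

Add a few generated books that repeat a phrase to the 2019 entries and the 2019 value rises, even though no human writer changed anything.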

Thanks, Microsoft Copilot. I enjoyed learning that security is a team activity. Good enough again.

Indexing lousy content has been the core function of Google’s Web search system for decades. Search engine optimization generates information almost guaranteed to drag down how higher-value content is handled. If the flagship provides the navigation system to other ships in the fleet, won’t those vessels crash into bridges?

Remediating Google’s approach to indexing requires several basic steps. (I have in various ways shared these ideas with the estimable Google over the years. Guess what? No one cared or understood, and if a Googler did understand, he or she did not want to increase overhead costs.) So what are these steps? I shall share them:

- Establish an editorial policy for content. Yep, this means that a system and method or systems and methods are needed to determine what content gets indexed.

- Explain the editorial policy and what a person or entity must do to get content processed and indexed by the Google, YouTube, Gemini, or whatever the mystics in Mountain View conjure into existence.

- Include metadata with each content object so one knows the index date, the content object creation date, and similar information.

- Operate in a consistent, professional manner over time. The “gee, we just killed that” is not part of the process. Sorry, mystics.
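The metadata step in the list above is easy to state and, judging by the results, hard to operate. Here is a minimal Python sketch that assumes a hypothetical ContentObject record; the name and fields are illustrative, not a Google schema. Carrying both dates lets a system, or a user, see when something entered the index relative to when it was created.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ContentObject:
    """Hypothetical index record; field names are illustrative only."""
    url: str
    created: date      # creation date of the content object, per its source
    indexed: date      # date the indexing system processed it
    source_type: str   # e.g. "book" or "web page"

    def indexing_lag_days(self) -> int:
        """Days between creation and indexing; a negative value signals bad metadata."""
        return (self.indexed - self.created).days


if __name__ == "__main__":
    book = ContentObject("https://example.com/some-book", date(2024, 4, 1), date(2024, 4, 12), "book")
    print(book.indexing_lag_days())  # 11
```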

Let me offer several observations:

- Google, like any alleged monopoly, faces significant management challenges. Moving information within such an enterprise is difficult. For an organization with a Foosball culture, the task may be a bit outside the wheelhouse of most young people and individuals who are engineers, not presidents of fraternities or sororities.

- The organization is under stress. First, there is financial pressure because controlling the cost of the plumbing is a reasonably difficult undertaking. Second, there is technical pressure. Google itself made clear that it was in Red Alert mode and keeps adding flashing lights with each and every misstep the firm’s wizards make. These range from contentious relationships with mere governments to individual staff members who grumble via internal emails, angry public utterances by Googlers, and behavior observed at conferences. Body language does speak sometimes.

- The approach to smart software is remarkable. Individuals in the UK pontificate. The Mountain View crowd reassures and smiles — a lot. (Personally I find those big, happy looks a bit tiresome, but that’s a dinobaby for you.)

Net net: The write up does not address the issue that Google happily exploits. The company lacks the mental rigor that setting and applying editorial policies requires. SEO is good enough to index. Therefore, fake books are certainly A-OK for now.

Stephen E Arnold, April 12, 2024

Has Google Aligned Its AI Messaging for the AI Circus?

April 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I followed the announcements at the Google shindig Cloud Next. My goodness, Google’s Code Red has produced quite a few new announcements. However, I want to ask a simple question, “Has Google organized its AI acts under one tent?” You can wallow in the Google AI news because TechMeme on April 10, 2024, has a carnival midway of information.

I want to focus on one facet: The enterprise transformation underway. Google wants to cope with Microsoft’s pushing AI into the enterprise, into the Manhattan chatbot, and into the government. One example of what Google envisions is what Google calls “genAI agents.” Explaining scripts with smarts requires a diagram. Here’s one, courtesy of Constellation Research:

Look at the diagram. The “customer”, which is the organization, is at the center of a Googley world: plumbing, models, and a “platform.” Surrounding this core with the customer at the center are scripts with smarts. These will do customer functions. This customer, of course, is the customer of the real customer, the organization. The genAI agents will do employee functions, creative functions, data functions, code functions, and security functions. The only missing function is the “paying Google function,” but that is baked into the genAI approach.

If one accepts the myriad announcements as the “as is” world of Google AI, the Cloud Next conference will have done its job. If you did not get the memo, you may see the Googley diagram as the work of enthusiastic marketers and the quantumly supreme lingo as more evidence that Code Red has been one output of the Code Red initiative.

I want to call attention, however, to the information in the allegedly accurate “Google DeepMind’s CEO Reportedly Thinks It’ll Be Tough to Catch Up with OpenAI’s Sora.” The write up states:

Google DeepMind CEO may think OpenAI’s text-to-video generator, Sora, has an edge. Demis Hassabis told a colleague it’d be hard for Google to draw level with Sora … The Information reported. His comments come as Big Tech firms compete in an AI race to build rival products.

Am I to believe the genAI system can deliver what enterprises, government organizations, and non-governmental entities want: ways to cut costs and operate in a smarter way?

If I tell myself, “Believe Google’s Cloud Next statements?” Amazon, IBM, Microsoft, OpenAI, and others should fold their tents, put their animals back on the train, and head to another city in Kansas.

If I tell myself, “Google is not delivering and one cannot believe the company which sells ads and outputs weird images of ethnically interesting historical characters,” then the advertising company is a bit disjointed.

Several observations:

- The YouTube content processing issues are an indication that Google is making interesting decisions which may have significant legal consequences related to copyright.

- The senior managers who are in direct opposition about their enthusiasm for Google’s AI capabilities need to get in the same book and preferably read from the same page.

- The assertions appear to be marketing which is less effective than Microsoft’s at this time.

Net net: The circus has some tired acts. The Sundar and Prabhakar Show seemed a bit tired. The acts were better than those featured on the Gong Show but not as scintillating as performances on the Masked Singer. But what about search? Oh, it’s great. And that circus train. Is it powered by steam?

Stephen E Arnold, April 9, 2024

Google AI Has a New Competitive Angle: AI Is a Bit of Problem for Everyone Except Us, Of Course

April 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Google has not recovered from the MSFT Davos PR coup. The online advertising company with a wonderful approach to management promptly did a road show in Paris which displayed incorrect data. Next the company declared a Code Red emergency (whatever that means in an ad outfit). Then the Googley folk reorganized by laterally arabesque-ing Dr. Jeff Dean somewhere and putting smart software in the hands of the DeepMind survivors. Okay, now we are into Phase 2 of the quantumly supreme company’s push into smart software.

An unknown person in Hyde Park at Speakers’ Corner is explaining to the enthralled passers-by that “AI is like cryptocurrency.” Is there a face in the crowd that looks like the powerhouse behind FTX? Good enough, MSFT Copilot.

A good example of this PR tactic appears in “Google DeepMind Co-Founder Voices Concerns Over AI Hype: ‘We’re Talking About All Sorts Of Things That Are Just Not Real’.” Some additional color similar to that of sour grapes appears in “Google’s DeepMind CEO Says the Massive Funds Flowing into AI Bring with It Loads of Hype and a Fair Share of Grifting.”

The main idea in these write ups is that the Top Dog at DeepMind and possible candidate to take over the online ad outfit is not talking about ruining the life of a Go player or folding proteins. Nope. The new message, as I understand it, is that AI is just not that great. Here’s an example of the new PR push:

The fervor amongst investors for AI, Hassabis told the Financial Times, reminded him of “other hyped-up areas” like crypto. “Some of that has now spilled over into AI, which I think is a bit unfortunate,” Hassabis told the outlet. “And it clouds the science and the research, which is phenomenal.”

Yes, crypto. Digital currency is associated with stellar professionals like Sam Bankman-Fried and those engaged in illegal activities. (I will be talking about some of those illegal activities at the US National Cyber Crime Conference in a few weeks.)

So what’s the PR angle? Here’s my take on the message from the CEO in waiting:

- The message allows Google and its numerous supporters to say, “We think AI is like crypto but maybe worse.”

- Google can suggest, “Our AI is not so good, but that’s because we are working overtime to avoid the crypto-curse which is inherent in outfits engaged in shoving AI down your throat.”

- Googlers keep a cool head (gardons la tête froide), unlike the possibly criminal outfits cheerleading for the wonders of artificial intelligence.

Will the approach work? In my opinion, yes, it will add a joke to the Sundar and Prabhakar Comedy Act. No, I don’t think it will alter the scurrying in the world of entrepreneurs, investment firms, and “real” Silicon Valley journalists, poohbahs, and pundits.

Stephen E Arnold, April 2, 2024

Cow Control or Elsie We Are Watching

April 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Australia already uses remotely controlled drones to herd sheep. Drones are considered more ethical than traditional herding methods because they’re less stressful for sheep.

Now the island continent is using advanced tracking technology to monitor buffalo and cows. Euronews investigates how technology is used in the cattle industry: “Scientists Are Attempting To Track 1000 Cattle And Buffalo From Space Using GPS, AI, And Satellites.”

An estimated 22,000 buffalo freely roam in Arnhem Land, Australia. The emphasis is on estimate, because the exact number is unknown. These buffalo are harming Arnhem Land’s environment. A single feral buffalo, weighing 1,200 kilograms and measuring 188 cm, not only damages the environment by eating a lot of plant life but also destroys cultural rock art, ceremonial sites, and waterways. Feral buffalo and cattle are major threats to Northern Australia’s economy and ecology.

Scientists, cattlemen, and indigenous rangers have teamed up to work on a program that will monitor feral bovines from space. The program is called SpaceCows and will last four years. It is a large-scale remote herd management system powered by AI and satellites. It’s also supported by the Australian government’s Smart Farming Partnership.

The rangers and stockmen trap feral bovines, implant solar-powered GPS tags, and release them. Each tag transmits its data to a satellite 650 km overhead for two years or until the tag falls off. SpaceCows relies on Microsoft Azure’s cloud platform. The satellites and AI create a digital map of the Australian outback that tells the rangers where feral cows live:

“Once the rangers know where the animals tend to live, they can concentrate on conservation efforts – by fencing off important sites or even culling them. ‘There’s very little surveillance that happens in these areas. So, now we’re starting to build those data sets and that understanding of the baseline disease status of animals,’ said Andrew Hoskins, a senior research scientist at CSIRO.

If successful, it could be one of the largest remote herd management systems in the world.”

Hopefully SpaceCows will protect the natural and cultural environment.

Whitney Grace, April 1, 2024

AI and Jobs: Under Estimating Perhaps?

March 28, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I am interested in the impact of smart software on jobs. I spotted “1.5M UK Jobs Now at Risk from AI, Report Finds.” But the snappier assertion appears in the subtitle to the write up:

The number could rise to 7.9M in the future

The UK has about 68 million people (maybe more, maybe fewer but close enough). The estimate of 7.9 million job losses translates to nearly eight million people out of work, more than one in ten UK residents. Now these types of “future impact” estimates are diaphanous. But the message seems clear. Despite the nascent stage of smart software’s development, the number one use may be dumping humans and learning to love software. Will the software make today’s systems work more efficiently? In my experience, computerizing processes does very little to improve the outputs. Some tasks are completed quickly. However, get the process wrong, and one has a darned interesting project for a blue-chip consulting firm.
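The arithmetic behind those ratios is short. It uses the article’s job figures and the rounded population of 68 million. Because the denominator is total population rather than the workforce, the share of working people affected would be larger:

```latex
\frac{1.5 \times 10^{6}}{68 \times 10^{6}} \approx 0.022 \;(2.2\%), \qquad
\frac{7.9 \times 10^{6}}{68 \times 10^{6}} \approx 0.116 \;(11.6\%)
```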

The smart software is alone in an empty office building. Does the smart software look lonely or unhappy? Thanks, MSFT Copilot. Good enough illustration.

The write up notes:

Back-office, entry-level, and part-time jobs are the ones mostly exposed, with employees on medium and low wages being at the greatest risk.

If this statement is accurate, life will be exciting for parents whose progeny camp out in the family room or who turn to other, possibly less socially acceptable, methods of generating cash. Crime comes to my mind, but you may see volunteers working to pick up trash in lovely Plymouth or Blackpool.

The write up notes:

Experts have argued that AI can be a force for good in the labor market — as long as it goes hand in hand with rebuilding workforce skills.

Academics, wizards, elected officials, and consultants can find the silver lining in the cloud that spawned the tornado.

Several observations, if I may:

- The acceleration of tools to add AI to processes is evident in the continuous stream of “new” projects appearing in GitHub, Product Watch, and AI newsletters. The availability of tools means that applications will flow into job-reducing opportunities; that is, outfits which will pay cash to cut payroll.

- AI functions are now being embedded in mobile devices. Smart software will be a crutch and most users will not realize that their own skills are being transformed. Welcoming AI is an important first step in using AI to replace an expensive, unreliable humanoid.

- The floundering of government and non-governmental organizations is amusing to watch. Each day documents about managing the AI “risk” appear in my feedreader. Yet zero meaningful action is taking place as certain large companies work to consolidate their control of essential and mostly proprietary technologies and know how.

Net net: The job loss estimate is interesting. My hunch is that it underestimates the impact of smart software on traditional work. This is good for smart software and possibly not so good for humanoids.

Stephen E Arnold, March 28, 2024

Backpressure: A Bit of a Problem in Enterprise Search in 2024

March 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have noticed numerous references to search and retrieval in the last few months. Most of these articles and podcasts focus on making an organization’s data accessible. That’s the same old story told since the days of STAIRS III and other dinobaby artifacts. The gist of the flow of search-related articles is that information is locked up or silo-ized. Using a combination of “artificial intelligence,” “open source” software, and powerful computing resources — problem solved.

A modern enterprise search content processing system struggles to keep pace with the changes to already processed content (the deltas) and the flow of new content in a wide range of file types and formats. Thanks, MSFT Copilot. You have learned from your experience with Fast Search & Transfer file indexing it seems.

The 2019 essay “Backpressure Explained — The Resisted Flow of Data Through Software” is pertinent in 2024. The essay, written by Jay Phelps, states:

The purpose of software is to take input data and turn it into some desired output data. That output data might be JSON from an API, it might be HTML for a webpage, or the pixels displayed on your monitor. Backpressure is when the progress of turning that input to output is resisted in some way. In most cases that resistance is computational speed — trouble computing the output as fast as the input comes in — so that’s by far the easiest way to look at it.

Mr. Phelps identifies several types of backpressure. These are:

- More info to be processed than a system can handle

- Reading and writing file speeds are not up to the demand for reading and writing

- Communication “pipes” between and among servers are too small, slow, or unstable

- A group of hardware and software components cannot move data where it is needed fast enough.

I have simplified his more elegantly expressed points. Please, consult the original 2019 document for the information I have hip hopped over.
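Mr. Phelps’s first case, more input than a system can handle, is the one an indexing pipeline faces when deltas and new files pour in faster than they can be processed. Here is a minimal Python sketch of one common response. It assumes one producer, one consumer, and the standard library’s bounded queue.Queue; the run_pipeline function and its parameters are hypothetical. When the buffer fills, the producer is made to wait.

```python
import queue
import threading
import time
from typing import List, Tuple


def run_pipeline(n_items: int, buffer_size: int, consumer_delay: float = 0.001) -> Tuple[List[int], int]:
    """Fast producer, slow consumer, bounded buffer.

    queue.Queue(maxsize=...) makes put() block while the buffer is full, so the
    producer slows to the consumer's pace instead of piling up unprocessed work.
    Returns (items processed in order, peak buffer depth observed by the producer).
    """
    buf: queue.Queue = queue.Queue(maxsize=buffer_size)
    processed: List[int] = []
    done = object()  # sentinel marking the end of the stream

    def consumer() -> None:
        while True:
            item = buf.get()
            if item is done:
                break
            time.sleep(consumer_delay)  # stand-in for slow parsing and indexing
            processed.append(item)

    worker = threading.Thread(target=consumer)
    worker.start()
    peak = 0
    for i in range(n_items):
        buf.put(i)  # blocks while the buffer is full: this is the backpressure
        peak = max(peak, buf.qsize())
    buf.put(done)
    worker.join()
    return processed, peak


if __name__ == "__main__":
    items, peak = run_pipeline(n_items=50, buffer_size=5)
    print(len(items), peak)
```

Blocking is only one option. A system can also drop or sample input, which keeps throughput up. In an enterprise search setting, though, dropping input means some documents quietly never reach the index.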

My point is that in the chatter about enterprise search and retrieval, there are a number of situations (use cases to those non-dinobabies) which create some interesting issues. Let me highlight these and then wrap up this short essay.

In an enterprise, the following situations exist and are often ignored or dismissed as irrelevant. When people pooh pooh my observations, it is clear to me that these people have [a] never been subject to a legal discovery process associated with enterprise search fraud and [b] are entitled whiz kids who don’t do too much in the quite dirty, messy, “real” world. (I do like the variety in T shirts and lumberjack shirts, however.)

First, in an enterprise, content changes. These “deltas” are a giant problem. I know of no system I have examined, tested, installed, or advised on that has a procedure to identify, in anything close to real time, a change made to a PowerPoint that was presented to a client and then converted into an email confirming a deal, price, or technical feature. In fact, no one may know until an investigator examines the president’s laptop and discovers the “forgotten” information. Even more exciting is when the opposing legal team, reviewing a laptop dump as part of a discovery process, “finds” the sequence of messages and connects the dots. Exciting, right? But “deltas” pose another problem. These modified content objects proliferate like gerbils. One can talk about information governance, but it is just that: talk, meaningless jabber.
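Detecting that something changed is the comparatively easy part, provided a crawler can reach the file at all. Here is a minimal Python sketch that assumes the system stores a SHA-256 fingerprint per document from its previous pass; the find_deltas function and the document ids are hypothetical. It compares old and new fingerprints.

```python
import hashlib
from typing import Dict, List


def fingerprint(content: bytes) -> str:
    """SHA-256 hex digest used as a cheap change detector for one content object."""
    return hashlib.sha256(content).hexdigest()


def find_deltas(previous: Dict[str, str], current: Dict[str, bytes]) -> Dict[str, List[str]]:
    """Compare fingerprints from the last pass with content seen in this pass.

    previous: document id -> fingerprint recorded on the last pass
    current:  document id -> raw bytes read on this pass
    """
    added, changed = [], []
    for doc_id, content in current.items():
        if doc_id not in previous:
            added.append(doc_id)
        elif previous[doc_id] != fingerprint(content):
            changed.append(doc_id)
    removed = [doc_id for doc_id in previous if doc_id not in current]
    return {"added": sorted(added), "changed": sorted(changed), "removed": sorted(removed)}


if __name__ == "__main__":
    last_pass = {"deck.pptx": fingerprint(b"price: 100"), "memo.docx": fingerprint(b"hello")}
    this_pass = {"deck.pptx": b"price: 120", "memo.docx": b"hello", "email.eml": b"deal confirmed"}
    print(find_deltas(last_pass, this_pass))
```

The sketch says nothing about files that sit on a laptop, a phone, or inside a messaging app the crawler never sees. Those unreachable files are exactly the gap described above.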

Second, the content an employee needs to answer a business question in a timely manner can reside on an employee’s laptop or mobile phone, in a digital notebook, in a Vimeo video or one of those nifty “private” YouTube videos, behind the locked doors and specialized security systems loved by some pharma companies’ research units, in a Word document in something other than English, etc. Now suppose the content changes. The enterprise search fast talkers ignore identifying and indexing these documents with metadata that pinpoints the time of the change and who made it. Is this important? Some contract issues require this level of information access. Who asks for this stuff? How about a COTR for a billion dollar government contract?
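What the fast talkers skip is a record of each change. Here is a minimal Python sketch that assumes the system can observe each change and knows which account made it, a large assumption for content on phones or in notebooks. It keeps a version history per document, so one can ask what a file looked like on a given date and who last touched it. The IndexedDocument and VersionEntry names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class VersionEntry:
    """One observed change to a content object."""
    modified_at: datetime  # when the change was made (timezone-aware, UTC)
    modified_by: str       # account that made the change
    fingerprint: str       # content hash of this version


@dataclass
class IndexedDocument:
    """Hypothetical index entry that keeps every version, not just the latest."""
    doc_id: str
    history: List[VersionEntry] = field(default_factory=list)

    def record_change(self, modified_by: str, fingerprint: str,
                      modified_at: Optional[datetime] = None) -> None:
        """Append a version; if no time is supplied, use the current UTC time."""
        when = modified_at if modified_at is not None else datetime.now(timezone.utc)
        self.history.append(VersionEntry(when, modified_by, fingerprint))
        self.history.sort(key=lambda v: v.modified_at)  # keep chronological order

    def version_as_of(self, when: datetime) -> Optional[VersionEntry]:
        """Return the latest version at or before `when`, or None if none existed yet."""
        earlier = [v for v in self.history if v.modified_at <= when]
        return earlier[-1] if earlier else None


if __name__ == "__main__":
    deck = IndexedDocument("pricing-deck.pptx")
    deck.record_change("alice", "a1", datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc))
    deck.record_change("bob", "b2", datetime(2024, 3, 5, 16, 30, tzinfo=timezone.utc))
    print(deck.version_as_of(datetime(2024, 3, 3, tzinfo=timezone.utc)).modified_by)  # alice
```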

Third, I have heard and read that modern enterprise search systems “use,” “apply,” or “operate within” industry standard authentication systems. Sure they do, within very narrowly defined situations. If the authorization system does not work, then quite problematic things happen. At one end, an employee fails to find the information needed and makes a really bad decision. Alternatively, the employee goes on an Easter egg hunt which may or may not work, but if the egg found is good enough, then that’s used. What happens? Bad things can happen. Have you ridden in an old Pinto? Access control is a tough problem, and it costs money to solve. Enterprise search solutions, even the whiz bang cloud-centric distributed systems, implement something, which is often not the “right” thing.
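Vendors often call the step that applies permissions to results “security trimming.” Here is a minimal Python sketch that assumes each indexed document carries a copy of its access list, as group names captured at index time; the Hit and trim_results names are hypothetical. It filters results and denies by default.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Hit:
    """A search result carrying a copy of its access list captured at index time."""
    doc_id: str
    allowed_groups: FrozenSet[str]  # groups permitted to read the document


def trim_results(hits: Iterable[Hit], user_groups: FrozenSet[str]) -> List[str]:
    """Keep only hits the user may read; a hit with no known groups is denied."""
    return [h.doc_id for h in hits if h.allowed_groups & user_groups]


if __name__ == "__main__":
    hits = [
        Hit("roadmap.docx", frozenset({"engineering"})),
        Hit("payroll.xlsx", frozenset({"hr"})),
        Hit("orphan.pdf", frozenset()),  # access list never captured
    ]
    print(trim_results(hits, frozenset({"engineering", "all-staff"})))  # ['roadmap.docx']
```

The weak point is the word “copy.” If permissions change after indexing and the copy is not refreshed, the engine either hides documents a user should see or shows ones the user should not. That is one concrete way the “something” that gets implemented differs from the “right” thing.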

Fourth, and I am going to stop here, there is the problem of end-to-end encrypted messaging systems. If you think employees do not use these, I suggest you do a bit of Easter egg hunting. What about the content in those systems? You can tell me, “Our company does not use these.” I say, “Fine. I am a dinobaby, and I don’t have time to talk with you because you are so much more informed than I am.”

Why did I romp through this rather unpleasant issue in enterprise search and retrieval? The answer is, “Enterprise search remains a problematic concept.” I believe there is some litigation underway about how the problem of search can morph into a fantasy of a huge business because “we have a solution.”

Sorry. Not yet. Marketing and closing deals are different from solving findability issues in an enterprise.

Stephen E Arnold, March 27, 2024

Commercial Open Source: Fantastic Pipe Dream or Revenue Pipe Line?

March 26, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Open source is a term which strikes me as au courant. Artificial intelligence software is often described as “open source.” The idea has a bit of “do good” mixed with the idea that commercial software puts customers in handcuffs. (I think I hear Kumbaya playing faintly in the background.) Is it possible to blend the idea of free and open software with the principles of commercial software lock in? Notable open source entrepreneurs have become difficult to differentiate from run-of-the-mill technology companies. Examples include Red Hat, Elastic, and OpenAI. Ooops. Sorry. OpenAI is a different type of company. I think.

Will open source software, particularly open source AI components, end up like this private playground? Thanks, MSFT Copilot. You are into open source, aren’t you? I hope your commitment is stronger than for server and cloud security.

I had these open source thoughts when I read “AI and Data Infrastructure Drives Demand for Open Source Startups.” The source of the information is Runa Capital, now located in Luxembourg. The firm publishes a report called the Runa Open Source Start Up Index, and it is a “rosy” document. The point of the article is that Runa sees open source as a financial opportunity. You can start your exploration of the tables and charts at this link on the Runa Capital Web site.

I want to focus on some information tucked into the article, just not presented in bold face or with a snappy chart. Here’s the passage I noted:

Defining what constitutes “open source” has its own inherent challenges too, as there is a spectrum of how “open source” a startup is — some are more akin to “open core,” where most of their major features are locked behind a premium paywall, and some have licenses which are more restrictive than others. So for this, the curators at Runa decided that the startup must simply have a product that is “reasonably connected to its open-source repositories,” which obviously involves a degree of subjectivity when deciding which ones make the cut.

The word “reasonably” invokes an image of lawyers negotiating on behalf of their clients. Nothing is quite so far from the kumbaya of the “real” open source software initiative as lawyers. Just look at the licenses for open source software.

I also noted this statement:

Thus, according to Runa’s methodology, it uses what it calls the “commercial perception of open-source” for its report, rather than the actual license the company attaches to its project.

What is “open source”? My hunch is that it is whatever the lawyers and courts conclude.

Why is this important?

The talk about “open source” is relevant to the “next big thing” in technology. And what is that? ANSWER: A fresh set of money making plays.

I know that there are true believers in open source. I wish them financial and kumbaya-type success.

My take is different: Open source, as the term is used today, is one of the phrases repurposed to breathe life into what some critics call a techno-feudal world. I don’t have a dog in the race. I don’t want a dog in any race. I am a dinobaby. I find amusement in how language becomes the Teflon on which money (one hopes) glides effortlessly.

And the kumbaya? Hmm.

Stephen E Arnold, March 26, 2024

Worried about TikTok? Do Not Overlook CapCut

March 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I find the excitement about TikTok interesting. The US wants to play the reciprocity card; that is, China disallows US apps so the US can ban TikTok. How influential is TikTok? US elected officials learned first hand that TikTok users can get messages through to what is often a quite unresponsive cluster of elected officials. But let’s leave TikTok aside.

Thanks, MSFT Copilot. Good enough.

What do you know about the ByteDance cloud software CapCut? Ah, you have never heard of it. That’s not surprising because it is aimed at those who make videos for TikTok (big surprise) and other video platforms like YouTube.

CapCut has been gaining supporters like the happy-go-lucky people who published “how to” videos about CapCut on YouTube. On TikTok, CapCut short form videos have tallied billions of views. What makes it interesting to me is that it wants to phone home, store content in the “cloud”, and provide high-end tools to handle some tricky video situations like weird backgrounds on AI generated videos.

The product CapCut was previously named (I believe) JianYing or Viamaker (the story varies by source), names which mean nothing to me. The Google suggests its meanings could range from hard to paper cut out. I am not sure I buy these suggestions because Chinese is a linguistic slippery fish. Is that a question or a horse? In 2020, the app got a bit of a shove into the world outside of the estimable Middle Kingdom.

Why is this important to me? Here are my reasons for creating this short post:

- Based on my tests of the app, it has some of the same data hoovering functions of TikTok

- The data of images and information about the users provides another source of potentially high value information to those with access to the information

- Data from “casual” videos might be quite useful when the person making the video has landed a job in a US national laboratory or in one of the high-tech playgrounds in Silicon Valley. Am I suggesting blackmail? Of course not, but a release of certain imagery might be an interesting test of the videographer’s self-esteem.

If you want to know more about CapCut, try these links:

- Download (ideally to a burner phone or a PC specifically set up to test interesting software) at www.capcut.com

- Read about the company CapCut in this 2023 Recorded Future write up

- Learn about CapCut’s privacy issues in this Bloomberg story.

Net net: Clever stuff, but who is paying attention? Parents? Regulators? Chinese intelligence operatives?

Stephen E Arnold, March 18, 2024

Student Surveillance: It Is a Thing

March 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Once mobile phones were designed with cameras, cameras began showing up in nearly every other device. Installing cameras and recording devices is SOP now, and facial recognition technology will soon become just as common unless privacy advocates have their way. Students at the University of Waterloo were upset to learn that vending machines on their campus were programmed with the controversial technology. CTV News Kitchener explores how the scandal started in: “ ‘Facial Recognition’ Error Message On Vending Machine Sparks Concern At University Of Waterloo.”

A series of smart vending machines that were decorated with M&M graphics and dispensed candy were located throughout the Waterloo campus. They raised privacy concerns when a student noticed an error message referring to a facial recognition application on one machine. The machines were then removed from campus. Before they were removed, word spread quickly, and students covered a hole believed to hold a camera.

Students believed that vending machines didn’t need to have facial recognition applications. They also wondered if there were more places on campus where they were being monitored with similar technology.

The vending machines are owned by MARS, an international candy company, and manufactured by Invenda. The MARS company didn’t respond to queries but Invenda shared more information about the facial recognition application:

“Invenda also did not respond to CTV’s requests for comment but told Stanley in an email ‘the demographic detection software integrated into the smart vending machine operates entirely locally.’ ‘It does not engage in storage, communication, or transmission of any imagery or personally identifiable information,’ it continued.

According to Invenda’s website, the Smart Vending Machines can detect the presence of a person, their estimated age and gender. The website said the ‘software conducts local processing of digital image maps derived from the USB optical sensor in real-time, without storing such data on permanent memory mediums or transmitting it over the Internet to the Cloud.’”

Invenda also said the software complies with the European Union’s General Data Protection Regulation, but that doesn’t mean it is legal in Canada. The University of Waterloo has asked that the vending machines be removed from campus.

Net net: Cameras will proliferate and have smart software. Just a reminder.

Whitney Grace, March 1, 2024

Google Gems for the Week of 19 February, 2024

February 27, 2024

This essay is the work of a dumb humanoid. No smart software required.

This week’s edition of Google Gems focuses on a Hope Diamond and a handful of lesser stones. Let’s go.

THE HOPE DIAMOND

In the chaos of the AI Gold Rush, horses fall and wizard engineers realize that they left their common sense in the saloon. Here’s the Hope Diamond from the Google.

The world’s largest online advertising agency created smart software with a lot of math, dump trucks filled with data, and wizards who did not recall that certain historical figures in the US were not of color. “Google Says Its AI Image-Generator Would Sometimes Overcompensate for Diversity,” an Associated Press story, explains in very gentle rhetoric that Google’s super sophisticated brain and DeepMind would get the race of historical figures wrong. I think this means that Ben Franklin could look like a Zulu prince or George Washington might have some resemblance to Rama (blue skin, bow, arrow, and snappy hat).

My favorite search and retrieval expert Prabhakar Raghavan (famous for his brilliant lecture in Paris about the now-renamed Bard) indicated that Google’s image rendering system did not hit the bull’s eye. No, Dr. Raghavan, the digital arrow pierced the micrometer-thin plastic wrap of Google’s super sophisticated, quantum supremacy, gee-whiz technology.

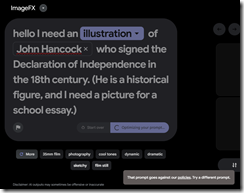

The message I received from Google when I asked for an illustration of John Hancock, an American historical figure. Too bad, because this request goes against Google’s policies. Yep, wizards infused with the high school science club management method.

More important, however, was how Google’s massive stumble complemented OpenAI’s ChatGPT wonkiness. I want to award the Hope Diamond Award for AI Ineptitude to both Google and OpenAI. But, alas, there is just one Hope Diamond. The award goes to the quantumly supreme outfit Google.

[Note: I did not quote from the AP story. Why? Years ago the outfit threatened to sue people who used its stories’ words. Okay, no problemo, even though the newspaper for which I once worked supported this outfit in the days of “real” news, not recycled blog posts. I listen, but I do not forget some things. I wonder if the AP knows that Google Chrome can finish a “real” journalist’s sentences for he/him/she/her/it/them. Read about this “feature” at this link.]

Here are my reasons:

- Google is in catch-up mode and like those in the old Gold Rush, some fall from their horses and get up close and personal with hooves. How do those affect the body of a wizard? I have never fallen from a horse, but I saw a fellow get trampled when I lived in Campinas, Brazil. I recall there was a lot of screaming and blood. Messy.

- Google’s arrogance and intellectual sophistication cannot prevent incredible gaffes. A company with a mixed record of managing diversity, equity, etc. has demonstrated why Xooglers like Dr. Timnit Gebru find the company “interesting.” I don’t think Google is interesting. I think it is disappointing, particularly in the racial sensitivity department.

- For years I have explained that Google operates via the high school science club management method. What’s cute when one is 14 loses its charm when those using the method have been at it for a quarter century. It’s time to put on the big boy pants.

OTHER LITTLE GEMMAS

The previous week revealed a dirt trail with some sharp stones and thorny bushes. Here’s a quick selection of the sharpest and thorniest:

- The Google is running webinars to inform publishers about life after their wonderful long-lived cookies. Read more at Fipp.com.

- Google has released a small model as open source. What about the big model with the diversity quirk? Well, no. Read more at the weird green Verge thing.

- Google cares about AI safety. Yeah, believe it or not. Read more about this PR move on Techcrunch.

- Web search competitors will fail. This is a little stone. Yep, a kidney stone for those who don’t recall Neeva. Read more at Techpolicy.

- Did Google really pay $60 million to get that outstanding Reddit content? Wow. Maybe Google looks at different subreddits than my research team does. Read more about it in 9 to 5 Google.

- What happens when an uninformed person uses the Google Cloud? Answer: Sticker shock. More about this estimable method in The Register.

- Some spoilsport finds traffic lights informed with Google’s smart software annoying. That’s hard to believe. Read more at this link.

- Google pointed out in a court filing that DuckDuckGo was a meta search system (that is, a search interface to other firms’ indexes) and Neeva was a loser crafted by Xooglers. Read more at this link.

No Google Hope Diamond report would be complete without pointing out that the online advertising giant will roll out its smart software to companies. Read more at this link. Let’s hope the wizards figure out that historical figures often have quite specific racial characteristics like Rama.

I wanted to include an image of Google’s rendering of a signer of the Declaration of Independence. What you see in the illustration above is what I got. Wow. I have more “gemmas”, but I just don’t want to present them.

Stephen E Arnold, February 27, 2024