Nuclia: The Solution to the Enterprise Search Problem?

April 21, 2022

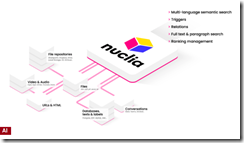

I read an interesting article called “Spanish Startup Nuclia Gets $5.4M to Advance Unstructured Data Search.” The article includes an illustration, presumably provided by Nuclia, which depicts search as a super app accessed via APIs.

Source: Silicon Angle and possibly Nuclia.com. Consult the linked story to see the red lines zip around without bottlenecks. (What? Bottlenecks in content processing, index updating, and query processing. Who ever heard of such a thing?)

Here are some of the highlights — assertions is probably a better word — about the Nuclia technology:

- The system is “AI powered.”

- Nuclia can “connect to any data source and automatically index its content regardless of what format or even language it is in.”

- The system can “discover semantic results, specific paragraphs in text and relationships between data. These capabilities can be integrated in any application with ease.”

- Nuclia can “detect images within unstructured datasets.”

- The cloud-based service can “say one video is X% similar to another one, and so on.”
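
What might “X% similar” look like in practice? The write up does not say how Nuclia computes the number. One common approach with vector embeddings is cosine similarity, so here is a minimal sketch under that assumption. The vectors and the function names are made up for illustration; none of this is Nuclia's API.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so call it "not similar"
    return dot / (norm_a * norm_b)


def percent_similar(a, b):
    """Express cosine similarity as a percentage, clamping negatives to 0."""
    return max(0.0, cosine_similarity(a, b)) * 100


# Hypothetical three-dimensional embeddings for two videos (assumption:
# a real system would use hundreds of dimensions produced by a model).
video_a = [1.0, 0.0, 1.0]
video_b = [1.0, 1.0, 0.0]

print(f"{percent_similar(video_a, video_b):.0f}% similar")
```

The percentage is only as meaningful as the embeddings behind it, which is where the “AI powered” part has to earn its keep.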

What makes the Nuclia approach tick? There are two main components:

- The Nuclia vector database which is available via GitHub

- The application programming interface.

The news hook for the search story is that investors have put $5.4 million in seed funding into the company.

Algolia wants to reinvent search. Maybe Nuclia has? Google is search, but it may be intrigued by the assertions about vector embeddings and finding similarities which may be otherwise overlooked. The idea is that the ad for Liberty Mutual might be displayed in YouTube videos about seized yachts by business wizards on one or more lists of interesting individuals. Elastic may want to poke around Nuclia in a quest for adding some new functionality to its search system.

Enterprise search seems to be slightly less dormant than it has been.

Stephen E Arnold, April 21, 2022

Enterprise Search Vendors: Sure, Some Are Missing But Does Anyone Know or Care?

April 20, 2022

I came across a site called Software Suggest and its article “Coveo Enterprise Search Alternatives.” Wow. What’s a good word for bad info?

The system generated 29 vendors in addition to Coveo. The options were not in alphabetical order or any pattern I could discern. What outfits are on the list? Here are the enterprise search vendors for February 2022, the most recent incarnation of this list. My comments are included in parentheses for each system. By the way, an alternative is picking from two choices. This is more correctly labeled “options.” Just another indication of hippy dippy information about information retrieval.

AddSearch (Web site search which is not enterprise search)

Algolia (a publicly traded search company hiring to reinvent enterprise search just as Fast Search & Transfer did more than a decade ago)

Bonsai.io (another Elasticsearch repackager)

Coveo (no info, just a plea for comments)

C Searcher (from HNsoft in Portugal; desktop search last updated in 2018 according to the firm’s Web site)

CTX Search (the expired certificate does not bode well)

Datafari (maybe open source? chat service has no action since May 2021)

Expertrec Search Engine (an eCommerce solution, not an enterprise search system)

Funnelback (the name is now Squiz. The technology is Australian)

Galaktic (a Web site search solution from Taglr, an eCommerce search service)

IBM Watson (yikes)

Inbenta (A Catalan outfit which shapes its message to suit the purchasing climate)

Indica Enterprise Search (based in the Netherlands but the name points to a cannabis plant)

Intrasearch (open source search repackaged with some spicy AI and other buzzwords)

Lateral (the German company with an office in Tasmania offers an interface similar to that of Babel Street and Geospark Analytics for an organization’s content)

Lookeen (desktop search for “all your data”. All?)

OnBase ECM (this is a tricky one. ISYS Search sold to Lexmark. Lexmark sold to Hyland. Hyland appears to be the proud possessor of ISYS Search and has grafted it to an enterprise content management system)

OpenText (the proud owner of many search systems, including Tuxedo and everyone’s fave BRS Search)

Relevancy Platform (three years ago, Searchspring Relevancy Platform was acquired by Scaleworks which looks like a financial outfit)

Sajari (smart site search for eCommerce)

SearchBox Search (Elasticsearch from the cloud)

Searchify (a replacement for IndexTank. Who?)

SearchUnify (looks like a smart customer support system, a pitch used by Coveo and others in the sector)

Site Search 360 (not an enterprise search solution in my opinion)

SLI Systems (eCommerce search, not enterprise search, but I could be off base here)

Team Search (TransVault searches Azure Tenancy set ups)

Wescale (mobile eCommerce search)

Wizzy (the name is almost as interesting as the original Purple Yogi system and another eCommerce search system)

Wuha (not as good a name as Purple Yogi. A French NLP search outfit)

X1 Search (from Idea Labs, X1 is into eDiscovery and search)

This is quite an incomplete and inconsistent list from Software Suggest. It is obvious that there is considerable confusion about the meaning of “enterprise search.” I thought I provided a useful definition in my book “The Landscape of Enterprise Search,” published by Panda Press a decade ago. The book, like me, is not too popular or well known. As a result, the blundering around in eCommerce search, Web site search, application specific search, and enterprise search is painful. Who cares? No one at Software Suggest I posit.

My hunch is that this is content marketing for Coveo. Just a guess, however.

Stephen E Arnold, April xx, 2022

DuckDuckGo and Filtering

April 18, 2022

I read “DuckDuckGo Removes Pirate Websites from Search Results: No More YouTube-dl?” The main thrust of the story is:

The private search engine, DuckDuckGo, has decided to remove pirate websites from its official search results.

DuckDuckGo is a metasearch engine. These are systems which may do some focused original spidering, but may send a user’s query to partner indexes. Then the results are presented to the user (which may be a human or a software robot). Some metasearch systems like Vivisimo invested some intellectual cycles in de-duplicating the results. (A helpful rule of thumb is to assume a 50 to 70 percent overlap in results from one Web search system to another.) IBM bought Vivisimo, and I have to admit that I have no idea what happened to the de-duplicating technology because … IBM.
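
The merge-and-de-duplicate step can be sketched in a few lines. This is a minimal illustration, assuming each partner index returns a ranked list of URLs. It is not how DuckDuckGo or Vivisimo worked; the URLs, the function names, and the crude normalization rule are all made up for the example.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Reduce a URL to a crude comparison key.

    Assumption for this sketch: scheme, a leading 'www.', a trailing
    slash, and any query string do not distinguish two results.
    """
    parts = urlsplit(url.strip())
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    return host + parts.path.rstrip("/")


def merge_results(result_lists):
    """Merge ranked lists in order, keeping the first copy of each result."""
    seen = set()
    merged = []
    for results in result_lists:
        for url in results:
            key = normalize(url)
            if key not in seen:
                seen.add(key)
                merged.append(url)
    return merged


def overlap_percent(first, second):
    """Share of the first list's results that also appear in the second."""
    first_keys = {normalize(u) for u in first}
    second_keys = {normalize(u) for u in second}
    if not first_keys:
        return 0.0
    return 100 * len(first_keys & second_keys) / len(first_keys)


# Hypothetical results from two partner indexes for the same query.
partner_a = [
    "https://www.example.com/pizza/",
    "http://example.org/menu",
    "https://example.net/reviews",
]
partner_b = [
    "http://example.com/pizza",
    "https://example.org/menu/",
    "https://example.edu/history",
]

merged = merge_results([partner_a, partner_b])
print(len(merged), "unique results")
print(f"{overlap_percent(partner_a, partner_b):.0f}% overlap")
```

In this toy case two of the three results from the first partner also come back from the second, which lands inside the 50 to 70 percent rule of thumb. Real de-duplication has to cope with mirrors, redirects, and near-duplicate pages, which is where the intellectual cycles go.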

There are more advanced metasearch systems. One example is Silobreaker, a system influenced by some Swedish wizards. The difference between a DuckDuckGo and an industrial strength system, in my opinion, is significant. Web search is an opaque service. Many behind-the-scenes actions take place, and some of the most important are not publicly disclosed in a way that makes sense to a person looking for pizza.

My questions: “Is DuckDuckGo actively filtering?” And “Why did this take so long?” And, “Is DuckDuckGo virtue signaling after its privacy misstep, or is the company snagged in a content marketing bramble?”

I don’t know. My thoughts are:

- The editorial policies of metasearch systems should be disclosed; that is, we do this and we do that.

- Metasearch systems should disclose that many results are recycled and the provenance, age, and accuracy of the results are unknown to the metasearch provider.

- Metasearch systems should make clear exactly what the benefits of using the metasearch system are and why the providers of some search results are not as beneficial to the user; for example, which result is an ad (explicit or implicit), sponsored, etc.

Will metasearch systems embrace some of these thoughts? Nah. Those who use “free” Web search systems are in a cloud of unknowing.

Stephen E Arnold, April 18, 2022

Google Hits Microsoft in the Nose: Alleges Security Issues

April 15, 2022

The Google wants to be the new Microsoft. Google wanted to be the big dog in social media. How did that turn out? Google wanted to diversify its revenue streams so that online advertising was not the main money gusher. How did that work out? Now there is a new dust up, and it will be more fun than watching the antics of coaches of Final Four teams. Go, Coach K!

The real news outfit NBC published “Attacking Rival, Google Says Microsoft’s Hold on Government Security Is a Problem.” The article presents as actual factual information:

Jeanette Manfra, director of risk and compliance for Google’s cloud services and a former top U.S. cybersecurity official, said Thursday that the government’s reliance on Microsoft — one of Google’s top business rivals — is an ongoing security threat. Manfra also said in a blog post published Thursday that a survey commissioned by Google found that a majority of federal employees believe that the government’s reliance on Microsoft products is a cybersecurity vulnerability.

There you go. A monoculture is vulnerable to parasites and other predations. So what’s the fix? Replace the existing monoculture with another one.

That’s a Googley point of view from Google’s cloud services unit.

And there are data to back up this assertion, at least data that NBC finds actual factual; for instance:

Last year, researchers discovered 21 “zero-days” — an industry term for a critical vulnerability that a company doesn’t have a ready solution for — actively in use against Microsoft products, compared to 16 against Google and 12 against Apple.

I don’t want to be a person who dismisses the value of my Google mouse pad, but I would offer:

- How are the anti ad fraud mechanisms working?

- What’s the issue with YouTube creators’ allegations of algorithmic oddity?

- What’s the issue with malware in approved Google Play apps?

- Are the incidents reported by Firewall Times resolved?

Microsoft has been reasonably successful in selling to the US government. How would the US military operate without PowerPoint slide decks?

From my point of view, Google’s aggressive security questions could be directed at itself. Does Google do the “know thyself” thing? Not when it comes to money is my answer. My view is that none of the Big Tech outfits are significantly different from one another.

Stephen E Arnold, April 15, 2022

Teams Tracking: Are You Working at Triple Peak?

April 14, 2022

I installed a new version of Microsoft Office. I had to spend some time disabling the Microsoft Cloud, Outlook, and Teams, plus a number of other odds and ends. Who in my office uses Publisher? Sorry, not me. In fact, I knew only one client who used Publisher and that was years ago. We converted that lucky person to an easier to use and more stable product.

We have tried to participate in Teams meetings. Unfortunately the system crashes on my Mac Mini, my Intel workstation, and my AMD workstation. I know the problem is obviously the fault of Apple, Intel, and AMD, but it would be nice if the Teams software would allow me to participate in a meeting. The workaround in my office is to use Zoom. It plays nice with my machines, my mostly secure set up, and the clumsy finger of my 77 year old self.

I provide the context so that you will understand my reaction to “Microsoft Discovers Triple Peak Work Day for Its Remote Employees.” As you may know, Microsoft has been adding features to Teams since the pandemic lit a fire under what was once a software service reserved for financial meetings and some companies that wanted everyone no matter what to be in a digital face to face meeting. Those were super. I did some work for an early video conferencing player. I think it was called Databeam. Yep, perfect for kids who wanted to take a virtual class, not a presentation about the turbine problems at Lockheed Martin.

Microsoft’s featuritis has embraced surveillance. I won’t run down the tools available to an “administrator” with appropriate access to a Teams set up for a company. I want to highlight the fact that Microsoft shared with ExtremeTech some information I find fascinating; to wit:

… when employees were in the office, it found “knowledge workers” usually had two periods of peak productivity: before lunch and after lunch. However, with everyone working from home there’s now a third period: late at night, right before bedtime.

My workday has for years begun about 6 am. I chug along until lunch. I then chug along until dinner. Then I chug along until I go to sleep at 10 pm. I like to think that my peak times are from 6 am to 9 am, from 10 am to noon, from 1:30 pm to 3 pm, and from 3:30 pm to 6 pm. I have been working for more than 50 years, and I am happy to admit that I am an old fashioned Type A person. Obviously Microsoft does not have many people like me in its sample. As I recall from my Booz, Allen & Hamilton days, the productive-in-the-morning crowd was a large cohort, thousands in fact. But not in the MSFT sample. These are lazy dogs it seems.

Let’s imagine you are a Type A manager. You have some employees who work from home or from a remote location like a client’s office in Transnistria which you may know as the Pridnestrovian Moldavian Republic. How do you know your remotes are working at their peak times? You monitor the wily creatures: Before lunch, after lunch, and before bed or maybe before a trip to a disco in downtown Tiraspol.

How does this finding connect with Teams? With everyone plugged in from morning to night, the Type A manager can look at meeting attendance, participation, side talks, and other detritus sucked up by Teams’ log files. Match up the work with the times. Check to see if there are three ringing bells for each employee. Bingo. Another HR metric to use to reward or marginalize a human personnel asset.

I will just use Zoom and forget about people who do not work when I do.

Stephen E Arnold, April 14, 2022

Amazon: Is the Company Losing Control of Essentials?

April 11, 2022

Here’s a test question: Which is the computer product in the image below?

[Images: [a] and [b]]

If you picked [a], you qualify for work at TopCharm, an Amazon service located in lovely Brooklyn at 3912 New Utrecht Avenue, zip 11219. Item [b] is the Ryzen cpu I ordered, paid for, and expected to arrive. TopCharm delivered: Panties, not the CPU. Is it easy to confuse a Ryzen 5900X with these really big, lacy, red “unmentionables”? One of my team asked me, “Do you want me to connect the red lace cpu to the ASUS motherboard?”

Ho ho ho.

What does Clustrmaps.com say about this location?

This address has been used for business registration by Express Repair & Towing Inc. The property belongs to Lelah Inc. [Maybe these are Lelah’s underwear? And Express Repair & Towing? Yep, that sounds like a vendor of digital panties, red and see-through at that.]

One of my team suggested I wear the garment for my lecture in April 2021 at the National Cyber Crime Conference. My wife wanted to know if Don (one of my technical team) likes red panties. A neighbor’s college-attending son asked, “Who is the babe who wears that? Can I have her contact info?”

My sense of humor about this matter is officially exhausted.

Several observations about this Amazon transaction:

- Does the phrase “too big to manage” apply in this situation to Amazon’s ecommerce business?

- What type of stocking clerk confuses a high end CPU with cheap red underwear?

- What quality assurance methods are in place to protect a consumer from cheap jokes and embarrassment when this type of misstep occurs?

Has Amazon lost control of the basics of online commerce? If one confuses CPUs with panties, how is Amazon going to ensure that its Government Cloud services for the public sector stay online? Quite a misstep in my opinion. Is this cyber fraud, an example of management lapses, a screwed up inventory system, or a perverse sense of humor?

Stephen E Arnold, April 11, 2022

Google: Nosing into US Government Consulting

April 4, 2022

I spotted an item on Reddit called “Google x Palantir.” Let’s assume there’s a smidgen of truth in the post. The factoid is in a comment about Google’s naming Stephen Elliott as its head of artificial intelligence solutions for the Google public sector unit. (What happened to the wizard once involved in this type of work? Oh, well.)

The interesting item for me is that Mr. Elliott will have a particular focus on “leveraging the Palantir Foundry platform.” I thought that outfits like Praetorian Digital (now Lexipol) handled this type of specialist consulting and engineering.

What strikes me as intriguing about this announcement is that Palantir Foundry will work on the Google Cloud. Amazon is likely to be an interested party in this type of Google initiative.

Amazon has sucked up a significant number of product-centric searches. Now the Google wants to get into the “make Palantir work” business.

Plus, Google will have an opportunity to demonstrate its people management expertise, its ability to attract and retain a diverse employee group, and its ability to put some pressure on the Amazon brachial nerve.

How will Microsoft respond?

The forthcoming Netflix mockumentary “Mr. Elliott Goes to Washington” will fill someone’s hunger for a reality thriller.

And what if the Reddit post is off base? Hey, mockumentaries can be winners. Remember “This Is Spinal Tap”?

Stephen E Arnold, April 4, 2022

Google: The Quantum Supremacy Turtling

April 1, 2022

Okay, April Fools’ Day.

“Google Wants to Win the Quantum Computing Race by Being the Tortoise, Not the Hare” explains that the quantum supremacy “winner” which captured “time crystals” has a new angle:

it’s clear that Google — or, to be more accurate, its parent company Alphabet — has its sights set on being the world’s premiere quantum computing organization.

Machines? Nah, think cloud, gentle reader. Google has it together, but the non Googley may struggle to get the picture. The write up says:

Parent company Alphabet recently starbursted its SandboxAQ division into its own company, now a Google sibling. It’s unclear exactly what SandboxAQ intends to do now that it’s spun out, but it’s positioned as a quantum-and-AI-as-a-service company. We expect it’ll begin servicing business clients in partnership with Google in the very near-term.

But? The write up says:

We can safely assume we haven’t seen the last of Google’s quantum computing research breakthroughs, and that tells us we could very well be living in the moments right before the slow-and-steady tortoise starts to make up ground on the speedy hare.

Maybe turtle? An ectotherm like Googzilla? Eye glass frames with a relevant Google product review? So many questions.

Stephen E Arnold, April 1, 2022

Google: Managing with Flair

March 24, 2022

I had forgotten there was a Google employee survey. I read “Googlegeist Survey Reveals That Google Workers Are Increasingly Unhappy about Compensation, Promotion, and More.” Unhappy employees suggest that the Google zeitgeist is out of joint if the information in the write up is accurate.

I noted this passage:

In the latest Googlegeist or the annual Google survey, the company noticed that there was a growing trend of “increasingly unhappy” workers over compensation and other key issues.

How could those admitted to the Walt Disney Wonderland of technology and doing good be unhappy? How could the senior managers craft an artificial environment at odds with the needs of humanoids?

Is there a silver lining to the clouds hanging over the Google? Yes. I learned:

The survey which took place two months ago, yielded the most desirable results when it comes to advertisements, cloud, and searches. Moreover, the highest score came from the values and mission of the company. However, it should be noted that the lowest remark tackled the context of execution and compensation on the part of the labor force.

And how did the management of the firm respond? According to the write up:

Addressing the survey results is considered to be “one of the most important ways” for evaluation, as CEO Sundar Pichai said during an announcement via email. This would help the company assess the willingness and desirability of the workers to work inside the firm.

There you go. Management insight. Be happy or begone.

Stephen E Arnold, March 24, 2022

Google and Mandiant: Will Google Be Able to Handle a People Business?

March 11, 2022

Talk about Google’s purchasing Mandiant is a hot topic. I want to comment about Protocol’s article “Google Wants to Be the Full-Service Security Cloud.” The write up is one of several mentioning an important fact:

The company currently has 2,200 employees, including 600 consultants and 300 intelligence analysts who respond to security breaches.

Mandiant, therefore, has about half of its employees performing consultant type work. Not long ago, Google benefited from the sale of Recorded Future, a company which was in the cyber security business AND had a capability that Google had not previously possessed. What was Recorded Future’s magic ingredient? My answer is, “Ability to index by time.” There were other Recorded Future capabilities. In-Q-Tel found the company interesting as well.

Now the Google is embracing the consultative business in which Mandiant has done well. How will the Google management method apply to the individuals who make up about half the Mandiant work force?

If the past is an indication, Google does okay when the staff are like Google’s previous and current management. Google does less well when the professionals are less like those high school science club members who climbed the ladder at the Google.

To sum up: This deal is going to be interesting to watch. Microsoft is likely to be keen on following the tie up. Mandiant is, as you may recall, the outfit which blew the whistle on the SolarWinds misstep. Microsoft was snagged in the subsequent forensic analyses. Plus, the cyber security industry is enjoying some favorable winds. The issue, however, is that as threats become breaches, the flaws of the present approach to cyber security become more obvious. Online advertising, cloud computing, and cyber security: a delightful concoction or a volatile mix?

Stephen E Arnold, March 11, 2022