Databases: Old Wine, New Bottles, and Now Updated Labels with More Jargon and Buzzwords

June 29, 2021

I read “It’s the Golden Age of Databases. It Can’t Last.” The subtitle is fetching too:

Startups are reaping huge funding rounds. But money alone won’t be enough to top the current market leaders.

I think that it is important to keep in mind that databases once resided within an organization. In 1980, I had my employer’s customer database in a small closet in my office. I kept my office locked, and anyone who needed access had to find me, set up an appointment, and do a lookup. Was I paranoid? Yep, and I suppose that’s why I never went to work for flexi-think outfits intellectually allied with Microsoft or SolarWinds, among others.

Today the cloud is the rage. Why? It’s better, faster, and cheaper. Just pick any two and note that I did not include “more secure.” If you want some color about the “cost” of the cloud pursuit fueled by cost cutting, check out this high-flying financial outfit’s essay “Andreessen Horowitz Partner Martin Casado Says the Cost of Cloud Computing Is a $100 Billion Drag on the Biggest Software Companies, Sparking a Huge Debate across the Industry.” Some of the ideas are okay; others strike me as similar to those suggesting the Egyptian pyramids are big batteries. The point is that many companies embraced the cloud in search of reducing the cost and hassle of on-premises systems and people.

One of the upsides of the cloud is the crazy marketing assertion that a bunch of disparate data can be dumped into a “cloud system” and become instantly available for Fancy Dan analytics. Yeah, and I have a bridge to sell you in Brooklyn. I accept PayPal too.

The “Golden Age” write up works overtime to make the new databases exciting for investors who want a big payout. I did note this statement in the write up, which is chock-a-block with vendor names:

Ultimately, Databricks and Snowflake’s main competitors probably aren’t each other, but rather Microsoft, AWS and Google.

Do you think it would be helpful to mention IBM and Oracle? I do.

Here’s another important statement from the write up:

One thing is certain: The big data revolution isn’t slowing down. And that means the war over managing it and putting the information to use will only get more fierce.

Why the “fierce”? Perhaps it will be the investors in the whizzy new “we can federate and be better, faster, and cheaper” outfits who put the pedal to the metal. The reality is that big outfits license big brands. Change is time consuming and expensive. And the seamless data lakes with data lake houses on them? Probably still for sale after owners realize that data magic is expensive, time consuming, and fiddly.

But rah rah is solid info today.

Stephen E Arnold, June 29, 2021

Microsoft Teams: More Search, Better Search? Sure

June 23, 2021

How about the way Word handles images in a text document? Don’t you love the numbering in a Word list? And what about those templates?

Microsoft loves features. It is no surprise that Teams is collecting features the way my French bulldog pulls in ticks on a warm morning in the woods in June.

Here is an interesting development in search. We learn from a very brief write-up at MS Power User that “Microsoft Search Will Soon Be Able to Find Teams Meeting Recordings Based on What Was Said.” It occurs as the company moves MS Teams recordings to OneDrive and SharePoint. (We note Zoom offers similar functionality if one enables audio transcription and hits “record” before the meeting.) Writer Surur reports:

“Previously, Teams meeting recordings were only searchable based on the Title of the meetings. You will now be easily able to find Teams meeting recordings based on not just the Title of the meeting, but also based on what was said in the meeting, via the transcript, as long as Live Transcription was enabled. Note however that only the attendees of the Teams meeting will have the permission to view these recordings in the search results and playback the recordings. These meetings will now be discoverable in eDiscovery as well, via the transcript. If you don’t want these meetings to be discoverable in Microsoft Search or eDiscovery via transcripts, you can turn off Teams transcription.”
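What does “searchable via the transcript, but only for attendees” mean in practice? Here is a minimal Python sketch under a toy data model. The `Recording` class, `tokenize`, and `search` are hypothetical names of my own; this is not how Microsoft Search is actually built, just an illustration of permission-filtered transcript search.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

PUNCTUATION = ".,!?;:\"'"


@dataclass
class Recording:
    title: str
    attendees: Set[str]
    transcript: Optional[str] = None  # None when Live Transcription was off


def tokenize(text: str) -> Set[str]:
    """Lowercase, split on whitespace, and trim surrounding punctuation."""
    words = (w.strip(PUNCTUATION).lower() for w in text.split())
    return {w for w in words if w}


def search(recordings: List[Recording], query: str, user: str) -> List[str]:
    """Return titles of recordings the user attended that contain every query term."""
    terms = tokenize(query)
    hits = []
    for rec in recordings:
        if user not in rec.attendees:
            continue  # permission check first: non-attendees never see the hit
        words = tokenize(rec.title)
        if rec.transcript is not None:
            words |= tokenize(rec.transcript)  # what was said becomes searchable
        if terms <= words:
            hits.append(rec.title)
    return hits
```

The attendee check runs before any text matching, so a non-attendee never learns that a matching recording exists. A recording made with transcription turned off remains findable only by its title, which matches the opt-out described in the quote.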

This is a handy feature. It does mean, however, that participants will want to be even more careful what they express in a Teams meeting. Confirmation of any surly utterances will be just a search away. How does the system index an expletive when the dog barks or a Teams session hangs?

Cynthia Murrell, June 23, 2021

TikTok: What Is the Problem? None to Sillycon Valley Pundits.

June 18, 2021

I remember making a comment in a DarkCyber video about the lack of risk TikTok posed to its users. I think I heard a couple of Sillycon Valley pundits suggest that TikTok is no big deal. Chinese links? Hey, so what. These are short videos. Harmless.

Individuals like this are lost in clouds of unknowing with a dusting of gold and silver naive sparkles.

“TikTok Has Started Collecting Your ‘Faceprints’ and ‘Voiceprints.’ Here’s What It Could Do With Them” provides some color for parents whose children are probably tracked, mapped, and imaged:

Recently, TikTok made a change to its U.S. privacy policy, allowing the company to “automatically” collect new types of biometric data, including what it describes as “faceprints” and “voiceprints.” TikTok’s unclear intent, the permanence of the biometric data and potential future uses for it have caused concern.

Well, gee whiz. The write up is pretty good, but it leaves out a few uses for these types of data:

- Cross correlate the images with other data about a minor, young adult, college student, or aging lurker

- Feed the data into analytic systems so that predictions can be made about the “flexibility” of certain individuals

- Cluster young people into egg cartons so fellow travelers and their weaknesses can be exploited for nefarious or really good purposes.

Will the Sillycon Valley real journalists get the message? Maybe if I convert this to a TikTok video.

Stephen E Arnold, June 18, 2021

Google Wants to Do Better: Read These Two Articles for Context

June 10, 2021

You will need to read these two articles before you scan my observations.

The first write up is “How an Ex Googler Turned Artist Hacked Her Work to the Top of Search Results.” This is a case example of considerable importance, at least to me and my research team. The methods of word use designed to bond to Google’s internal receptors are the secret sauce of search engine optimization experts. But here is a Xoogler manipulating Google’s clueless methods.

The second article is “Google Seeks to Break Vicious Cycle of Online Slander.” Ignore the self-praise of the Gray Lady. The main point is that Google is going to take action to deal with the way in which its exemplary smart software handles “slander.” My interpretation is that Google has been converted from do gooder to the digital equivalent of Hakan Ayik, the individual the Australian Federal Police converted into the ultimate insider. The similarity is important, at least to me.

Don’t agree with my interpretation? No problem. Nevertheless, I will offer my observations:

First, after 20 years of obfuscation, it is clear that the fragility and exploitability of Google’s smart software are known to the author of “How an Ex Googler Turned Artist Hacked…”. Therefore, Google’s willful blind spots are not a secret to about 100,000 full time equivalent Googlers.

Second, Google – instead of taking direct, immediate action – once again is doing the “ask forgiveness” thing with words of assurance. Actions speak louder than words. Maybe this time?

Third, neither of the referenced articles speaks bluntly and clearly about the danger that mishandling meaning poses. Forget big, glittery issues like ethics or democracy. Think manipulation and becloudization.

Stephen E Arnold, June 10, 2021

High School Management Method: Blame a Customer

June 9, 2021

I noted another allegedly true anecdote. If the information is correct, gentle reader, we have another example of the high school science club management method. Think acne, no date for the prom, and a weird laugh type of science club. Before you get too excited, yes, I was a member of my high school’s science club and I think an officer as well as a proponent of the HSSC approach to social interaction. Proud am I.

“Fastly Claims a Single Customer Responsible for Widespread Internet Outage” asserts:

The company is now claiming the issue stemmed from a bug and one customer’s configuration change. “We experienced a global outage due to an undiscovered software bug that surfaced on June 8 when it was triggered by a valid customer configuration change,” Nick Rockwell, the company’s SVP of engineering and infrastructure wrote in a blog post last night. “This outage was broad and severe, and we’re truly sorry for the impact to our customers and everyone who relies on them.”

Yep, a customer using the Fastly cloud service.

Two observations:

- Unnoticed flaws will be found and noticed, maybe exploited. Fragility and vulnerability are engineered in.

- Customer service is likely to subject the individual to an inbound call loop. Take that, you valued customer.

And what about Amazon’s bulletproof, super redundant, failover whiz bang system? Oh, it failed for users.

Yep, high school science club thinking says, “We did not do it.” Yeah.

Stephen E Arnold, June 9, 2021

Google: Do What We Say, Ignore What We Do, Just Heel!

June 8, 2021

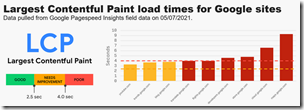

If this Reddit diagram is on the money, we have a great example of how Google management goes about rule making. The post is called “Google Can’t Pass Its Own Page Speed Test.” The post was online on June 5, 2021, but by the time Beyond Search posts this short item, that Reddit post may be tough to find. Why? Oh, because.

There are three grades Dearest Google automatically assigns to those who put content online. Some people get green badges (just like in middle school). Some warrant yellow badges (no, I won’t mention a certain shop selling cloth patches in the shape of pentagons with pointy things). And some get red badges (red, like the scarlet letter, and you know what that meant in Puritan New England a relatively short time ago).
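The badge business boils down to thresholds on a 0 to 100 score. Here is a minimal Python sketch, assuming the bands Google documents for the Lighthouse performance score (90 to 100 good, 50 to 89 needs improvement, 0 to 49 poor). The `badge` function is a hypothetical name, and the scores in the usage loop are illustrations, not measurements of any Google property.

```python
def badge(score: int) -> str:
    """Map a 0-100 performance score to a colour band.

    Assumes the Lighthouse bands: 90-100 good, 50-89 needs improvement,
    0-49 poor. The middle band is called "yellow" here, as in the post.
    """
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 90:
        return "green"
    if score >= 50:
        return "yellow"
    return "red"


# Hypothetical scores, not measurements of any real site.
for site, score in [("example-a", 95), ("example-b", 72), ("example-c", 31)]:
    print(site, badge(score))
```

Note how thin the line is: a single point separates a yellow 50 from a red 49.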

Notice that these Google sites get the red badge of high school science club mismanagement recognition:

- Google Translate

- Google’s site for its loyal and worshipful developers

- Google’s online store where you can buy tchotchkes

- The Google Cloud which has dreams of crushing the competitors like Amazon and Microsoft into non-coherent photons

- Google Maps which I personally find almost impossible to use due to the effort to convert a map into so much more than a representation of a territory. Perhaps a Google Map is not the territory? Could it be a vessel for advertising?

There are three Google services which get the yellow badge. I find the yellow badge thing very troubling. Here they are:

- YouTube, an outstanding collection of content recommended by a super duper piece of software and a giant hamper for online advertising of Grammarly, chicken sandwiches, insurance, and so much more for the consumers of the world

- Google Trends. This is a service which reveals a teeny tiny slice of the continent of data it seems the Alphabet Google thing possesses

- The Google blog. That is a font of wisdom.

Observations:

- Like Google’s other mandates, it appears that those inside the company responsible for Google’s public face either don’t know the guidelines or simply don’t care.

- Like AMP, this is an idea designed to help out Google. Making everyone go faster reduces costs for the Google. Who wants to help Google reduce costs? I sure do.

- Google’s high school science club management methods continue to demonstrate their excellence.

What happens if a non-Google Web site doesn’t go fast? If you think you will get traffic from Google the way you did in the good old days, perhaps rethink that assumption.

Stephen E Arnold, June 8, 2021

Technical Debt: Paying Down Despite Disaster Waiting in the Wings

June 7, 2021

Some interesting ideas appear in “10 Ways to Prevent and Manage Technical Debt—Tips from Developers.” The listicle is not tied to a specific application or service. Let me apply a few of the article’s points to the challenges that vendors of information retrieval software have to meet.

I am not tracking innovations in search the way I did when I wrote the first three editions of the Enterprise Search Report many years ago. Despite the hooting of marketers and “innovators” who don’t know much about the 50-plus-year history of finding, search technology has not made much progress. In fact, if I were still giving talks at search-related events, I would present data showing that “findability” has regressed. Now to the matter at hand.

I am not sure most people understand what technical debt is in general, and even fewer apply the concept to search and retrieval. To keep it simple, technical debt is like skipping the repairs and routine service on your auto. You do just enough to keep the Nash Rambler on the road. Then it dies. You find that parts are tough to find and expensive to get. If you want to do the job “right,” you will find that specialists are on hand to make that hunk of junk gleam. Get out your checkbook and write small. You will be filling in some big numbers. Search is that Nash Rambler, but you also have a couple of Metropolitans and a junker of a 1951 Nash Ambassador sitting in your data center. You can get stuff from A to B, but each trip becomes more agonizing. Then you have to spend.

Technical debt is the amount you have to spend to get back up and running plus the lost revenue or estimated opportunity cost. The spending covers the hardware, software, knick knacks, and humans who sort of know what to do.
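The arithmetic is simple even when the bill is not. Here is a minimal Python sketch of the definition above. The `RecoveryEstimate` class and its field names are hypothetical, and the figures in the usage lines are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class RecoveryEstimate:
    """Hypothetical line items for getting a neglected system running again."""
    hardware: float
    software: float
    knick_knacks: float  # the odds and ends nobody budgets for
    people: float        # the humans who sort of know what to do
    lost_revenue: float  # lost revenue or the estimated opportunity cost

    def remediation(self) -> float:
        """What you have to spend to get back up and running."""
        return self.hardware + self.software + self.knick_knacks + self.people

    def technical_debt(self) -> float:
        """Remediation spending plus lost revenue or opportunity cost."""
        return self.remediation() + self.lost_revenue


# Invented figures, for illustration only.
estimate = RecoveryEstimate(hardware=50_000, software=120_000,
                            knick_knacks=5_000, people=200_000,
                            lost_revenue=300_000)
print(estimate.technical_debt())  # 675000
```

The design choice is deliberate: the lost revenue line sits outside the remediation total because it keeps growing every day the Rambler stays in the shop.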

What about search? Let’s take three of the items identified in the article and consider them in terms of what is often incorrectly described as “enterprise search.” My work over the years has documented the fact that there is no enterprise search. Shocking? Think about it. Employees cannot find the video of that Zoom meeting or the transcript automagically prepared this morning. And that sales presentation with the new pricing? Oh, right, that’s on the VP’s laptop and it won’t connect to the cloud archiving system because the wizard executive has trouble opening a hotel room with the keycard. Like I said, “Wizard.”

Item number 2 in the article is “Embed technical debt management into the company culture.” Ho ho ho. The present state of play is to get something up and running, dump on features, and generate revenue, some revenue, any revenue. In many organizations, the pressure to move the needle trumps any weird ideas to go back and fix the plumbing. How often is the core of Google’s search and retrieval reworked? Yeah, not often and every year the job becomes less and less desirable. The legions of Xooglers who worked on the system are unlikely to return to the digital Disneyland to do this work even for dump trucks filled with Ethereum.

Item number 5 is “Make technical debt a priority in open source culture.” Okay, let’s think about open source search. Have you thought about Sphinx recently? What about Xapian? The big dogs are under intense pressure from the real champions of open source like Amazon and everyone’s favorite security company Microsoft. The individuals who do the bulk of the work struggle to make the darned thing work on the latest and greatest platforms and operating systems. The more outfits like Amazon pressure Elastic, the less likely the humans who work on Lucene and Solr will be able to fend off complete commercialization. Hey, there’s always consulting work or a job at IBM, another cheerleader for open source. So priority? Right.

Now item number 6 in the article. It is “Choose a flexible architecture.” What does this mean for search and retrieval? Most search and search centric applications like policeware and intelware are mashups of open source, legacy code left over from another project, and intern-infused scripts. The “architecture” is whatever was easiest and most financially acceptable. Once those initial decisions are made or simply allowed to happen because someone knew someone, the systems are unlikely to change. Fixing up something that sort of works is similar to the stars of VanWives repairing their ageing vehicle while driving in the rain. Ain’t gonna happen.

Net net: Technical debt for most organizations is what will bring down the company. Innovation slows to a crawl and becomes a series of add ons, wrappers, and strapping tape patches. Then boom. A competitor has blown the doors off the incumbent, customers just cancel contracts for enterprise search systems, or the once valued function becomes a feature for a more important application. Technical debt, like a college grad’s student loan, is a stress inducer. Stress can shorten one’s life and kill. The enterprise search market is littered with the corpses of outfits terminated by technical debt denial syndrome.

Stephen E Arnold, June 7, 2021

An Amusing Analysis of Palantir Technologies

June 7, 2021

I find analyses of intelware/policeware companies fascinating. “Palantir DD If You Want to Understand Company and Its History Better” is based on research conducted since November 2020. The write up asserts that Palantir is three “companies”: The government software (what I call intelware/policeware), the part of the business focused on adding sales professionals, and “their actual like full AI for weaponization and war and defense for the government.”

I must admit my research team has characterized Palantir Technologies in a different way. Palantir has been in business for more than a decade. The company has become publicly traded, and the stock as of June 2, 2021, is trading at about $25 per share. The challenges for companies like Palantir are the same old hurdles that other search and content processing firms have to get over without tripping and hitting the cinders with their snoot; namely:

- Generating sustainable and growing revenue streams from a somewhat narrow slice of commercial, financial, and government prospects. Newcomers like DataWalk offer comparable if not better technology at what may be more acceptable price points.

- Balancing the cost of “renting” cloud computer processing centers against the risk of those cloud vendors raising prices and possibly offering the same or substantially the same services at a lower price. Palantir relies on Amazon AWS, and that creates an interesting risk for Palantir’s senior management. To ameliorate the risk of AWS raising prices, buying a Palantir competitor, or just rolling out an Amazon version of the Palantir search and content processing system, Palantir signed a deal with IBM. This deal is for a different slice of the market, but it remains to be seen if this play will pay off in a substantial way.

- Figuring out how to expand the firm’s services business without getting into the business of creating customized versions of the Analyst’s Notebook type of product that Palantir offers. Furthermore, exceptional revenues can be generated from consulting, and to keep clients happy, Palantir may find that it has to resell competitors’ products. In short, consulting looks super from one point of view. From another it can derail the original Palantir business model. Money talks, particularly when the company has to pay back its investors, invest in new technology, and spend money to generate leads and close deals.

- The clients have to be happy. Anecdotal evidence exists that some Palantir customers are not in thrill city. I am not going to identify the firms which have stubbed their toes on Palantir’s approach and the system’s value. Some online searching yields helpful insights.

- The company has a history of walking a fine line between appropriate and inappropriate behavior. The litigation (now sealed) between Palantir and the original i2 Ltd., the company which developed much of the current approach to intelware/policeware, is usually unknown or just ignored. That’s not helpful. Combining the i2 matter with Palantir’s method of providing its software to analysts in some battle zones reveals helpful nuances about the firm’s methods.

To sum up, the analysis — at least to me — was a hoot.

Stephen E Arnold, June 7, 2021

Don Quixote Lives: Another Assault on Data Silos

June 3, 2021

Keep in mind that in some organizations data silos are necessary: Poaching colleagues (hello, big pharma), government security requirements (yep, the top Beltway bandits too), and common sense (lawyers heading to trial with a judge who has a certain reputation). Data silos are like everywhere. There were a couple of firms which billed themselves as “silo breakers.” How is that working out? The answer to the question resides in an analyst’s “data silo.” There you go.

Security is the biggest reason much-maligned data silos, also known as fragmented data, persist. Google now hopes to change that, we learn from “Google Cloud Launches New Services for a Unified Data Platform” at IT Brief. The company asserts its new solutions mean organizations can now forget about data silos and securely analyze their data in the cloud. We have yet to see detailed evidence for that claim, however. We will continue to keep our sensitive data separated, thank you very much.

Writer Ryan Morris-Reade describes the three new services upon which Google is pinning its cloudy unification hopes:

- Datastream, a new serverless Change Data Capture and replication service. Datastream enables customers to replicate data streams in real-time, from Oracle and MySQL databases to Google Cloud services such as BigQuery, Cloud SQL, Google Cloud Storage, and Cloud Spanner. This solution allows businesses to power real-time analytics, database replication, and event-driven architectures.

- Analytics Hub, a new capability that allows companies to create, curate, and manage analytics exchanges securely and in real-time. With Analytics Hub, customers can share data and insights, including dynamic dashboards and machine learning models securely inside and outside their organization.

- Dataplex, an intelligent data fabric that provides an integrated analytics experience, bringing the best of Google Cloud and open-source together, to enable users to rapidly curate, secure, integrate, and analyze their data at scale. Automated data quality allows data scientists and analysts to address data consistency across the tools of their choice, to unify and manage data without data movement or duplication. With built-in data intelligence using Google’s best-in-class AI and Machine Learning capabilities, organizations spend less time with infrastructure complexities and more time using data to deliver business outcomes.
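Change Data Capture is not new wine: read the source database’s change log and replay inserts, updates, and deletes, in order, against a copy. Here is a minimal Python sketch of the replay side. The `ChangeEvent` and `Replica` names are hypothetical, the in-memory dict stands in for a target such as BigQuery, and nothing here reflects Datastream’s actual API.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass
class ChangeEvent:
    lsn: int                              # position in the source's change log
    op: str                               # "insert", "update", or "delete"
    key: Any                              # primary key of the changed row
    row: Optional[Dict[str, Any]] = None  # new row image; None for deletes


class Replica:
    """Toy target that applies change events in log order."""

    def __init__(self) -> None:
        self.rows: Dict[Any, Dict[str, Any]] = {}
        self.last_lsn = 0

    def apply(self, events: Iterable[ChangeEvent]) -> None:
        for ev in sorted(events, key=lambda e: e.lsn):
            if ev.lsn <= self.last_lsn:
                continue  # already applied, so replays are harmless
            if ev.op in ("insert", "update"):
                self.rows[ev.key] = dict(ev.row or {})
            elif ev.op == "delete":
                self.rows.pop(ev.key, None)
            else:
                raise ValueError(f"unknown op: {ev.op}")
            self.last_lsn = ev.lsn


replica = Replica()
batch = [
    ChangeEvent(1, "insert", 7, {"name": "Acme", "tier": "gold"}),
    ChangeEvent(2, "update", 7, {"name": "Acme", "tier": "silver"}),
    ChangeEvent(3, "insert", 8, {"name": "Globex"}),
    ChangeEvent(4, "delete", 8),
]
replica.apply(batch)
replica.apply(batch)  # replaying the same batch changes nothing
print(replica.rows)  # {7: {'name': 'Acme', 'tier': 'silver'}}
```

Tracking the last applied log position is what makes a replay safe. Note what the sketch does not do: it copies data to one more place. The silo is replicated, not dissolved.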

We learn consulting firm Deloitte is helping Google implement these solutions. That company’s global chief commercial officer emphasizes the tools provide “enhanced data experiences” for companies with siloed data by simplifying implementation and management. We are also told that Equifax and Deutsche Bank trust Google Cloud with their data. I guess that is supposed to mean we should, too.

But Google is quite the fan of data silos. Remember “universal search”? Google has separate indexes for news, scholarly information, and other content types. Universal implies breaking down “data silos.” But it is easier to talk about solving the data silo problem than to deliver.

And what about Deloitte? This firm was fined about $20 million US because it had data silos which partitioned some partners from the work of the professionals working for Autonomy.

Yep, data silos. Persistent and embarrassing when someone thinks of “universal search” and Deloitte’s internal oversight methods.

Cynthia Murrell, June 3, 2021

Data Federation: Sure, Works Perfectly

June 1, 2021

How easy is it to snag a dozen sets of data, normalize them, parse them, extract useful index terms, and assign classifications and other useful hooks? “Automated Data Wrangling” provides an answer sharply different from what marketers assert.

A former space explorer, now marooned on a beautiful dying world, explains that the marketing assurances of dozens upon dozens of companies are baloney. Here’s a passage I noted:

Most public data is a mess. The knowledge required to clean it up exists. Cloud based computational infrastructure is pretty easily available and cost effective. But currently there seems to be a gap in the open source tooling. We can keep hacking away at it with custom rule-based processes informed by our modest domain expertise, and we’ll make progress, but as the leading researchers in the field point out, this doesn’t scale very well. If these kinds of powerful automated data wrangling tools are only really available for commercial purposes, I’m afraid that the current gap in data accessibility will not only persist, but grow over time. More commercial data producers and consumers will learn how to make use of them, and dedicate financial resources to doing so, knowing that they’ll be reap financial rewards. While folks working in the public interest trying to create universal public goods with public data and open source software will be left behind struggling with messy data forever.
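To make the “custom rule-based processes” in that passage concrete, here is a minimal Python sketch of the sort of hand-written rules one ends up with. The column names, missing-value markers, and function names are hypothetical; real public data sets need many more rules, which is the scaling problem the quoted author describes.

```python
import re
from typing import Dict, List, Optional, Set, Union

# Hypothetical markers that different publishers use for "no value".
MISSING = {"", "na", "n/a", "null", "-", "--"}

Cell = Union[str, float, None]


def normalize_header(name: str) -> str:
    """'Facility Name (Source)' -> 'facility_name_source'."""
    return re.sub(r"[^a-z0-9]+", "_", name.strip().lower()).strip("_")


def parse_number(value: str) -> Optional[float]:
    """Handle thousands separators, dollar signs, accounting negatives, blanks.

    Anything the rules do not cover raises ValueError: someone has to write
    another rule, which is why this approach does not scale.
    """
    v = value.strip()
    if v.lower() in MISSING:
        return None
    negative = v.startswith("(") and v.endswith(")")
    v = v.strip("()").replace(",", "").replace("$", "")
    number = float(v)
    return -number if negative else number


def normalize_rows(header: List[str], rows: List[List[str]],
                   numeric: Set[str]) -> List[Dict[str, Cell]]:
    """Apply the header and cell rules to every row of a raw table."""
    cols = [normalize_header(h) for h in header]
    out = []
    for raw in rows:
        record: Dict[str, Cell] = {}
        for col, cell in zip(cols, raw):
            if col in numeric:
                record[col] = parse_number(cell)
            else:
                record[col] = cell.strip() or None
        out.append(record)
    return out
```

Each function encodes one person’s guess about one publisher’s habits. The next data set arrives with “TBD” in a numeric column, and the rule book grows again.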

Marketing is just easier than telling the truth about what’s needed in order to generate information which can be processed by a downstream procedure.

Stephen E Arnold, June xx, 2021