AI: Are You Sure You Are Secure?

December 19, 2023

This essay is the work of a dumb dinobaby. No smart software required.

North Carolina State University published an interesting article. Are the data in the write up reproducible? I don’t know. I wanted to highlight the report in the hope that the additional information will be helpful to cyber security professionals. The article is “AI Networks Are More Vulnerable to Malicious Attacks Than Previously Thought.”

I noted this statement in the article:

Artificial intelligence tools hold promise for applications ranging from autonomous vehicles to the interpretation of medical images. However, a new study finds these AI tools are more vulnerable than previously thought to targeted attacks that effectively force AI systems to make bad decisions.

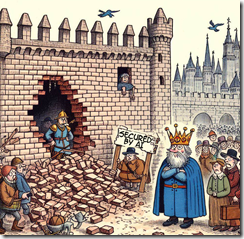

A corporate decision maker looks at a point of vulnerability. One of his associates moves a sign which explains that smart software protects the castle and its crown jewels. Thanks, MSFT Copilot. Numerous tries, but I finally got an image close enough for horseshoes.

What is the specific point of alleged weakness?

At issue are so-called “adversarial attacks,” in which someone manipulates the data being fed into an AI system in order to confuse it.

The example presented in the article is that a bad actor manipulates data provided to the smart software; for example, causing an image or content to be deleted or ignored. Another use case is that a bad actor could cause an X-ray machine to present altered information to the analyst.
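To make the idea concrete, here is a minimal sketch of an adversarial nudge against a toy classifier. This is not QuadAttacK and not anything from the article. The weights, the labels, and the function names are all hypothetical, made up for illustration. The point is only that a small, deliberate change to every input value can flip the answer.

```python
# Toy illustration only: a hand-made linear "classifier", not a real neural
# network and not QuadAttacK. All numbers and labels are hypothetical.

def score(weights, bias, x):
    """Weighted sum of the input features plus a bias term."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    """A positive score means 'stop sign'; anything else means 'speed limit'."""
    return "stop sign" if score(weights, bias, x) > 0 else "speed limit"

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def perturb(weights, x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is just the weight vector, so stepping against sign(weight) is the
    fastest way to push the score down under a per-feature change limit.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.9, -0.4, 0.7, 0.2]
bias = -0.5
clean = [0.6, 0.3, 0.5, 0.4]

adversarial = perturb(weights, clean, epsilon=0.2)

print(predict(weights, bias, clean))        # label for the untouched input
print(predict(weights, bias, adversarial))  # label after the small nudge
```

No single feature moves by more than 0.2, yet the toy model changes its mind. Real attacks on deep networks are far more involved, but the principle is the same.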

The write up includes a description of software called QuadAttacK. The idea is to test a network for “clean” data. Four different networks were tested. The report includes a statement from Tianfu Wu, co-author of a paper on the work and an associate professor of electrical and computer engineering at North Carolina State University. He allegedly said:

“We were surprised to find that all four of these networks were very vulnerable to adversarial attacks,” Wu says. “We were particularly surprised at the extent to which we could fine-tune the attacks to make the networks see what we wanted them to see.”

You can download the vulnerability testing tool at this link.

Here are the observations my team and I generated at lunch today (Friday, December 14, 2023):

- Poisoned data is one of the weak spots in some smart software.

- The free tool will give bad actors with access to certain smart systems a way to identify points of vulnerability.

- AI, at this time, may be better at marketing than protecting its reasoning systems.

Stephen E Arnold, December 19, 2023