Spotting Machine-Generated Content: A Work in Progress

July 31, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Some professionals want to figure out if a chunk of content is real, fabricated, or fake. In my experience, making that determination is difficult. Those who want to experiment with identifying weaponized, generated, or AI-assisted content may want to review the tools described in “AI Tools to Detect Disinformation – A Selection for Reporters and Fact-Checkers.” The article groups tools into categories. For example, there are utilities for text, images, and video, plus five bonus tools. There is also a suggestion to address the bot problem. The write up is intended for “journalists,” a category which I find increasingly difficult to define.

The big question is, of course, do these systems work? I tried to test the tool from FactiSearch and the link 404ed. The service is available, but a bit of clicking is involved. I tried the Exorde tool and was greeted with a prompt to register for a free trial.

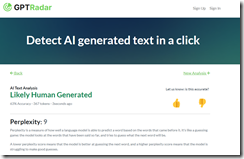

I plugged some machine-generated text produced with the You.com “Genius” LLM system into GPT Radar (not in the cited article’s list by the way). That system happily reported that the sample copy was written by a human.

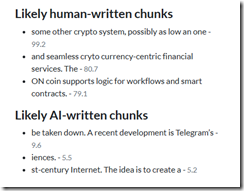

The test content was not. I plugged in some text I wrote, and the system reported:

Three items in my own writing were identified as text written by a large language model. I don’t know whether to be flattered or horrified.

The bottom line is that systems designed to identify machine-generated content are a work in progress. My view is that as soon as a bright young spark rolls out a new detection system, the LLM output becomes better. So a cat-and-mouse game ensues.

Stephen E Arnold, July 31, 2024