The Very Expensive AI Horse Race

December 4, 2024

This write up is from a real and still-alive dinobaby. If there is art, smart software has been involved. Dinobabies have many skills, but Gen Z art is not one of them.

One of the academic nemeses of smart software is a professional named Gary Marcus. Among his many intellectual accomplishments is a cameo appearance on a former Jack Benny child star’s podcast. Mr. Marcus contributes his views of smart software to the person who, for a number of years, has been a voice actor on the Simpsons cartoon.

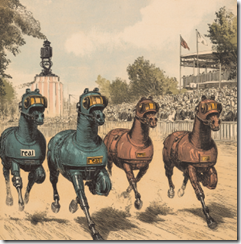

The big four robot stallions are racing to a finish line. Is the finish line moving away from the equines faster than the steeds can run? Thanks, MidJourney. Good enough.

I want to pay attention to Mr. Marcus’ Substack post “A New AI Scaling Law Shell Game?” The main idea is that the scaling law has entered popular computer jargon. Once the lingo of Galileo, scaling law now means that AI, like CPUs, is part of the belief that technology just gets better as it gets bigger.

In this essay, Mr. Marcus asserts that getting bigger may not work unless humanoids (presumably assisted by AI) innovate other enabling processes. Mr. Marcus is aware of the cost of infrastructure, the cost of electricity, and the probable costs of exhausting content.

From my point of view, a bit more empirical “evidence” would be useful. (I am aware of academic research fraud.) Also, Mr. Marcus references me when he says keep your hands on your wallet. I am not sure that a fix is possible. The analogy is the old chestnut about changing a Sopwith Camel’s propeller when the aircraft is in a dogfight and the synchronized machine gun is firing through the propeller.

I want to highlight one passage in Mr. Marcus’ essay and offer a handful of comments. Here’s the passage I noted:

Over the last few weeks, much of the field has been quietly acknowledging that recent (not yet public) large-scale models aren’t as powerful as the putative laws were predicting. The new version is that there is not one scaling law, but three: scaling with how long you train a model (which isn’t really holding anymore), scaling with how long you post-train a model, and scaling with how long you let a given model wrestle with a given problem (or what Satya Nadella called scaling with “inference time compute”).

I think this is a paragraph I will add to my quotes file. The reasons are:

First, investors, would-be entrepreneurs, and giant outfits really want a next big thing. Microsoft fired the opening shot in the smart software war in early 2023. Mr. Nadella suggested that smart software would be the next big thing for Microsoft. The company has invested in making good on this statement. Now Microsoft 365 is infused with smart software and Azure is burbling with digital glee with its “we’re first” status. However, a number of people have asked, “Where’s the financial payoff?” The answer is standard Silicon Valley catechism: “The payoff is going to be huge. Invest now.” If prayers could power hope, AI is going to be hyperbolic just like the marketing collateral for AI promises. But it is almost 2025, and those billions have not generated more billions and profit for the Big Dogs of AI. Just sayin’.

Second, the idea that the scaling law is really multiple scaling laws is interesting. But if one scaling law fails to deliver, what happens to the other scaling laws? The interdependencies of the processes for the scaling laws might evoke new, hitherto unidentified scaling laws. Will each scaling law require massive investments to deliver? Is it feasible to pay off the investments in these processes with the original concept of the scaling law as applied to AI? I wonder if a reverse Ponzi scheme is emerging. The more pumped in, the smaller the likelihood of success. Is AI a demonstration of convergence, like a sequence of fractions where the numerator is 1 and the denominator is an increasing sequence of integers? Just askin’.

Third, the performance or knowledge payoff I have experienced with my tests of OpenAI and the software available to me on You.com makes clear that the systems cannot handle what I consider routine questions. A recent example was my request to receive a list of the exhibitors at the November 1 Gateway Conference held in Dubai for crypto fans of Telegram’s The Open Network Foundation and TON Social. The systems were unable to deliver the lists. This is just one notable failure which a humanoid on my research team was able to rectify in an expeditious manner. (Did you know the Ku Group was on my researcher’s list?) Just reportin’.

Net net: Will AI repay the billions sunk into the data centers, the legal fees (many still looming), the staff, and the marketing? If you ask an accelerationist, the answer is, “Absolutely.” If you ask a dinobaby, you may hear, “Maybe, but some fundamental innovations are going to be needed.” If you ask an AI-will-kill-us-all type like the Xoogler Mo Gawdat, you will hear, “Doom looms.” Just dinobabyin’.

Stephen E Arnold, December 4, 2024