Which Browsers Devour the Most User Data?

May 19, 2025

Those concerned about data privacy may want to consider some advice from TechRadar: “These Are the Worst Web Browsers for Sucking Up All Your Data, So You May Want to Stop Using Them.” Citing research from Surfshark, writer Benedict Collins reports some of the most-used browsers are also the most ravenous. He tells us:

“Analyzing download statistics from AppMagic, Surfshark found Google’s Chrome and Apple’s Safari account for 90% of the world’s mobile browser downloads. However, Chrome sucks up 20 different types of data while being used, including contact info, location, browsing history, and user content, and is the only browser to collect payment methods, card numbers, or bank account details. … Microsoft’s Bing took second place for data collection, hoovering up 12 types of data, closely followed by Pi Browser in third place with nine data types, with Safari and Firefox collecting eight types and sharing fourth place.”

Et tu, Firefox? Collins notes the study found Brave and Tor to be the least data-hungry. The former collects identifiers and usage data. Tor, famously, collects no data at all. Both are free, though Brave sells add-ons and Tor accepts donations. The write-up continues:

“When it comes to the types of data collected, Pi Browser, Edge, and Bing all collected the most tracking data, usually sold to third parties to be used for targeted advertising. Pi Browser collects browsing history, search history, device ID, product interaction, and advertisement data, while Edge collects customer support request data, and Bing collects user ID data.”

For anyone unfamiliar, Pi Browser is designed for use with decentralized (blockchain) applications. We learn that, on mobile devices in the US, Chrome captures 43% of browser usage, while Safari captures 50%. Collins reminds readers there are ways to safeguard one’s data, though we would add none are total or foolproof. He also points us to TechRadar’s guide to the best VPNs for another layer of security.

Cynthia Murrell, May 19, 2025

Content Injection Can Have Unanticipated Consequences

February 24, 2025

The work of a real, live dinobaby. Sorry, no smart software involved. Whuff, whuff. That’s the sound of my swishing dino tail. Whuff.

Years ago I gave a lecture to a group of Swedish government specialists affiliated with the Forestry Unit. My topic was the procedure for causing certain common algorithms used for text processing to increase the noise in their procedures. The idea was to input certain types of text and numeric data in a specific way. (No, I will not disclose the methods in this free blog post, but if you have a certain profile, perhaps something can be arranged by writing benkent2020 at yahoo dot com. If not, well, that’s life.)

We focused on a handful of methods widely used in what now is called “artificial intelligence.” Keep in mind that most of the procedures are not new. There are some flips and fancy dancing introduced by individual teams, but the math is not invented by TikTok teens.

In my lecture, the forestry professionals wondered if these methods could be used to achieve specific objectives or “ends”. The answer was and remains, “Yes.” The idea is simple. Once methods are put in place, the algorithms chug along; some are brute force and others are probabilistic. Either way, content and data injections can be shaped, just like the gizmos required to make kinetic events occur.

The point of this forestry excursion is to make clear that a group of people, operating in a loosely coordinated manner, can create data or content. Those data or content can be weaponized. When ingested by or injected into a content processing flow, the outputs of the larger system can be fiddled: More emphasis here, a little less accuracy there, and an erosion of whatever “accuracy” calculations are used to keep the system within the engineers’ and designers’ parameters. A plebeian way to describe the goal: Disinformation or accuracy erosion.

I read “Meet the Journalists Training AI Models for Meta and OpenAI.” The write up explains that journalists without jobs or in search of extra income are creating “content” for smart software companies. The idea is that if one just does the Silicon Valley thing and sucks down any and all content, lawyers might come calling. Therefore, paying for “real” information is a better path.

Please, read the original article to get a sense of who is doing the writing, what baggage or mind set these people might bring to their work.

If the content is distorted — either intentionally or unintentionally — the impact of these content objects on the larger smart software system might have some interesting consequences. I just wanted to point out that weaponized information can have an impact. Those running smart software and buying content assuming it is just fine, might find some interesting consequences in the outputs.

Stephen E Arnold, February 24, 2025

Can the UN Control the Intelligence Units of Countries? Yeah, Sure. No Problem

January 16, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

I assume that the information in “Governments Call for Spyware Regulations in UN Security Council Meeting” is spot on or very close to the bull’s eye. The write up reports:

On Tuesday [January 14, 2025] , the United Nations Security Council held a meeting to discuss the dangers of commercial spyware, which marks the first time this type of software — also known as government or mercenary spyware — has been discussed at the Security Council. The goal of the meeting, according to the U.S. Mission to the UN, was to “address the implications of the proliferation and misuse of commercial spyware for the maintenance of international peace and security.” The United States and 15 other countries called for the meeting.

Not surprisingly, different countries had different points of view. These ranged from “we have local regulations” to giant nation state assertions that bad actions by governments are more important to “it is the USA’s fault.”

The write up from the ubiquitous intelligence commentator did not include any history, context, or practical commentary about the diffusion of awareness of intelware or what the article, the UN, and my 90 year old neighbor call spyware.

The public awareness of intelware coincided with hacks of some highly regarded technology. I am not going to name this product, but if one pokes about one might find documentation, code snippets, and even some conference material. Ah, ha. The conference material was obviously designed for marketing. Yes, that is correct. Conferences are routinely held in which the participants are vetted and certain measures put in place to prevent leakage of these materials. However, once someone passes out a brochure, the information is on the loose and can be snagged by a curious reporter who wants to do good. Also, some conference organizers themselves make disastrous decisions about what to post on their conference web site; for example, the presentations. I give some presentations at these closed to the public events, and I have found my slide deck on the organizer’s Web site. I won’t mention this outfit, but I don’t participate in any events associated with this outfit. Also, some conference attendees dress up as sheep and register with possibly bogus information. These folks happily snap pictures of exhibits of hardware not available to the public, record audio, and at one event held in the Hague sat in front of me and did a recording of my lecture about some odd ball research project in which I was involved. I reported the incident to the people at the conference desk. I learned that the individual left the conference and that his office telephone number was bogus. That’s enough. Leaks do occur. People are careless. Others just clever and duplicitous.

Thanks, You.com. You are barely able to manage a “good enough” these days. Money problems? Yeah, too bad. My heart bleeds for you.

However, the big reveal of intelware and its cousin policeware coincided with the push by one nation state. I won’t mention the country, but here’s how I perceived what kicked into high gear in 2005 or so. A number of start ups offered data analytics. The basic pitch was that these outfits had developed a suite of procedures to make sense of data. If the client had data, these companies could import the information and display important points identified by algorithms about the numbers, entities, and times. Marketers were interested in these systems because, like the sales pitches for AI today, the Madison Avenue crowd could dispense with the humans doing the tedious hand work required to make sense of pharmaceutical information. Buy, recycle, or create a data set. Then import it into these systems. Business intelligence spills forth. Leaders in this field did not disclose their “roots” in the intelligence community of the nation encouraging its entrepreneurs to commercialize what they learned when fulfilling their government military service.

Where did the funding come from? The nation state referenced provided some seed funds. However, in order to keep these systems in line with customer requirements for analyzing the sales of shampoo and blockbuster movies, venture firms with personnel familiar with the nation state’s government software innovations were invited to participate in funding some of these outfits. One of them is a very large publicly traded company. This firm has a commercial sales side and a government sales side. Some readers of this post will have the stock in their mutual fund stock baskets. Once a couple of these outfits hit the financial jackpot for the venture firms, the race was on.

Companies once focused squarely on serving classified entities in a government in a number of countries wanted to sanitize the software and sell to a much larger, more diverse corporate market. Today, if one wants to kick the tires of commercially available once-classified systems and methods, one can:

- Attend conferences about data brokering

- Travel to Barcelona or Singapore and contact interesting start ups and small businesses in the marketing data analysis business

- Sign up for free open source intelligence online events and note the names and organizations speaking. (Some of these events allow a registered attendee to conduct a real time chat, off line for others, with a speaker who represents an interesting company.)

There are more techniques as well to identify outfits which are in the business of providing or developing intelware and policeware tools for anyone with money. How do you find these folks? That’s easy. Dark Web searches, Telegram Group surfing, and running an advertisement for a job requiring a person with specialized experience in a region like southeast Asia.

Now let me return to the topic of the cited article: The UN’s efforts to get governments to create rules, controls, or policies for intelware and policeware. Several observations:

- The effort is effectively decades too late

- The trajectory of high powered technology is outward from its original intended purpose

- Greed because the software works and can generate useful results: Money or genuinely valuable information.

Agree or disagree with me? That’s okay. I did a few small jobs for a couple of these outfits and have just enough insight to point out that the article “Governments Call for Spyware Regulations in UN Security Council Meeting” presents a somewhat thin report and lacks color.

Stephen E Arnold, January 18, 2025

Will AI Data Scientists Become Street People?

November 4, 2024

Over at HackerNoon, all-around IT guy Dominic Ligot insists data scientists must get on board with AI or be left behind. In “AI Denialism,” he compares data analysts who insist AI can never replace them with 19th century painters who scoffed at photography as an art form. Many of them who specialized in realistic portraits soon found themselves out of work, despite their objections.

Like those painters, Ligot believes, some data scientists are in denial about how well this newfangled technology can do what they do. They hang on to a limited definition of creativity at their peril. In fact, he insists:

“The truth is, AI’s ability to model complex relationships, surface patterns, and even simulate multiple solutions to a problem means it’s already doing much of what data analysts claim as their domain. The fine-grained feature engineering, the subtle interpretations—AI is not just nibbling around the edges; it’s slowly encroaching into the core of what we’ve traditionally defined as ‘analytical creativity.’”

But we are told there is hope for those who are willing to adapt:

“I’m not saying that data scientists or analysts will be replaced overnight. But to assume that AI will never touch their domain simply because it doesn’t fit into an outdated view of what creativity means is shortsighted. This is a transformative era, one that calls for a redefinition of roles, responsibilities, and skill sets. Data analysts and scientists who refuse to keep an open mind risk finding themselves irrelevant in a world that is rapidly shifting beneath their feet. So, let’s not make the same mistake as those painters of the past. Denialism is a luxury we cannot afford.”

Is Ligot right? And, if so, what skill-set changes can preserve data scientists’ careers? That relevant question remains unanswered in this post. (There are good deals on big plastic mugs at Dollar Tree.)

Cynthia Murrell, November 04, 2024

Smart Software Project Road Blocks: An Up-to-the-Minute Report

October 1, 2024

![green-dino_thumb_thumb_thumb_thumb_t[2]_thumb_thumb green-dino_thumb_thumb_thumb_thumb_t[2]_thumb_thumb](https://arnoldit.com/wordpress/wp-content/uploads/2024/09/green-dino_thumb_thumb_thumb_thumb_t2_thumb_thumb_thumb.gif) This essay is the work of a dumb dinobaby. No smart software required.

I worked through a 22-page report by SQREAM, a next-gen data services outfit with GPUs. (You can learn more about the company at this buzzword dense link.) The title of the report is:

The report is a marketing document, but it contains some thought provoking content. The “report” was “administered online by Global Surveyz [sic] Research, an independent global research firm.” The explanation of the methodology was brief, but I don’t want to drag anyone through the basics of Statistics 101. As I recall, few cared and were often good customers for my class notes.

Here are three highlights:

- Smart software and services cause sticker shock.

- Cloud spending by the survey sample is going up.

- And the killer statement: 98 percent of the machine learning projects fail.

Let’s take a closer look at the astounding assertion about the 98 percent failure rate.

The stage is set in the section “Top Challenges Pertaining to Machine Learning / Data Analytics.” The report says:

It is therefore no surprise that companies consider the high costs involved in ML experimentation to be the primary disadvantage of ML/data analytics today (41%), followed by the unsatisfactory speed of this process (32%), too much time required by teams (14%) and poor data quality (13%).

The conclusion the authors of the report draw is that companies should hire SQREAM. That’s okay, no surprise because SQREAM ginned up the study and hired a firm to create an objective report, of course.

So money is the Number One issue.

Why do machine learning projects fail? We know the answer: Resources or money. The write up presents as fact:

The top contributing factor to ML project failures in 2023 was insufficient budget (29%), which is consistent with previous findings – including the fact that “budget” is the top challenge in handling and analyzing data at scale, that more than two-thirds of companies experience “bill shock” around their data analytics processes at least quarterly if not more frequently, that the total cost of analytics is the aspect companies are most dissatisfied with when it comes to their data stack (Figure 4), and that companies consider the high costs involved in ML experimentation to be the primary disadvantage of ML/data analytics today.

I appreciated the inclusion of the costs of data “transformation.” Glib smart software wizards push aside the hassle of normalizing data so the “real” work can get done. Unfortunately, the costs of fixing up source data are often another cause of “sticker shock.” The report says:

Data is typically inaccessible and not ‘workable’ unless it goes through a certain level of transformation. In fact, since different departments within an organization have different needs, it is not uncommon for the same data to be prepared in various ways. Data preparation pipelines are therefore the foundation of data analytics and ML….

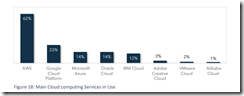

In the final pages of the report a number of graphs appear. Here’s one that stopped me in my tracks:

The sample contained 62 percent users of Amazon Web Services. Number 2 was users of Google Cloud at 23 percent. And in third place, quite surprisingly, was Microsoft Azure at 14 percent, tied with Oracle. A question which occurred to me is: “Perhaps the focus on sticker shock is a reflection of Amazon’s pricing, not just people and overhead functions?”

I will have to wait until more data becomes available to me to determine if the AWS skew and the report findings are normal or outliers.

Stephen E Arnold, October 1, 2024

Agents Are Tracking: Single Web Site Version

August 6, 2024

This essay is the work of a dumb humanoid. No smart software required.

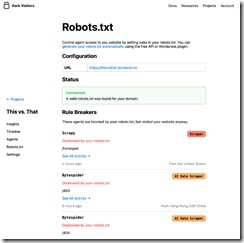

How many software robots are crawling (copying and indexing) a Web site you control now? This question can be answered by a cloud service available from DarkVisitors.com.

The Web site includes a useful list of these software robots (what many people call “agents” which sounds better, right?). You can find the list (about 800 bots as of July 30, 2024) on the DarkVisitors’ Web site at this link. There is a search function so you can look for a bot by name; for example, Omgili (the Israeli data broker Webz.io). Please note that the list contains categories of agents; for example, “AI Data Scrapers,” “AI Search Crawlers,” and “Developer Helpers,” among others.

The Web site also includes links to a service called “Set Up Your Robots.txt.” The idea is that one can link a Web site’s robots.txt file to DarkVisitors. Then DarkVisitors will update your Web site automatically to block crawlers, bots, and agents. The specific steps to make this service work are included on the DarkVisitors.com Web site.
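For readers who have not looked inside a robots.txt file, here is a minimal sketch of how blocking rules work. It uses Python's standard `urllib.robotparser` module and does not use or reproduce the DarkVisitors service or its API. The robots.txt text, the agent names chosen, the `blocked_agents` helper, and the example.com URL are all assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, made up for this sketch.
# Two named crawlers are blocked; everyone else is allowed.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: omgili
Disallow: /

User-agent: *
Allow: /
"""


def blocked_agents(robots_txt, agents, url="https://example.com/"):
    """Return the agents that the given robots.txt text forbids from fetching url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    print(blocked_agents(ROBOTS_TXT, ["GPTBot", "omgili", "Googlebot"]))
```

Keep in mind that robots.txt is a request, not an enforcement mechanism. A crawler that ignores the file is not stopped by it.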

The basic service is free. However, if you want analytics and a couple of additional features, the cost as of July 30, 2024, is $10 per month.

An API is also available. Instructions for implementing the service are available as well. Plus, a WordPress plug in is available. The cloud service is provided by Bit Flip LLC.

Stephen E Arnold, August 6, 2024

If Math Is Running Out of Problems, Will AI Help Out the Humans?

July 26, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read “Math Is Running Out of Problems.” The write up appeared in Medium and when I clicked I was not asked to join, pay, or turn a cartwheel. (Does Medium think 80-year-old dinobabies can turn cartwheels? The answer is, “Hey, doofus, if you want to read Medium articles pay up.”)

Thanks, MSFT Copilot. Good enough, just like smart security software.

I worked through the free essay, which is a reprise of an earlier essay on the topic of running out of math problems. The reason that few cared about the topic is that most people cannot make change. Thinking about a world without math problems is an intellectual task which takes time from scamming the elderly, doom scrolling, generating synthetic information, or watching reruns of I Love Lucy.

The main point of the essay in my opinion is:

…take a look at any undergraduate text in mathematics. How many of them will mention recent research in mathematics from the last couple decades? I’ve never seen it.

New and math problems is an oxymoron.

I think the author is correct. As specialization becomes more desirable to a person, leaving the rest of the world behind is a consequence. But the issue arises in other disciplines. Consider artificial intelligence. That jazzy phrase embraces a number of mathematical premises, but it boils down to a few chestnuts, roasted, seasoned, and mixed with some interesting ethanols. (How about that wild and crazy Sir Thomas Bayes?)

My view is that as the apparent pace of information flow erodes social and cultural structures, the quest for “new” pushes a frantic individual to come up with a novelty. The problem with a novelty is that it takes one’s eye off the ball and ultimately the game itself. The present state of affairs in math was evident decades ago.

What’s interesting is that this issue is not new. In the early 1980s, Dialog Information Services hosted a mathematics database called MATHFILE. The person representing the database (now called MathSciNet) told me in 1981:

We are having a difficult time finding people to review increasingly narrow and highly specialized papers about an almost unknown area of mathematics.

Flash forward to 2024. Now this problem is getting attention and no one seems to care?

Several observations:

- Like smart software, maybe humans are running out of high-value information? Chasing ever smaller mathematical “insights” may be a reminder that humans and their vaunted creativity have limits, hard limits.

- If the premise of the paper is correct, the issue should be evident in other fields as well. I would suggest the creation of a “me too” index. The idea is that for a period of history, one can calculate how many knock off ideas grab the coat tails of an innovation. My hunch is that the state of most modern technical insight is high on the me too index. No, I am not counting “original” TikTok-type information objects.

- The fragmentation which seems apparent to me in mathematics and that interesting field of mathematical physics mirrors the fragmentation of certain cultural precepts; for example, ethical behavior. Why is everything “so bad”? The answer is, “Specialization.”

Net net: The pursuit of the ever more specialized insight hastens the erosion of larger ideas and cultural knowledge. We have come a long way in four decades. The direction is clear. It is not just a math problem. It is a now problem and it is pervasive. I want a hat that says, “I’m glad I’m old.”

Stephen E Arnold, July 26, 2024

Social Scoring Is a Thing and in Use in the US and EU Now

April 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Social scoring is a thing.

The EU AI regulations are not too keen on slapping an acceptability number on people or a social score. That’s a quaint idea because the mechanisms for doing exactly that are available. Furthermore, these are not controlled by the EU, and they are not constrained in a meaningful way in the US. The mechanisms for scoring a person’s behaviors chug along within the zippy world of marketing. For those who pay attention to policeware and intelware, many of the mechanisms are implemented in specialized software.

Will the two match up? Thanks, MSFT Copilot. Good enough.

There’s a good rundown of the social scoring tools in “The Role of Sentiment Analysis in Marketing.” The content is focused on using “emotional” and behavioral signals to sell stuff. However, the software and data sets yield high value information for other purposes. For example, an individual with access to data about the video viewing and Web site browsing of a person or a cluster of persons can make some interesting observations about that person or group.

Let me highlight some of the software mentioned in the write up. There is an explanation of the discipline of “sentiment analysis.” A person engaged in business intelligence, investigations, or planning a disinformation campaign will have to mentally transcode the lingo into a more practical vocabulary, but that’s no big deal. The write up then explains how “sentiment analysis” makes it possible to push a person’s buttons. The information makes clear that a service with a TikTok-type recommendation system or feed of “you will probably like this” can exert control over an individual’s ideas, behavior, and perception of what’s true or false.

The guts of the write up is a series of brief profiles of eight applications available to a marketer, PR team, or intelligence agency’s software developers. The products described are:

- Sprout Social. Yep, it’s wonderful. The company wrote the essay I am writing about.

- Reputation. Hello, social scoring for “trust” or “influence.”

- Monkeylearn. What’s the sentiment of content? Monkeylearn can tell you.

- Lexalytics. This is an old-timer in sentiment analysis.

- Talkwalker. A content scraper with analysis and filter tools. The company is not “into” over-the-transom inquiries.

If you have been thinking about the EU’s AI regulations, you might formulate an idea that existing software may irritate some regulators. My team and I think that AI regulations may bump into companies and government groups already using these tools. Working out the regulatory interactions between AI regulations and what has been a reasonably robust software and data niche will be interesting.

In the meantime, ask yourself, “How many intelware and policeware systems implement either these tools or similar tools?” In my AI presentation at the April 2024 US National Cyber Crime Conference, I will provide a glimpse of the future by describing a European company which includes some of these functions. Regulations do not control technology nor innovation.

Stephen E Arnold, April 9, 2024

Why Humans Follow Techno Feudal Lords and Ladies

December 19, 2023

This essay is the work of a dumb dinobaby. No smart software required.

“Seduced By The Machine” is an interesting blend of humans’ willingness to follow the leader and Silicon Valley revisionism. The article points out:

We’re so obsessed by the question of whether machines are rising to the level of humans that we fail to notice how the humans are becoming more like machines.

I agree. The write up offers an explanation — it’s arriving a little late because the Internet has been around for decades:

Increasingly we also have our goals defined for us by technology and by modern bureaucratic systems (governments, schools, corporations). But instead of providing us with something equally rich and well-fitted, they can only offer us pre-fabricated values, standardized for populations. Apps and employers issue instructions with quantifiable metrics. You want good health – you need to do this many steps, or achieve this BMI. You want expertise? You need to get these grades. You want a promotion? Hit these performance numbers. You want refreshing sleep? Better raise your average number of hours.

A modern high-tech pied piper leads many to a sanitized Burning Man? Sounds like fun. Look at the funny outfit. The music is a TikTok hit. The followers are looking forward to their next “experience.” Thanks, MSFT Copilot. One try for this cartoon. Good enough again.

The idea is that technology offers a short cut. Who doesn’t like a short cut? Do you want to write music in the manner of Herr Bach or do you want to do the loop and sample thing?

The article explores the impact of metrics; that is, the idea of letting Spotify make clear what a hit song requires. Now apply that malleability and success incentive to getting fit, getting start up funding, or any other friction-filled task. Let’s find some Teflon, folks.

The write up concludes with this:

Human beings tend to prefer certainty over doubt, comprehensibility to confusion. Quantified metrics are extremely good at offering certainty and comprehensibility. They seduce us with the promise of what Nguyen calls “value clarity”. Hard and fast numbers enable us to easily set goals, justify decisions, and communicate what we’ve done. But humans reach true fulfilment by way of doubt, error and confusion. We’re odd like that.

Hot button alert! Uncertainty means risk. Therefore, reduce risk. Rely on an “authority,” “data,” or “research.” What if the authority sells advertising? What if the data are intentionally poisoned (a somewhat trivial task according to watchers of disinformation outfits)? What if the research is made up? (I am thinking of the Stanford University president and the Harvard ethics whiz. Both allegedly invented data; both found themselves in hot water. But no one seems to have cared.)

With smart software — despite its hyperbolic marketing and its role as the next really Big Thing — finding its way into a wide range of business and specialized systems, just trust the machine output. I went for a routine check up. One machine reported I was near death. The doctor was recommending a number of immediate remediation measures. I pointed out that the data came from a single somewhat older device. No one knew who verified its accuracy. No one knew if the device was repaired. I noted that I was indeed still alive and asked if the somewhat nervous looking medical professional would get a different device to gather the data. Hopefully that will happen.

Is it a positive when the new pied piper of Hamelin wants to have control in order to generate revenue? Is it a positive when education produces individuals who do not ask, “Is the output accurate?” Some day, dinobabies like me will indeed be dead. Will the willingness of humans to follow the pied piper be different?

Absolutely not. This dinobaby is alive and kicking, no matter what the aged diagnostic machine said. Gentle reader, can you identify fake, synthetic, or just plain wrong data? If you answer yes, you may be in the top tier of actual thinkers. Those who are gatekeepers of information will define reality and take your money whether you want to give it up or not.

Stephen E Arnold, December 19, 2023

Predictive Analytics and Law Enforcement: Some Questions Arise

October 17, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-16.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

We wish we could prevent crime before it happens. With AI and predictive analytics it seems possible, but Wired shares that “Predictive Policing Software Terrible At Predicting Crimes.” Plainfield, NJ’s police department purchased Geolitica predictive software and it was not a wise use of taxpayer money. The Markup, a nonprofit investigative organization that wants technology to serve the common good, reported Geolitica’s accuracy:

“We examined 23,631 predictions generated by Geolitica between February 25 and December 18, 2018, for the Plainfield Police Department (PD). Each prediction we analyzed from the company’s algorithm indicated that one type of crime was likely to occur in a location not patrolled by Plainfield PD. In the end, the success rate was less than half a percent. Fewer than 100 of the predictions lined up with a crime in the predicted category, that was also later reported to police.”

The Markup also analyzed predictions for robberies and aggravated assaults that would occur in Plainfield, and the success rate was 0.6%. Burglary predictions were worse at 0.1%.
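The arithmetic behind “less than half a percent” is easy to check. The sketch below uses only the two figures quoted above: 23,631 predictions and “fewer than 100” matches. Because the exact match count is not given here, 100 is treated as an upper bound, which is an assumption for illustration, and the `success_rate` helper is a made-up name.

```python
def success_rate(hits, predictions):
    """Fraction of predictions that lined up with a reported crime."""
    return hits / predictions


# Figures from the quoted passage; 100 is an upper bound ("fewer than 100").
TOTAL_PREDICTIONS = 23_631
HITS_UPPER_BOUND = 100

rate_ceiling = success_rate(HITS_UPPER_BOUND, TOTAL_PREDICTIONS)

if __name__ == "__main__":
    # The real rate is below this ceiling because the true hit count is under 100.
    print(f"Success rate is below {rate_ceiling:.2%}")
```

Even the ceiling works out to well under one half of one percent, which squares with the quoted finding.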

The police weren’t really interested in using Geolitica either. They wanted to be accurate in predicting and reducing crime. The Plainfield, NJ, department hardly used the software and discontinued the program. Geolitica charged $20,500 for a one-year subscription, then $15,500 for yearly renewals. Geolitica had inconsistencies with information. Police found training and experience to be as effective as the predictions the software offered.

Geolitica will go out of business at the end of 2023. The law enforcement technology company SoundThinking hired Geolitica’s engineering team and will acquire some of their IP too. Police software companies are changing their products and services to manage police department data.

Crime data are important. Where crimes and victimization occur should be recorded and analyzed. Newark, New Jersey, used risk terrain modeling (RTM) to identify areas where aggravated assaults would occur. They used land data and found that vacant lots were large crime locations.

Predictive methods have value, but they also have application to specific use cases. Math is not the answer to some challenges.

Whitney Grace, October 17, 2023