LLM Unreliable? Probably Absolutely No Big Deal Whatsoever For Sure

July 19, 2023

![Vea4 thumbnail](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-40.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

My team and I are working on an interesting project. Part of that work requires that we grind through papers, journal articles, and self-published (and essentially unverifiable) comments about smart software.

“What do you mean the outputs from the smart software I have been using for my homework deliver the wrong answer?” says this disappointed user of a browser and word processor with artificial intelligence baked in. Is she damning recursion? MidJourney created this emotion-packed image of a person who has learned that she has been accused of plagiarism by her Sociology 215 professor.

Not surprisingly, we come across some wild and crazy information. On rare occasions we come across a paper, mostly ignored, which presents information that confirms many of our tests of smart software. When we do tests, we arrive with specific queries in mind. These relate to the behaviors of bad actors; for example, online services which front for cyber criminals, systems which are purpose built to make it time consuming to unmask a bad actor, and the question of which person owns a particular domain engaged in the sale of fullz.

You can probably guess that most of the smart and dumb online finding services are of little or no help. We have to check these, however, simply because we want to be thorough. At a meeting last week, one of my team members, who has a degree in library science, pointed out that the outputs from the services we use were becoming less useful than they were several months ago. I don’t spend too much time testing these services because I am a dinobaby and I run projects. My doing days are over. But I do listen to informed feedback. Her comment was one I had not seen in the Google PR onslaught about its method, the utterances of Sam AI-Man at OpenAI, or from the assorted LinkedIn gurus who post about smart software.

Then I spotted “How Is ChatGPT’s Behavior Changing over Time?”

I think the authors of the paper have documented what my team member articulated to me and others working on a smart software project. The paper states in polite academic prose:

Our findings demonstrate that the behavior of GPT-3.5 and GPT-4 has varied significantly over a relatively short amount of time.

The authors provide some data, a few diagrams, and some footnotes.

What is fascinating is that the most significant item in the journal article, in my opinion, is the use of the word “drifts.” Here’s the specific line:

Monitoring reveals substantial LLM drifts.

Yep, drifts.

What exactly is a drift in a numerical mélange like a large language model, its algorithms, and its probabilistic pulsing? In a nutshell, LLMs are formed by humans and use information to some degree created by humans. The idea is that sharp corners are created from decisions and data which may have rounded corners or be the equivalent of a wad of Play-Doh after a kindergartener manipulates the stuff. The idea is that layers of numerical recipes are hooked together to output information useful to a human or system.

Those who worked with early versions of the Autonomy Neuro Linguistic black box know about the Play-Doh effect. Train the system on a crafted set of documents (information). Run test queries. Adjust a few knobs and dials afforded by the Autonomy system. Turn it loose on the Word documents and other content for which filters were installed. Then let users run queries.

To be upfront, the early version of Autonomy in 1999 or 2000 was pretty darned good. However, Autonomy recommended that the system be retrained every few months.

Why?

The answer, as I recall, is that as new data were encountered by the Autonomy Neuro Linguistic engine, the engine had to cope with new words, names of companies, and phrases. Without retraining, the system would use what it had from its initial set up and tuning. Without retraining or recalibration, the Autonomy system would return results which were less useful in some situations. Operate a system without retraining, and the results would degrade over time.

Math types labor to make inference-hooked and probabilistic systems stay on course. The systems today use tricks that make a controlled vocabulary look like the tool of a dinobaby like me. Without getting into the weeds, the Autonomy system would drift.

And what does the cited paper say? “LLMs drift too.”

What does this mean? Here’s my dinobaby list of items to keep in mind:

- Smart software, if left to its own devices, will degrade over time; that is, outputs will drift from what the user wants. Feedback from users accelerates the drift because some feedback is, from the smart software’s point of view, spot on even if it is crazy or off the wall. Do this over a period of time and you get what the paper’s authors and my team member pointed out: Degradation.

- Users who know how to look at a system’s outputs and validate or identify off the mark results can take corrective action; that is, ignore the outputs or fix them up. This is not common, and it requires specialized knowledge, time, and mental sharpness. Those who depend on TikTok or a smart system may not have these qualities in equal amounts.

- Entrepreneurs want money, power, or a new Tesla. Bringing up issues about smart software growing increasingly crazy like the dinobaby down the street is not valued. Hence, substantive problems with smart systems will require time, money, and expertise to remediate. Who wants that? Smart software is designed to improve efficiency, reduce costs, and make money. The result is a group of individuals who do PR, not up-to-snuff software.
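For readers who would rather measure drift than take a dinobaby's word for it, the first two items above can be sketched in Python. This is a minimal illustration, not the cited paper's method: it assumes you keep a fixed set of test queries with known answers and save what each model version returned. The names `Snapshot`, `accuracy`, and `drift_report`, and the 5 percent tolerance, are hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Answers one model version gave to a fixed set of test queries."""
    label: str
    answers: dict[str, str]  # query text -> answer text


def accuracy(snapshot: Snapshot, expected: dict[str, str]) -> float:
    """Fraction of the fixed queries that the snapshot answered as expected."""
    hits = sum(1 for query, want in expected.items()
               if snapshot.answers.get(query) == want)
    return hits / len(expected)


def drift_report(old: Snapshot, new: Snapshot,
                 expected: dict[str, str], tolerance: float = 0.05) -> dict:
    """Compare two snapshots on the same queries.

    `tolerance` is an assumed threshold: an accuracy change larger than
    this is flagged as drift. Pick a value that suits your own tests.
    """
    old_acc = accuracy(old, expected)
    new_acc = accuracy(new, expected)
    # Queries whose answer changed at all, right or wrong.
    changed = sorted(q for q in expected
                     if old.answers.get(q) != new.answers.get(q))
    return {
        "old_accuracy": old_acc,
        "new_accuracy": new_acc,
        "changed_queries": changed,
        "drifted": abs(new_acc - old_acc) > tolerance,
    }
```

The human who knows the right answers still has to supply `expected`. The sketch only does the bookkeeping; it does not replace the specialized knowledge and mental sharpness mentioned in the list.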

Will anyone pay attention to this cited journal article? Sure, a few interns and maybe a graduate student or two. But at this time, the trend is that AI works and AI applied to something delivers a solution. Is that solution reliable or is it just good enough? What if the outputs deteriorate in a subtle way over time? What’s the fix? Who is responsible? The engineer who fiddled with thresholds? The VP of product development who dismissed objections about inherent bias in outputs?

I think you may have an answer to these questions. As a dinobaby, I can say, “Folks, I don’t have a clue about fixing up the smart software juggernaut.” I am skeptical of those who say, “Hey, it just works.” Okay, I hope you are correct.

Stephen E Arnold, July 19, 2023

Researchers Break New Ground with a Turkey Baster and Zoom

April 4, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I do not recall much about my pre-school days. I do recall dropping off my two children at their pre-schools at different times. My recollections are fuzzy. I recall horrible finger paintings carried to the automobile and, several times a month, mashed pieces of cake. I recall quite a bit of laughing, shouting, and jabbering about classmates whom I did not know. Truth be told I did not want to meet these progeny of highly educated, upwardly mobile parents who wore clothes with exposed logos and drove Volvo station wagons. I did not want to meet the classmates. The idea of interviewing pre-kindergarten children struck me as a waste of time and an opportunity to get chocolate Baskin-Robbins cake smeared on my suit. (I am a dinobaby, remember. Dress for success. White shirt. Conservative tie. Yada yada.)

I thought (briefly, very briefly) about the essay in Science Daily titled “Preschoolers Prefer to Learn from a Competent Robot Than an Incompetent Human.” The “real news” article reported without one hint of sarcastic ironical skepticism:

We can see that by age five, children are choosing to learn from a competent teacher over someone who is more familiar to them — even if the competent teacher is a robot…

Okay. How were these data gathered? I absolutely loved the use of Zoom, a turkey baster, and nonsense terms like “fep.”

Fascinating. First, the idea of using Zoom and a turkey baster would never have roamed across this dinobaby’s mind. Second, the intuitive leap by the researchers that pre-schoolers who finger-paint would like to undertake this deeply intellectual task with a robot, not a human. The human, from my experience, is necessary to prevent the delightful sprouts from eating the paint. Third, I wonder if the research team’s first year statistics professor explained the concept of a valid sample.

One thing is clear from the research. Teachers, your days are numbered unless you participate in the Singularity with Ray Kurzweil or are part of the school systems’ administrative group riding the nepotism bus.

“Fep.” A good word to describe certain types of research.

Stephen E Arnold, April 4, 2023

More Impressive Than Winning at Go: WolframAlpha and ChatGPT

March 27, 2023

For a rocket scientist, Stephen Wolfram and his team are reasonably practical. I want to call your attention to this article on Dr. Wolfram’s Web site: “ChatGPT Gets Its Wolfram Superpowers.” In the essay, Dr. Wolfram explains how the computational system behind WolframAlpha can be augmented with ChatGPT. The examples in his write up are interesting and instructive. What can the WolframAlpha ChatGPT plug in do? Quite a bit.

I found this passage interesting:

ChatGPT + Wolfram can be thought of as the first truly large-scale statistical + symbolic “AI” system…. in ChatGPT + Wolfram we’re now able to leverage the whole stack: from the pure “statistical neural net” of ChatGPT, through the “computationally anchored” natural language understanding of Wolfram|Alpha, to the whole computational language and computational knowledge of Wolfram Language.

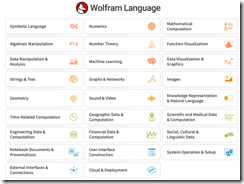

The WolframAlpha module works with these information needs:

The Wolfram Language module does some numerical cartwheels, and I was impressed. I am not sure how high school calculus teachers will respond to the WolframAlpha – ChatGPT mash up, however. Here’s a snapshot of what Wolfram Language can do at this time:

One helpful aspect of Dr. Wolfram’s essay is that he notes differences between an earlier version of ChatGPT and the one used for the mash up. Navigate to this link and sign up for the plug in.

Stephen E Arnold, March 27, 2023

Making Data Incomprehensible

March 8, 2023

I spotted a short write up with 100 pictures called “1 Dataset 100 Visualizations.” The write up presents a simple table:

And converts or presents the data 100 different ways.

Here’s an example:

My reactions to the examples are:

- Why are the colors presented with low contrast? Many of the charts’ graphics are incomprehensible. The texts’ illegibility underscores the disconnect between being understood and being in a weird world of incomprehensibility.

- What’s wrong with the basic table? It works. Why create a graph? Oh, I know. To be clever. Nope, not clever. Performative demonstration of numerical expertise perhaps?

- The Wall Street Journal and other “real news” organizations love these types of obscurification. I can visualize the goose bumps which form on the arms of these individuals. The anticipation of making something fuzzy is a thrilling moment.

Yikes. Marketing methods to be unclear.

Stephen E Arnold, March 8, 2023

Worthless Data Work: Sorry, No Sympathy from Me

February 27, 2023

I read a personal essay about “data work.” The title is interesting: “Most Data Work Seems Fundamentally Worthless.” I am not sure of the age of the essayist, but the pain is evident in the word choice; for example: Flavor of despair (yes, synesthesia in a modern technology awakening write up!), hopeless passivity (yes, a digital Sisyphus!), essentially fraudulent (shades of Bernie Madoff!), fire myself (okay, self loathing and an inner destructive voice), and much, much more.

But the point is not the author for me. The big idea is that when it comes to data, most people want a chart and don’t want to fool around with numbers, statistical procedures, data validation, and context of the how, where, and what of the collection process.

Let’s go to the write up:

How on earth could we have what seemed to be an entire industry of people who all knew their jobs were pointless?

Like Elizabeth Barrett Browning, the essayist enumerates the wrongs of data analytics as a vaudeville act:

- Talking about data is not “doing” data

- Garbage in, garbage out

- No clue about the reason for an analysis

- Making marketing and others angry

- Unethical colleagues wallowing in easy money

What’s ahead? I liked these statements which are similar to what a digital Walt Whitman via ChatGPT might say:

I’ve punched this all out over one evening, and I’m still figuring things out myself, but here’s what I’ve got so far… that’s what feels right to me – those of us who are despairing, we’re chasing quality and meaning, and we can’t do it while we’re taking orders from people with the wrong vision, the wrong incentives, at dysfunctional organizations, and with data that makes our tasks fundamentally impossible in the first place. Quality takes time, and right now, it definitely feels like there isn’t much of a place for that in the workplace.

Imagine. Data and working with it have an inherent negative impact. We live in a data driven world. Is that why many processes are dysfunctional? Hey, Sisyphus, what are the metrics on your progress with the rock?

Stephen E Arnold, February 27, 2023

Confessions? It Is That Time of Year

December 23, 2022

Forget St. Augustine.

Big data, data science, or whatever you want to call it was the precursor to artificial intelligence. Tech people pursued careers in the field, but after the synergy and hype wore off the real work began. According to WD in his RYX,R blog post: “Goodbye, Data Science,” the work was tedious, low-value, unfulfilling, and left little room for career growth.

WD worked as a data scientist for a few years, then quit in pursuit of the higher calling as a data engineer. He will be working on the implementation of data science instead of its origins. He explained why he left in four points:

• “The work is downstream of engineering, product, and office politics, meaning the work was only often as good as the weakest link in that chain.

• Nobody knew or even cared what the difference was between good and bad data science work. Meaning you could suck at your job or be incredible at it and you’d get nearly the same regards in either case.

• The work was often very low value-add to the business (often compensating for incompetence up the management chain).

• When the work’s value-add exceeded the labor costs, it was often personally unfulfilling (e.g. tuning a parameter to make the business extra money).”

WD’s experiences sound like those of everyone who is disenchanted with their line of work. He worked with managers who would not listen when they were told stupid projects would fail. The managers were more concerned with keeping their bosses and shareholders happy. He also mentioned that engineers are inflamed with self-grandeur and scientists are bad at code. He worked with young and older data people who did not know what they were doing.

As a data engineer, WD has more free time, more autonomy, better career advancements, and will continue to learn.

Whitney Grace, December 23, 2022

Trackers? More Plentiful Than Baloney Press Releases

December 22, 2022

You are absolutely correct if you think more Web sites are asking you to approve their cookie settings. More Web sites are tracking your personal information to send you targeted ads. Tech Radar explains more about trackers in, “You’re Not Wrong-Websites Have Way More Trackers Now.”

NordVPN discovered that the average Web site has forty-eight trackers, which puts users at risk. NordVPN used three tracker blockers (Badger, Brave, and uBlock Origin) to count the number of trackers across the one hundred most popular Web sites in twenty-five countries. Social media platforms had the most trackers at 160, health Web sites were second with forty-six, and digital media Web sites had twenty-eight. Ironically, government and adult Web sites had the fewest trackers.
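How does one count trackers? The article does not spell out NordVPN's procedure, so what follows is only a rough Python sketch of the general idea: list the requests a page makes, discard those going back to the site itself, and tally who owns the rest. The `OWNERS` table is a tiny illustrative sample, not a real blocklist, and `base_domain` takes a naive shortcut (the last two labels of the host name) that a serious tool would replace with the Public Suffix List.

```python
from collections import Counter
from urllib.parse import urlsplit

# Illustrative sample only; real blockers ship lists with thousands of entries.
OWNERS = {
    "doubleclick.net": "Google",
    "google-analytics.com": "Google",
    "facebook.net": "Facebook",
    "demdex.net": "Adobe",
}


def base_domain(url: str) -> str:
    """Naive registrable domain: the last two labels of the host name."""
    host = urlsplit(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def third_party_domains(page_url: str, request_urls: list[str]) -> list[str]:
    """Domains contacted by the page that differ from the page's own domain."""
    site = base_domain(page_url)
    domains = (base_domain(u) for u in request_urls)
    return [d for d in domains if d and d != site]


def owner_share(domains: list[str]) -> dict[str, float]:
    """Share of third-party requests attributed to each known owner."""
    counts = Counter(OWNERS.get(d, "other") for d in domains)
    total = sum(counts.values())
    return {owner: n / total for owner, n in counts.items()}
```

Feed `third_party_domains` the request log from a browser's network panel and pass the result to `owner_share` to get percentages of the sort quoted in the article. Note that the sketch counts every third-party request as a tracker, which overstates the total because it also counts harmless content delivery networks.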

Third parties were tied to the trackers. Thirty percent belonged to Google, 11% to Facebook, and 7% to Adobe. All data is used for marketing reasons. North and Central Europe had the fewest trackers because of privacy laws. The US is a tracker’s playground because there are not any blanket laws that protect user privacy. It is an Orwellian system for capitalist purposes:

“For NordVPN, the problem with collecting this data is that it can be used to profile the users in great detail. The profile is then sold to advertising companies, whose ads “follow” the users around the internet to collect even more data.

Worse still, cybercriminals might get their hands on this data at any point, and could then use this data in phishing attacks that use a victim’s in-depth personal profile to appear authentic, making them more likely to fall for the ruse.”

The article doubles as a marketing tool for VPN services, particularly NordVPN. VPNs collect user information too, except they can hide it. Interesting how the article wants to inform people about the dangers of tracking and wants to sell a product too.

Whitney Grace, December 22, 2022

Common Sense: A Refreshing Change in Tech Write Ups

December 13, 2022

I want to give a happy quack to this article: “Forget about Algorithms and Models — Learn How to Solve Problems First.” The common sense write up suggests that big data cowboys and cowgirls make sure of their problem solving skills before doing the algorithm and model Lego drill. To make this point clear: Put foundations in place before erecting a structure which may fail in interesting ways.

The write up says:

For programmers and data scientists, this means spending time understanding the problem and finding high-level solutions before starting to code.

But in an era of do your own research and thumbtyping will common sense prevail?

Not often.

The article provides a list of specific steps to follow as part of the foundation for the digital confection. Worth reading; however, the write up tries to be upbeat.

A positive attitude is a plus. Too bad common sense is not particularly abundant in certain fascinating individual and corporate actions; to wit:

- Doing the FBX talkathons

- Installing spyware without legal okays

- Writing marketing copy that asserts a cyber security system will protect a licensee.

You may have your own examples. Common sense? Not abundant in my opinion. That’s why a book like How to Solve It: Modern Heuristics is unlikely to be on many nightstands of some algorithm and data analysts. Do I know this for a fact? Nope, just common sense. Thumbtypers, remember?

Stephen E Arnold, December 13, 2022

TikTok: Algorithmic Data Slurping

November 14, 2022

There are several reasons TikTok rocketed to social-media dominance in just a few years. For example, its user-friendly creation tools plus a library of licensed tunes make it easy to create engaging content. Then there was the billion-dollar marketing campaign that enticed users away from Facebook and Instagram. But, according to the Guardian, it was the recommendation engine behind its For You Page (FYP) that really did the trick. Writer Alex Hern describes “How TikTok’s Algorithm Made It a Success: ‘It Pushes the Boundaries.’” He tells us:

“The FYP is the default screen new users see when opening the app. Even if you don’t follow a single other account, you’ll find it immediately populated with a never-ending stream of short clips culled from what’s popular across the service. That decision already gave the company a leg up compared to the competition: a Facebook or Twitter account with no friends or followers is a lonely, barren place, but TikTok is engaging from day one. It’s what happens next that is the company’s secret sauce, though. As you scroll through the FYP, the makeup of videos you’re presented with slowly begins to change, until, the app’s regular users say, it becomes almost uncannily good at predicting what videos from around the site are going to pique your interest.”

And so a user is hooked. Beyond the basics, specifically how the algorithm works is a mystery even, we’re told, to those who program it. We do know the AI takes the initiative. Instead of only waiting for users to select a video or tap a reaction, it serves up test content and tweaks suggestions based on how its suggestions are received. This approach has another benefit. It ensures each video posted on the platform is seen by at least one user, and every positive interaction multiplies its reach. That is how popular content creators quickly amass followers.
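TikTok's actual algorithm is, as noted, a mystery. Still, the general pattern of serving test content and adjusting based on the reaction resembles a textbook technique called an epsilon-greedy bandit. The Python sketch below shows that technique and nothing more. The class name `EpsilonGreedyFeed`, the category labels, and the 10 percent exploration rate are assumptions made for illustration, not details of the For You Page.

```python
import random


class EpsilonGreedyFeed:
    """Toy recommender: mostly show what works, sometimes try something else."""

    def __init__(self, categories, epsilon=0.1, seed=None):
        self.epsilon = epsilon          # assumed share of picks spent on test content
        self.rng = random.Random(seed)
        self.shown = {c: 0 for c in categories}
        self.liked = {c: 0 for c in categories}

    def _like_rate(self, category):
        """Observed fraction of shown clips in this category that were liked."""
        shown = self.shown[category]
        return self.liked[category] / shown if shown else 0.0

    def pick(self):
        """Choose the category for the next clip."""
        if self.rng.random() < self.epsilon:
            # Explore: serve test content from a random category.
            return self.rng.choice(list(self.shown))
        # Exploit: serve the category with the best like rate so far.
        return max(self.shown, key=self._like_rate)

    def record(self, category, liked):
        """Store how the user reacted to a clip from `category`."""
        self.shown[category] += 1
        if liked:
            self.liked[category] += 1
```

A caller alternates `pick()` and `record()`: every reaction nudges the like rates, so the mix of clips shifts toward whatever the user rewards, while the occasional random pick keeps probing for new interests.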

Success can be measured in different ways, of course. Though TikTok has captured a record number of users, it is not doing so well in the critical monetization category. Estimates put its 2021 revenue at less than 5% of Facebook’s, and efforts to export its e-commerce component have not gone as hoped. Still, it looks like the company is ready to try, try again. Will its persistence pay off?

Cynthia Murrell, November 14, 2022

About Fancy Math: Struggles Are Not Exciting, Therefore Ignored

November 10, 2022

Ask yourself, “How many of my colleagues understand statistical procedures?” I am waiting.

Okay, enough time.

Navigate to “Pollsters Struggle to Improve Forecasts.” If you have a dead tree version of the Wall Street Journal, the story appears on page A4. If you have the online version of the paper, pay up and click this link. If you cannot locate the story, well, that’s life in the Murdoch high tech universe.

The article reports:

Overall, national polls in 2020 were the most inaccurate in 40 years, a study by the main association of survey researchers found, and state-level polls in 2016 were significantly off the mark.

So what?

Check out “Nate Silver Admits He Got Played by the GOP But Blames the Democrats for Not Using Poor Polling Practices.” The write up explains how a wizard fumbled the data ball. How many other whiz kids fumble data balls but do not come up with lame excuses and finger pointing?

Smart software relies on procedures not too distant from those used by pollsters. How accurate are the outputs from these massively hyped systems? Close enough for horseshoes? Good enough? Are you ready to let smart software determine how to treat your cancer, drive your vehicle, and grade your bright young 10-year-old?

Stephen E Arnold, November 10, 2022