China Smart US Dumb: An AI Content Marketing Push?

December 1, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I have been monitoring the China Smart, US Dumb campaign for some time. Most of the methods are below the radar; for example, YouTube videos featuring industrious people who seem to be similar to the owner of the Chinese restaurant not far from my office or posts on social media that remind me of the number of Chinese patents achieved each year. Sometimes influencers tout the wonders of a China-developed electric vehicle. None of these sticks out like a semi mainstream media push.

Thanks, Venice.ai, not exactly the hutong I had in mind but close enough for chicken kung pao in Kentucky.

However, that background “China Smart, US Dumb” messaging may be cranking up. I don’t know for sure, but this NBC News (not the Miss Now news) report caught my attention. Here’s the title:

The subtitle is snappier than Girl Fixes Generator, but you judge for yourself:

AI Startups Are Seeing Record Valuations, But Many Are Building on a Foundation of Cheap, Free-to-Download Chinese AI Models.

The write up states:

Surveying the state of America’s artificial intelligence landscape earlier this year, Misha Laskin was concerned. Laskin, a theoretical physicist and machine learning engineer who helped create some of Google’s most powerful AI models, saw a growing embrace among American AI companies of free, customizable and increasingly powerful “open” AI models.

We have a Xoogler who is concerned. What troubles the wizardly Misha Laskin? NBC News intones in a Stone Phillips tone:

Over the past year, a growing share of America’s hottest AI startups have turned to open Chinese AI models that increasingly rival, and sometimes replace, expensive U.S. systems as the foundation for American AI products.

Ever cautious, NBC News asserts:

The growing embrace could pose a problem for the U.S. AI industry. Investors have staked tens of billions on OpenAI and Anthropic, wagering that leading American artificial intelligence companies will dominate the world’s AI market. But the increasing use of free Chinese models by American companies raises questions about how exceptional those models actually are — and whether America’s pursuit of closed models might be misguided altogether.

Bingo! The theme is China smart and the US “misguided.” And not just misguided, but “misguided altogether.”

NBC News slams the point home with more force than the generator repairing Asian female closes the generator’s housing:

in the past year, Chinese companies like Deepseek and Alibaba have made huge technological advancements. Their open-source products now closely approach or even match the performance of leading closed American models in many domains, according to metrics tracked by Artificial Analysis, an independent AI benchmarking company.

I know from personal conversations that most of the people with whom I interact don’t care. Most just accept the belief that the US is chugging along. Not doing great. Not doing terribly. Just moving along. Therefore, I don’t expect you, gentle reader, to think much of this NBC News report.

That’s why the China Smart, US Dumb messaging is effective. But this single example raises the question, “What’s the next major messaging outlet to cover this story?”

Stephen E Arnold, December 1, 2025

AI ASICs: China May Have Plans for AI Software and AI Hardware

December 1, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I try to avoid wild and crazy generalizations, but I want to step back from the US-centric AI craziness and ask a question, “Why is the solution to anticipated AI growth more data centers?” Data centers seem like a trivial part of the broader AI challenge to some of the venture firms, BAIT (big AI technology) companies, and some online pundits. A data center is a cheap building filled with racks of computers, some specialized gizmos, a connection to the local power company, and a handful of network engineers. Bingo. You are good to go.

But what happens if the compute is provided by Application-Specific Integrated Circuits or ASICs? When ASICs became available for crypto currency mining, the individual or small-scale miner was no longer attractive. What happened is that large, industrialized crypto mining farms pushed out the individual miners or mom-and-pop data centers.

The Ghana ASIC roll out appears to have overwhelmed the person taking orders. Demand for cheap AI compute is strong. Is that person in the blue suit from Nvidia? Thanks, MidJourney. Good enough, the mark of excellence today.

Amazon, Google, and probably other BAIT outfits want to design their own AI chips. The problem is similar to moving silos of corn to a processing plant with a couple of pick up trucks. Capacity at chip fabrication facilities is constrained. Big chip ideas today may not be possible on the time scale set by the team designing NFL arena size data centers in Rhode Island- or Mississippi-type locations.

A Chinese startup founded by a former Google engineer claims to have created a new ultra-efficient and relatively low cost AI chip using older manufacturing techniques. Meanwhile, Google itself is now reportedly considering whether to make its own specialized AI chips available to buy. Together, these chips could represent the start of a new processing paradigm which could do for the AI industry what ASICs did for bitcoin mining.

What those ASICs did for crypto mining was shift calculations from individuals to large, centralized data centers. Yep, centralization is definitely better. Big is a positive as well.

The write up adds:

The Chinese startup is Zhonghao Xinying. Its Ghana chip is claimed to offer 1.5 times the performance of Nvidia’s A100 AI GPU while reducing power consumption by 75%. And it does that courtesy of a domestic Chinese chip manufacturing process that the company says is "an order of magnitude lower than that of leading overseas GPU chips." By "an order of magnitude lower," the assumption is that means well behind in technological terms given China’s home-grown chip manufacturing is probably a couple of generations behind the best that TSMC in Taiwan can offer and behind even what the likes of Intel and Samsung can offer, too.
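Here is a quick sketch of what those two claimed numbers imply when combined. It assumes that "reducing power consumption by 75%" means the chip draws one quarter of the A100's power for the same job, and it takes the startup's figures at face value. The function name is my own illustration, not anything from the write up.

```python
def perf_per_watt_ratio(relative_performance: float, power_reduction: float) -> float:
    """Performance per watt relative to a baseline chip.

    relative_performance: claimed speed versus the baseline (1.5 means 1.5x).
    power_reduction: claimed fractional cut in power draw (0.75 means 75% less).
    Both inputs are vendor claims, not measurements.
    """
    relative_power = 1.0 - power_reduction  # fraction of baseline power still drawn
    return relative_performance / relative_power


# Claimed figures from the quoted passage: 1.5x the A100, 75% less power.
ratio = perf_per_watt_ratio(1.5, 0.75)
print(f"Claimed performance per watt vs. A100: {ratio:.1f}x")
```

If both claims hold, the chip would deliver about six times the A100's work per watt. That is a big "if" because the numbers come from the company itself.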

The idea is that if these chips become widely available, they won’t be very good. Probably like the first Chinese BYD electric vehicles. But after some iterative engineering, the Chinese chips are likely to improve. If these improvements coincide with the turn on of the massive data centers the BAIT outfits are building, there might be rethinking required by the Silicon Valley wizards.

Several observations will be offered but these are probably not warranted by anyone other than myself:

- China might subsidize its home grown chips. The Googler is not the only person in the Middle Kingdom trying to find a way around the US approach to smart software. Cheap wins or is disruptive until neutralized in some way.

- New data centers based on the Chinese chips might find customers interested in stepping away from dependence on a technology that most AI companies are using for “me too”, imitative AI services. Competition is good, says Silicon Valley, until it impinges on our business. At that point, tough-to-predict actions come into play.

- Nvidia and other AI-centric companies might find themselves trapped in AI strategies that are comparable to a large US aircraft carrier. These ships are impressive, but it takes time to slow them down, turn them, and steam in a new direction. If Chinese AI ASICs hit the market and improve rapidly, the captains of the US-flagged Transformer vessels will have their hands full and financial officers clamoring for the leadership’s attention.

Net net: Ponder this question: What is Ghana gonna do?

Stephen E Arnold, December 1, 2025

Can the Chrome Drone Deorbit Comet?

November 28, 2025

Perplexity developed Comet, an intuitive AI-powered Internet browser. Analytic Insight has a rundown on Comet in the article: “Perplexity CEO Aravind Srinivas Claims Comet AI Browser Could ‘Kill’ Android System.” Perplexity designed Comet for more complex tasks such as booking flights, shopping, and answering then executing simple prompts. The new browser is now being released for Android OS.

Until recently Comet was an exclusive, invite-only browser for the desktop. It is now available for download. Comet is taking the same approach for its Android release. Perplexity hopes Comet will overtake Android as the top mobile OS, or so CEO Aravind Srinivas plans.

Another question is whether Comet could overtake Chrome as the favored AI browser:

“The launch of Comet AI browser coincides with the onset of a new conflict between AI browsers. Not long ago, OpenAI introduced ChatGPT Atlas, while Microsoft Edge and Google Chrome are upgrading their platforms with top-of-the-line AI tools. Additionally, Perplexity previously received attention for a $34.5 billion proposal to acquire Google Chrome, a bold move indicating its aspirations.

Comet, like many contemporary browsers, is built on the open-source Chromium framework provided by Google, which is also the backbone for Chrome, Edge, and other major browsers. With Comet’s mobile rollout and Srinivas’s bold claim, Perplexity is obviously betting entirely on an AI-first future, one that will see a convergence of the browser and the operating system.”

Comet is built on Chromium. Chrome is too. Comet is a decent web browser, but it doesn’t have the power of Alphabet behind it. Chrome will dominate the AI-browser race because it has money to launch a swarm of digital drones at this frail craft.

Whitney Grace, November 28, 2025

What Can a Monopoly Type Outfit Do? Move Fast and Break Things Not Yet Broken

November 26, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb3_thumb-4.gif) This essay is the work of a dumb dinobaby. No smart software required.

CNBC published “Google Must Double AI Compute Every 6 Months to Meet Demand, AI Infrastructure Boss Tells Employees.”

How does the math work out? Big numbers result, as well as big power demands, pressure on suppliers, and an incentive to enter hyper-hype mode for marketing, I think.
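A back-of-the-envelope sketch of that math follows. It assumes only what the CNBC headline says: capacity doubles every six months. The function name and the time horizons are my own illustration, not figures from CNBC or Google.

```python
def compute_multiple(years: float, doubling_period_years: float = 0.5) -> float:
    """Growth multiple after `years` if capacity doubles once per period."""
    doublings = years / doubling_period_years  # number of doublings in the span
    return 2.0 ** doublings


# Hypothetical horizons chosen for illustration only.
for years in (1, 2, 5):
    print(f"{years} year(s): {compute_multiple(years):,.0f}x today's capacity")
```

Under that assumption, one year means four times today's capacity, two years means 16 times, and five years means 1,024 times. Compounding does the heavy lifting, and so must the power company.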

Thanks, Venice.ai. Good enough.

The write up states:

Google’s AI infrastructure boss [maybe a fellow named Amin Vahdat, the leadership responsible for Machine Learning, Systems and Cloud AI?] told employees that the company has to double its compute capacity every six months in order to meet demand for artificial intelligence services.

Whose demand exactly? Commercial enterprises, Google’s other leadership, or people looking for a restaurant in an unfamiliar town?

The write up notes:

Hyperscaler peers Microsoft, Amazon and Meta also boosted their capex guidance, and the four companies now expect to collectively spend more than $380 billion this year.

Faced with this robust demand, what differentiates the Google from other monopoly-type companies? CNBC delivers a bang up answer to my question:

Google’s “job is of course to build this infrastructure but it’s not to outspend the competition, necessarily,” Vahdat said. “We’re going to spend a lot,” he said, adding that the real goal is to provide infrastructure that is far “more reliable, more performant and more scalable than what’s available anywhere else.” In addition to infrastructure buildouts, Vahdat said Google bolsters capacity with more efficient models and through its custom silicon. Last week, Google announced the public launch of its seventh generation Tensor Processing Unit called Ironwood, which the company says is nearly 30 times more power efficient than its first Cloud TPU from 2018. Vahdat said the company has a big advantage with DeepMind, which has research on what AI models can look like in future years.

As I read it, Google will spend the same as a competitor but, because Google is Googley, the company will deliver better reliability, faster, and more easily made bigger AI than the non-Googley competition. Google is focused on efficiency. To me, Google bets that its engineering and programming expertise will give it an unbeatable advantage. The VP of Machine Learning, Systems and Cloud AI does not mention the fact that Google has its magical advertising system and about 85 percent of the global Web search market via its assorted search-centric services. Plus one must not overlook the fact that the Google is vertically integrated: Chips, data centers, data, smart people, money, and smart software.

The write up points out that Google knows there are risks with its strategy. But FOMO is more important than worrying about costs and technology. But what about users? Sure, okay, eyeballs, but I think Google means humanoids who have time to use Google whilst riding in Waymos and hanging out waiting for a job offer to arrive on an Android phone. Google doesn’t need to worry. Plus it can just bump up its investments until competitors are left dying in the desert known as Death Vall-AI.

After being beaten to the draw in the PR battle with Microsoft, the Google thinks it can win the AI jackpot. But what if it fails? No matter. The AI folks at the Google know that the automated advertising system that collects money at numerous touch points is for now churning away 24×7. Googzilla may just win because it is sitting on the cash machine of cash machines. Even counterfeiters in Peru and Vietnam cannot match Google’s money spinning capability.

Is it game over? Will regulators spring into action? Will Google win the race to software smarter than humans? Sure. Even if part of the push to own the next big thing is puffery, the Google is definitely confident that it will prevail just like Superman and the truth, justice, and American way have. The only hitch in the git along may be having captured enough electrical service to keep the lights on and the power flowing. Lots of power.

Stephen E Arnold, November 26, 2025

Telegram, Did You Know about the Kiddie Pix Pyramid Scheme?

November 25, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb3_thumb-5.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

The Independent, a newspaper in the UK, published “Leader of South Korea’s Biggest Telegram Sex Abuse Ring Gets Life Sentence.” The subtitle is a snappy one: “Seoul Court Says Kim Nok Wan Committed Crimes of Extreme Brutality.” Note: I will refer to this convicted person as Mr. Wan. The reason is that he will spend time in solitary confinement. In my experience individuals involved in kiddie crimes are at the bottom of the totem pole among convicted people. If the prison director wants to keep him alive, he will be kept away from the general population. Even though most South Koreans are polite, it is highly likely that he will face a less than friendly greeting when he visits the TV room or exercise area. Therefore, my designation of Mr. Wan reflects the pallor his skin will evidence.

Now to the story:

The main idea is that Mr. Wan signed up for Telegram. He relied on Telegram’s Group and Channel function. He organized a social community dubbed the Vigilantes, a word unlikely to trigger kiddie pix filters. Then he “coerced victims, nearly 150 of them minors, into producing explicit material through blackmail and then distribute the content in online chat rooms.”

Telegram’s leader sets an example for others who want to break rules and be worshiped. Thanks, Venice.ai. Too bad you ignored my request for no facial hair. Good enough, the standard for excellence today I believe.

Mr. Wan’s innovation was to set up what the Independent called “a pyramid hierarchy.” Think of a Herbalife- or OneCoin-type operation. He incorporated an interesting twist. According to the Independent:

He also sent a video of a victim to their father through an accomplice and threatened to release it at their workplace.

Let’s shift from the clever Mr. Wan to Telegram and its public and private Groups and Channels. The French arrested Pavel Durov in August 2024. The French judiciary identified a dozen crimes he allegedly committed. He awaits trial for these alleged crimes. Since that arrest, Telegram has, based on our monitoring of Telegram, blocked more aggressively a number of users and Groups for violating Telegram’s rules and regulations such as they are. However, Mr. Wan appears to have slipped through despite Telegram’s filtering methods.

Several observations:

- Will Mr. Durov implement content moderation procedures to block, prevent, and remove content like Mr. Wan’s?

- Will South Korea take a firm stance toward Telegram’s use in the country?

- Will Mr. Durov cave in to Iran’s demands so that Telegram is once again available in that country?

- Did Telegram know about Mr. Wan’s activities on the estimable Telegram platform?

Mr. Wan exploited Telegram. Perhaps more forceful actions should be taken by other countries against services which provide a greenhouse for certain types of online activity to flourish? Mr. Durov is a tech bro, and he has been pictured carrying a real (not metaphorical) goat to suggest that he is the greatest of all time.

That perception appears to be at odds with the risk his platform poses to children in my opinion.

Stephen E Arnold, November 25, 2025

Why the BAIT Outfits Are Drag Netting for Users

November 25, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Have you wondered why the BAIT (big AI tech) companies are pumping cash into what looks to many like a cash bonfire? Here’s one answer, and I think it is a reasonably good one. Navigate to “Best Case: We’re in a Bubble. Worst Case: The People Profiting Most Know Exactly What They’re Doing.” I want to highlight several passages and then offer my usually-ignored observations.

Thanks, Venice.ai. Good enough, but I am not sure how many AI execs wear old-fashioned camping gear.

I noted this statement:

The best case scenario is that AI is just not as valuable as those who invest in it, make it, and sell it believe.

My reaction to this bubble argument is that the BAIT outfits realized after Microsoft said, “AI in Windows” that a monopoly-type outfit was making a move. Was AI the next oil or railroad play? When Google did its really professional and carefully-planned Code Red or Yellow or whatever, the hair-on-fire moment arrived. Now almost three years later, the hot air from the flaming coifs is equaled by the fumes of incinerating bank notes.

The write up offers this comment:

My experience with AI in the design context tends to reflect what I think is generally true about AI in the workplace: the smaller the use case, the larger the gain. The larger the use case, the larger the expense. Most of the larger use cases that I have observed — where AI is leveraged to automate entire workflows, or capture end to end operational data, or replace an entire function — the outlay of work is equal to or greater than the savings. The time we think we’ll save by using AI tends to be spent on doing something else with AI.

The experiences of my team and me support this statement. However, when I go back to the early days of online in the 1970s, the benefits of moving from print research to digital (online) research were tangible. They were quantifiable. Online is where AI lives. As a result, the technology is not global. It is a subset of functions. The more specific the problem, the more likely it is that smart software can help with a segment of the work. The idea that cobbled together methods based on built-in guesses will be wonderful is just plain crazy. Once one thinks of AI as a utility, then it is easier to identify a use case where careful application of the technology will deliver a benefit. I think of AI as a slightly more sophisticated spell checker for writing at the 8th grade level.

The essay points out:

The last ten years have practically been defined by filter bubbles, alternative facts, and weaponized social media — without AI. AI can do all of that better, faster, and with more precision. With a culture-wide degradation of trust in our major global networks, it leaves us vulnerable to lies of all kinds from all kinds of sources and no standard by which to vet the things we see, hear, or read.

Yep, this is a useful way to explain that flows of online information tear down social structures. What’s not referenced, however, is that rebuilding will take a long time. Think about smashing your mom’s favorite knick-knack. Were you capable of making it as good as new? Sure, a few specialists might be able to do a good job, but the time and cost mean that once something is destroyed, that something is gone. The rebuild is at best a close approximation. That’s why people who want to go back to social structures in the 1950s are chasing a fairy tale.

The essay notes:

When a private company can construct what is essentially a new energy city with no people and no elected representation, and do this dozens of times a year across a nation to the point that half a century of national energy policy suddenly gets turned on its head and nuclear reactors are back in style, you have a sudden imbalance of power that looks like a cancer spreading within a national body.

My view is that the BAIT outfits want to control, dominate, and cash in. Hey, if you have cancer and one company has the alleged cure, are you going to take the drug or just die?

Several observations are warranted:

- BAIT outfits want to be the winner and be the only alpha dog. Ruthless behavior will be the norm for these firms.

- AI is the next big thing. The idea is that if one wishes it, thinks it, or invests in it, AI will be. My hunch is that the present methodologies are on the path to becoming the equivalent of a dial up modem.

- The social consequences of the AI utility added to social media are either ignored or not understood. AI is the catalyst needed to turn one substance into an explosion.

Net net: Good essay. I think the downsides referenced in the essay understate the scope of the challenge.

Stephen E Arnold, November 25, 2025

Pavel Durov Can Travel As Some New Features Dribble from the Core Engineers

November 25, 2025

This essay is the work of a dumb dinobaby. No smart software required.

In November 2025, Telegram announced Cocoon, its AI system. Well, it is not yet revolutionizing writing code for smart contracts. Like Apple, Telegram is a bit late to the AI dog race. But there is hope for the company which has faced some headwinds. One blowing from the west is the criminal trial for which Pavel Durov, the founder of Telegram, waits. Plus, the value of the much-hyped TONcoin, the subject of yet another investigation for financial fancy dancing, is tanking.

What’s the good news? Telegram watching outfits like FoneArena and PCNews.ru have reported on some recent Telegram innovations. Keep in mind that using Telegram means that a new user installs the Messenger mini app. This is an “everything” app. Through the interface one can do a wide range of actions. Yep, that’s why it is called an “everything” app. You can read Telegram’s own explanation in the firm’s blog.

FoneArena reports that the Dubai-based virtual company (yeah, go figure that out) has rolled out Live Stories streaming, repeated messages, and gift auctions. Repeated messages will spark some bot developers to build this function into applications. Notifications (wanted and unwanted) are useful in certain types of advertising campaigns. The gift auctions are little more than a hybrid of Google ad auctions and eBay applied to the highly volatile, speculative crypto confections Telegram, users, and developers allegedly find of great value.

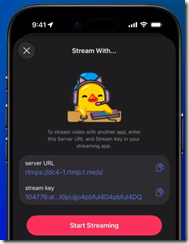

The Live Stories streaming is more significant. Rolled out in November 2025, Live Stories allows users to broadcast live streams within the Stories service. Viewers can post comments and interact in real time in a live chat. During a stream, viewers may highlight or pin their messages using Telegram Stars, which is a form of crypto cash. A visible Star counter appears in the corner of the broadcast. Gamification is a big part of the Telegram way. Gambling means crypto transactions. Transactions incur a service charge. A user can kick off a Live Story from a personal account or from a Group or Channel that has unlocked Story posting via boosts. Owners have to unlock the Live Story, however. Plus, the new service supports the Real-Time Messaging Protocol (RTMP) for external applications such as OBS and XSplit streaming software.

The interface for Live Stories streaming. Is Telegram angling to kill off Twitch and put a dent in Discord? Will the French judiciary forget to try Pavel Durov for his online service’s behavior? It appears that Mr. Durov and his core engineers think so.

Observations are warranted:

- Live Stories is likely to catch the attention of some of the more interesting crypto promoters who make use of Telegram

- Telegram’s monitoring service will have to operate in real time because anyone can drop in a short but interesting video promo for certain illegal or controversial activities; that monitoring will have to operate better than the Cleveland Browns American football team

- The soft hooks to pump up service charges or “gas fees” in the lingo of the digital currency enthusiasts are an important part of gift and auction play. Think hooking users on speculative investments in digital goodies and then scraping off those service charges.

Net net: Will Cocoon make it easier for developers to code complex bots, mini apps, and distributed applications (dApps)? Answer: Not yet. Just go buy a gift on Telegram. PS. Mr. Zuckerberg, Telegram has aced you again it seems.

Stephen E Arnold, November 25, 2025

Tim Apple, Granny Scarfs, and Snooping

November 24, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I spotted a write up in a source I usually ignore. I don’t know if the write up is 100 percent on the money. Let’s assume for the purpose of my dinobaby persona that it indeed is. The write up is “Apple to Pay $95 Million Settle Suit Accusing Siri Of Snoopy Eavesdropping.” Like Apple’s incessant pop ups about my not logging into Facetime, iMessage, and iCloud, Siri being in snoop mode is not surprising to me. Tim Apple, it seems, is winding down. The pace of innovation, in my opinion, is tortoise like. I have nothing against turtle like creatures, but a granny scarf for an iPhone? That’s innovation, almost as cutting edge as the candy colored orange iPhone. Stunning indeed.

Is Frederick the Great wearing an Apple Granny Scarf? Thanks, Venice.ai. Good enough.

What does the write up say about this $95 million sad smile?

Apple has agreed to pay $95 million to settle a lawsuit accusing the privacy-minded company of deploying its virtual assistant Siri to eavesdrop on people using its iPhone and other trendy devices. The proposed settlement filed Tuesday in an Oakland, California, federal court would resolve a 5-year-old lawsuit revolving around allegations that Apple surreptitiously activated Siri to record conversations through iPhones and other devices equipped with the virtual assistant for more than a decade.

Apple has managed to work the legal process for five years. Good work, legal eagles. Billable hours and legal moves generate income if my understanding is correct. Also, the notion of “surreptitiously” fascinates me. Why do the crazy screen nagging? Just activate what you want and remove the users’ options to disable the function. If you want to be surreptitious, the basic concept as I understand it is to operate so others don’t know what you are doing. Good try, but you failed to implement appropriate secretive operational methods. Better luck next time or just enable what you want and prevent users from turning off the data vacuum cleaner.

The write up notes:

Apple isn’t acknowledging any wrongdoing in the settlement, which still must be approved by U.S. District Judge Jeffrey White. Lawyers in the case have proposed scheduling a Feb. 14 court hearing in Oakland to review the terms.

I interpreted this passage to mean that the Judge has to do something. I assume that lawyers will do something. Whoever brought the litigation will do something. It strikes me that Apple will not be writing a check any time soon, nor will the fine change how Tim Apple has set up that outstanding Apple entity to harvest money, data, and good vibes.

I have several questions:

- Will Apple offer a complimentary Granny Scarf to each of its attorneys working this case?

- Will Apple’s methods of harvesting data be revealed in a white paper written by either [a] Apple, [b] an unhappy Apple employee, or [c] a researcher laboring in the vineyards of Stanford University or San Jose State?

- Will regulatory authorities and the US judicial folks take steps to curtail the “we do what we want” approach to privacy and security?

I have answers for each of these questions. Here we go:

- No. Granny Scarfs are sold out

- No. No one wants to be hassled endlessly by Apple’s legions of legal eagles

- No. As the recent Meta decision about WhatsApp makes clear, green light, tech bros. Move fast, break things. Just do it.

Stephen E Arnold, November 24, 2025

Google: AI or Else. What a Pleasant, Implicit Threat

November 24, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Do you remember that old chestnut of a how-to book? I think its title was How to Win Friends and Influence People. I think the book contains a statement like this:

“Instead of condemning people, let’s try to understand them. Let’s try to figure out why they do what they do. That’s a lot more profitable and intriguing than criticism; and it breeds sympathy, tolerance and kindness. “To know all is to forgive all.” ”

The Google leadership has mastered this approach. Look at its successes. An advertising system that sells access to users from an automated bidding system running within the Google platform. Isn’t that a way to breed sympathy for the company’s approach to serving the needs of its customers? Another example is the brilliant idea of making a Google-centric Agentic Operating System for the world. I know that the approach leaves plenty of room for Google partners, Google high performers, and Google services. Won’t everyone respond in a positive way to the “space” that Google leaves for others?

Thanks, Venice.ai. Good enough.

I read “Google Boss Warns No Company Is Going to Be Immune If AI Bubble Bursts.” What an excellent example of putting the old-fashioned precepts of Dale Carnegie’s book into practice. The soon-to-be-sued BBC article states:

Speaking exclusively to BBC News, Sundar Pichai said while the growth of artificial intelligence (AI) investment had been an “extraordinary moment”, there was some “irrationality” in the current AI boom… “I think no company is going to be immune, including us,” he said.

My memory doesn’t work the way it did when I was 13 years old, but I think I heard this same Silicon Valley luminary say, “Code Red” when Microsoft announced a deal to put AI in its products and services. With the klaxon sounding and flashing warning lights, Google began pushing people and money into smart software. Thus, the AI craze was legitimized. Not even the spat between Sam Altman and Elon Musk could slow the acceleration. And where are we now?

The chief Googler, a former McKinsey & Company consultant, is explaining that the AI boom is rational and irrational. Is that a threat from a company that knee jerked its way forward? Is Google saying that I should embrace AI or suffer the consequences? Mr. Pichai is worried about the energy needs of AI. That’s good. Because one doesn’t need to be an expert in utility forecast demand analysis to figure out that if the announced data centers are built, there will probably be brownouts or power rationing. Companies like Google can pay their electric bills; others may not have the benefit of that outstanding advertising system to spit out cash with the heartbeat of an atomic clock.

I am not sure that Dale Carnegie would have phrased statements like these, assuming the words tumbled from Google’s leader as presented in the article:

“We will have to work through societal disruptions,” he said, adding that it would also “create new opportunities”. “It will evolve and transition certain jobs, and people will need to adapt,” he said. Those who do adapt to AI “will do better”. “It doesn’t matter whether you want to be a teacher [or] a doctor. All those professions will be around, but the people who will do well in each of those professions are people who learn how to use these tools.”

This sure sounds like a dire prediction for people who don’t “learn how to use these tools.” I would go so far as to suggest that one of the progenitors of the AI craziness is making another threat. I interpret the comment as meaning, “Get with the program or you will never work again anywhere.”

How uplifting. Imagine that old coot Dale Carnegie saying in the 1930s that you will do poorly if you don’t get with the Googley AI program? One of Dale’s off-the-wall comments was:

“The only way to influence people is to talk in terms of what the other person wants.”

The statements in the BBC story make one thing clear: I know what Google wants. I am not sure it is what other people want. Obviously the wacko Dale Carnegie is not in tune with the McKinsey consultant’s pragmatic view of what Google wants. Poor Dale. It seems his observations do not line up with the Google view of life for those who don’t do AI.

Stephen E Arnold, November 24, 2025

Collaboration: Why Ask? Just Do. (Great Advice, Job Seeker)

November 24, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.



I am too old to have an opinion about collaboration in 2025. I am a slacker, not a user of Slack. I don’t “GoTo” meetings; I stay in my underground office. I don’t “chat” on Facebook or smart software. I am, therefore, qualified to comment on the essay “Collaboration Sucks.” The main point of the essay is that collaboration is not a positive. (I know that this person has not worked at a blue chip consulting firm. If you don’t collaborate, you better have telepathy. Otherwise, you will screw up in a spectacular fashion with the client and the lucky colleagues who get to write about your performance or just drop hints to a Carpetland dweller.)

The essay states:

We aim to hire people who are great at their jobs and get out of their way. No deadlines, minimal coordination, and no managers telling you what to do. In return, we ask for extraordinarily high ownership and the ability to get a lot done by yourself. Marketers ship code, salespeople answer technical questions without backup, and product engineers work across the stack.

To me, this sounds like a Silicon Valley commandment along with “Go fast and break things” or “It’s easier to ask forgiveness than it is to get permission.” Allegedly Rear Admiral Grace Hopper offered this observation. However, Admiral Craig Hosmer told me that her attitude did more harm to females in the US Navy’s technical services than she thought. Which Admiral does one believe? I believe what Admiral Hosmer told me when I provided technical support to his little Joint Committee on Nuclear Energy many years ago.

Thanks, Venice.ai. Good enough.

The idea that a team of really smart and independent specialists can do great things is what has made respected managers familiar with legal processes around the world. I think Google just received an opportunity to learn from its $600 million fine levied by Germany. Moving fast, Google made some interesting decisions about German price comparison sites. I won’t raise again the specter of the AI bubble and the leadership methods of Sam AI-Man. Everything is working out just swell, right?

The write up presents seven reasons why collaboration sucks. Most of the reasons revolve around flaws in a person. I urge you to read the seven variations on the theme of insecurity, impostor syndrome, and cluelessness.

My view is that collaboration, like any business process, depends on the context of the task and the work itself. In some organizations, employees can do almost anything because middle managers (if they are still present) have little idea about what’s going on with workers who are in an office half a world away, down the hall but playing Foosball, pecking away at a laptop in a small, overpriced apartment in Plastic Fantastic (aka San Mateo), or working from a van and hoping the Starlink is up.

I like the idea of crushing collaboration. I urge those who want to practice this skill to join a big time law firm, a blue chip consulting firm, or engage in the work underway at a pharmaceutical research lab. I love the tips the author trots out; specifically:

- Just ship the code, product, whatever. Ignore inputs like Slack messages.

- Tell the boss or leader, you are the “driver.” (When I worked for the Admiral, I would suggest that this approach was not appropriate for the context of that professional, the work related to nuclear weapons, or a way to win his love, affection, and respect. I would urge the author to track down a four star and give his method a whirl. Let me know how that works out.)

- Tell people what you need. That’s a great idea if one has power and influence. If not, it is probably important to let ChatGPT word an email for you.

- Don’t give anyone feedback until the code or product has shipped. This is a career builder in some organizations. It is quite relevant when a massive penalty ensues because an individual withheld knowledge and thus made the problem worse. (There is something called “discovery.” And, guess what, those Slack and email messages can be potent.)

- Listen to inputs but just do what you want. (In my 60 year work career, I am not sure this has ever been good advice. In an AI outfit, it’s probably gold for someone. Isn’t there something called Fool’s Gold?)

Plus, there is one item on the action list for crushing collaboration I did not understand. Maybe you can divine its meaning? “If you are a team lead, or leader of leads, who has been asked for feedback, consider being more you can just do stuff.”

Several observations:

- I am glad I am not working in Sillycon Valley any longer. I loved the commute from Berkeley each day, but the craziness in play today would not match my context. Translation: I have had enough of destructive business methods. Find someone else to do your work.

- The suggestions for killing collaboration may kill one’s career except in toxic companies. (Notice that I did not identify AI-centric outfits. How politic of me.)

- The management failure implicit in this approach to colleagues, suggestions, and striving for quality is obvious to me. My fear is that some young professionals may see this collaboration sucks approach and fail to recognize the issues it creates.

Net net: When you hire, I suggest you match the individual to the context and the expertise required to the job. Short cuts contribute to the high failure rate of start ups and the dead end careers some promising workers create for themselves.

Stephen E Arnold, November 24, 2025