Microsoft Demonstrates Its Commitment to Security. Right, Copilot

December 4, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read on November 20, 2025, an article titled “Critics Scoff after Microsoft Warns AI Feature Can Infect Machines and Pilfer Data.” My immediate reaction was, “So what’s new?” I put the write up aside. I had to run an errand, so I grabbed the print out of this Ars Technica story in case I had to wait for the shop to hunt down my dead lawn mower.

A hacking club in Moscow celebrates Microsoft’s decision to enable agents in Windows. The group seems quite happy despite sanctions, food shortages, and the special operation. Thanks, MidJourney. Good enough.

I worked through the short write up and spotted a couple of useful (if true) factoids. It may turn out that the information in this Ars Technica write up provides insight about Microsoft’s approach to security. If I am correct, threat actors, assorted money laundering outfits, and run-of-the-mill state actors will be celebrating. If I am wrong, rest easy. Cyber security firms will have no problem blocking threats, for a small fee of course.

The write up points to what the article calls a “warning” from Microsoft on November 18, 2025. The report says:

an experimental AI agent integrated into Windows can infect devices and pilfer sensitive user data

Yep, Ars Technica then puts a cherry on top with this passage:

Microsoft introduced Copilot Actions, a new set of “experimental agentic features” that, when enabled, perform “everyday tasks like organizing files, scheduling meetings, or sending emails,” and provide “an active digital collaborator that can carry out complex tasks for you to enhance efficiency and productivity.”

But don’t worry. Users can use these Copilot actions:

if you understand the security implications.

Wow, that’s great. We know from the psycho-pop best seller Thinking, Fast and Slow that more than 80 percent of people cannot figure out how much a ball costs if a bat and a ball total $1.10 and the bat costs one dollar more than the ball. Also, Microsoft knows that most Windows users do not disable defaults. I think that even Microsoft knows that turning on agentic magic by default is not a great idea.

Nevertheless, this means that agents combined with large language models are sparking celebrations among the less trustworthy sectors of those who ignore laws and social behavior conventions. Agentic Windows is the new theme park for online crime.

Should you worry? I will let you decipher this statement allegedly from Microsoft. Make up your own mind, please:

“As these capabilities are introduced, AI models still face functional limitations in terms of how they behave and occasionally may hallucinate and produce unexpected outputs,” Microsoft said. “Additionally, agentic AI applications introduce novel security risks, such as cross-prompt injection (XPIA), where malicious content embedded in UI elements or documents can override agent instructions, leading to unintended actions like data exfiltration or malware installation.”

I thought this sub head in the article exuded poetic craft:

Like macros on Marvel superhero crack

The article reports:

Microsoft’s warning, one critic said, amounts to little more than a CYA (short for cover your ass), a legal maneuver that attempts to shield a party from liability. “Microsoft (like the rest of the industry) has no idea how to stop prompt injection or hallucinations, which makes it fundamentally unfit for almost anything serious,” critic Reed Mideke said. “The solution? Shift liability to the user. Just like every LLM chatbot has a ‘oh by the way, if you use this for anything important be sure to verify the answers’ disclaimer, never mind that you wouldn’t need the chatbot in the first place if you knew the answer.”

Several observations are warranted:

- How about that commitment to security after SolarWinds? Yeah, I bet Microsoft forgot that.

- Microsoft is doing what is necessary to avoid the issues that arise when the Board of Directors has a macho moment and asks whoever is the Top Dog at the time, “What about the money spent on data centers and AI technology? You know, how are you going to recoup those losses?”

- Microsoft is not asking its users about agentic AI. Microsoft has decided that the future of Microsoft is to make AI the next big thing. Why? Microsoft is an alpha in a world filled with lesser creatures. The answer? Google.

Net net: This Ars Technica article makes crystal clear that security is not top of mind among Softies. Hey, when’s the next party?

Stephen E Arnold, December 4, 2025

AI Agents and Blockchain-Anchored Exploits:

November 20, 2025

This essay is the work of a dumb dinobaby. No smart software required.

In October 2025, Google published “New Group on the Block: UNC5142 Leverages EtherHiding to Distribute Malware,” which generated significant attention across cybersecurity publications, including Barracuda’s cybersecurity blog. While the EtherHiding technique was originally documented in Guard.io’s 2023 report, Google’s analysis focused specifically on its alleged deployment by a nation-state actor. The methodology itself shares similarities with earlier exploits: the 2016 CryptoHost attack also utilized malware concealed within compressed files. This layered obfuscation approach resembles matryoshka (Russian nesting dolls) and incorporates elements of steganography, the practice of hiding information within seemingly innocuous messages.

Recent analyses emphasize the core technique: exploiting smart contracts, immutable blockchains, and malware delivery mechanisms. However, an important underlying theme emerges from Google’s examination of UNC5142’s methodology: the increasing role of automation. Modern malware campaigns already leverage spam modules for phishing distribution, routing obfuscation to mask server locations, and bots that harvest user credentials.

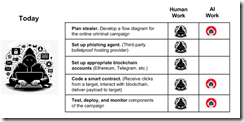

With rapid advances in agentic AI systems, the trajectory toward fully automated malware development becomes increasingly apparent. Currently, exploits still require threat actors to manually execute fundamental development tasks, including coding blockchain-enabled smart contracts that evade detection.

During a recent presentation to law enforcement, attorneys, and intelligence professionals, I outlined the current manual requirements for blockchain-based exploits. Threat actors must currently complete standard programming project tasks: [a] Define operational objectives; [b] Map data flows and code architecture; [c] Establish necessary accounts, including blockchain and smart contract access; [d] Develop and test code modules; and [e] Deploy, monitor, and optimize the distributed application (dApp).

The diagrams from my lecture series on 21st-century cybercrime illustrate what I believe requires urgent attention: the timeline for when AI agents can automate these tasks. While I acknowledge my specific timeline may require refinement, the fundamental concern remains valid—this technological convergence will significantly accelerate cybercrime capabilities. I welcome feedback and constructive criticism on this analysis.

The diagram above illustrates how contemporary threat actors can leverage AI tools to automate as many as one half of the tasks required for a Vibe Blockchain Exploit (VBE). However, successful execution still demands either a highly skilled individual operator or the ability to recruit, coordinate, and manage a specialized team. Large-scale cyber operations remain resource-intensive endeavors. AI tools are increasingly accessible and often available at no cost. Not surprisingly, AI is a standard component in the threat actor’s arsenal of digital weapons. Also, recent reports indicate that threat actors are already using generative AI to accelerate vulnerability exploitation and tool development. Some operations are automating certain routine tactical activities; for example, phishing. Despite these advances, a threat actor has to get his, her, or the team’s hands under the hood of an operation.

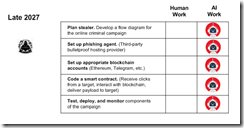

Now let’s jump forward to 2027.

The diagram illustrates two critical developments in the evolution of blockchain-based exploits. First, the threat actor’s role transforms from hands-on execution to strategic oversight and decision-making. Second, increasingly sophisticated AI agents assume responsibility for technical implementation, including the previously complex tasks of configuring smart contract access and developing evasion-resistant code. This represents a fundamental shift: the majority of operational tasks transition from human operators to autonomous software systems.

Several observations appear to be warranted:

- Trajectory and Detection Challenges. While the specific timeline remains subject to refinement, the directional trend for Vibe Blockchain Exploits (VBE) is unmistakable. Steganographic techniques embedded within blockchain operations will likely proliferate. The encryption and immutability inherent to blockchain technology significantly extend investigation timelines and complicate forensic analysis.

- Democratization of Advanced Cyber Capabilities. The widespread availability of AI tools, combined with continuous capability improvements, fundamentally alters the threat landscape by reducing deployment time, technical barriers, and operational costs. Our analysis indicates sustained growth in cybercrime incidents. Consequently, demand for better and advanced intelligence software and trained investigators will increase substantially. Contrary to sectors experiencing AI-driven workforce reduction, the AI-enabled threat environment will generate expanded employment opportunities in cybercrime investigation and digital forensics.

- Asymmetric Advantages for Threat Actors. As AI systems achieve greater sophistication, threat actors will increasingly leverage these tools to develop novel exploits and innovative attack methodologies. A critical question emerges: Why might threat actors derive greater benefit from AI capabilities than law enforcement agencies? Our assessment identifies a fundamental asymmetry. Threat actors operate with fewer behavioral constraints. While cyber investigators may access equivalent AI tools, threat actors maintain operational cadence advantages. Bureaucratic processes introduce friction, and legal frameworks often constrain rapid response and hamper innovation cycles.

Current analyses of blockchain-based exploits overlook a crucial convergence: the combination of advanced AI systems, blockchain technologies, and agile agentic operational methodologies for threat actors. These will present unprecedented challenges to regulatory authorities, intelligence agencies, and cybercrime investigators. Addressing this emerging threat landscape requires institutional adaptation and strategic investment in both technological capabilities and human expertise.

Stephen E Arnold, November 20, 2025

Surprise! Countries Not Pals with the US Are Using AI to Spy. Shocker? Hardly

November 17, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

The Beeb is a tireless “real” news outfit. Like some Manhattan newscasters, fixing up reality to make better stories, the BBC allowed a couple of high-profile members of leadership to find their future elsewhere. Maybe the chips shop in Slough?

Thanks, Venice.ai. You are definitely outputting good enough art today.

I am going to suspend my disbelief and point to a “real” news story about a US company. The story is “AI Firm Claims Chinese Spies Used Its Tech to Automate Cyber Attacks.” The write up reveals information that should not surprise anyone except the Beeb. The write up reports:

The makers of artificial intelligence (AI) chatbot Claude claim to have caught hackers sponsored by the Chinese government using the tool to perform automated cyber attacks against around 30 global organizations. Anthropic said hackers tricked the chatbot into carrying out automated tasks under the guise of carrying out cyber security research. The company claimed in a blog post this was the “first reported AI-orchestrated cyber espionage campaign”.

What’s interesting is that Anthropic itself was surprised. If Google and Microsoft are making smart software part of the “experience,” why wouldn’t bad actors avail themselves of the tools? Information about lashing smart software to a range of online activities is not exactly a secret.

What surprises me about this “news” is:

- Why is Anthropic spilling the beans about a nation state using its technology? Once such an account is identified, block it. Use pattern matching to determine if others are doing substantially similar exploits. Block those. If you want to become a self appointed police professional, get used to the cat-and-mouse game. You created the system. Deal with it.

- Why is the BBC presenting old information as something new? Perhaps its intrepid “real” journalists should pay attention to the public information distributed by cyber security firms? I think that is called “research”, but that may be surfing on news releases or running queries against ChatGPT or Gemini. Why not try Qwen, the China-affiliated system?

- I wonder why the Google-Anthropic tie up is not mentioned in the write up. Google released information about a quite specific smart exploit a few months ago. Was this information used by Anthropic to figure out that a bad actor was an Anthropic user? Is there a connection here? I don’t know, but that’s what investigative types are supposed to consider and address.

My personal view is that Anthropic is positioning itself as a tireless defender of truth, justice, and the American way. The company may also benefit from some of Google’s cyber security efforts. Google owns Mandiant and is working hard to make the Wiz folks walk down the yellow brick road to the Googleplex.

Net net: Bad actors using low cost, subsidized, powerful, and widely available smart software is not exactly a shocker.

Stephen E Arnold, November 17, 2025

Cyber Security: Do the Children of Shoemakers Have Yeezies or Sandals?

November 7, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

When I attended conferences, I liked to stop at the exhibitor booths and listen to the sales pitches. I remember one event held in a truly shabby hotel in Tyson’s Corner. The vendor whose name escapes me explained that his firm’s technology could monitor employee actions, flag suspicious behaviors, and virtually eliminate insider threats. I stopped at the booth the next day and asked, “How can your monitoring technology identify individuals who might flip the color of their hat from white to black?” The answer was, “Patterns.” I found the response interesting because virtually every cyber security firm with whom I have interacted over the years talks about patterns.

Thanks, OpenAI. Good enough.

The problem is that these mostly brute-force methods, such as flagging that employee A tried to access a Dark Web site known for selling malware, work only if the bad actor is clueless. Individuals aware of the methods can sidestep them. But what happens if the bad actors were actually wearing white hats, riding white stallions, and saying, “Hi ho, Silver, away”?

Here’s the answer: “Prosecutors allege incident response pros used ALPHV/BlackCat to commit string of ransomware attacks.” The write up explains that “cybersecurity turncoats attacked at least five US companies while working for” cyber security firms. Here’s an interesting passage from the write up:

Ryan Clifford Goldberg, Kevin Tyler Martin and an unnamed co–conspirator — all U.S. nationals — began using ALPHV, also known as BlackCat, ransomware to attack companies in May 2023, according to indictments and other court documents in the U.S. District Court for the Southern District of Florida. At the time of the attacks, Goldberg was a manager of incident response at Sygnia, while Martin, a ransomware negotiator at DigitalMint, allegedly collaborated with Goldberg and another co-conspirator, who also worked at DigitalMint and allegedly obtained an affiliate account on ALPHV. The trio are accused of carrying out the conspiracy from May 2023 through April 2025, according to an affidavit.

How long did the malware attacks persist? Just from May 2023 until April 2025.

Obviously the purpose of the bad behavior was money. But the key point is that, according to the article, “he was recruited by the unnamed co-conspirator.”

And that, gentle reader, is how bad actors operate. Money pressure, some social engineering probably at a cyber security conference, and a pooling of expertise. I am not sure that insider threat software can identify this type of behavior. The evidence is that multiple cyber security firms employed these alleged bad actors and the scam was afoot for more than 20 months. And what about the people who hired these individuals? That screening seems to be somewhat spotty, doesn’t it?

Several observations:

- Cyber security firms themselves are not able to operate in a secure manner.

- Trust in Fancy Dan software may be misplaced. Managers and co-workers need to be alert and have a way to communicate suspicions in an appropriate way.

- The vendors of insider threat detection software may want to provide some hard proof that their systems operate when hats change from white to black.

Everyone talks about the boom in smart software. But cyber security is undergoing a similar economic gold rush. This example, if it is indeed accurate, indicates that companies may develop, license, and use cyber security software. Does it work? I suggest you ask the “leadership” of the firms involved in this legal matter.

Stephen E Arnold, November 7, 2025

Myanmar Direct Action: Online Cyber Crime Meets Kinetics

November 7, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “Stragglers from Myanmar Scam Center Raided by Army Cross into Thailand As Buildings Are Blown Up.” In August 2024, France took Pavel Durov into custody at a Paris airport. The direct action worked. Telegram has been wobbling. Myanmar, perhaps learning from the French decision to arrest Mr. Durov, shut down an online fraud operation. The Associated Press reported on October 28, 2025: “The KK Park site, identified by Thai officials and independent experts as housing a major cybercrime operation, was raided by Myanmar’s army in mid-October as part of operations starting in early September to suppress cross-border online scams and illegal gambling.”

News reports and white papers from the United Nations make clear that sites like KK Park are more like industrial estates. Dormitories, office space, and eating facilities are provided. “Workers” or captives remain within the defined area. The Golden Triangle region strikes me as a Wild West for a range of cyber crimes, including pig butchering and industrial-scale phishing.

The geographic names and the details of the different groups in an area with competing political groups can be confusing. However, what is clear is that Myanmar’s military assaulted the militia groups protecting the facilities. Reports of explosions and people fleeing the area have become public. The cited news report says that Myanmar has been a location known to be tolerant or indifferent to certain activities within its borders.

Will Myanmar take action against other facilities believed to be involved in cyber crime? KK Park is just one industrial campus from which threat actors conduct their activities. Is Myanmar’s response a signal that law enforcement is fed up with certain criminal activity and moving with directed prejudice at certain operations? Will other countries follow the French and Myanmar method?

The big question is, “What caused Myanmar to focus on KK Park?” Will Cambodia, Lao PDR, and Thailand follow the French view that enough is enough and advance to physical engagement?

Stephen E Arnold, November 7, 2025

Iran and Crypto: A Short Cut Might Not Be Working

November 6, 2025

One factor about cryptocurrency mining (and AI) that is glossed over by news outlets is the amount of energy required to keep the servers running. In short, it’s a lot! The Cool Down reports how one Middle Eastern country is dealing with a cryptocurrency crisis: “Stunning Report Reveals Government-Linked Crypto Crisis: ‘Serious And Unimaginable’”.

What is very interesting (and not surprising) about the crypto-currency mining is who is doing it: the Iranian government. Iran is dealing with an energy crisis and the citizens are dismayed. Lakes are drying up and there are abundant power outages. Iran is dealing with one of the worst droughts in its modern history.

Iran’s people have protested, but it’s like pushing a boulder up hill: no one is listening. Iran is home to a large saltwater lake, Lake Urmia, and it has transformed into a marsh.

Here’s what one expert said:

“An Iranian engineer cited by The Observer alleged that cryptocurrency mining by the state is consuming up to 5% of electricity, contributing to water and power depletion. "We are in a serious and unimaginable crisis," Iran President Masoud Pezeshkian said as he urged action during a recent cabinet meeting.”

The Iranian government has temporarily closed offices and is rationing resources, but it likely won’t be enough to curb power demanded by the crypto mining.

Iran could demolish its authoritarian and fundamentalist religious government, invest in a mixed economy, liberate women, and invest in education and technology to prepare for a better future. That likely won’t happen.

Whitney Grace, November 6, 2025

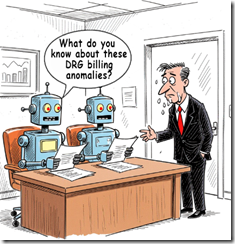

Medical Fraud Meets AI. DRG Codes Meet AI. Enjoy

November 4, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I have heard that some large medical outfits make use of DRG “chains” or “coding sequences.” I picked up this information when my team and I worked on what is called a “subrogation project.” I am not going to explain how subrogation works or what the mechanisms operating are. These are back office or invisible services that accompany something that seems straightforward. One doesn’t buy stock from a financial advisor; there is plumbing and plumbing companies that do this work. The hospital sends you a bill; there is plumbing and plumbing companies providing systems and services. To sum up, a hospital bill is often large, confusing, opaque, and similar to a secret language. Mistakes happen, of course. But often inflated medical bills do more to benefit the institution and its professionals than the person with the bill in his or her hand. (If you run into me at an online fraud conference, I will explain how the “chain” of codes works. It is slick and not well understood by many of the professionals who care for the patient. It is a toss up whether Miami or Nashville is the Florence of medical fancy dancing. I won’t argue for either city, but I would add that Houston and LA should be in the running for the most creative center of certain activities.)

“Grieving Family Uses AI Chatbot to Cut Hospital Bill from $195,000 to $33,000 — Family Says Claude Highlighted Duplicative Charges, Improper Coding, and Other Violations” contains some information that will be [a] good news for medical fraud investigators and [b] bad news for some health care providers and individual medical specialists in their practices. The person with the big bill had to joust with the provider to get a detailed, line item breakdown of certain charges. Once that anti-social institution provided the detail, it was time for AI.

The write up says:

Claude [Anthropic, the AI outfit hooked up with Google] proved to be a dogged, forensic ally. The biggest catch was that it uncovered duplications in billing. It turns out that the hospital had billed for both a master procedure and all its components. That shaved off, in principle, around $100,000 in charges that would have been rejected by Medicare. “So the hospital had billed us for the master procedure and then again for every component of it,” wrote an exasperated nthmonkey. Furthermore, Claude unpicked the hospital’s improper use of inpatient vs emergency codes. Another big catch was an issue where ventilator services are billed on the same day as an emergency admission, a practice that would be considered a regulatory violation in some circumstances.

Claude, the smart software, clawed through the data. The smart software identified certain items that required closer inspection. The AI helped the human using Claude to get the health care provider to adjust the bill.
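The duplicate billing catch is easy to picture in code. What follows is a minimal Python sketch, not the family’s or Claude’s actual method. The `BUNDLES` table, the procedure codes, and the dollar amounts are all made up for illustration; real bundling rules live in payer edit tables. The sketch flags component charges that show up on the same bill as the master procedure that already covers them.

```python
from dataclasses import dataclass

# Hypothetical bundle table: a "master" procedure code mapped to the
# component codes it already covers. These codes are invented.
BUNDLES = {
    "MASTER-001": {"COMP-A", "COMP-B", "COMP-C"},
}


@dataclass(frozen=True)
class LineItem:
    code: str
    description: str
    amount: float


def find_unbundled_charges(items):
    """Return line items that are components of a master procedure also on the bill."""
    billed_codes = {item.code for item in items}
    flagged = []
    for master, components in BUNDLES.items():
        if master in billed_codes:
            # The master is billed, so any of its components is a likely duplicate.
            flagged.extend(item for item in items if item.code in components)
    return flagged


# Made-up bill: one master procedure, two of its components, one unrelated charge.
bill = [
    LineItem("MASTER-001", "Master procedure", 60000.0),
    LineItem("COMP-A", "Component A", 40000.0),
    LineItem("COMP-B", "Component B", 35000.0),
    LineItem("ROOM-01", "Room charge", 5000.0),
]

flagged = find_unbundled_charges(bill)
disputed_total = sum(item.amount for item in flagged)

for item in flagged:
    print(f"Possible duplicate: {item.code} {item.description} ${item.amount:,.2f}")
print(f"Amount to dispute: ${disputed_total:,.2f}")
```

A check like this only works once the provider hands over the line item breakdown, which is why the jousting for detail came first.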

Why did the hospital make billing errors? Was it [a] intentional fraud programmed into the medical billing system; [b] an intentional chain of DRG codes tuned to bill as many items, actions, and services as possible within reason and applicable rules; or [c] a computer error? If you picked item c, you are correct. The write up says:

Once a satisfactory level of transparency was achieved (the hospital blamed ‘upgraded computers’), Claude AI stepped in and analyzed the standard charging codes that had been revealed.

Absolutely blame the problem on the technology people. Who issued the instructions to the technology people? Innocent MBAs and financial whiz kids who want to maximize their returns are not part of this story. Should they be? Of course not. Computer-related topics are for other people.

Stephen E Arnold, November 4, 2025

Starlink: Are You the Only Game in Town? Nope

October 23, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “SpaceX Disables More Than 2,000 Starlink Devices Used in Myanmar Scam Compounds.” Interesting from a quite narrow Musk-centric focus. I wonder if this is a PR play or the result of some cooperative government action. The write up says:

Lauren Dreyer, the vice-president of Starlink’s business operations, said in a post on X Tuesday night that the company “proactively identified and disabled over 2,500 Starlink Kits in the vicinity of suspected ‘scam centers’” in Myanmar. She cited the takedowns as an example of how the company takes action when it identifies a violation of its policies, “including working with law enforcement agencies around the world.”

The cyber outfit added:

Myanmar has recently experienced a handful of high-profile raids at scam compounds which have garnered headlines and resulted in the arrest, and in some cases release, of thousands of workers. A crackdown earlier this year at another center near Mandalay resulted in the rescue of 7,000 people. Nonetheless, construction is booming within the compounds around Mandalay, even after raids, Agence France-Presse reported last week. Following a China-led crackdown on scam hubs in the Kokang region in 2023, a Chinese court in September sentenced 11 members of the Ming crime family to death for running operations.

Thanks, Venice.ai. Good enough.

Just one Chinese crime family. Even more interesting.

I want to point out that the write up did not take a tiny extra step; for example, answer this question, “What will prevent the firms listed below from filling the Starlink void (if one actually exists)?” Here are some Starlink options. These may be more expensive, but some surplus cash is spun off from pig butchering, human trafficking, drug brokering, and money laundering. Here’s the list from my files. Remember, please, that I am a dinobaby in a hollow in rural Kentucky. Are my resources more comprehensive than a big cyber security firm’s?

- AST

- EchoStar

- Eutelsat

- HughesNet

- Inmarsat

- NBN Sky Muster

- SES S.A.

- Telstra

- Telesat

- Viasat

With access to money, cut outs, front companies, and compensated government officials, will a Starlink “action” make a substantive difference? Again this is a question not addressed in the original write up. Myanmar is just one country operating in gray zones where government controls are ineffective or do not exist.

Starlink seems to be a pivot point for the write up. What about Starlinks in other “countries” like Lao PDR? What about a Starlink customer carrying his or her Starlink into Cambodia? I wonder if some US cyber security firms keep up with current actions, not those with some dust on the end tables in the marketing living room.

Stephen E Arnold, October 23, 2025

Want to Catch the Attention of Bad Actors? Say, Easier Cross Chain Transactions

September 24, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I know from experience that most people don’t know about moving crypto in a way that makes deanonymization difficult. Commercial firms offer deanonymization services. Most of the well-known outfits’ technology delivers. Even some home-grown approaches are useful.

For a number of years, Telegram has been the go-to service for some Fancy Dancing related to obfuscating crypto transactions. However, Telegram has been slow on the trigger when it comes to smart software and to some of the new ideas percolating in the bubbling world of digital currency.

A good example of what’s ahead for traders, investors, and bad actors is described in “Simplifying Cross-Chain Transactions Using Intents.” Like most crypto thought, confusing lingo is a requirement. In this article, the word “intent” refers to having crypto currency in one form like USDC and getting 100 SOL or some other crypto. The idea is that one can have fiat currency in British pounds, walk up to a money exchange in Berlin, and convert the pounds to euros. One pays a service charge. Now in crypto land, the crypto has to move across a blockchain. Then to get the digital exchange to do the conversion, one pays a gas fee; that is, a transaction charge. Moving USDC across multiple chains is a hassle and the fees pile up.

The article “Simplifying Cross-Chain Transactions Using Intents” explains a brave new world. No more clunky Telegram smart contracts and bots. Now the transaction just happens. How difficult will the deanonymization process become? Speed makes life difficult. Moving across chains makes life difficult. It appears that “intents” will be a capability of considerable interest to entities interested in making crypto transactions difficult to deanonymize.

The write up says:

In technical terms, intents are signed messages that express a user’s desired outcome without specifying execution details. Instead of crafting complex transaction sequences yourself, you broadcast your intent to a network of solvers (sophisticated actors) who then compete to fulfill your request.

The write up explains the benefit for the average crypto trader:

when you broadcast an intent, multiple solvers analyze it and submit competing quotes. They might route through different DEXs, use off-chain liquidity, or even batch your intent with others for better pricing. The best solution wins.

Now, think of solvers as your personal trading assistants who understand every connected protocol, every liquidity source, and every optimization trick in DeFi. They make money by providing better execution than you could achieve yourself and saves you a lot of time.

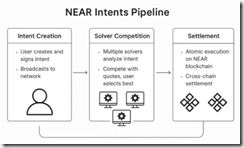

Does this sound like a use case for smart software? It is, but the approach is less complicated than what one must implement using other approaches. Here’s a schematic of what happens in the intent pipeline:

The secret sauce for the approach is what is called a “1Click API.” The API handles the plumbing for the crypto bridging or crypto conversion from currency A to currency B.
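The intent idea quoted above (a signed statement of the desired outcome, solvers competing with quotes, best quote wins) can be sketched in a few lines of Python. This is an illustration under loud assumptions, not the cited article’s code and not the 1Click API, which I do not reproduce here. The `Intent` and `Quote` classes, the solver names, and the amounts are hypothetical, and an HMAC with a shared secret stands in for the public-key signatures a real system would presumably use.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Intent:
    """What the user wants, with no execution details (hypothetical fields)."""
    user: str
    sell_asset: str
    sell_amount: float
    buy_asset: str
    min_buy_amount: float

    def payload(self) -> bytes:
        # Canonical byte form so signer and verifier hash the same thing.
        return json.dumps(asdict(self), sort_keys=True).encode()


def sign_intent(intent: Intent, secret: bytes) -> str:
    # Assumption: HMAC-SHA256 as a stand-in for a real wallet signature.
    return hmac.new(secret, intent.payload(), hashlib.sha256).hexdigest()


def verify_intent(intent: Intent, signature: str, secret: bytes) -> bool:
    return hmac.compare_digest(sign_intent(intent, secret), signature)


@dataclass(frozen=True)
class Quote:
    solver: str
    buy_amount: float  # what the user would receive after the solver's fees


def pick_best_quote(intent: Intent, quotes):
    """Keep quotes that meet the user's minimum, then take the largest payout."""
    valid = [q for q in quotes if q.buy_amount >= intent.min_buy_amount]
    return max(valid, key=lambda q: q.buy_amount) if valid else None


SECRET = b"demo-only-secret"  # hypothetical key for this sketch
intent = Intent("alice", "USDC", 1000.0, "SOL", 4.9)
signature = sign_intent(intent, SECRET)

# Made-up quotes from three hypothetical solvers.
quotes = [Quote("solver-a", 4.95), Quote("solver-b", 5.02), Quote("solver-c", 4.80)]

if verify_intent(intent, signature, SECRET):
    best = pick_best_quote(intent, quotes)
    print(f"Winning solver: {best.solver} at {best.buy_amount} {intent.buy_asset}")
```

Notice what is absent: the user never names a chain, a bridge, or an exchange. That missing routing detail is exactly what makes the approach convenient for traders and a headache for anyone trying to follow the money.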

If you are interested in how this system works, the cited article provides a list of nine links. Each provides additional detail. To be up front, some of the write ups are more useful than others. But three things are clear:

- Deobfuscation is likely to become more time consuming and costly.

- The system described could be implemented within the Telegram blockchain system as well as other crypto conversion operations.

- The described approach can be further abstracted into an app with more overt smart software enablements.

My thought is that money launderers are likely to be among the first to explore this approach.

Stephen E Arnold, September 24, 2025

Pavel Durov Was Arrested for Online Stubbornness: Will This Happen in the US?

September 23, 2025

Written by an unteachable dinobaby. Live with it.

In August 2024, the French judiciary arrested Pavel Durov, the founder of VKontakte and then Telegram, a robust but non-AI platform. Why? The French government identified more than a dozen transgressions by Pavel Durov, who holds French citizenship as a special tech bro. Now he has to report to his French mom every two weeks or experience more interesting French legal action. Is this an example of a failure to communicate?

Will the US take similar steps toward US companies? I raise the question because I read an allegedly accurate “real” news write up called “Anthropic Irks White House with Limits on Models’ Use.” (Like many useful online resources, this story requires the curious to subscribe, pay, and get on a marketing list.) These “models,” of course, are the zeros and ones which comprise the next big thing in technology: artificial intelligence.

The write up states:

Anthropic is in the midst of a splashy media tour in Washington, but its refusal to allow its models to be used for some law enforcement purposes has deepened hostility to the company inside the Trump administration…

The write up says as actual factual:

Anthropic recently declined requests by contractors working with federal law enforcement agencies because the company refuses to make an exception allowing its AI tools to be used for some tasks, including surveillance of US citizens…

I found the write up interesting. If France can take action against an upstanding citizen like Pavel Durov, what about the tech folks at Anthropic or other outfits? These firms allegedly have useful data and the tools to answer questions. I recently fed the output of one AI system (ChatGPT) into another AI system (Perplexity), and I learned that Perplexity did a good job of identifying the weirdness in the ChatGPT output. Would these systems provide similar insights into prompt patterns on certain topics; for instance, the charges against Pavel Durov or data obtained by people looking for information about nuclear fuel cask shipments?

With France’s action, is the door open to take direct action against people and their organizations which cooperate reluctantly or not at all when a government official makes a request?

I don’t have an answer. Dinobabies rarely do, and if they do have a response, no one pays attention to these beasties. However, some of those wizards at AI outfits might want to ponder the question about cooperation with a government request.

Stephen E Arnold, September 24, 2025