Smart Software Exploits Direct Tuition Payment. Sure, the Fraud Is Automated

April 22, 2025

No AI, just the dinobaby himself.

The Voice of San Diego published “As Bot Students Continue to Flood In, Community Colleges Struggle to Respond.” The write up is one of those recipes that “real” news outfits provide to inform their readers about a crime. When I worked through the article, my reaction was, “The process California follows for community college student assistance is a big juicy sandwich on a picnic table in the park on a warm summer day.”

Will the insects flock to the sandwich?

Absolutely. Plus, telling the insects where the sandwich is and the basics of getting their mandibles on that sandwich does one thing: It provides an easy-to-follow set of instructions for bad actors.

The write up says:

Kevin Alston, a business professor who has taught at Southwestern for nearly 20 years, has stumbled across even more troubling incidents. During a prior semester, he actually called some of the students who were enrolled in his classes but had not submitted any classwork. “One student said ‘I’m not in your class. I’m not even in the state of California anymore’” Alston recalled. The student told him they had been enrolled in his class two years ago but had since moved on to a four-year university out of state. “I said, ‘Oh, then the robots have grabbed your student ID and your name and re-enrolled you at Southwestern College. Now they’re collecting financial aid under your name,’” Alston said.

The opportunity for fraud is a result of certain rules and regulations that require that financial aid be paid directly to the “student.” Enroll as a fake student and get a chunk of money. The more fake students that apply and receive aid, the more money the bad actors behind them collect.
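For those who want something more systematic than a professor phoning no-show students, here is a minimal Python sketch of the pattern Alston stumbled across: a student ID dormant for a long stretch, suddenly re-enrolled, no classwork submitted, aid already paid. The record fields and the four-term dormancy threshold (roughly two years of semesters) are my assumptions for illustration, not a method any California college is known to use.

```python
from dataclasses import dataclass

# Hypothetical enrollment record; the field names are illustrative only.
@dataclass
class Enrollment:
    student_id: str
    terms_since_last_enrollment: int  # gap before this re-enrollment
    submissions: int                  # coursework items turned in this term
    aid_disbursed: bool               # financial aid already paid to the "student"

def flag_suspicious(records, dormant_terms=4):
    """Return IDs matching the pattern in the article: a long-dormant
    student ID re-enrolled, no classwork submitted, aid already paid."""
    flagged = []
    for r in records:
        dormant = r.terms_since_last_enrollment >= dormant_terms
        no_work = r.submissions == 0
        if dormant and no_work and r.aid_disbursed:
            flagged.append(r.student_id)
    return flagged

records = [
    Enrollment("A100", 0, 7, True),   # active student doing the work
    Enrollment("B200", 4, 0, True),   # dormant two years, no work, paid
    Enrollment("C300", 5, 0, False),  # dormant, no work, no aid yet
]
print(flag_suspicious(records))  # ['B200']
```

A check like this shifts the first pass away from the instructor; a human still has to confirm each flag before anyone is accused of anything.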

California appears to be taking steps to reduce the fraud.

Several observations:

- A basket of rules and regulations appears to create this fraud opportunity

- Smart software in the hands of clever individuals allows the bad actors to collect money. (I am not sure how one cashes multiple checks made out to a fake person, but obviously there are ways around this problem. Are those nifty automatic teller machine deposits an issue?)

- The problem, according to the write up, has been known since 2021 and has grown since then.

I must admit that I think about online fraud in the hands of pig butchering outfits in the Golden Triangle. The fake student scam sounds like a smaller scale operation. Making a teacher the one who must identify the fake student does not seem to be working.

Okay, let’s see what the great state of California does to resolve this problem. Perhaps the instructors need to attend online classes in fraud detection, apply for financial aid, and get an extra benefit for this non-teaching work? Will community college teachers make good cyber investigators? Sure, especially those teaching history, social science, and literature classes.

Stephen E Arnold, April 22, 2025

The French Are Going After Enablers: Other Countries May Follow

April 16, 2025

Another post by the dinobaby. Judging by the number of machine-generated images of young female “entities” I receive, this 80-year-old must be quite fetching to some scammers with AI. Who knew?

Energized by the French judiciary’s ability to reason with Pavel Durov, the Paris Judicial Tribunal is going after what I call “enablers.” The term applies to the legitimate companies which make their online services available to customers. With the popularity of self-managed virtual machines, the online services firms receive an online order, collect a credit card number, validate it, and let the remote customer set up and manage a computing resource.

Hey, the approach is popular and does not require expensive online service technical staff to do the handholding. Just collect the money and move forward. I am not sure the Paris Judicial Tribunal is interested in virtual anything. According to “French Court Orders Cloudflare to ‘Dynamically’ Block MotoGP Streaming Piracy”:

In the seemingly endless game of online piracy whack-a-mole, a French court has ordered Cloudflare to block several sites illegally streaming MotoGP. The ruling is an escalation of French blocking measures that began increasing their scope beyond traditional ISPs in the last few months of 2024. Obtained by MotoGP rightsholder Canal+, the order applies to all Cloudflare services, including DNS, and can be updated with ‘future’ domains.

The write up explains:

The reasoning behind the blocking request is similar to a previous blocking order, which also targeted OpenDNS and Google DNS. It is grounded in Article L. 333-10 of the French Sports Code, which empowers rightsholders to seek court orders against any outfit that can help to stop ‘serious and repeated’ sports piracy. This time, SECP’s demands are broader than DNS blocking alone. The rightsholder also requested blocking measures across Cloudflare’s other services, including its CDN and proxy services.

The approach taken by the French provides a framework which other countries can use to crack down on what seem to be legal online services. Many of these outfits expose one face to the public and regulators. Like the fictional Dr. Jekyll and Mr. Hyde, these online service firms make it possible for bad actors to provide a number of services to a special clientele; for example:

- Providing outlets for hate speech

- Hosting all or part of a Dark Web eCommerce site

- Allowing “roulette wheel” DNS changes for streaming sites distributing sports events

- Enabling services used by encrypted messaging companies whose clientele engages in illegal activity

- Hosting images of a controversial nature

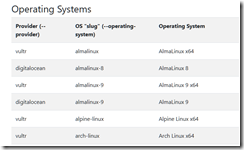

How can this be? Today’s technology makes it possible for an individual to do a search for a DMCA ignored advertisement for a service provider. Then one locates the provider’s Web site. Using a stolen credit card and the card owner’s identity, the bad actor signs up for a service from these providers:

This is a partial list of Dark Web hosting services compiled by SporeStack. Do you recognize the vendors Digital Ocean or Vultr? I recognized one.

These providers offer virtual machines and an API for interaction. With a bit of effort, the online providers have set up a vendor-customer experience that allows the online provider to say, “We don’t know what customer X is doing.” A cyber investigator has to poke around hunting for the “service” identified in the warrant in the hopes that the “service” will not be “gone.”

My view is that the French court may be ready to make life a bit less comfortable for some online service providers. The cited article asserts:

… the blockades may not stop at the 14 domain names mentioned in the original complaint. The ‘dynamic’ order allows SECP to request additional blockades from Cloudflare, if future pirate sites are flagged by French media regulator, ARCOM. Refusal to comply could see Cloudflare incur a €5,000 daily fine per site. “[Cloudflare is ordered to implement] all measures likely to prevent, until the date of the last race in the MotoGP season 2025, currently set for November 16, 2025, access to the sites identified above, as well as to sites not yet identified at the date of the present decision,” the order reads.
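The mechanics of a “dynamic” order are easy to sketch. Below is a hypothetical Python illustration: a blocklist that can gain domains after the decision, matches subdomains, lapses after the November 16, 2025 end date quoted above, and tallies worst-case exposure at the €5,000 per site per day figure from the article. The domain names are placeholders, and this is not how Cloudflare actually implements blocking.

```python
from datetime import date

class DynamicBlocklist:
    """Sketch of a court-ordered blocklist that can grow after the order is issued."""

    def __init__(self, domains, expires):
        self.domains = {d.lower().rstrip(".") for d in domains}
        self.expires = expires  # last day the order applies

    def add(self, domain):
        # "Future" domains flagged after the original decision.
        self.domains.add(domain.lower().rstrip("."))

    def is_blocked(self, hostname, on):
        if on > self.expires:
            return False
        labels = hostname.lower().rstrip(".").split(".")
        # Match a listed domain itself or any subdomain of it.
        return any(".".join(labels[i:]) in self.domains for i in range(len(labels)))

def fine_exposure(sites, days, per_site_per_day=5000):
    """Worst-case penalty in euros if every listed site stays reachable."""
    return sites * days * per_site_per_day

order = DynamicBlocklist({"pirate-stream.example"}, expires=date(2025, 11, 16))
order.add("mirror-2.example")
print(order.is_blocked("live.mirror-2.example", date(2025, 6, 1)))    # True
print(order.is_blocked("live.mirror-2.example", date(2025, 11, 17)))  # False
print(fine_exposure(sites=14, days=10))  # 700000
```

The arithmetic is the point: 14 sites left reachable for 10 days would, on these assumptions, add up to €700,000.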

The US has a proposed site blocking bill as well.

But the French may continue to push forward using the “Pavel Durov action” as evidence that sitting on one’s hands and worrying about international repercussions is a waste of time. If companies like Amazon and Google operate in France, the French could begin tire kicking in the hopes of finding a bad wheel.

Mr. Durov believed he was not going to have a problem in France. He is a French citizen. He had the big time Kaminski firm represent him. He has lots of money. He has 114 children. What could go wrong? For starters, the French experience convinced him to begin cooperating with law enforcement requests.

Now France is getting some first hand experience with the enablers. Those who dismiss France as a land with too many different types of cheese may want to spend a few moments reading about French methods. Only one nation has some special French judicial savoir faire.

Stephen E Arnold, April 16, 2025

Passwords: Reuse Pumps Up Crime

April 8, 2025

Cloudflare reports that password reuse is one of the biggest mistakes users make that compromises their personal information online. Cloudflare monitored traffic through their services between September and November 2024 and discovered that 41% of successful logins to Cloudflare-protected Web sites used compromised passwords. Cloudflare discussed this vulnerability in the blog post: “Password Reuse Is Rampant: Nearly Half Of Observed User Logins Are Compromised.”

As part of their services, Cloudflare monitors whether passwords have been leaked in any known data breaches and then warns users of the potential threat. Cloudflare analyzed traffic from Internet properties on the company’s free plan that includes the leaked credentials feature.
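The write up does not explain how such a check works under the hood. One widely documented way to test a password against breach data without disclosing it is the k-anonymity range query used by the Pwned Passwords service: the client hashes the password with SHA-1, sends only the first five hex characters, and compares the returned suffixes locally. The sketch below simulates both sides in memory; the three-password corpus is hypothetical, and I am not presenting this as Cloudflare’s production method.

```python
import hashlib

def sha1_hex(password):
    return hashlib.sha1(password.encode("utf-8")).hexdigest().upper()

# Hypothetical breach corpus, stored as uppercase SHA-1 hex digests.
BREACHED = {sha1_hex(p) for p in ["password123", "letmein", "qwerty"]}

def range_query(prefix):
    """Server side: return only the hash suffixes that share the 5-char prefix."""
    return {h[5:] for h in BREACHED if h.startswith(prefix)}

def is_leaked(password):
    """Client side: send the prefix, compare the suffix locally."""
    digest = sha1_hex(password)
    prefix, suffix = digest[:5], digest[5:]
    return suffix in range_query(prefix)

print(is_leaked("letmein"))                  # True
print(is_leaked("correct horse battery 9"))  # False
```

The server never sees the full hash, only a prefix shared by many unrelated passwords.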

When Cloudflare conducted this research, the biggest challenge was distinguishing between real humans and bad actors. They focused on successful login attempts, because a successful login suggests a real human was involved. The data revealed that 41% of human authentication attempts involved leaked credentials. Despite warning PSAs about reusing old passwords, users haven’t changed their ways.

Bot attacks are also on the rise. These bots are programmed with stolen passwords and credentials and are told to test them on targeted Web sites.

Here’s what Cloudflare found:

“Data from the Cloudflare network exposes this trend, showing that bot-driven attacks remain alarmingly high over time. Popular platforms like WordPress, Joomla, and Drupal are frequent targets, due to their widespread use and exploitable vulnerabilities, as we will explore in the upcoming section.

Once bots successfully breach one account, attackers reuse the same credentials across other services to amplify their reach. They even sometimes try to evade detection by using sophisticated evasion tactics, such as spreading login attempts across different source IP addresses or mimicking human behavior, attempting to blend into legitimate traffic. The result is a constant, automated threat vector that challenges traditional security measures and exploits the weakest link: password reuse.”
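The evasion trick in that passage, spreading attempts across many source IP addresses, is why counting failures per IP falls short. A minimal sketch, assuming a hypothetical log of (source IP, username, success) tuples for one time window, aggregates failures per account instead. The thresholds are arbitrary assumptions, and this is not Cloudflare’s detection logic.

```python
from collections import defaultdict

def flag_spread_attacks(attempts, min_failures=5, min_ips=5):
    """attempts: iterable of (source_ip, username, succeeded) within one time window.

    A per-IP counter misses attacks spread across many addresses, so this
    sketch aggregates failures per username and counts distinct source IPs.
    """
    failures_by_user = defaultdict(int)
    ips_by_user = defaultdict(set)
    for ip, user, ok in attempts:
        if not ok:
            failures_by_user[user] += 1
            ips_by_user[user].add(ip)
    return sorted(
        user for user, n in failures_by_user.items()
        if n >= min_failures and len(ips_by_user[user]) >= min_ips
    )

# Hypothetical window: one failed try each from six addresses against "alice".
window = [(f"203.0.113.{i}", "alice", False) for i in range(1, 7)]
window += [("198.51.100.7", "bob", False), ("198.51.100.7", "bob", True)]
print(flag_spread_attacks(window))  # ['alice']
```

No single address tried “alice” more than once, so a per-IP limit would stay silent; grouping by account exposes the pattern.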

Cloudflare advises people to have multi-factor authentication on accounts, explore using passkeys, and for God’s sake please change your password. I have heard that Telegram’s technology enables some capable bots. Does Telegram rely on Cloudflare for some services? Huh.

Whitney Grace, April 8, 2025

Telegram Lecture at TechnoSecurity & Digital Forensics on June 4, 2025

April 3, 2025

No AI. Just a dinobaby sharing an observation about younger managers and their innocence.

The organizers of the June 2025 TechnoSecurity & Digital Forensics Conference posted a 60-second overview of our Telegram Overview lecture on LinkedIn. You can view the conference’s 60-second video at https://lnkd.in/eTSvpYFb. Erik and I have been doing presentations on specific Telegram subjects for law enforcement groups. Two weeks ago, we provided the Massachusetts Association of Crime Analysts with a 60-minute rundown of the technical architecture of Telegram and identified three US companies providing services to Telegram. To discuss a presentation for your unit, please message me via LinkedIn. (Plus, my son and I are working to complete our 100-page PDF notes of our examination of Telegram’s more interesting features. These range from bots which automate cross-blockchain crypto movement to the automatic throttling function in the Telegram TON Virtual Machine to prevent transaction bottlenecks in complex crypto wallet obfuscations.) See you there. Thank you, Stephen E Arnold, April 3, 2025, 2:23 pm US Eastern

No Joke: Real Secrecy and Paranoia Are Needed Again

April 1, 2025

No AI. Just a dinobaby sharing an observation about younger managers and their innocence.

In the US and the UK, secrecy and paranoia are chic again. The BBC reported “GCHQ Worker Admits Taking Top Secret Data Home.” Ah, a Booz Allen / Snowden type story? The BBC reports:

The court heard that Arshad took his work mobile into a top secret GCHQ area and connected it to work station. He then transferred sensitive data from a secure, top secret computer to the phone before taking it home, it was claimed. Arshad then transferred the data from the phone to a hard drive connected to his personal home computer.

Mr. Snowden used a USB drive. The question is, “What are the bosses doing? Who is watching the logs? Who is checking the video feeds? Who is hiring individuals with some inner need to steal classified information?”
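“Who is watching the logs?” need not be a rhetorical question. Here is a minimal sketch, assuming a hypothetical log of device-attach events tagged with each workstation’s security zone and a hypothetical allowlist of approved devices, that surfaces the kind of event the BBC account describes: a phone attached to a top secret workstation. Real endpoint control products do far more; the point is only that the basic check is cheap.

```python
from dataclasses import dataclass

@dataclass
class DeviceEvent:
    workstation: str
    zone: str        # e.g. "top_secret" or "general"
    device_id: str   # identifier reported by the host for the attached device

APPROVED = {"KVM-0001"}  # hypothetical allowlist for secure zones

def review(events, secure_zones=frozenset({"top_secret"})):
    """Return events where an unapproved device was attached in a secure zone."""
    return [e for e in events if e.zone in secure_zones and e.device_id not in APPROVED]

log = [
    DeviceEvent("ws-17", "top_secret", "KVM-0001"),
    DeviceEvent("ws-17", "top_secret", "PHONE-A1B2"),
    DeviceEvent("ws-02", "general", "PHONE-C3D4"),
]
for alert in review(log):
    print(alert.workstation, alert.device_id)  # ws-17 PHONE-A1B2
```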

But outside phones in a top secret meeting? That sounds like a great idea. I attended a meeting held by a local government agency, and phones and weapons were put in little steel boxes. This outfit was no GCHQ, but the security fellow (a former Marine) knew what he was doing for that local government agency.

A related story addresses paranoia, a mental characteristic which is getting more and more popular among some big dogs.

CNBC reported an interesting approach to staff trust. “Anthropic Announces Updates on Security Safeguards for Its AI Models” reports:

In an earlier version of its responsible scaling policy, Anthropic said it would begin sweeping physical offices for hidden devices as part of a ramped-up security effort.

The most recent update to the firm’s security safeguards adds:

updates to the “responsible scaling” policy for its AI, including defining which of its model safety levels are powerful enough to need additional security safeguards.

The actual explanation is a masterpiece of clarity. Here’s a snippet of what Anthropic actually said in its “Anthropic’s Responsible Scaling Policy” announcement:

The current iteration of our RSP (version 2.1) reflects minor updates clarifying which Capability Thresholds would require enhanced safeguards beyond our current ASL-3 standards.

The Anthropic methods, it seems to me, include “sweeps” and “compartmentalization.”

Thus, we have two examples of outstanding management:

First, the BBC report implies that personal computing devices can plug in and receive classified information.

And:

Second, CNBC explains that sweeps are not enough. Compartmentalization of systems and methods puts who can do what, and how, into “cells.”
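Compartmentalization has a textbook form. In the classic multilevel security model (the Bell-LaPadula dominance rule), reading a document requires both a clearance at or above its level and membership in every compartment the document carries. A minimal Python sketch follows; the level names and the “model-weights” and “evals” compartments are hypothetical, and nothing here describes Anthropic’s actual controls.

```python
LEVELS = {"unclassified": 0, "secret": 1, "top_secret": 2}

def can_read(user_level, user_compartments, doc_level, doc_compartments):
    """Read access needs enough clearance AND membership in every compartment
    the document is tagged with (need to know)."""
    return (LEVELS[user_level] >= LEVELS[doc_level]
            and set(doc_compartments) <= set(user_compartments))

# A top secret analyst outside the "model-weights" cell is still refused.
print(can_read("top_secret", {"evals"}, "secret", {"model-weights"}))      # False
print(can_read("secret", {"model-weights"}, "secret", {"model-weights"}))  # True
```

A high clearance alone does not open the door; the cell membership does.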

Andy Grove’s observation popped into my mind. He allegedly rattled off this statement:

Success breeds complacency. Complacency breeds failure. Only the paranoid survive.

Net net: In cyber security, it is easier to “trust” and “assume.” Real fixes edge into fear and paranoia.

Stephen E Arnold, April 9, 2025

FOGINT: Targets Draw Attention. Signal Is a Target

April 1, 2025

Dinobaby says, “No smart software involved. That’s for ‘real’ journalists and pundits.”

We have been plugging away on the “Telegram Overview: Notes for Analysts and Investigators.” We have not exactly ignored Signal or the dozens of other super secret, encrypted beyond belief messaging applications. We did compile a table of those we came across, and Signal was on that list.

I read “NSA Warned of Vulnerabilities in Signal App a Month Before Houthi Strike Chat.” I am not interested in the political facets of this incident. The important point for me is this statement:

The National Security Agency sent out an operational security special bulletin to its employees in February 2025 warning them of vulnerabilities in using the encrypted messaging application Signal

One of the big time cyber security companies spoke with me, and I mentioned that Signal might not be the cat’s pajamas. To the credit of that company and the former police chief with whom I spoke, the firm shifted to an end to end encrypted messaging app we had identified as slightly less wonky. Good for that company, and a pat on the back for the police chief who listened to me.

In my experience, operational bulletins are worth reading. When the bulletin is “special,” re-reading the message is generally helpful.

Signal, of course, defends itself vigorously. The coach who loses a basketball game says, “Our players put out a great effort. It just wasn’t enough.”

In this world, presenting oneself as a super secret messaging app immediately makes that app a target. I know first hand that some whiz kid entrepreneurs believe that their E2EE solution is the best one ever. In fact, a year ago, such an entrepreneur told me, “We have developed a method that only a government agency can compromise.”

Yeah, that’s the point of the NSA bulletin.

Let me ask you a question: “How many computer science students in countries outside the United States are looking at E2EE messaging apps and trying to figure out how to compromise the data?” Years ago, I gave some lectures in Tallinn, Estonia. I visited a university computer science class and asked the students about the projects each had selected. Several of them told me that they were trying to compromise messaging systems. A favorite target was Telegram, but Signal came up too.

I know the wizards who cook up E2EE messaging apps and use the latest and greatest methods for delivering security with bells on are fooling themselves. Here are the reasons:

- Systems relying on open source methods are well documented. Exploits exist and we have noticed some CaaS offers to compromise these messages. Now the methods may be illegal in many countries, but they exist. (I won’t provide a checklist in a free blog post. Sorry.)

- Techniques to prevent compromise of secure messaging systems involve some patented systems and methods. Yes, the patents are publicly available, but the methods are simply not possible unless one has considerable resources for software, hardware, and deployment.

- A number of organizations turn E2EE messaging systems into happy eunuchs taking care of the sultan’s harem. I have poked fun at the blunders of the NSO Group and its Pegasus approach, and I have pointed out that the goodies of the Hacking Team escaped into the wild a long time ago. The point is that once the procedures for performing certain types of compromise are no longer secret, other humans can and will create a facsimile and use those emulations to suck down private messages, the metadata, and probably the pictures on the device too. Toss in some AI jazziness, and the process goes faster than my old 1962 Studebaker Lark.

Let me wrap up by reiterating that I am not addressing the incident involving Signal. I want to point out that I am not into the “information wants to be free” philosophy. Certain information is best managed when it is secret. Outfits like Signal and the dozens of other E2EE messaging apps are targets. Targets get hit. Why put neon lights on oneself and try to hide the fact that those young computer science students or their future employers will find a way to compromise the information?

Technical stealth, network fiddling, human bumbling: Compromises will continue to occur. There were good reasons to enforce security. That’s why stringent procedures and hardened systems were developed. Today it’s marketing, and the possibility that non open source, non American methods do not deliver what the 23-year-old art history major with a job in marketing says they deliver.

Stephen E Arnold, April 1, 2025

FOGINT: Dubai Makes a Crypto Move

March 26, 2025

Cryptocurrencies are on deck to replace fiat currencies. The Dubai Financial Services Authority (DFSA) recently recognized two cryptocurrencies, says Gadgets 360: “USDC, EURC Stablecoins Secure ‘Token Recognition’ In Dubai.” The two newly recognized tokens are the stablecoins USDC and EURC from Circle.

The DFSA approved the use of these stablecoins within the Dubai International Financial Centre’s (DIFC) economic activities. EURC and USDC are the first crypto stablecoins to receive official recognition from the DFSA. Stablecoins are cryptocurrencies backed by traditional assets such as gold and regular hard currencies.

The DFSA issued a crypto token framework in 2022 so businesses working with cryptocurrencies would have safe guidelines. Only DFSA-recognized cryptocurrencies are allowed to be used within the DIFC. This is to ensure companies are protected from scams.

This is an important move for stablecoins:

Dante Disparte, Chief Strategy Officer and Head of Global Policy and Operations at Circle called the development a ‘milestone’ moment for the stablecoin sector. ‘This milestone aligns with our mission to make digital dollars and euros more accessible, interoperable, and useful for businesses, developers, and financial institutions worldwide,’ Dante said. ‘As the first stablecoins to receive this designation, USDC and EURC continue to set the global standard for transparency, compliance, and utility.’”

Circle is the second largest provider of stablecoins in the world after Tether. The company reported that USDC has processed $18 trillion in transactions since launching in 2018. Dubai, Telegram, and crypto: Interesting ingredients.

Whitney Grace, March 18, 2025

Bankman-Fried and Cooled

March 20, 2025

We are not surprised a certain tech bro still has not learned to play by the rules, even in prison. Mediaite reports, "Unauthorized Tucker Carlson Interview Lands Sam Bankman-Fried in Solitary Confinement." Reporter Kipp Jones tells us:

"FTX founder Sam Bankman-Fried was reportedly placed in solitary confinement on Thursday following a video interview with Tucker Carlson that was not approved by corrections officials. The 33-year-old crypto billionaire-turned-inmate spoke to Carlson about a wide range of topics for an interview posted on X. Bankman-Fried and the former Fox News host discussed everything from prescription drug abuse to political contributions. According to The New York Times, prison officials became aware of the interview and put the crypto fraudster in the hole."

What riveting insights were worth that risk? Apparently he has made friends with Diddy, and he passes the time playing chess. That’s nice. He also holds no animosity toward prison staff, he said, though of course "no one wants to be in prison." Perhaps during his stint in solitary, Bankman-Fried will reflect on how he can stay out when he is released in 11 – 24 years.

Cynthia Murrell, March 20, 2025

AI Hiring Spoofs: A How To

March 12, 2025

Be aware. A dinobaby wrote this essay. No smart software involved.

The late Robert Steele, one of the first government professionals to hop on the open source information bandwagon, and I worked together for many years. In one of our conversations in the 1980s, Robert explained how he used a fake persona to recruit people to assist him in his work on a US government project. He explained that job interviews were an outstanding source of information about a company or an organization.

“AI Fakers Exposed in Tech Dev Recruitment: Postmortem” is a modern spin on Robert’s approach. Instead of newspaper ads and telephone calls, today’s approach uses AI and video conferencing. The article presents a recipe for a technique that was not widely discussed in the 1980s. Robert learned his approach from colleagues in the US government.

The write up explains that a company wants to hire a professional. Everything hums along and then:

…you discover that two imposters hiding behind deepfake avatars almost succeeded in tricking your startup into hiring them. This may sound like the stuff of fiction, but it really did happen to a startup called Vidoc Security, recently. Fortunately, they caught the AI impostors – and the second time it happened they got video evidence.

The cited article explains how to set up and operate this type of deepfake play. I am not going to present the “how to” in this blog post. If you want the details, head to the original. The penetration tactic requires Microsoft LinkedIn, which gives that platform another use case for certain individuals gathering intelligence.

Several observations:

- Keep in mind that the method works for fake employers looking for “real” employees in order to obtain information from job candidates. (Some candidates are blissfully unaware that the job is a front for obtaining data about an alleged former employer.)

- The best way to avoid AI-centric scams is to do the work the old-fashioned way. Smart software opens up a wealth of opportunities to obtain allegedly actionable information. Unfortunately, the old-fashioned way is slow, expensive, and prone to social engineering tactics.

- As bad actors take advantage of the increased capabilities of smart software, humans who are not actively involved with AI capabilities do not adapt quickly. Personnel related matters are a pain point for many organizations.

To sum up, AI is a tool. It can be used in interesting ways. Is the contractor you hired on Fiverr or via some online service a real person? Is the job a real job or a way to obtain information via an AI that is a wonderful conversationalist? One final point: The target referenced in the write up was a cyber security outfit. Did the early alert, proactive, AI infused system prevent penetration?

Nope.

Stephen E Arnold, March 12, 2025

Dear New York Times, Your Online System Does Not Work

March 3, 2025

The work of a real, live dinobaby. Sorry, no smart software involved. Whuff, whuff. That’s the sound of my swishing dino tail. Whuff.

I gave up on the print edition of the New York Times because the delivery was terrible. I did not buy the online version because I could get individual articles via the local library. I received a somewhat desperate email last week. The message was, “Subscribe for $4 per month for two years.” I thought, “Yeah, okay. How bad could it be?”

Let me tell you it was bad, very bad.

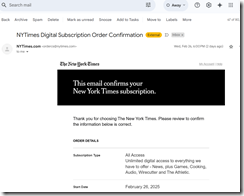

I signed up, spit out my credit card and received this in my email:

The subscription was confirmed on February 26, 2025. I tried to log in on the 27th. The system said, “Click here to receive an access code.” I did. In fact I did the click for the code three times. No code on the 27th.

Today is the 28th. I tried again. I entered my email and saw the click here for the access code. No code. I clicked four times. No code sent.

Dispirited, I called the customer service number. I spoke to two people. Both professionals told me they were sending the codes to my email. No codes arrived.

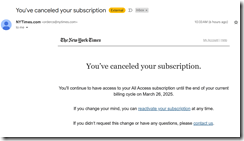

Guess what? I gave up and cancelled my subscription. I learned that I had to pay $4 for the privilege of being told my email was not working.

That was baloney. How do I know? Look at this screenshot:

The estimable newspaper was able to send me a notice that I cancelled.

How screwed up is the New York Times’ customer service? Answer: A lot. Two different support professionals told me I was not logged into my email. Therefore, I was not receiving the codes.

How screwed up are the computer systems at the New York Times? Answer: A lot, no, a whole lot.

I don’t think anyone at the New York Times knows about this issue. I don’t think anyone cares. I wonder how many people like me tried to buy a subscription and found that cancellation was the only viable option to escape automated billing for a service the buyer could not access.

Is this intentional cyber fraud? Probably not. I think it is indicative of poor management, cost cutting, and information technology that is just good enough. By the way, how can you send a confirmation and a cancellation to my email and NOT send me the access code? Answer: Ineptitude in action.

Well, hasta la vista.

Stephen E Arnold, March 3, 2025