Hauling Data: Is There a Chance of Derailment?

February 13, 2025

Another dinobaby write up. Only smart software is the lousy train illustration.

I spotted some chatter about US government Web sites going off line. Since I stepped away from the “index the US government” project, I don’t spend much time poking around the content at dot gov and in some cases dot com sites operated by the US government. Let’s assume that some US government servers are now blocked and the content has gone dark to a user looking for information generated by US government entities.

If libraries chug chug down the information railroad tracks to deliver data, what does the “Trouble on the Tracks” sign mean? Thanks, You.com. Good enough.

The fix in most cases is to use Bing.com. My recollection is that a third party like Bing provided the search service to the US government. A good alternative is to use Google.com, the qualifier site: command, and a bit of obscenity. The obscenity causes the Google AI to just generate a semi relevant list of links. In a pinch, you could poke around for a repository of US government information. Unfortunately the Library of Congress is not that repository. The Government Printing Office does not do the job either. The Internet Archive is a hit-and-miss archive operation.

Is there another alternative? Yes. Harvard University announced its Data.gov archive. The institution’s Library Innovation Lab Team said on February 6, 2025:

Today we released our archive of data.gov on Source Cooperative. The 16TB collection includes over 311,000 datasets harvested during 2024 and 2025, a complete archive of federal public datasets linked by data.gov. It will be updated daily as new datasets are added to data.gov.

I like this type of archive, but I am a dinobaby, not a forward leaning, “with it” thinker. Information in my mind belongs in a library. A library, in general, should provide students and those seeking information with a place to go to obtain information. The only advertising I see in a library is an announcement about a bake sale to raise funds for children’s reading material.

Will the Harvard initiative and others like it collide with something on the train tracks? Will the money to buy fuel for the engine’s power plant be cut off? Will the train drivers be forced to find work at Shake Shack?

I have no answers. I am glad I am old, but I fondly remember when the job was to index the content on US government servers. The quaint idea formulated by President Clinton was to make US government information available. Now one has to catch a train.

Stephen E Arnold, February 13, 2025

A Better Database of SEC Filings?

January 2, 2025

DocDelta is a new database that says it is “revolutionizing investment research by harnessing the power of AI to decode complex financial documents at scale.” In plain speak, that means it’s an AI-powered platform that analyzes financial documents. The AI studies terabytes of SEC filings, earnings calls, and market data to reveal insights.

DocDelta wants its users to have an edge that other investors are missing. The DocDelta team explains that advanced language analysis combined with financial expertise tracks subtle changes and locates patterns. The platform includes 10-K and 10-Q analysis, real-time alerts, and an insider trading tracker. As part of its smart monitoring and automated tools, DocDelta offers risk assessments, financial metrics, and language analysis.

This platform was designed specifically for investment professionals. It notifies investors when companies update their risk factors and disclose material events through 8-K filings. It also analyzes annual and quarterly earnings, compares them against past quarters, and identifies material changes in risk factors, financial metrics, and management discussions. There’s also a portfolio management tool and a research feature.
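
For readers curious about what “identifies material changes in risk factors” might look like at its simplest, here is a minimal sketch. It is not DocDelta’s method, which the company does not describe; it is a plain paragraph-level comparison using Python’s standard `difflib` module. The function name `changed_paragraphs` and the sample text are made up for illustration.

```python
import difflib


def changed_paragraphs(old_text: str, new_text: str) -> dict:
    """Return paragraphs added to or removed from a filing section.

    Hypothetical helper: paragraphs are assumed to be separated by blank lines.
    """
    old_paras = [p.strip() for p in old_text.split("\n\n") if p.strip()]
    new_paras = [p.strip() for p in new_text.split("\n\n") if p.strip()]
    matcher = difflib.SequenceMatcher(a=old_paras, b=new_paras, autojunk=False)
    added, removed = [], []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            removed.extend(old_paras[i1:i2])
        if tag in ("replace", "insert"):
            added.extend(new_paras[j1:j2])
    return {"added": added, "removed": removed}


# Made-up sample text, not taken from any real filing.
last_quarter = (
    "We depend on a single supplier.\n\n"
    "Currency swings may hurt margins."
)
this_quarter = (
    "We depend on a single supplier.\n\n"
    "Currency swings may hurt margins.\n\n"
    "A regulator has opened an inquiry."
)
result = changed_paragraphs(last_quarter, this_quarter)
print(result)
```

A real system would have to extract the risk factor section from each filing first and would likely score how significant a change is. This sketch only flags that a paragraph appeared or disappeared.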

DocDelta sums itself up like this:

“Detect critical changes in SEC filings before the market reacts. Get instant alerts and AI-powered analysis of risk factors, management discussion, and financial metrics.”

This could be a new tool to help the SEC track bad actors and keep the stock market clean. Is that oxymoronic?

Whitney Grace, January 2, 2025

Geolocation Data: Available for a Price

December 30, 2024

According to a report from 404 Media, a firm called Fog Data Science is helping law enforcement compile lists of places visited by suspects. Ars Technica reveals, “Location Data Firm Helps Police Find Out When Suspects Visited their Doctor.” Writer Jon Brodkin writes:

“Fog Data Science, which says it ‘harness[es] the power of data to safeguard national security and provide law enforcement with actionable intelligence,’ has a ‘Project Intake Form’ that asks police for locations where potential suspects and their mobile devices might be found. The form, obtained by 404 Media, instructs police officers to list locations of friends’ and families’ houses, associates’ homes and offices, and the offices of a person’s doctor or lawyer. Fog Data has a trove of location data derived from smartphones’ geolocation signals, which would already include doctors’ offices and many other types of locations even before police ask for information on a specific person. Details provided by police on the intake form seem likely to help Fog Data conduct more effective searches of its database to find out when suspects visited particular places. The form also asks police to identify the person of interest’s name and/or known aliases and their ‘link to criminal activity.’ ‘Known locations a POI [Person of Interest] may visit are valuable, even without dates/times,’ the form says. It asks for street addresses or geographic coordinates.”

See the article for an image of the form. It is apparently used to narrow down data points and establish suspects’ routine movements. It could also be used to, say, prosecute abortions, Brodkin notes.

Back in 2022, the Electronic Frontier Foundation warned of Fog Data’s geolocation data hoard. Its report detailed which law enforcement agencies were known to purchase Fog’s intel at the time. But where was Fog getting this data? From Venntel, the EFF found, which is the subject of a Federal Trade Commission action. The agency charges Venntel with “unlawfully tracking and selling sensitive location data from users, including selling data about consumers’ visits to health-related locations and places of worship.” The FTC’s order would prohibit Venntel, and parent company Gravy Analytics, from selling sensitive location data. It would also require that they establish a “sensitive data location program.” We are not sure what that would entail. And we might never know: the decision may not be finalized until after the president-elect is sworn in.

Cynthia Murrell, December 30, 2024

Good Enough Junk Food: Knowledge without the Work

December 16, 2024

This write up emerged from the dinobaby’s own mind. Yes, the dinobaby used AI because this write up is about the knowledge value lost with smart software. Intellectual junk food is not the same as my mother’s overcooked chicken which she killed on her aunt’s farm. Cluck, cluck, squawk.

I plugged the Guardian’s opinion essay into You.com’s Chat GPT-4o model. You can read the original essay titled “Is Doom Scrolling Really Rotting Our Brains? The Evidence Is Getting Harder to Ignore.” The original essay is clear, reasonably well researched, and structured to speak to [a] a person who has access to the Guardian online or in (gasp!) a print form, [b] who reads the Guardian’s opinion essays, and [c] who has the ability to recognize that “brain rot” has probably affected that individual, co-workers, government leaders, children, and people who repair refrigerators.

This illustration is good enough and it was produced by the AI system Magic Studio.

Here’s the summary Chat GPT-4o spit out:

The essay critiques the decline of human intellect in the digital age, using the viral “Skibidi Toilet” video as a cultural metaphor for deteriorating focus and intellectual engagement. The Oxford English Dictionary’s 2024 word of the year, “brain rot”, reflects this phenomenon, defined as the mental degradation caused by excessive consumption of trivial online content. The essay draws on academic research to demonstrate how compulsive internet use is literally shrinking grey matter, weakening memory, shortening attention spans, and distorting cognitive functions. Studies from Harvard, Oxford, and King’s College reveal the harmful effects of media multitasking and heavy internet usage, with findings such as reduced grey matter in the brain and the risk of “digital dementia” in young people during critical developmental years. The prevalence of infinite scroll and dopamine-driven design in apps is highlighted as a deliberate tactic by tech companies to exploit human psychology for profit, creating powerful addiction loops. Former Google design ethicist Tristan Harris warns that this “race to the bottom of the brain stem” manipulates fear, outrage, and reward systems, potentially driving society to catastrophe if left unchecked. Despite the grim outlook, the essay finds hope in the growing self-awareness among younger generations, who coined the term “brain rot” and recognize its effects. Movements advocating for reduced technology use, such as switching to dumbphones or promoting smartphone-free childhoods, are gaining traction. These trends suggest the possibility of reclaiming mental clarity and focus, countering the current trajectory of cognitive decline. Ultimately, the essay positions “Skibidi Toilet” as both a symptom of modern intellectual decay and a call to action. Humanity stands at a critical juncture: to either rise above the current trends of distraction and degradation or succumb further to the “U-bend” of intellectual regression.

For what it is worth, this is a typical bite-sized knowledge cookie. No one will know if the summary is good or bad unless that person takes the time to get the original full text and compare it with this AI generated output. The informational fast food provides a sugar jolt from saving time or the summary consumer’s belief that the important information is on the money. A knowledge cookie if you will, or maybe intellectual junk food?

Is this summary good enough? From my point of view, it is just okay; that is, good enough. What else is required? Flash back to 1982. The ABI/INFORM database was a commercial success. A couple of competitors were trying to capture our customers, which was tricky. Intermediaries like Dialog Information Services, ESA, and LexisNexis (remember Buster and his silver jumpsuit?), among others, “owned” the direct relationship with the companies that paid the intermediaries to use the commercial databases on their systems. Then the intermediaries shared some information with us, the database producers.

How did a special librarian or a researcher “find” or “know about” our database? The savvy database producers provided information to the individuals interested in a business and management related commercial database. We participated in niche trade shows. We held training programs and publicized them with our partners Dow Jones News Retrieval, Investext, Predicasts, and Disclosure, among a few others. Our senior professionals gave lectures about controlled term indexing, the value of classification codes, and specific techniques to retrieve a handful of relevant citations and abstracts from our online archive. We issued news releases about new sources of information we added, in most cases with permission of the publisher.

We did not use machine indexing. We did have a wizard who created a couple of automatic indexing systems. However, when we saw the results of what the software in 1982 could do, we fell back on human indexers, many of whom had professional training in the subject matter they were indexing. A good example was our coverage of real estate management activities. The person who handled this content was a lawyer who preferred reading and working in our offices. At this time, the database was owned by the Courier-Journal & Louisville Times Co. The owner of the privately held firm was an early adopter of online and electronic technology. He took considerable pride in our line up of online databases. When he hired me, I recall his telling me, “Make the databases as good as you can.”

How did we create a business and management database that generated millions in revenue and whose index was used by entities like the Royal Bank of Canada to index its internal business information?

Here’s the secret sauce:

- We selected sources, in most cases business journals, publications, and some other types of business related content; for example, the ANBAR management reports.

- The selection of which specific article to summarize was the responsibility of a managing editor with deep business knowledge

- Once an article was flagged as suitable for ABI/INFORM, it was routed to the specialist who created a summary of the source article. At that time, ABI/INFORM summaries or “abstracts” were limited to 150 words, excluding the metadata.

- An indexing specialist would then read the abstract and assign quite specific index terms from our proprietary controlled vocabulary. The indexing included such items as four to six index terms from our controlled vocabulary and a classification code like 7700 to indicate “marketing” with additional two-digit indicators to make explicit that the source document was about marketing and direct mail or some similar subcategory of marketing. We also included codes to disambiguate between a railroad terminal and a computer terminal because source documents assumed the reader would “know” the specific field to which the term’s meaning belonged. We added geographic codes, so the person looking for information could locate employee stock ownership in a specific geographic region like Northern California, and a number of other codes specifically designed to allow precise, comprehensive retrieval of abstracts about business and management. Some of the systems permitted free text searching of the abstract, and we considered that a supplement to our quite detailed indexing.

- Each abstract and its index terms were checked by a quality control process using people who had demonstrated their interest in our product and their ability to double check the indexing.

- We had proprietary “content management systems” and these generated the specific file formats required by our intermediaries.

- Each week we updated our database and we were exploring daily updates for our companion product called Business Dateline when the Courier Journal was broken up and the database operation sold to a movie camera company, Bell+Howell.
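
For those who think in data structures, the workflow above can be sketched as a record plus a quality check. This is an illustration only, not the proprietary ABI/INFORM system. The 150-word limit and the four to six index terms come from the description above. The class name, the field names, the all-digits test for the classification code, and the sample values are assumptions.

```python
from dataclasses import dataclass, field

MAX_ABSTRACT_WORDS = 150  # limit stated in the write up


@dataclass
class AbstractRecord:
    """Hypothetical record layout; field names are illustrative."""
    title: str
    abstract: str
    index_terms: list            # terms from a controlled vocabulary
    classification_code: str     # e.g. "7700" for marketing
    geographic_codes: list = field(default_factory=list)

    def problems(self) -> list:
        """Return a list of quality-control issues (empty means it passes)."""
        issues = []
        if len(self.abstract.split()) > MAX_ABSTRACT_WORDS:
            issues.append("abstract exceeds 150 words")
        if not 4 <= len(self.index_terms) <= 6:
            issues.append("expected four to six index terms")
        # Assumption: classification codes are written as digits only.
        if not self.classification_code.isdigit():
            issues.append("classification code should be numeric")
        return issues


# Made-up example values.
record = AbstractRecord(
    title="Direct Mail Response Rates",
    abstract="A short summary of the key points and the actionable element.",
    index_terms=["Direct mail", "Marketing", "Response rates", "Case studies"],
    classification_code="7700",
    geographic_codes=["Northern California"],
)
print(record.problems())
```

The point of the sketch is that each rule was enforced by an informed person, not that a few `if` statements replace that person.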

Chat GPT-4o created the 300 word summary without the human knowledge, expertise, and effort. Consequently, these knowledge-based workflows have been replaced by smart software which can produce a summary in less than 30 seconds.

And that summary is, from my point of view, good enough. There are some trade offs:

- Chat GPT-4o is reactive. Feed it a url or a text, and it will summarize it. Gone is the knowledge-based approach to select a specific, high-value source document for inclusion in the database. Our focus was informed selection. People paid to access the database because of the informed choice about what to put in the database.

- The summary does not include the ABI/INFORM key points and actionable element of the source document. The summary is what a high school or junior college graduate would create if a writing teacher assigned a “how to write a précis” as part of the course requirements. In general, high school and junior college graduates are not into nuance and cannot determine the pivotal information payload in a source document.

- The precise indexing and tagging is absent. One could create 1,000 such summaries, toss them in MISTRAL, and do a search. The result is great if one is uninformed about the importance of editorial policies, knowledge-based workflows, and precise, thorough indexing.

The reason I am sharing some of this “ancient” online history is:

- The loss of quality in online information is far more serious than most people understand. Getting a summary today is no big deal. What’s lost is simply not on these individuals’ radar.

- The lack of an editorial policy, precise date and time information, and fine-grained indexing means that one has to wade through a mass of undifferentiated information. ABI/INFORM in the 1980s delivered a handful of citations directly on point with the user’s query. Today no one knows or cares about precision and recall.

- It is now more difficult than at any other time in my professional work career to locate needed information. Public libraries do not have the money to obtain reference materials, books, journals, and other content. If the content is online, it is a dumbed down and often cut rate version of the old-fashioned commercial databases created by informed professionals.

- People look up information online and remain dumb; that is, the majority of the people with whom I come in contact routinely ask me and my team, “Where do you get your information?” We even have a slide in our CyberSocial lecture about “how” and “where.” The analysts and researchers in the audience usually don’t know, so an entire subculture of open source information professionals has come into existence. These people are largely on their own and have to do work which once was a matter of querying a database like ABI/INFORM, Predicasts, Disclosure, Agricola, etc.
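
Since precision and recall came up in the list above, here is a small worked example of the standard definitions. Precision is the share of retrieved items that are relevant; recall is the share of relevant items that were retrieved. The document identifiers are made up.

```python
def precision_recall(retrieved: set, relevant: set) -> tuple:
    """Compute precision and recall for one query."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Made-up result set: four documents came back, three were actually relevant.
retrieved = {"doc1", "doc2", "doc3", "doc4"}
relevant = {"doc1", "doc2", "doc5"}
p, r = precision_recall(retrieved, relevant)
print(f"precision={p:.2f} recall={r:.2f}")
```

A handful of citations directly on point means high precision. Finding everything relevant in the file means high recall. Controlled vocabulary indexing was designed to deliver both.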

Sure, the essay is good. The summary is good enough. Where does that leave a person trying to understand the factual and logical errors in a new book examining social media? In my opinion, people are in the dark and have a difficult time finding information. Making decisions in the dark or without on point, accurate information is a recipe for a really bad batch of cookies.

Stephen E Arnold, December 15, 2024

Suddenly: Worrying about Content Preservation

August 19, 2024

![green dino](https://arnoldit.com/wordpress/wp-content/uploads/2024/08/green-dino_thumb_thumb_thumb_thumb_t1_thumb-1.gif) This essay is the work of a dumb dinobaby. No smart software required.

Digital preservation may be becoming a hot topic for those who rarely think about finding today’s information tomorrow or even later today. Two write ups provide some hooks on which thoughts about finding information could be hung.

The young scholar faces some interesting knowledge hurdles. Traditional institutions are not much help. Thanks, MSFT Copilot. Is Outlook still crashing?

The first concerns PDFs. The essay and how to is “Classifying All of the PDFs on the Internet.” A happy quack to the individual who pursued this project, presented findings, and provided links to the data sets. Several items struck me as important in this project research report:

- Tracking down PDF files on the “open” Web is not something that can be done with a general Web search engine. The takeaway for me is that PDFs, like PowerPoint files, are either skipped or not crawled. The author had to resort to other, programmatic methods to find these file types. If an item cannot be “found,” it ceases to exist. How about that for an assertion, archivists?

- The distribution of document “source” across the author’s prediction classes splits out mathematics, engineering, science, and technology. Considering these separate categories as one makes clear that the PDF universe is about 25 percent of the content pool. Since technology is a big deal for innovators and money types, losing or not being able to access these data suggests a knowledge hurdle today and tomorrow in my opinion. An entity capturing these PDFs and making them available might have a knowledge advantage.

- Entities like national libraries and individualized efforts like the Internet Archive are not capturing the full sweep of PDFs based on my experience.

My reading of the essay made me recognize that access to content on the open Web is perceived to be easy and comprehensive. It is not. Your mileage may vary, of course, but this write up illustrates a large, multi-terabyte problem.

The second story about knowledge comes from the Epstein-enthralled institution’s magazine. This article is “The Race to Save Our Online Lives from a Digital Dark Age.” To make the urgency of the issue more compelling and better for the Google crawling and indexing system, this subtitle adds some lemon zest to the dish of doom:

We’re making more data than ever. What can—and should—we save for future generations? And will they be able to understand it?

The write up states:

For many archivists, alarm bells are ringing. Across the world, they are scraping up defunct websites or at-risk data collections to save as much of our digital lives as possible. Others are working on ways to store that data in formats that will last hundreds, perhaps even thousands, of years.

The article notes:

Human knowledge doesn’t always disappear with a dramatic flourish like GeoCities; sometimes it is erased gradually. You don’t know something’s gone until you go back to check it. One example of this is “link rot,” where hyperlinks on the web no longer direct you to the right target, leaving you with broken pages and dead ends. A Pew Research Center study from May 2024 found that 23% of web pages that were around in 2013 are no longer accessible.

Well, the MIT story has a fix:

One way to mitigate this problem is to transfer important data to the latest medium on a regular basis, before the programs required to read it are lost forever. At the Internet Archive and other libraries, the way information is stored is refreshed every few years. But for data that is not being actively looked after, it may be only a few years before the hardware required to access it is no longer available. Think about once ubiquitous storage mediums like Zip drives or CompactFlash.

To recap, one individual made clear that PDF content is a slippery fish. The other write up says the digital content itself across the open Web is a lot of slippery fish.

The fix remains elusive. The hurdles are money, copyright litigation, and technical constraints like storage and indexing resources.

Net net: If you want to preserve an item of information, print it out on some of the fancy Japanese archival paper. An outfit can say it archives, but in reality the information on the shelves is a tiny fraction of what’s “out there”.

Stephen E Arnold, August 19, 2024

Some Fun with Synthetic Data: Includes a T Shirt

August 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Academics and researchers often produce bogus results, fiddle images (remember the former president of Stanford University), or just make up stuff. Despite my misgivings, I want to highlight what appear to be semi-interesting assertions about synthetic data. For those not following the nuances of using real data, doing some mathematical cartwheels, and producing made-up data which are just as good as “real” data, synthetic data for me is associated with Dr. Chris Ré, the Stanford Artificial Intelligence Laboratory (remember the ex president of Stanford U., please). The term or code word for this approach to information suitable for training smart software is Snorkel. Snorkel became a company. Google embraced Snorkel. The looming litigation and big dollar settlements may make synthetic data a semi big thing in a tech dust devil called artificial intelligence. The T Shirt should read, “Synthetic data are write” like this:

I asked an AI system provided by the global leaders in computer security (yep, that’s Microsoft) to produce a T shirt for a synthetic data team. Great work and clever spelling to boot.

The “research” report appeared in Live Science. “AI Models Trained on Synthetic Data Could Break Down and Regurgitate Unintelligible Nonsense, Scientists Warn” asserts:

If left unchecked, “model collapse” could make AI systems less useful, and fill the internet with incomprehensible babble.

The unchecked term is a nice way of saying that synthetic data are cheap and less likely to become a target for copyright cops.

The article continues:

AI models such as GPT-4, which powers ChatGPT, or Claude 3 Opus rely on the many trillions of words shared online to get smarter, but as they gradually colonize the internet with their own output they may create self-damaging feedback loops. The end result, called “model collapse” by a team of researchers that investigated the phenomenon, could leave the internet filled with unintelligible gibberish if left unchecked.

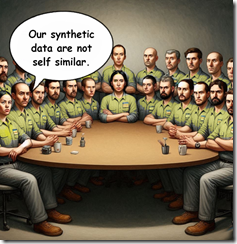

People who think alike and create synthetic data will prove that “fake” data are as good as or better than “real” data. Why would anyone doubt such glib, well-educated people? Not me! Thanks, MSFT Copilot. Have you noticed similar outputs from your multitudinous AI systems?

In my opinion, the Internet when compared to commercial databases produced with actual editorial policies has been filled with “unintelligible gibberish” from the days I showed up at conferences to lecture about how hypertext was different from Gopher and Archie. When Mosaic sort of worked, I included that and left my Next computer at the office.

The write up continues:

As the generations of self-produced content accumulated, the researchers watched their model’s responses degrade into delirious ramblings.

After the data were fed into the system a number of times, the output presented was like this example from the researchers’ tests:

“architecture. In addition to being home to some of the world’s largest populations of black @-@ tailed jackrabbits, white @-@ tailed jackrabbits, blue @-@ tailed jackrabbits, red @-@ tailed jackrabbits, yellow @-.”

The output might be helpful to those interested in church architecture.
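
The feedback loop can be illustrated with a toy. This is an analogy under loud assumptions, not the researchers’ experiment and not a language model: each “generation” simply draws words with replacement from the previous generation’s output. The function name `resample_generations` and the 50-word corpus are made up.

```python
import random


def resample_generations(corpus, generations, seed=0):
    """Track how many distinct words survive when each generation
    is built only from the previous generation's output."""
    rng = random.Random(seed)
    current = list(corpus)
    distinct_counts = [len(set(current))]
    for _ in range(generations):
        # A stand-in for "training on your own output": sample with
        # replacement from whatever the last generation produced.
        current = [rng.choice(current) for _ in range(len(current))]
        distinct_counts.append(len(set(current)))
    return distinct_counts


# Made-up corpus of 50 distinct words.
corpus = [f"word{i}" for i in range(50)]
counts = resample_generations(corpus, generations=20)
print(counts)
```

Because a word that drops out of one generation can never come back, the count of distinct words can only stay flat or fall. The rare words go first, which is a rough picture of why self-fed systems drift toward repetitive output.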

Here’s the wrap up to the research report:

This doesn’t mean doing away with synthetic data entirely, Shumailov said, but it does mean it will need to be better designed if models built on it are to work as intended. [Note: Ilia Shumailov, a computer scientist at the University of Oxford, worked on this study.]

I must admit that the write up does not make clear what data were “real” and what data were “synthetic.” I am not sure how the test moved from Wikipedia to synthetic data. I have no idea where the headline originated. Was it synthetic?

Nevertheless, I think one might conclude that using fancy math to make up data that’s as good as real life data might produce some interesting outputs.

Stephen E Arnold, August 12, 2024

The Only Dataset Search Tool: What Does That Tell Us about Google?

April 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

If you like semi-jazzy, academic write ups, you will revel in “Discovering Datasets on the Web Scale: Challenges and Recommendations for Google Dataset Search.” The write up appears in a publication associated with Jeffrey Epstein’s favorite university. It may be worth noting that MIT and Google have teamed to offer a free course in Artificial Intelligence. That is the next big thing which does hallucinate at times while creating considerable marketing angst among the techno-giants jousting to emerge as the go-to source of the technology.

Back to the write up. Google created a search tool to allow a user to locate datasets accessible via the Internet. There are more than 700 data brokers in the US. These outfits will sell data to most people who can pony up the cash. Examples range from six figure fees for the Twitter stream to a few hundred bucks for boat license holders in states without much water.

The write up says:

Our team at Google developed Dataset Search, which differs from existing dataset search tools because of its scope and openness: potentially any dataset on the web is in scope.

A very large, money oriented creature enjoins a worker to gather data. If someone asks, “Why?”, the monster says, “Make up something.” Thanks MSFT Copilot. How is your security today? Oh, that’s too bad.

The write up does the academic thing of citing articles which talk about data on the Web. There is even a table which organizes the types of data discovery tools. The categorization of general and specific is brilliant. Who would have thought there were two categories of a vertical search engine focused on Web-accessible data? I thought there was just one category; namely, gettable. The idea is that if the data are exposed, take them. Asking permission just costs time and money. The idea is that one can apologize and keep the data.

The article includes a Googley graphic. The French portal, the Italian “special” portal, and the Harvard “dataverse” are identified. Were there other Web accessible collections? My hunch is that Google’s spiders suck down, as one famous Googler said, “all” the world’s information. I will leave it to your imagination to fill in other sources for the dataset pages. (I want to point out that Google has some interesting technology related to converting data sets into normalized data structures. If you are curious about the patents, just write benkent2020 at yahoo dot com, and one of my researchers will send along a couple of US patent numbers. Impressive system and method.)

The section “Making Sense of Heterogeneous Datasets” is peculiar. First, the Googlers discovered the basic fact of data from different sources — The data structures vary. Think in terms of grapes and deer droppings. Second, the data cannot be “trusted.” There is no fix to this issue for the team writing the paper. Third, the authors appear to be unaware of the patents I mentioned, particularly the useful example about gathering and normalizing data about digital cameras. The method applies to other types of processed data as well.
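
To make the “data structures vary” point concrete, here is a minimal sketch of normalizing records from different sources into one schema. It is not the method in the Google patents mentioned above, which I am not reproducing here. The alias table, the field names, and the camera records are all made up for illustration.

```python
# Hypothetical mapping from a common field name to the labels
# different sources might use for the same thing.
FIELD_ALIASES = {
    "name": ["name", "model", "product_name"],
    "price_usd": ["price", "price_usd", "cost"],
    "megapixels": ["mp", "megapixels", "resolution_mp"],
}


def normalize(record: dict) -> dict:
    """Map one source record onto the common schema; missing fields become None."""
    lowered = {key.lower(): value for key, value in record.items()}
    normalized = {}
    for target, aliases in FIELD_ALIASES.items():
        normalized[target] = next(
            (lowered[alias] for alias in aliases if alias in lowered), None
        )
    return normalized


# Two made-up sources describing digital cameras with different field names.
source_a = {"Model": "X100", "Price": 499, "MP": 24}
source_b = {"product_name": "Z5", "cost": 899}
rows = [normalize(source_a), normalize(source_b)]
print(rows)
```

Renaming fields is the easy part. Deciding whether the values can be “trusted” is the part for which the paper’s authors offer no fix.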

I want to jump to the “beyond metadata” idea. This is the mental equivalent of “popping” up a perceptual level. Metadata are quite important and useful. (Isn’t it odd that Google strips high value metadata from its search results; for example, time and date?) The authors of the paper work hard to explain that the Google approach to data set search adds value by grouping, sorting, and tagging with information not in any one data set. This is common sense, but the Googley spin on this is to build “trust.” Remember: This is an alleged monopolist engaged in online advertising and co-opting certain Web services.

Several observations:

- This is another of Google’s high-class PR moves. Hooking up with MIT and delivering razz-ma-tazz about identifying spiderable content collections in the name of greater good is part of the 2024 Code Red playbook, it seems. From humble brag about smart software to crazy assertions like quantum supremacy, today’s Google is a remarkable entity.

- The work on this “project” is divorced from time. I checked my file of Google-related information, and I found no information about the start date of a vertical search engine project focused on spidering and indexing data sets. My hunch is that it has been in the works for a while, although I can pinpoint 2006 as a year in which Google’s technology wizards began to talk about building master data sets. Why no time specifics?

- I found the absence of AI talk notable. Perhaps Google does not think a reader will ask, “What’s with the use of these data? I can’t use this tool, so why spend the time, effort, and money to index information from a country like France, which is not one of Google’s biggest fans?” (Paris was, however, the roll out choice for the answer to Microsoft and ChatGPT’s smart software announcement. Plus that presentation featured incorrect information as I recall.)

Net net: I think this write up with its quasi-academic blessing is a bit of advance information to use in the coming wave of litigation about Google’s use of content to train its AI systems. This is just a hunch, but there are too many weirdnesses in the academic write up to write off as intern work or careless research writing which is more difficult in the wake of the stochastic monkey dust up.

Stephen E Arnold, April 11, 2024

French Building and Structure Geo-Info

February 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

OSINT professionals may want to take a look at a French building and structure database with geo-functions. The information is gathered and made available by the Observatoire National des Bâtiments. Registration is required. A user can search by city and address. The data compiled up to 2022 cover France’s metropolitan areas and include geo services. The data include address, the built and unbuilt property, the plot, the municipality, dimensions, and some technical data. The data represent a significant effort, involving the government, commercial and non-governmental entities, and citizens. The dataset includes more than 20 million addresses. Some records include up to 250 fields.

Source: https://www.urbs.fr/onb/

To access the service, navigate to https://www.urbs.fr/onb/. One is invited to register or use the online version. My team recommends registering. Note that the site is in French. Copying some text and data and shoving it into a free online translation service like Google’s may not be particularly helpful. French is one of the languages that Google usually handles with reasonable facility. For this site, Google Translate comes up with tortured and off-base translations.

Stephen E Arnold, February 23, 2024

Meta Never Met a Kid Data Set It Did Not Find Useful

January 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Adults are ripe targets for data exploitation in modern capitalism. While adults fight for their online privacy, most have rolled over and accepted the inevitable consumer Big Brother. When big tech companies go after monetizing kids, however, that’s when adults fight back like rabid bears. Engadget writes about how Meta is fighting against the federal government about kids’ data: “Meta Sues FTC To Block New Restrictions On Monetizing Kids’ Data.”

Meta is taking the FTC to court to prevent the agency from reopening a landmark $5 billion privacy case from 2020 and to allow the company to monetize kids’ data on its apps. Meta is suing the FTC because a federal judge ruled that the FTC can expand the settlement with new, more stringent rules about how Meta is allowed to conduct business.

Meta claims the FTC is out for a power grab and is acting unconstitutionally, while the FTC says the company has consistently violated the 2020 settlement and the Children’s Online Privacy Protection Act. The FTC wants its new rules to limit Meta’s facial recognition usage and initiate a moratorium on new products and services until a third party audits them for privacy compliance.

Meta is not a huge fan of the US Federal Trade Commission:

“The FTC has been a consistent thorn in Meta’s side, as the agency tried to stop the company’s acquisition of VR software developer Within on the grounds that the deal would deter ‘future innovation and competitive rivalry.’ The agency dropped this bid after a series of legal setbacks. It also opened up an investigation into the company’s VR arm, accusing Meta of anti-competitive behavior.”

The FTC is doing what government agencies are supposed to do: protect citizens from greedy and harmful practices like those of big business. The FTC can enforce laws and force big businesses to pay fines, put leaders in jail, or even shut them down. But regulators have spent decades ramping up to take meaningful action. The result? The thrashing over kiddie data.

Whitney Grace, January 5, 2024

Data Mesh: An Innovation or a Catchphrase?

October 18, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-17.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Have you ever heard of data mesh? It’s a concept that has been around the tech industry for a while but is gaining more traction through media outlets. Most of the hubbub comes from press releases, such as TechCrunch’s: “Nextdata Is Building Data Mesh For Enterprise.”

Data mesh can be construed as a data platform architecture that allows users to access information where it is. No transferring of the information to a data lake or data warehouse is required. A data lake is a centralized, scaled data storage repository, while a data warehouse is a traditional enterprise system that analyzes data from different sources which may be local or remote.

Nextdata is a data mesh startup founded by Zhamak Dehghani. Nextdata is a “data-mesh-native” platform to design, share, create, and apply data products for analytics. Nextdata is directly inspired by Dehghani’s work at Thoughtworks. Instead of building, storing, and using data and metadata in a single container, Dehghani built a mesh system. How does the Nextdata system work?

“Every Nextdata data product container has data governance policies ‘embedded as code.’ These controls are applied from build to run time, Dehghani says, and at every point at which the data product is stored, accessed or read. ‘Nextdata does for data what containers and web APIs do for software,’ she added. ‘The platform provides APIs to give organizations an open standard to access data products across technologies and trust boundaries to run analytical and machine-learning workloads ‘distributedly.’ (sic) Instead of requiring data consumers to copy data for reprocessing, Nextdata APIs bring processing to data, cutting down on busy work and reducing data bloat.’”
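The two ideas in the quote, policy "embedded as code" and bringing processing to the data, can be illustrated with a toy sketch. This is not Nextdata's API, which the article does not describe; the `DataProduct` class, its `run` method, the role names, and the sales rows are hypothetical and exist only to show the pattern.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class DataProduct:
    """Hypothetical data product: the rows stay put and the policy travels with them."""
    name: str
    rows: list
    allowed_roles: set = field(default_factory=set)

    def run(self, role: str, job: Callable[[list], Any]) -> Any:
        # The access policy is part of the product and is checked on every read.
        if role not in self.allowed_roles:
            raise PermissionError(f"{role} may not read {self.name}")
        # The job executes where the data lives; only the result leaves the product.
        return job(self.rows)


# Made-up example data and roles.
sales = DataProduct(
    name="sales",
    rows=[{"region": "EU", "amount": 120}, {"region": "US", "amount": 80}],
    allowed_roles={"analyst"},
)

total = sales.run("analyst", lambda rows: sum(r["amount"] for r in rows))
print(total)
```

The caller never gets a copy of the rows, only the computed total, and a role outside `allowed_roles` is refused before any processing happens. A real system would also have to deal with the latency and mixed security levels that come with running jobs across many such products.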

Nextdata received $12 million in seed investment to develop its system’s tooling and hire more people for the product, engineering, and marketing teams. Congratulations on the funding. It is not clear at this time whether the approach will add latency to operations or present security issues related to disparate users’ security levels.

Whitney Grace, October 18, 2023