Facebook: Ripples of Confusion, Denial, and Revisionism

March 18, 2019

Facebook contributed to an interesting headline about the video upload issue related to the bad actor in New Zealand. Here’s the headline I noted as it appeared on Techmeme’s Web page:

The Reuters story ran a different headline:

What caught my attention is the statement “blocked at upload.” If a video were blocked at upload, were those videos removed? If blocked, then the number of videos drops to 300 million.

This type of information is typical of the coverage of Facebook, a company which has become the embodiment of social media.

There were two other interesting Facebook stories in my news feed this morning.

The first concerns a high profile Silicon Valley investor, Marc Andreessen. The write up reports and updates a story whose main point is:

Facebook Board Member May Have Met Cambridge Analytica Whistleblower in 2016.

The Search Wars: When Open Starts to Close

March 12, 2019

Compass Search. The precursor. The result? Elasticsearch. No proprietary code. Free and open source. The world of enterprise search shifted.

As a result of Shay Banon’s efforts, an alternative to proprietary search and interesting financial maneuvers, an individual or organization could download code and set up a functional enterprise search system.

There are proprietary search systems available like Coveo. But most of the offerings are sort of open sourcey. It is a marketing ploy. The forward leaning companies do not use the word search to market their products because zippier functionality is what brings tire kickers and some buyers.

The landscape of search seems to be doing its Hawaii volcano act. No real eruption, but shakes, hot gas, and cracks have begun to appear. The lava flows will come soon enough.

The path is clear to the intrepid developer.

The tip off is Amazon’s announcement that it now offers an open distro for Elasticsearch. Why is Amazon taking this step? The company explains:

Elasticsearch has become an essential technology for log analytics and search, fueled by the freedom open source provides to developers and organizations. Our goal is to ensure that open source innovation continues to thrive by providing a fully featured, 100% open source, community-driven distribution that makes it easy for everyone to use, collaborate, and contribute.

DarkCyber’s briefings about Amazon’s policeware initiative suggest that the online bookstore is adding another component to its robust intelligence system and services.

The move involves or will involve:

- Entrepreneurs who will see Amazon as creating low friction for new products and services

- Partners because implementing search can be a consulting gold mine

- Users

- Developers who will use an Amazon “off the shelf” solution

- Competitors who may find the “other open source” Elasticsearch lagging behind the Amazon “house brand”.

The move is not much of a surprise. Amazon seeks to implement its version of IBM’s 1960s style vendor lock in. Open source is open source, isn’t it? The result is a version of the popular Elasticsearch system with utility ranging from commercial products to add ons which help make log files more mine-able. Plus search snaps into the DNA of the Amazon jungle of services, functions, and features. Where there is confusion, there are opportunities to make money.

Adding a house brand to its ecosystem is a basic tactic in the Amazon playbook. Those T shirts with the great price are Amazon’s, not the expensive stuff with a fancy brand name. T shirts and search? Who cares?

What’s the play mean for over extended proprietary search systems which may never generate a pay day for investors? A lot of explaining seems likely.

What’s the play mean for Elastic, the company which now operates the son of Compass Search? Some long off site meetings may be ahead and maybe some chats with legal eagles.

What’s the play mean for vendors using Amazon as back end plumbing for their enterprise or policeware services? A swap out of the Elasticsearch system for the Amazon version could be in the cards. Amazon Elasticsearch will probably deliver fewer headaches and lost weekends than using the Banon-Elastic version. Who wants headaches in an already complex, expensive implementation?

The Register quotes an evangelist from AWS as saying:

“We will continue to send our contributions and patches upstream to advance these projects.”

DarkCyber interprets this action and Amazon’s explanations from the perspective and context of a high school football coach:

“Front line, listen up, fork that QB. I want that guy put down. Hard. Let’s go.”

Amazon. The best defense is a good offense, right?

The coach shouts:

“Let’s hit those Sheep hard. Arrrgh.”

Stephen E Arnold, March 12, 2019

IBM Debate Contest: Human Judges Are Unintelligent

February 12, 2019

I was a high school debater. I was a college debater. I did extemp. I did an event called readings. I won many cheesy medals and trophies. Also, I have a number of recollections about judges who shafted me and my team mate or just hapless, young me.

I learned:

Human judges mean human biases.

When I learned that the audience voted a human the victor over the Jeopardy-winning, subject matter expert sucking, and recipe writing IBM Watson, I knew the human penchant for distortion, prejudice, and foul play made an objective, scientific assessment impossible.

Humans may not be qualified to judge state of the art artificial intelligence from sophisticated organizations like IBM.

The rundown and the video of the 25 minute travesty are on display via Engadget with a non argumentative explanation in words in the write up “IBM AI Fails to Beat Human Debating Champion.” The real news report asserts:

The face-off was the latest event in IBM’s “grand challenge” series pitting humans against its intelligent machines. In 1996, its computer system beat chess grandmaster Garry Kasparov, though the Russian later accused the IBM team of cheating, something that the company denies to this day — he later retracted some of his allegations. Then, in 2011, its Watson supercomputer trounced two record-winning Jeopardy! contestants.

Yes, past victories.

Now what about the debate and human judges?

My thought is that the dust up should have been judged by a panel of digital devastators; specifically:

- Google DeepMind. DeepMind trashed a human Go player and understands the problems humanoids have being smart and proud

- Amazon SageMaker. This is a system tuned with work for a certain three letter agency and, therefore, has a Deep Lens eye to spot the truth

- Microsoft Brainwave (remember that?). This is a system which was the first hardware accelerated model to make Clippy the most intelligent “bot” on the planet. Clippy, come back.

Here’s how this judging should have worked.

- Each system “learns” what it takes to win a debate, including voice tone, rapport with the judges and audience, and physical gestures (presence)

- Each system processes the video, audio, and sentiment expressed when the people in attendance clap, whistle, laugh, sub vocalize “What a load of horse feathers,” etc.

- Each system generates a score with 0.000001 the low and 0.999999 the high

- The final tally would be calculated by Facebook FAIR (Facebook AI Research). The reason? Facebook is among the most trusted, socially responsible smart software companies.

The notion of a human judging a machine is what I call “deep stupid.” I am working on a short post about this important idea.

A human judged by humans is neither just nor impartial. Not Facebook FAIR.

An also participated award goes to IBM marketing.

IBM snagged an also participated medal. Well done.

Stephen E Arnold, February 13, 2019

Deloitte and NLP: Is the Analysis On Time and Off Target?

January 18, 2019

I read “Using AI to Unleash the Power of Unstructured Government Data.” I was surprised because I thought that US government agencies were using smart software (NLP, smart ETL, digital notebooks, etc.). My recollection is that use of these types of tools began in the mid 1990s, maybe a few years earlier. i2 Ltd., a firm for which I did a few minor projects, rolled out its Analyst’s Notebook in the mid 1990s, and it gained traction in a number of government agencies a couple of years after British government units began using the software.

The write up states:

DoD’s Defense Advanced Research Projects Agency (DARPA) recently created the Deep Exploration and Filtering of Text (DEFT) program, which uses natural language processing (NLP), a form of artificial intelligence, to automatically extract relevant information and help analysts derive actionable insights from it.

My recollection is that DEFT fired up in 2010 or 2011. Once funding became available, activity picked up in 2012. That was six years ago.

However, DEFT is essentially a follow on from other initiatives which reach back to Purple Yogi (Stratify) and DR-LINK, among others.

The capabilities of NLP are presented as closely linked technical activities; for example:

- Named entity resolution

- Relationship extraction

- Sentiment analysis

- Topic modeling

- Text categorization

- Text clustering

- Information extraction

The collection of buzzwords is interesting. I would annotate each of these items to place them in the context of my research into content processing, intelware, and related topics:

Google: Innovation Desperation or Innovation Innovation

December 5, 2018

Google has an innovation problem. The company has tried 20 percent free time. Engineers were supposed to work on personal projects. Google tried creating investment units. Google has acquired companies, often in time frames that seemed compressed. Anyone remember buying Motorola Mobility in 2011? Google created a super secret innovation center because the ageing Google Labs was not up to the task of creating Loon balloons and solving death. There have been competitions to identify bright young sprouts who can bring new ideas to the Google. If I dig through my files, there are probably innovation initiatives I have forgotten. Google is either a forward looking outfit, or it is struggling to do more than keep the 20 year old system looking young.

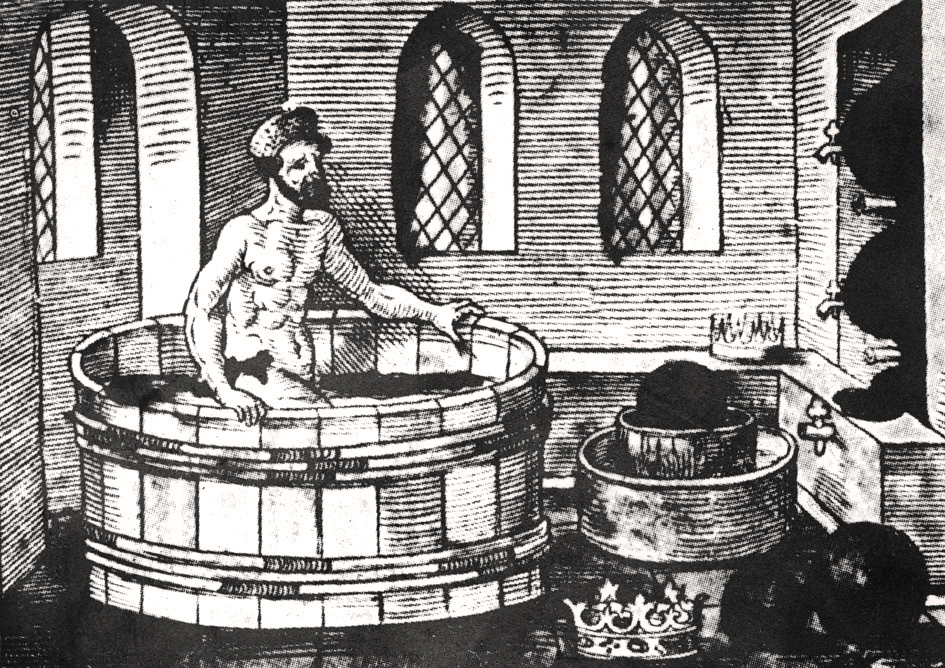

Has Google tried thinking in the hot tub like Archimedes? Google has bean bags, volleyball courts, and Foosball. But real innovations like those AltaVista mechanisms or GoTo’s pay to play for search visibility? There is Web Accelerator, of course.

I read “An Exclusive look inside Google’s in-house incubator Area 120.” The write up reports that a wizard Googler allegedly said and may actually believe:

“We built a place and a process to be able to have those folks come to us and then select what we thought were the most promising teams, the most promising ideas, the most promising markets,” explains managing director Alex Gawley, who has spent a decade at Google and left his role as product manager for Google Apps (since renamed G Suite) to spearhead this new effort. Employees “can actually leave their jobs and come to us to spend 100% of their time pursuing something that they are particularly passionate about,” he says.

Okay, Area 120. That’s even more mysterious than the famous Area 51. I am thinking of the theme from “Outer Limits.”

The Googlers “pitch” ideas in the hope of getting funding. A Japanese management expert explained a somewhat similar approach to keeping smart employees innovating. See Kuniyasu Sakai’s explanations of the method in “To Expand We Divide.” You probably have this and his other management writings on your desk, right? Someone at Google seems to have brushed against these concepts. In Fast Company / Google speak, these new companies are “hatchlings.”

Several observations:

- Innovation is a problem as companies become larger. Google illustrates this problem.

- Google’s approach to innovation is bifurcated. Most of its “innovations” originated elsewhere; for example, IBM Clever, AltaVista technology, GoTo-Overture “pay to play” advertising. The company’s goal is to innovate using original ideas, not refinements of other innovators’ breakthroughs.

- Google faces an innovation free environment. A recent example may be found in the wild and crazy Amazon announcements at its Re:Invent conference. Somewhere in the jet blast of announcements, there were a couple of substantive innovations. Google does phones with problems and wraps search in layers of cotton wool. Amazon, it seems, is sucking search innovation from Google.

For these reasons Google is gasping. Even a rah rah write up about Google like the recent encomium to Jeff Dean and Sanjay Ghemawat (both AltaVista veterans) is a technical “You Can’t Go Home Again” description of the good old days.

On one hand, Google’s efforts to become innovative are admirable. Persistence, patience, investment—yada yada. On the other hand, Google remains trapped as a servant to its Yahoo (GoTo and Overture) business model for online advertising.

The PR will continue to flow, but innovations? Maybe.

Stephen E Arnold, December 5, 2018

A Reasonable Assertion: Google Is Dying

October 10, 2018

Nope, this is not the view in Harrod’s Creek. The idea that “Google Is Dying” comes from a write up in Vortex by Lauren, whom I assume is a real, living entity and not an avatar, construct, or VR thing.

You can find the analysis at this link.

I am not going to push back against the entity Lauren’s ideas.

I want to point out that:

- Companies, like real living humans, have a lifespan. It does not matter that some Googlers are awaiting the opportunity to merge with a machine, save their brain (assuming that intelligence is indeed the sole province of thought), and live a long time. Ideally? Forever. The death of Google, therefore, is hard wired, and, if I may offer a controversial idea, has already taken place. Today we are dealing with the progeny of Google.

- The misstep which has captured some Google embracers’ attention is Google’s outright failure to create a secure environment for management and for users of the descendant of Orkut. The lapses are not an indication that Google is dying. The examples are logical manifestations of the consequences of inbreeding. Imagine West Virginia’s isolated communities connected via a mobile system. That does not change the inbreeding for some individuals. If you are not up on inbreeding, here’s a handy reference. The key point is cognitive deterioration. Stated more clearly, stupid decision making, impaired analytic skills, etc.

- Google’s lab rat approach to innovation has not, so far, been able to disprove Steve Ballmer’s brilliant observation: “One trick pony.” But what few analysts care to remember is that the “one trick pony” was online advertising derived from the GoTo.com/Overture.com/Yahoo.com idea. My recollection is that prior to the Google IPO, a legal settlement was reached with Yahoo. This billion dollar deal kept good old Yahoo afloat for several years. Thus, Google’s big idea was a bit of a “me too.” One might argue that the failure to find a way to generate an equivalent amount of revenue is not surprising. Even the Android ecosystem is like a sucker fish on a shark. The symbiosis between online advertising, data harvesting, and revenue is difficult to disentangle. The key point: The big idea was GoTo.com, implemented in a Googley way.

After writing three monographs about the Google and adding comments to my research about the company, I could write more.

Read the alleged humanoid’s “real news” essay. Make your own decision.

I am not pushing back. I am just disappointed that 20 years after the Backrub folks morphed into Google, analyses continue to look at here-and-now events, not the broader trends the company manifests.

Maybe Generation Z will step forward and fill the void?

Stephen E Arnold, October 11, 2018

Machine Learning Frameworks: Why Not Just Use Amazon?

September 16, 2018

A colleague sent me a link to “The 10 Most Popular Machine Learning Frameworks Used by Data Scientists.” I found the write up interesting despite the author’s failure to define the word popular and the bound phrase data scientists. But few folks in an era of “real” journalism fool around with my quaint notions.

According to the write up, the data come from an outfit called Figure Eight. I don’t know the company, but I assume their professionals adhere to the basics of Statistics 101. You know the boring stuff like sample size, objectivity of the sample, sample selection, data validity, etc. As with information in our time of “real” news and “real” journalists, these are some of the annoying aspects of churning out data in which an old geezer like me can have some confidence. You know like the 70 percent accuracy of some US facial recognition systems. Close enough for horseshoes, I suppose.
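For readers who skipped Statistics 101, here is a minimal sketch of why sample size matters. It uses the textbook normal approximation for the margin of error of a sample proportion. The function name, the sample sizes, and the use of 0.70 as the observed proportion are my illustrative assumptions; the write up does not report how many data scientists Figure Eight surveyed, and the formula assumes a simple random sample.

```python
import math


def margin_of_error(p_hat: float, n: int, z: float = 1.96) -> float:
    """Approximate margin of error for a sample proportion.

    Uses the normal approximation z * sqrt(p(1 - p) / n), which assumes a
    simple random sample. z = 1.96 corresponds to roughly 95 percent
    confidence.
    """
    if n <= 0:
        raise ValueError("sample size must be positive")
    if not 0.0 <= p_hat <= 1.0:
        raise ValueError("proportion must be between 0 and 1")
    return z * math.sqrt(p_hat * (1.0 - p_hat) / n)


if __name__ == "__main__":
    # Hypothetical sample sizes; the survey's real sample size is not given.
    for n in (50, 500, 5000):
        moe = margin_of_error(0.70, n)
        print(f"n={n:>5}: 70 percent, plus or minus {moe * 100:.1f} points")
```

The point of the sketch: a “popular” ranking built on a few dozen responses carries an uncertainty of more than ten percentage points, which is enough to reshuffle most of a top 10 list.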

Here’s the list. My comments about each “learning framework” appear in italics after each “learning framework’s” name:

- Pandas — an open source, BSD-licensed library

- Numpy — a package for scientific computing with Python

- Scikit-learn — another BSD licensed collection of tools for data mining and data analysis

- Matplotlib — a Python 2D plotting library for graphics

- TensorFlow — an open source machine learning framework

- Keras — a high-level neural networks API, written in Python and capable of running on top of TensorFlow, CNTK, or Theano

- Seaborn — a Python data visualization library based on matplotlib

- Pytorch & Torch

- AWS Deep Learning AMI — infrastructure and tools to accelerate deep learning in the cloud. Not to be annoying but defining AMI as Amazon Machine Image might be useful to some

- Google Cloud ML Engine — neural-net-based ML service with a typically Googley line up of Googley services.

Stepping back, I noticed a handful of what I am sure are irrelevant points which are of little interest to “real” journalists creating “real” news.

First, notice that the list is self referential with Python love. Frameworks depend on other Python loving frameworks. There’s nothing inherently bad about this self referential approach to whipping up a list, and it makes it a heck of a lot easier to create the list in the first place.

Second, the information about Amazon is slightly misleading. In my lecture in Washington, DC on September 7, I mentioned that Amazon’s approach to machine learning supports Apache MXNet and Gluon, TensorFlow, Microsoft Cognitive Toolkit, Caffe, Caffe2, Theano, Torch, PyTorch, Chainer, and Keras. I found this approach interesting, but of little interest to those creating a survey or developing an informed list about machine learning frameworks; for example, Amazon is executing a quite clever play. In bridge, I think the phrase “trump card” suggests what the Bezos momentum machine has cooked up. Notice the past tense because this Amazon stuff has been chugging along in at least one US government agency for about four, four and one half years.

Third, Google comes in dead last. What about IBM? What about Microsoft and its CNTK? Ah, another acronym, but I as a non real journalist will reveal that this acronym means Microsoft Cognitive Toolkit. More information is available in Microsoft’s wonderful prose at this link. By the way, the Amazon machine learning spinning momentum thing supports the CNTK. Imagine that? Right, I didn’t think so.

Net net: The machine learning framework list may benefit from a bit of refinement. On the other hand, just use Amazon and move down the road to a new type of smart software lock in. Want to know more? Write benkent2020 @ yahoo dot com and inquire about our for fee Amazon briefing about machine learning, real time data marketplaces, and a couple of other mostly off the radar activities. Have you seen Amazon’s facial recognition camera? It’s part of the Amazon machine learning initiative, and it has some interesting capabilities.

Stephen E Arnold, September 16, 2018

IBM Watson Workspace

August 6, 2018

I read “What Is Watson Workspace?” I have been assuming that WW is a roll up of:

- IBM Lotus Connections

- IBM Lotus Domino

- IBM Lotus Mashups

- IBM Lotus Notes

- IBM Lotus Quickr

- IBM Lotus Sametime

The write up explains how wrong I am (yet again. Such a surprise for a person who resides in rural Kentucky). The write up states:

IBM Watson Workspace offers a “smart” destination for employees to collaborate on projects, share ideas, and post questions, all built from the ground up to take advantage of Watson’s cognitive computing abilities.

Yeah, but I thought the Lotus products provided these services.

How silly of me?

The difference is that WW includes cognitive APIs. Sounds outstanding. I can:

- Draw insights from conversations

- Turn conversations into actions

- Access video conferencing

- Customize Watson Workspace.

When I was doing a little low level work for one of the US government agencies (maybe it was the White House?) I recall sitting in a briefing in which these functions were explained. A short time thereafter I had the thankless job of reviewing a minor contract to answer an almost irrelevant question. Guess what? The “workspace” contained neither the email nor the attachments I sought. The problem, it was explained to me by someone from IBM in Gaithersburg, was not the fault of the IBM system.

Business Intelligence: What Is Hot? What Is Not?

July 16, 2018

I read “Where Business Intelligence is Delivering Value in 2018.” The write up summarizes principal findings from a study conducted by Dresner Advisory Services, an outfit with which I am not familiar. I suggest you scan the summary in Cloud Tweaks and then, if you find the data interesting, chase after the Dresner outfit. My hunch is that the sales professionals will respond to your query.

Several items warranted my uncapping my trusty pink marker and circling an item of information.

First, I noticed a chart called Technologies and Initiatives Strategic to Business Intelligence. The chart presents data about 36 “technologies.” I noticed that “enterprise search” did not make the list. I did note that cognitive business intelligence, artificial intelligence, text analytics, and natural language analytics did. If I were generous to a fault, I would say, “These Dresner analysts are covering enterprise search, just taking the Tinker Toy approach by naming areas of technologies.” However, I am not feeling generous, and I find it difficult to believe that Dresner or any other knowledge worker can do “work” without being able to find a file, data, look up a factoid, or perform even the most rudimentary type of research without using search. The omission of this category is foundational, and I am not sure I have much confidence in the other data arrayed in the report.

Second, I don’t know what “data storytelling” is. I suppose (and I am making a wild and crazy guess here) that a person who has some understanding of the source data, the algorithmic methods used to produce output, and the time to think about the likely accuracy of the output creates a narrative. For example, I have been in a recent meeting with the president of a high technology company who said, “We have talked to our customers, and we know we have to create our own system.” Obviously the fellow knows his customers, essentially government agencies. The customers (apparently most of them) want an alternative, and he realizes change is necessary. The actual story, based on my knowledge of the company, the product and service he delivers, and the government agencies’ budget constraints, is different. The “real story” boils down to: “Deliver a cheaper product or you will lose the contract.” Stories, like those from teenagers who lose their homework, often do not reflect reality. What’s astounding is that data storytelling is number eight on the hit parade of initiatives strategic to business intelligence. I was indeed surprised. But governance made the list as did governance. What the heck is governance?

What Has Happened to Enterprise Search?

June 28, 2018

I read “Enterprise Search in 2018: What a Long Strange Trip It’s Been.” I found the information presented interesting. The thesis is that enterprise search has been on a journey almost like a “Wizard of Oz” experience.

The idea of consolidation, from my point of view, boils down to executives who want to cash in, get out, and move on. The reasons are not far to seek: Over promising and under delivering, financial manipulations, and positioning a nuts and bolts utility as something that solves information problems.

Some, maybe many, licensees of proprietary enterprise search systems may have viewed their investment as an opportunity that delivered an unexpected but inevitable outcome. Where is that lush scenery? Where’s the beach?

The reality is that enterprise search vendors were aced by Shay Banon. His Act II of Compass: A Finding Story was Elasticsearch and the company Elastic. Why not use free and open source software? At least the code gets some bugs fixed unlike old school proprietary enterprise search systems. Bug fixes? Yep, good luck with your Fast Search & Retrieval ESP platform idiosyncrasies.

The landscape today is a bit like the volcanic transformation of Hawaii’s Vacationland. Real estate agents will be explaining that the lava flows have created new beach views, promising that eruptions are a low probability event.

The write up does not highlight one simple fact: Enterprise search has given way to “roll up” services or what I call “meta-plays.” The idea is that search is tucked inside systems like Diffeo, Palantir Gotham, and other “intelligence” platforms.

Why aren’t these enterprise grade solutions sold as “enterprise search” or “enterprise business intelligence and discovery solutions”?

The answer is that the information retrieval nest has been marginalized by the actions of vendors stretching back to the Smart system and to the present with “proprietary” solutions which actually include open source technology. These systems are anchored in the past.

Consider Diffeo?

Why offer enterprise search when one can provide a solution that delivers information in context, provides collaboration tools, and displays information in different ways with a single mouse click?