Telegram Lecture at TechnoSecurity & Digital Forensics on June 4, 2025

April 3, 2025

No AI. Just a dinobaby sharing an observation about younger managers and their innocence.

The organizers of the June 2025 TechnoSecurity & Digital Forensics Conference posted a 60-second overview of our Telegram Overview lecture on LinkedIn. You can view the conference’s 60-second video at https://lnkd.in/eTSvpYFb. Erik and I have been doing presentations on specific Telegram subjects for law enforcement groups. Two weeks ago, we gave the Massachusetts Association of Crime Analysts a 60-minute rundown of the technical architecture of Telegram and identified three US companies providing services to Telegram. To discuss a presentation for your unit, please message me via LinkedIn. (Plus, my son and I are working to complete our 100-page PDF of notes from our examination of Telegram’s more interesting features. These range from bots which automate cross-blockchain crypto movement to the automatic throttling function in the Telegram TON Virtual Machine, which prevents transaction bottlenecks in complex crypto wallet obfuscations.) See you there. Thank you, Stephen E Arnold, April 3, 2025, 2:23 pm US Eastern

More about NAMER, the Bitext Smart Entity Technology

January 14, 2025

A dinobaby product! We used some smart software to fix up the grammar. The system mostly worked. Surprised? We were.

We spotted more information about Bitext, the technology firm based in Madrid, Spain. The company posted “Integrating Bitext NAMER with LLMs” in late December 2024. At about the same time, government authorities arrested a person known as “Broken Tooth.” In 2021, an alert for this individual was posted. His “real” name is Wan Kuok-koi, and he has been in and out of trouble for a number of years. He is alleged to be part of a criminal organization and active in a number of illegal activities; for example, money laundering and human trafficking. The online service Irrawaddy reported that Broken Tooth is “the face of Chinese investment in Myanmar.”

Broken Tooth (né Wan Kuok-koi, born in Macau) is one example of the importance of identifying entity names and relating them to individuals and the organizations with which they are affiliated. A failure to identify entities correctly can mean the difference between resolving an alleged criminal activity and a get-out-of-jail-free card. This is the specific problem that Bitext’s NAMER system addresses. Bitext says that large language models are designed for text generation, not entity classification. Furthermore, LLMs impose cost and computational demands which can be a problem for organizations working within tight budget constraints. Plus, processing certain data in a cloud increases privacy and security risks.

Bitext’s solution provides an alternative way to achieve fine-grained entity identification, extraction, and tagging. It combines classical natural language processing with large language models. Classical NLP tools, often deployable locally, complement LLMs to enhance named entity recognition (NER) performance.

NAMER excels at:

- Identifying generic names and classifying them as people, places, or organizations.

- Resolving aliases and pseudonyms.

- Differentiating similar names tied to unrelated entities.

Bitext supports over 20 languages, with additional options available on request. How does the hybrid approach function? There are two effective methods for integrating Bitext NAMER with LLMs like GPT or Llama. The first is pre-processing input: entities are annotated before the text is passed to the LLM, which is ideal for connecting entities to knowledge graphs in large systems. The second is to configure the LLM to call NAMER dynamically.
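To make the pre-processing idea concrete, here is a minimal Python sketch. It is not Bitext’s API, which the post does not describe. The `GAZETTEER` table, the inline tag format, and the `annotate` and `build_prompt` functions are all hypothetical stand-ins for whatever an entity tagger would return. The only point is to show text being annotated before it reaches an LLM.

```python
import re

# Hypothetical gazetteer: surface form -> (entity type, canonical name).
# A real tagger would supply this; these entries come from the example above.
GAZETTEER = {
    "Broken Tooth": ("PERSON", "Wan Kuok-koi"),
    "Wan Kuok-koi": ("PERSON", "Wan Kuok-koi"),
    "Macau": ("PLACE", "Macau"),
}


def annotate(text, gazetteer=GAZETTEER):
    """Wrap each known surface form in an inline tag (assumed tag format)."""
    # Longest names first so a longer name wins over a shorter one it contains.
    names = sorted(gazetteer, key=len, reverse=True)
    alternatives = "|".join(re.escape(name) for name in names)
    # The lookarounds keep "Macau" from matching inside a longer word.
    pattern = re.compile(r"(?<!\w)(?:" + alternatives + r")(?!\w)")

    def tag(match):
        entity_type, canonical = gazetteer[match.group(0)]
        return f'<{entity_type} id="{canonical}">{match.group(0)}</{entity_type}>'

    return pattern.sub(tag, text)


def build_prompt(text):
    """Prepend an instruction so the LLM receives pre-tagged entities."""
    return "Entities are pre-tagged. Summarize the passage.\n\n" + annotate(text)


print(build_prompt("Broken Tooth was born in Macau."))
```

Because the alias and the legal name carry the same `id`, a downstream system could link both mentions to one knowledge graph node without asking the LLM to work that out.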

The output of the Bitext system can generate tagged entity lists and metadata for content libraries or dictionary applications. The NAMER output can integrate directly into existing controlled vocabularies, indexes, or knowledge graphs. Also, NAMER makes it possible to maintain separate files of entities for on-demand access by analysts, investigators, or other text analytics software.

By grouping name variants, Bitext NAMER streamlines search queries, enhancing document retrieval and linking entities to knowledge graphs. This creates a tailored “semantic layer” that enriches organizational systems with precision and efficiency.
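A rough sketch of how grouping name variants can streamline a search query follows. The `VARIANTS` table, the spelling “Wan Kuok Koi,” and the quoted `OR` query syntax are illustrative assumptions, not output from NAMER or the syntax of any particular search engine.

```python
from collections import defaultdict

# Hypothetical variant table: (variant, canonical name).
VARIANTS = [
    ("Broken Tooth", "Wan Kuok-koi"),
    ("Wan Kuok Koi", "Wan Kuok-koi"),  # assumed alternate spelling
    ("Wan Kuok-koi", "Wan Kuok-koi"),
]


def build_groups(pairs):
    """Group every variant, and the canonical name itself, under the canonical name."""
    groups = defaultdict(set)
    for variant, canonical in pairs:
        groups[canonical].update({variant, canonical})
    return groups


def expand_query(term, pairs=VARIANTS):
    """Turn one name into an OR query over all of its known variants."""
    groups = build_groups(pairs)
    # Case-insensitive lookup from any variant to its canonical name.
    lookup = {v.casefold(): c for c, vs in groups.items() for v in vs}
    canonical = lookup.get(term.casefold())
    if canonical is None:
        return f'"{term}"'  # unknown name: search it as typed
    return " OR ".join(f'"{v}"' for v in sorted(groups[canonical]))


print(expand_query("broken tooth"))
```

An analyst who types only the nickname gets documents that use the legal name as well, which is the retrieval benefit the paragraph above describes.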

For more information about the unique NAMER system, contact Bitext via the firm’s Web site at www.bitext.com.

Stephen E Arnold, January 14, 2025

FOGINT: Big Takedown Coincident with Durov Detainment. Coincidence?

December 19, 2024

This blog post is the work of an authentic dinobaby. No smart software was used.

In recent years, global authorities have taken down several encrypted communication channels. Exclu and Ghost, for example. Will a more fragmented approach keep the authorities away? Apparently not. A Europol press release announces, “International Operation Takes Down Another Encrypted Messaging Service Used by Criminals.” The write-up notes:

“Criminals, in response to the disruptions of their messaging services, have been turning to a variety of less-established or custom-built communication tools that offer varying degrees of security and anonymity. While the new fragmented landscape poses challenges for law enforcement, the takedown of established communication channels shows that authorities are on top of the latest technologies that criminals use.”

Case in point: After a three-year investigation, a multi-national law enforcement team just took down MATRIX. The service, “by criminals for criminals,” was discovered in 2021 on a convicted murderer’s phone. It was a sophisticated tool bad actors must be sad to lose. We learn:

“It was soon clear that the infrastructure of this platform was technically more complex than previous platforms such as Sky ECC and EncroChat. The founders were convinced that the service was superior and more secure than previous applications used by criminals. Users were only able to join the service if they received an invitation. The infrastructure to run MATRIX consisted of more than 40 servers in several countries with important servers found in France and Germany. Cooperation between the Dutch and French authorities started through a JIT set up at Eurojust. By using innovative technology, the authorities were able to intercept the messaging service and monitor the activity on the service for three months. More than 2.3 million messages in 33 languages were intercepted and deciphered during the investigation. The messages that were intercepted are linked to serious crimes such as international drug trafficking, arms trafficking, and money laundering. Actions to take down the service and pursue serious criminals happened on 3 December in four countries.”

Those four countries are France, Spain, Lithuania, and Germany, with an assist by the Netherlands. Europol highlights the importance of international cooperation in fighting organized crime. Is this the key to pulling ahead in the encryption arms race?

Cynthia Murrell, December 19, 2024

FOGINT: Telegram Steps Up Its Cooperation with Law Enforcement

December 12, 2024

This short item is the work of the dinobaby. The “fog” is from Gifr.com.

Engadget, an online news service, reported “Telegram Finally Takes Action to Remove CSAM from Its Platform.” France picked up Telegram founder Pavel Durov and explained via his attorney how the prison system works in the country. Mr. Durov, not yet in prison, posted an alleged 5 million euros with the understanding he could not leave the country. According to Engadget, Mr. Durov is further modifying his attitude toward “free speech” and “freedom.”

The article states:

Telegram is taking a significant step to reduce child sexual abuse material (CSAM), partnering with the International Watch Foundation (IWF) four months after the former’s founder and CEO Pavel Durov was arrested. The French authorities issued 12 charges against Durov in August, including complicity in “distributing, offering or making available pornographic images of minors, in an organized group” and “possessing pornographic images of minors.”

For those not familiar with the IWF, properly the Internet Watch Foundation, the organization serves as a “hub” for law enforcement and for companies whose services are used as intermediaries by those engaged in buying, leasing, selling, or exchanging illicit images or videos of children. Since 2013, Telegram has mostly been obstinate when asked to cooperate with investigators. The company has waved its hands and insisted that it is not into curtailing free speech.

After the French snagged Mr. Durov, he showed a sudden interest in cooperating with authorities. The Engadget report says:

Telegram has taken other steps since Durov’s arrest, announcing in September that it would hand over IP addresses and phone numbers in legal requests — something it fought in the past. Durov must remain in France for the foreseeable future.

What’s Telegram going to do after releasing handles, phone numbers, and possibly some of that log data allegedly held in servers available to the company? The answer is, “Telegram is pursuing its next big thing.” Engadget does not ask, “What’s Telegram’s next act?” Surprisingly, a preview of Telegram’s future is unfolding in TON Foundation training sessions in Vancouver, Istanbul, and numerous other locations.

But doing the “real” work of taking that next step is not in the cards for most Telegram watchers. The “finally” is simply bringing down the curtain on Telegram’s first act. More acts are already on stage.

Stephen E Arnold, December 12, 2024

Dark Web: Clever and Cute Security Innovations

December 11, 2024

This write up was created by an actual 80-year-old dinobaby. If there is art, assume that smart software was involved. Just a tip.

I am not sure how the essay / technical analysis “The Fascinating Security Model of Dark Web Marketplaces” will diffuse within the cyber security community. I want to highlight what strikes me as a useful analysis and provide a brief, high-level summary of the points which my team and I found interesting. We have not focused on the Dark Web since we published Dark Web Notebook, a complement to my law enforcement training sessions about the Dark Web in the period from 2013 to 2016.

This write up does a good job of explaining the use of open source privacy tools like Pretty Good Privacy and its two-factor authentication. The write up walks through a “no JavaScript” approach to functions on the Dark Web site. The references to dynamic domain name operations are helpful as well.

The first observation I would offer is that the security mechanisms in use on the Dark Web site analyzed in the cited article have matured and, in the opinion of my research team, advanced to thwart some of the techniques used to track and take down the type of sites hosted by Cyberbunker in Germany. This is, alas, inevitable, and it makes the job of investigators more difficult.

The second observation is that this particular site makes use of distributed services. Certain hosting providers now offer self-managed virtual servers and profess an inability to know what’s happening on their physical machines. These providers “comply” and then say, “If you try to access the virtual machines, they can fail. Since we don’t manage them, you guys will have to figure out how to get them back up.” Cute and effective.

The third observation is that the hoops a potential drug customer has to jump through are likely to make a person with an addled brain get clean and then come back and try again. On the other hand, the Captcha might baffle a sober user or investigator as well. Cute and annoying.

The essay is useful and worth reading because it underscores the value of fluid online infrastructures for bad actors.

Stephen E Arnold, December 11, 2024

Explaining Graykey: Helpful or Harmful for Law Enforcement?

November 25, 2024

I am not keen on making some “secrets” publicly available. Those keen on channeling Edward Snowden may have glory words to describe their activities. I take a different view: Some types of information should be proprietary and made known only to those engaged in trying to enforce applicable laws. That said, I want to point to a Reddit.com post about “privacy.” The trigger for the post is an article behind a paywall about a device used to extract information from a mobile phone.

The Reddit post provides a link to the source document “Leaked Documents Show What Phones Secretive Tech ‘Graykey’ Can Unlock”. That write up is typical of non-LE and intel professional reactions to certain types of specialized software and hardware.

What I want to mention is that the Reddit post provides some supplementary information which is not widely known and generally not bandied about outside of certain professional groups. You can find this post, the links to the additional information, and some commentary to disambiguate the jargon used to keep chatter about specialized products and services within a “community.” Here’s the link to the Reddit information: https://shorturl.at/KsDwc

To be frank, I miss the good old days when information of a sensitive nature did not become course material for a computer science and programming class or a road map for outfits competing with US firms. But I am a dinobaby. Believe me, no one cares about my old-timey thoughts.

Stephen E Arnold, November 25, 2024

Entity Extraction: Not As Simple As Some Vendors Say

November 19, 2024

No smart software. Just a dumb dinobaby. Oh, the art? Yeah, MidJourney.

Most of the systems incorporating entity extraction have been trained to recognize the names of simple entities, mostly by relying on capitalization. An “entity” can be a person’s name, the name of an organization, or a location like Niagara Falls, near Buffalo, New York. The river “Niagara” when bound to “Falls” means a geologic feature. The “Buffalo” is not a Bubalina; it is a delightful city with even more pleasing weather.

The same entity extraction process has to work for specialized software used by law enforcement, intelligence agencies, and legal professionals. Compared to entity extraction for consumer-facing applications like Google’s Web search or Apple Maps, the specialized software vendors have to contend with:

- Gang slang in English and other languages; for example, “bumble bee.” This is not an insect; it is a nickname for the Latin Kings.

- Organizations operating in Lao PDR whose names have been converted to English words, like Zhao Wei’s Kings Romans Casino. Mr. Zhao has allegedly been involved in gambling activities in a poorly regulated region in the Golden Triangle.

- Individuals who use aliases like maestrolive, james44123, or ahmed2004. There are either “real” people behind the handles or they are sock puppets (fake identities).

Why do these variations create a challenge? To locate a business, the content processing system has to identify the entity the user seeks. For an investigator, chopping through a thicket of language and idiosyncratic personas is the difference between making progress and hitting a dead end. Automated entity extraction systems can work using smart software, a carefully crafted and constantly updated controlled vocabulary list, or a hybrid system.
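A small Python sketch shows why capitalization alone falls short and how a controlled vocabulary helps. Everything in it is an assumption for illustration: the two vocabulary entries come from the examples in this post, and the handle pattern (lowercase letters followed by digits) is deliberately narrow. It catches james44123 but would miss maestrolive, which is exactly the sort of gap a constantly updated list has to fill.

```python
import re

# Illustrative controlled vocabulary: phrase -> (entity type, canonical name).
CONTROLLED_VOCAB = {
    "bumble bee": ("ORGANIZATION", "Latin Kings"),
    "kings romans casino": ("ORGANIZATION", "Kings Romans Casino"),
}

# Assumed handle shape: four or more lowercase letters, then two or more digits.
HANDLE = re.compile(r"\b[a-z]{4,}\d{2,}\b")

# Naive rule: one or more capitalized words in a row.
CAPITALIZED = re.compile(r"\b[A-Z][a-z]+(?: [A-Z][a-z]+)*\b")


def capitalization_only(text):
    """Baseline extractor that trusts capitalization and nothing else."""
    return CAPITALIZED.findall(text)


def hybrid(text):
    """Controlled vocabulary lookup plus a pattern for handle-style aliases."""
    found = []
    lowered = text.casefold()
    for phrase, (entity_type, canonical) in CONTROLLED_VOCAB.items():
        if phrase in lowered:
            found.append((entity_type, canonical))
    for handle in HANDLE.findall(text):
        found.append(("HANDLE", handle))
    return found


sample = "james44123 said the bumble bee crew met near Niagara Falls."
print(capitalization_only(sample))
print(hybrid(sample))
```

The baseline finds only the place name. The hybrid pass surfaces the gang nickname and the handle, the two items an investigator would care about most in that sentence.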

Let’s take an example which confronts a person looking for information about the Ku Group. This is a financial services firm responsible for KuCoin. The Ku Group is interesting because it has been found guilty in the US of certain financial activities in the State of New York and by the US Securities & Exchange Commission.

Pavel Durov and Telegram: In the Spotlight Again

October 21, 2024

No smart software used for the write up. The art, however, is a different story.

Several news sources reported that the entrepreneurial Pavel Durov, the founder of Telegram, has found a way to grab headlines. Mr. Durov has been enjoying a respite in France, allegedly due to what the French authorities view as a failure to cooperate with law enforcement. After his detainment, Mr. Durov signaled that he has cooperated and would continue to cooperate with investigators in certain matters.

A person under close scrutiny may find that the experience can be unnerving. The French are excellent intelligence operators. I wonder how Mr. Durov would hold up under the ministrations of Israeli and US investigators. Thanks, ChatGPT, you produced a usable cartoon with only one annoying suggestion unrelated to my prompt. Good enough.

Mr. Durov may have an opportunity to demonstrate his willingness to assist authorities in their investigation into documents published on the Telegram Messenger service. According to such sources as Business Insider and South China Morning Post, among others, the Telegram channel Middle East Spectator dumped information about Israel’s alleged plans to respond to Iran’s October 1, 2024, missile attack.

The South China Morning Post reported:

The channel for the Middle East Spectator, which describes itself as an “open-source news aggregator” independent of any government, said in a statement that it had “received, through an anonymous source on Telegram who refused to identify himself, two highly classified US intelligence documents, regarding preparations by the Zionist regime for an attack on the Islamic Republic of Iran”. The Middle East Spectator said in its posted statement that it could not verify the authenticity of the documents.

Let’s look outside this particular document issue. Telegram’s mostly moderation-free approach to the content posted, distributed, and pushed via the Telegram platform is likely to come under more scrutiny. Some investigators in North America view Mr. Durov’s system as a less pressing issue than the content on other social media and messaging services.

This document matter may bring increased attention to Mr. Durov, his brother (allegedly with the intelligence of two PhDs), the 60 to 80 engineers maintaining the platform, and its burgeoning ancillary interests in crypto. Mr. Durov has some fancy dancing to do. Once he is able to travel, he may find that additional actions will be considered to trim the wings of the Open Network Foundation, the newish TON Social service, and the “almost anything goes” approach to the content generated and disseminated by Telegram’s almost one billion users.

From a practical point of view, a failure to exercise judgment about what is allowed on Messenger may derail Telegram’s attempts to become more of a mover and shaker in the world of crypto currency. French actions toward Mr. Durov should have alerted the wizardly innovator that governments can and will take action to protect their interests.

Now Mr. Durov is placing himself, his colleagues, and his platform under more scrutiny. Close scrutiny may reveal nothing out of the ordinary. On the other hand, when one pays close attention to a person or an organization, new and interesting facts may be identified. What happens then? Often something surprising.

Will Mr. Durov get that message?

Stephen E Arnold, October 21, 2024

Surveillance Watch Maps the Surveillance App Ecosystem

October 1, 2024

Here is an interesting resource: Surveillance Watch compiles information about surveillance tech firms, organizations that fund them, and the regions in which they are said to operate. The lists, compiled from contributions by visitors to the site, are not comprehensive. But they are full of useful information. The About page states:

“Surveillance technology and spyware are being used to target and suppress journalists, dissidents, and human rights advocates everywhere. Surveillance Watch is an interactive map that documents the hidden connections within the opaque surveillance industry. Founded by privacy advocates, most of whom were personally harmed by surveillance tech, our mission is to shed light on the companies profiting from this exploitation with significant risk to our lives. By mapping out the intricate web of surveillance companies, their subsidiaries, partners, and financial backers, we hope to expose the enablers fueling this industry’s extensive rights violations, ensuring they cannot evade accountability for being complicit in this abuse. Surveillance Watch is a community-driven initiative, and we rely on submissions from individuals passionate about protecting privacy and human rights.”

Yes, the site makes it easy to contribute information to its roundup. Anonymously, if one desires. The site’s information is divided into three alphabetical lists: Surveilling Entities, Known Targets, and Funding Organizations. As an example, here is what the service says about safeXai (formerly Banjo):

“safeXai is the entity that has quietly resumed the operations of Banjo, a digital surveillance company whose founder, Damien Patton, was a former Ku Klux Klan member who’d participated in a 1990 drive-by shooting of a synagogue near Nashville, Tennessee. Banjo developed real-time surveillance technology that monitored social media, traffic cameras, satellites, and other sources to detect and report on events as they unfolded. In Utah, Banjo’s technology was used by law enforcement agencies.”

We notice there are no substantive links which could have been included, like ones to footage of the safeXai surveillance video service or the firm’s remarkable body of patents. In our view, these patents represent an X-ray look at what most firms call artificial intelligence.

A few other names we recognize are IBM, Palantir, and Pegasus owner NSO Group. See the site for many more. The Known Targets page lists countries that, when clicked, list surveilling entities known or believed to be operating there. Entries on the Funding Organizations page include a brief description of each organization with a clickable list of surveillance apps it is known or believed to fund at the bottom. It is not clear how the site vets its entries, but the submission form does include boxes for supporting URL(s) and any files to upload. It also asks whether one consents to be contacted for more information.

Cynthia Murrell, October 1, 2024

Zapping the Ghost Comms Service

September 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Europol generated a news release titled “Global Coalition Takes Down New Criminal Communication Platform.” One would think that bad actors would have learned a lesson from the ANOM operation and from the take downs of other specialized communication services purpose built for bad actors. The Europol announcement explains:

Europol and Eurojust, together with law enforcement and judicial authorities from around the world, have successfully dismantled an encrypted communication platform that was established to facilitate serious and organized crime perpetrated by dangerous criminal networks operating on a global scale. The platform, known as Ghost, was used as a tool to carry out a wide range of criminal activities, including large-scale drug trafficking, money laundering, instances of extreme violence and other forms of serious and organized crime.

Eurojust, as you probably know, is the EU’s agency responsible for dealing with judicial cooperation in criminal matters among agencies. The entity was set up in 2002 and concerns itself with serious crime and with cutting through the red tape to bring alleged bad actors to court. The dynamic of Europol and Eurojust is to investigate and prosecute with efficiency.

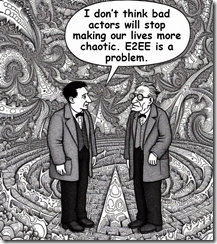

Two cyber investigators recognize that the bad actors can exploit the information environment to create more E2EE systems. Thanks, MSFT Copilot. You do a reasonable job of illustrating chaos. Good enough.

The marketing-oriented name of the system is or rather was Ghost. Here’s how Europol describes the system:

Users could purchase the tool without declaring any personal information. The solution used three encryption standards and offered the option to send a message followed by a specific code which would result in the self-destruction of all messages on the target phone. This allowed criminal networks to communicate securely, evade detection, counter forensic measures, and coordinate their illegal operations across borders. Worldwide, several thousand people used the tool, which has its own infrastructure and applications with a network of resellers based in several countries. On a global scale, around one thousand messages are being exchanged each day via Ghost.

With law enforcement compromising certain bad actor-centric systems like Ghost, what are the consequences of these successful shutdowns? Here’s what Europol says:

The encrypted communication landscape has become increasingly fragmented as a result of recent law enforcement actions targeting platforms used by criminal networks. Following these operations, numerous once-popular encrypted services have been shut down or disrupted, leading to a splintering of the market. Criminal actors, in response, are now turning to a variety of less-established or custom-built communication tools that offer varying degrees of security and anonymity. By doing so, they seek new technical solutions and also utilize popular communication applications to diversify their methods. This strategy helps these actors avoid exposing their entire criminal operations and networks on a single platform, thereby mitigating the risk of interception. Consequently, the landscape of encrypted communications remains highly dynamic and segmented, posing ongoing challenges for law enforcement.

Nevertheless, some entities want to create secure apps designed to allow criminal behaviors to thrive. These range from “me too” systems like one allegedly in development by a known bad actor to knock offs of sophisticated hardware-software systems which operate within the public Internet. Are bad actors more innovative than the whiz kids at the largest high-technology companies? Nope. Based on my team’s research, notable sources of ideas to create problems for law enforcement include:

- Scanning patent applications for nifty ideas. Modern patent search systems make the identification of novel ideas reasonably straightforward

- Hiring one or more university staff to identify and get students to develop certain code components as part of a normal class project

- Using open source methods and coming up with ad hoc ways to obfuscate what’s being done. (Hats off to the open source folks, of course.)

- Buying technology from middle “men” who won’t talk about their customers. (Is that too much information, Mr. Oligarch’s tech expert?)

Like much in today’s digital world or what I call the datasphere, each successful takedown provides limited respite. The global cat-and-mouse game between government authorities and bad actors is what some at the Santa Fe Institute might call “emergent behavior” at the boundary between entropy and chaos. That’s a wonderful insight despite suggesting another consequence of living at the edge of chaos.

Stephen E Arnold, September 23, 2024
