Professor Marcus, You Missed One Point about the Apple Reasoning Paper

June 16, 2025

An opinion essay written by a dinobaby who did not rely on smart software but for the so-so cartoon.

The intern-fueled Apple academic paper titled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity” has caused a stir. An interesting analysis of the responses to this tour de force is “Seven Replies to the Viral Apple Reasoning Paper – and Why They Fall Short.” Professor Gary Marcus in his analysis identifies categories of reactions to the Apple document.

In my opinion, these are, and I paraphrase with abandon:

- Humans struggle with complex problems; software does too

- Smart software needs lots of computation to deliver a good enough output that doesn’t cost too much

- The paper includes an intern’s work because recycling and cheap labor are useful to busy people

- Bigger models are better because that’s what people do in Texas

- Systems can solve some types of problems and fail at others

- Limited examples because the examples require real effort

- The paper tells a reader what is already known: Smart software can be problematic because it is probabilistic, not intelligent.

I look at the Apple paper from a different point of view.

For more than a year, the challenge for Apple has been to make smart software, with its current limitations, work reasonably well. Apple’s innovation in smart software has been the somewhat flawed Siri (sort of long in the tooth) and the formulation of a snappy slogan, “Apple Intelligence.”

This individual is holding a “cover your a**” document. Thanks, You.com. Good enough given your constraints, guard rails, and internal scripts.

The job of a commercial enterprise is to create something useful and reasonably clever to pull users to a product. Apple failed. Other companies have rolled out products making use of smart software as it currently is. One of the companies with a reasonably good product is OpenAI’s ChatGPT. Another is Perplexity.

Apple is not in this part of the smart software game. Apple has failed to use “as is” software in a way that adds some zing to the firm’s existing products. Apple has failed, just as it failed with the weird goggles, its push into streaming video, and the innovations for the “new” iPhone. Changing case colors and altering an interface to look sort of like Microsoft’s see-through approach are not game changers. Labeling software by the year of release does not make me want to upgrade.

What is missing from the analysis of the really important paper that says, “Hey, this smart software has big problems. The whole house of LLM cards is wobbling in the wind”?

The answer is, “The paper is a marketing play.” The best way to explain why Apple has not rolled out AI is to say that the current technology is terrible. Therefore, we need more time to figure out how to do AI well with crappy tools and methods not invented at Apple.

I see the paper as pure marketing. The timing of the paper’s release is marketing. The weird colors of the charts are marketing. The hype about the paper itself is marketing.

Anyone who has used some of the smart software tools knows one thing: The systems make up stuff. Everyone wants the “next big thing.” I think some of the LLM capabilities can be quite useful. In the coming months and years, smart software will enable useful functions beyond giving students a painless way to cheat, consultants a quick way to appear smart in a very short time, and entrepreneurs a way to vibe code their way into a job.

Apple has had one job: Find a way to use the available technology to deliver something novel and useful to its customers. It has failed. The academic paper is a “cover your a**” memo more suitable for a scared 35-year-old middle manager in an advertising agency. Keep in mind that I am no professor. I am a dinobaby. In my world, an “F” is an “F.” Apple’s viral paper is an excuse for not delivering something useful with Apple Intelligence. The company has delivered an illustration of why there is no Apple smart TV or Apple smart vehicle.

The paper is marketing, and it is just okay marketing.

Stephen E Arnold, June 16, 2025

A 30-Page Explanation from Tim Apple: AI Is Not Too Good

June 9, 2025

I suppose I should use smart software. But, no, I prefer the inept, flawed, humanoid way. Go figure. Then say to yourself, “He’s a dinobaby.”

Gary Marcus, like other experts, is putting Apple into an old-fashioned peeler. You can get his insights in “A Knock Out Blow for LLMs.” I have a different angle on the Apple LLM explainer. Here we go:

Many years ago I had some minor role to play in the commercial online database sector. One of our products seemed to be reasonably good at summarizing business and technical journal articles, academic flights of fancy, and some just straight out crazy write ups from Harvard Business Review-type publications.

I read a 30-page “research” paper authored by what appear to be some of the “aw, shucks” folks at Apple. The write up is located on Apple’s content delivery network, of course. No run-of-the-mill service is up to those high Apple standards of Tim and his orchard keepers. The paper is authored by Parshin Shojaee (who is identified as an intern who made an equal contribution to the write up), Iman Mirzadeh (Apple), Keivan Alizadeh (Apple), Maxwell Horton (Apple), Samy Bengio (Apple), and Mehrdad Farajtabar (Apple). Okay, this seems to be a very academic lineup with an intern who was doing “equal contribution” along with the high-powered horticulturists laboring on the write up.

The title is interesting: “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity.” In a nutshell, the paper tries to make clear that current large language models deliver inconsistent results and cannot reason in a reliable manner. When I read this title, my mind conjured up an image of AI products and services delivering on-point outputs to iPhone users. That was the “illusion” of a large, ageing company trying to keep pace with technology and applications from its competitors, the upstarts, and the nation-states doing interesting things with the admittedly-flawed large language models. But those outside the Apple orchard have been delivering something.

My reaction to this document and its easy-to-read pastel charts, like the one on page 30, goes like this:

One of my addled professors told me, “Also, end on a strong point. Be clear, concise, and issue a call to action.” Apple obviously believes that these charts deliver exactly what my professor told me.

I interpreted the paper differently; to wit:

- Apple announced “Apple Intelligence” and, for a year or more, failed to ship what it had announced

- Siri still sucks from my point of view

- Apple reorganized its smart software team in a significant way. Why? See the first two items.

- Apple runs the risk of having its many iPhone users just skip “Apple Intelligence” and maybe not upgrade due to the dalliance with China, the tariff issue, and the reality of assuming that what worked in the past will be just super duper in the future.

Sorry, gardeners. A 30-page paper is not going to change reality. Apple is a big outfit. It seems to be struggling. No Apple car. An increasingly wonky browser. An approach to “settings” almost as bad as Microsoft’s. And much, much more. Coming soon will be a new iOS numbering system and more!

That’s what happens when interns contribute as much as full-time equivalents and employees. The result is a paper. Okay, good enough.

But, sorry, Tim Apple: Papers, pastel charts, and complaining about smart software will not change a failure to match marketing with what users can access.

Stephen E Arnold, June 9, 2025

Is AI Experiencing an Enough Already Moment?

June 4, 2025

Consumers are fatigued from AI even though implementation of the technology is still new. Why are they tired? The Soatok Blog digs into that answer in the post: “Tech Companies Apparently Do Not Understand Why We Dislike AI – Dhole Moments.” Big Tech and other businesses don’t understand that their customers hate AI.

Soatok took a survey that asked for opinions about AI, including questions about a “potential AI uprising.” Soatok is abundantly clear that he’s not afraid of a robot uprising or the “Singularity.” He has other reasons to worry about AI:

“I’m concerned about the kind of antisocial behaviors that AI will enable.

• Coordinated inauthentic behavior

• Misinformation

• Nonconsensual pornography

• Displacing entire industries without a viable replacement for their income

In aggregate, people’s behavior are largely the result of the incentive structures they live within.

But there is a feedback loop: If you change the incentive structures, people’s behaviors will certainly change, but subsequently so, too, will those incentive structures. If you do not understand people, you will fail to understand the harms that AI will unleash on the world. Distressingly, the people most passionate about AI often express a not-so-subtle disdain for humanity.”

Soatok is describing toxic human behaviors. These include toxic masculinity and femininity, though mostly the former. He aptly describes them:

“I’m talking about the kind of X users that dislike experts so much that they will ask Grok to fact check every statement a person makes. I’m also talking about the kind of “generative AI” fanboys that treat artists like garbage while claiming that AI has finally “democratized” the creative process.”

Insert a shudder here.

Soatok goes on to explain how AI can be implemented in encrypted software that would collect user information. He paints a scenario in which LLMs collect user data that is not protected by the Fourth and Fifth Amendments. AI could also create psychological profiles of users that incorrectly identify them as psychotic terrorists.

Insert even more shuddering.

Soatok advises Big Tech to make AI optional and not the first out-of-the-box solution. He wants users to have the choice of engaging with AI, even if it means lower user metrics and less data fed back to Big Tech. Is Soatok hallucinating like everyone’s favorite over-hyped technology? Let’s ask IBM Watson. Oh, wait.

Whitney Grace, June 4, 2025

NSO Group: When Marketing and Confidence Mix with Specialized Software

May 13, 2025

No AI, just the dinobaby expressing his opinions to Zellenials.

Some specialized software must remain known only to a small number of professionals specifically involved in work related to national security. This is a dinobaby view, and I am not going to be swayed by “information wants to be free” arguments or assertions about the need to generate revenue to make the investors “whole.” Abandoning secrecy and common sense for glittering generalities and MBA mumbo jumbo is ill advised.

I read “Meta Wins $168 Million in Damages from Israeli Cyberintel Firm in Whatsapp Spyware Scandal.” The write up reports:

Meta won nearly $168 million in damages Tuesday from Israeli cyberintelligence company NSO Group, capping more than five years of litigation over a May 2019 attack that downloaded spyware on more than 1,400 WhatsApp users’ phones.

The decision is likely to be appealed, so “won” is not quite accurate. What is interesting is this paragraph:

[Yaron] Shohat [NSO’s CEO] declined an interview outside the Ron V. Dellums Federal Courthouse, where the court proceedings were held.

From my point of view, fewer trade shows, less marketing, and a lower profile should be action items for Mr. Shohat, the NSO Group’s founders, and the firm’s lobbyists.

I watched as NSO Group became the poster child for specialized software. I was not happy as the firm’s systems and methods found their way into publicly accessible Web sites. I reacted negatively as other specialized software firms (these I will not identify) began describing their technology as similar to NSO Group’s.

The desperation of cyber intelligence, specialized software firms, and — yes — trade show operators is behind the crazed idea of making certain information widely available. I worked in the nuclear industry in the early 1970s. From Day One on the job, the message was, “Don’t talk.” I then shifted to a blue chip consulting firm working on a wide range of projects. From Day One on that job, the message was, “Don’t talk.” When I set up my own specialized research firm, the message I conveyed to my team members was, “Don’t talk.”

Then it seemed that everyone wanted to “talk.” Marketing, speeches, brochures, even YouTube videos distributed information that was never intended to be made widely available. Jazzy pitches that used terms like “zero day vulnerability” and other crazy sales oriented marketing lingo turned many people without operating context and quite specific knowledge into specialized software “experts.”

I see this leakage of specialized software information in the OSINT blurbs on LinkedIn. I see it in social media posts by people with weird online handles like those used in Top Gun films. I see it when I go to a general purpose knowledge management meeting.

Now the specialized software industry is visible. In my opinion, that is not a good thing. I hope Mr. Shohat and others in the specialized software field continue the “decline to comment” approach. Knock off the PR. Focus on the entities authorized to use specialized software. The field is not for computer whiz kids, eGame players, and wanna be intelligence officers.

Do your job. Don’t talk. Do I think these marketing oriented 21st century specialized software companies will change their behavior? Answer: Oh, sure.

PS. I hope the backstory for Facebook / Meta’s interest in specialized software becomes part of a public court record. I am curious whether what I have learned matches up with the court statements. My hunch is that some social media executives have selective memories. That’s a useful skill, I have heard.

Stephen E Arnold, May 13, 2025

Big Numbers and Bad Output: Is This the Google AI Story

May 13, 2025

No AI. Just a dinobaby who gets revved up with buzzwords and baloney.

Alphabet Google reported financials that made stakeholders happy. Big numbers were thrown about. I did not know that 1.5 billion people used Google’s AI Overviews. Well, “use” might be misleading. I think the word might be “see” or “were shown” AI Overviews. The key point is that Google is making money despite its legal hassles and its ongoing battle with infrastructure costs.

I was, therefore, very surprised to read “Google’s AI Overviews Explain Made-Up Idioms With Confident Nonsense.” If the information in the write up is accurate, the factoid suggests that a lot of people may be getting bogus information. If true, what does this suggest about Alphabet Google?

The Cnet article says:

…the author and screenwriter Meaghan Wilson Anastasios shared what happened when she searched “peanut butter platform heels.” Google returned a result referencing a (not real) scientific experiment in which peanut butter was used to demonstrate the creation of diamonds under high pressure.

Those Nobel prize winners, brilliant Googlers, and long-time wizards like Jeff Dean seem to struggle with simple things. Remember the suggestion to use glue to keep cheese on pizza, offered before Google’s AI improved?

The article adds this comment from a non-Google wizard:

“They [large language models] are designed to generate fluent, plausible-sounding responses, even when the input is completely nonsensical,” said Yafang Li, assistant professor at the Fogelman College of Business and Economics at the University of Memphis. “They are not trained to verify the truth. They are trained to complete the sentence.”

Turning in a lousy essay and showing up should be enough for a C grade. Is that enough for smart software with 1.5 billion users every three or four weeks?

The article reminds its readers:

This phenomenon is an entertaining example of LLMs’ tendency to make stuff up — what the AI world calls “hallucinating.” When a gen AI model hallucinates, it produces information that sounds like it could be plausible or accurate but isn’t rooted in reality.

The outputs can be amusing for a person able to identify goofiness. But a grade school kid? Cnet wants users to craft better prompts.

I want to be 17 years old again and be a movie star. The reality is that I am 80 and look like a very old toad.

AI has to make money for Google. Other services are looking more appealing without the weight of legal judgments and hassles in numerous jurisdictions. But Google has already won the AI race. Its DeepMind unit is curing disease and crushing computational problems. I know these facts because Google’s PR and marketing machine is running at or near its red line.

But the 1.5 billion users potentially receiving made up, wrong, or hallucinatory information seems less than amusing to me.

Stephen E Arnold, May 13, 2025

IBM AI Study: Would The Research Report Get an A in Statistics 202?

May 9, 2025

No AI, just the dinobaby expressing his opinions to Zellenials.

IBM, reinvigorated with its easy-to-use, backwards-compatible, AI-capable mainframe, released a research report about AI. Will these findings cause the new IBM AI-capable mainframe to sell like Jeopardy / Watson “I won” T shirts?

Perhaps.

The report is “Five Mindshifts to Supercharge Business Growth.” It runs a mere 40 pages and requires no more time than configuring your new LinuxONE Emperor 5 mainframe. Well, the report can be absorbed in less time, but the Emperor 5 is a piece of cake as IBM mainframes go.

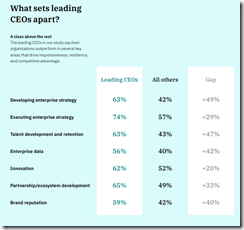

Here are a few of the findings revealed by IBM in its research report:

AI can improve customer “experience.” I think this means that customer service becomes better with AI in it. The study says, “72 percent of those in the sample agree.”

Turbulence becomes opportunity. 100 percent of the IBM marketers assembling the report agree. I am not sure how many CEOs are into this concept; for example, Hollywood motion picture firms or Georgia-Pacific, which closed a factory and told workers not to come in tomorrow.

Here’s a graphic from the IBM study. Do you know what’s missing? I will give you five seconds, as Arvin Haddad, the LA real estate influencer, says in his entertaining YouTube videos:

The answer is, “Increasing revenues, boosting revenues, and keeping stakeholders thrilled with their payoffs.” The items listed by IBM really don’t count, do they?

“Embrace AI-fueled creative destruction.” Yep, another 100 percenter from the IBM team. No supporting data, no verification, and not even a hint of proof that AI-fueled creative destruction is doing much more than improving the lives of lots of venture outfits and some of the US AI leaders. That cash burn could set the forest on fire, couldn’t it? Answer: Of course not.

I must admit I was baffled by this table of data:

Accelerate growth and efficiency goes down with generative AI. (Is Dr. Gary Marcus right?) Enhanced decision making goes up with generative AI. Are the decisions based on verifiable facts or hallucinated outputs? Maybe busy executives in the sample choose to believe what AI outputs because a computer like the Emperor 5 was involved. Maybe “easy” is better than old-fashioned problem solving, which is expensive, slow, and contentious. “Just let AI tell me” is a more modern, streamlined approach to decision making in a time of uncertainty. And the dotted lines? Hmm.

On page 40 of the report, I spotted this factoid. It is tiny and hard to read.

The text says, “50 percent say their organization has disconnected technology due to the pace of recent investments.” I am not exactly sure what this means. Operative words are “disconnected” and “pace of … investments.” I would hazard an interpretation: “Hey, this AI costs too much and the payoff is just not obvious.”

I wish to offer some observations:

- IBM spent some serious money designing this report

- The pagination is in terms of double page spreads, so the “study” plus rah rah consumes about 80 pages if one were to print it out. On my laser printer the pages are illegible for a human, but for the designers, the approach showcases the weird ice cubes, the dotted lines, and allows important factoids to be overlooked

- The combination of data (which strike me as less of a home run for the AI fan and more of a report about AI friction) and flat out marketing razzle dazzle is intriguing. I would have enjoyed sitting in the meetings which locked into this approach. My hunch is that when someone thought about the allegedly valid results and said, “You know these data are sort of anti-AI,” then the others in the meeting said, “We have to convert the study into marketing diamonds.” The result? The double truck, design-infused, data tinged report.

Good work, IBM. The study will definitely sell truckloads of those Emperor 5 mainframes.

Stephen E Arnold, May 9, 2025

Knowledge Management: Hog Wash or Lipstick on a Pig?

May 8, 2025

No AI. Just a dinobaby who gets revved up with buzzwords and baloney.

I no longer work at a blue chip consulting company. Heck, I no longer work anywhere. Years ago, I bailed out to work for a company in fly-over country. The zoom-zoom life of the big city tuckered me out. I know, however, when a consulting pitch is released to the world. I spotted one of these “pay us and we will save you” approaches today (April 25, 2025, 5:42 am US Eastern time).

How pretty can the farmer make these pigs? Thanks, OpenAI, good enough, and I know you have no clue about the preparation for a Poland China at a state fair. It does not look like this.

“How Knowledge Mismanagement is Costing Your Company Millions” is an argument presented to spark the sale of professional services. What’s interesting is that instead of beating the big AI/ML (artificial intelligence and machine learning) drum set, the authors from an outfit called Bloomfire made “knowledge management” the pointy end of the spear. I was never sure what knowledge management meant. One of my colleagues did a lot of knowledge management work, but it looked to me like creating an inventory of content, a directory of who in the organization was a go-to source for certain information, and enterprise search.

This marketing essay asserts:

Executives are laser-focused on optimizing their most valuable assets – people, intellectual property, and proprietary technology. But many overlook one asset that has the power to drive revenue, productivity, and innovation: enterprise knowledge.

To me, placing a value on knowledge is an important process. My own views of what is called “knowledge value” have been shaped by the work of Taichi Sakaiya, specifically his book The Knowledge-Value Revolution. The book was published 40 years ago, and it is a useful analysis of how to make money from knowing “stuff.”

This essay makes the argument that an organization that does not know how to get its information act together will not extract the appropriate value from its information. I learned:

Many organizations regard knowledge as an afterthought rather than a business asset that drives financial performance. Knowledge often remains unaccounted for on balance sheets, hidden in siloed systems, and mismanaged to the point of becoming a liability. Redundant, trivial, conflicting, and outdated information can cloud decision making that fails to deliver key results.

The only problem is that “knowledge” loses value when it moves to a system or an individual where it should not be. Let me offer three examples of the fallacy of silo breaking, financial systems, and “mismanaged” paper or digital information.

- A government contract labeled secret by the agency hiring the commercial enterprise. Forget the sharing. Locking up the “information” is essential for protecting national security and for getting paid. The knowledge management approach is that only authorized personnel know their part of a project. Sharing is not acceptable.

- Financial data, particularly numbers and information about a legal matter or acquisition/divestiture, are definitely high value information. The organization should know that talking about or leaking these data will result in problems, some little, some medium, and some big time.

- Mismanaged information is a very bad and probably high risk thing. Organizations simply do not have the management bandwidth to establish specific guidelines for data acquisition, manipulation, storage and deletion, access controls that work, and computer expertise to use dumb and smart software to keep those data ponies and information critters under control. The reasons are many and range from accountants who are CEOs to activist investor sock puppets, available money and people, and understanding exactly what has to be done to button up an operation.

Not surprisingly, coming up with a phrase like “enterprise intelligence” may sell some consulting work, but the reality of the datasphere is that whatever an organization does in an engagement running several months or a year will not be permanent. The information system in an organization of any size is unstable. How does one extract knowledge value from an inherently volatile information environment? Predicting the weather is difficult. The difficulty of predicting the data ecosystem in an organization is the reason knowledge management as a discipline never went anywhere. Whether it was Harvey Poppel’s paperless office in the 1970s or the wackiness of the system which built a database of people so one could search by what each employee knew, the knowledge management solutions had one winning characteristic: The consultants made money until they didn’t.

The “winners” in knowledge management are big fuzzy outfits; for example, IBM, Microsoft, Oracle, and a few others. Are these companies into knowledge management? I would say, “Sure, because no one knows exactly what it means.” When the cost of getting digital information under control is presented, the thirst for knowledge management decreases just a tad. Well, maybe I should say, “Craters.”

None of these outfits “solve” the problem of knowledge management. They sell software and services. Despite the technology available today, a Microsoft Azure SharePoint and custom Web page system leaked secure knowledge from the Israeli military. I would agree that this is indeed knowledge mismanagement, but the problem is related to system complexity, poor staff training, and the security posture of the vendor, which in this case is Microsoft.

The essay concludes with this statement in the form of a question:

The question is: Where does your company’s knowledge fall on the balance sheet?

Will the sales pitch work? Will CEOs ask, “Where is my company’s knowledge value?” Probably. The essay throws around a lot of numbers. It evokes uncertainty, risk, and maybe fear. It has some clever jargon like knowledge mismanagement.

Net net: Well done. Suitable for praise from a business school faculty member. Is knowledge mismanagement going to deliver knowledge value? Unlikely. Is knowledge (managed or mismanaged) hog wash? It depends on one’s experience with Poland Chinas. Is knowledge (managed or mismanaged) lipstick on a pig? Again, it depends on one’s sense of what’s right for the critters. But the goal is to sell consulting, not clean hogs or pretty up pigs.

Stephen E Arnold, May 8, 2025

IBM: Making the Mainframe Cool Again

May 7, 2025

No AI, just the dinobaby expressing his opinions to Zellenials.

I read a ZDNet Tech Today article titled “IBM Introduces a Mainframe for AI: The LinuxONE Emperor 5.” Years ago, I had three IBM PC 704s, each with the eight drive SCSI chassis and that wonderful ServeRAID software. I suppose I should tell you, I want a LinuxONE Emperor 5 because the capitalization reminds me of the IBM ServeRAID software. Imagine. A mainframe for artificial intelligence. No wonder that IBM stock looks like a winner in 2025.

The write up says:

IBM’s latest mainframe, the LinuxONE Emperor 5, is not your grandpa’s mainframe

The CPU for this puppy is the IBM Telum II processor, a five nanometer successor to the seven nanometer Telum chip announced in 2021. If you want some information about this, navigate to “IBM’s Newest Chip Is More Than Meets the AI.”

The ZDNet write up says:

Manufactured using Samsung’s 5 nm process technology, Telum II features eight high-performance cores running at 5.5GHz, a 40% increase in on-chip cache capacity (with virtual L3 and L4 caches expanded to 360MB and 2.88GB, respectively), and a dedicated, next-generation on-chip AI accelerator capable of up to 24 trillion operations per second (TOPS) — four times the compute power of its predecessor. The new mainframe also supports the IBM Spyre Accelerator for AI users who want the most power.

The ZDNet write up delivers a bumper crop of IBM buzzwords about security, but there is one question that crossed my mind, “What makes this a mainframe?”

The answer, in my opinion, is IBM marketing. The Emperor should be able to run legacy IBM mainframe applications. However, before placing an order, a customer may want to consider:

- Snapping these machines into a modern cloud or hybrid environment might take a bit of work. Never fear, however, IBM consulting can help with this task.

- The reliance on the Telum CPU to do AI might put the system at a performance disadvantage compared with solutions like the Nvidia approach.

- The security pitch is accurate, provided the system is properly configured and set up. Once again, IBM provides the for-fee services necessary to allow Z-llenial IT professionals to sleep easy on weekends.

- Mainframes in the cloud are time sharing oriented; making these work in a hybrid environment can be an interesting technical challenge. Remember: IBM consulting and engineering services can smooth the bumps in the road.

Net net: Interesting system, surprising marketing, and definitely something that will catch a bean counter’s eye.

Stephen E Arnold, May 7, 2025

Microsoft Explains that Its AI Leads to Smart Software Capacity Gap Closing

May 7, 2025

No AI, just a dinobaby watching the world respond to the tech bros.

I read a content marketing write up with two interesting features: [1] New jargon about smart software and [2] a direct response to Google’s increasingly urgent suggestions that Googzilla has won the AI war. The article appears in Venture Beat with the title “Microsoft Just Launched Powerful AI ‘Agents’ That Could Completely Transform Your Workday — And Challenge Google’s Workplace Dominance.” The title suggests that Google is the leader in smart software in the lucrative enterprise market. But isn’t Microsoft’s “flavor” of smart software in products from the much-loved Teams to the lowly Notepad application? Isn’t Word, like Excel, at the top of the heap when it comes to usage in the enterprise?

I will ignore these questions and focus on the lingo in the article. It is different and illustrates what college graduates with a B.A. in modern fiction can craft when assisted by a sprinkling of social science majors and a former journalist or two.

Here are the terms I circled:

- product name: Microsoft 365 Copilot Wave 2 Spring release (wow, snappy)

- integral collaborator (another bound phrase that means agent)

- intelligence abundance (something everyone is talking about)

- frontier firm (forward leaning synonym)

- ‘human-led, agent-operated’ workplaces (yes, humans lead; they are not completely eliminated)

- agent store (yes, another online store. You buy agents; you don’t buy people)

- browser for AI

- brand-compliant images

- capacity gap (I have no idea what this represents)

- agent boss (Is this a Copilot thing?)

- work charts (not images, plans I think)

- Copilot control system (Is this the agent boss thing?)

So what does the write up say? In my dinobaby mind, the answer is, “Everything a member of leadership could want: Fewer employees, more productivity from those who remain on the payroll, software middle managers who don’t complain or demand emotional support from their bosses, and a narrowing of the capacity gap (whatever that is).”

The question is, “Can Google, Microsoft, or OpenAI deliver this type of grand vision?” Answer: Probably the vision can be explained and made magnetic via marketing, PR, and language weaponization, but the current AI technology still has a couple of hurdles to get over without tearing the competitors’ gym shorts:

- Hallucinations and making stuff up

- Copyright issues related to training and slapping the circle C, trademarks, and patents on outputs from these agent bosses and robot workers

- Working without creating a larger attack surface for bad actors armed with AI to exploit (Remember, security, not AI, is supposed to be Job No. 1 at Microsoft. You remember that, right? Right?)

- Killing dolphins, bleaching coral, and choking humans on power plant outputs

- Getting the billions pumped into smart software back in the form of sustainable and growing revenues. (Yes, there is a Santa Claus too.)

Net net: Wow. Your turn, Google. Tell us you have won, cured disease, and crushed another game player. Oh, you will have to use another word for “dominance.” Tip: Let OpenAI suggest some synonyms.

Stephen E Arnold, May 7, 2025

China Tough. US Weak: A Variation of the China Smart. US Dumb Campaign

May 6, 2025

No AI. This old dinobaby just plods along, delighted he is old and this craziness will soon be left behind. What about you?

Even members of my own team think I am confusing information about China’s technology with my dinobaby peculiarities. That may be. Nevertheless, I want to document the story “The Ancient Chinese General Whose Calm During Surgery Is Still Told of Today.” I know it is still told. I just read a modern retelling of the tale in the South China Morning Post. (Hey. Where did that paywall go?)

The basic idea is that a Chinese leader (tough by genetics and mental discipline) had dinner with some colleagues. A physician showed up and told the general, “You have poison in your arm bone.”

The leader allegedly told the physician,

“No big deal. Do the surgery here at the dinner table.”

The leader let the doc chop open his arm, remove the diseased area, and stitch him up. Now here’s the item in the write up I find interesting because it makes clear [a] the leader’s indifference to his colleagues, who might find this surgical procedure an appetite killer, and [b] the inadequate basin for the blood that flowed after the incision was made. Keep in mind that the leader did not need any soporific, and the leader continued to chit chat with his colleagues. I assume the leader’s anecdotes and social skills kept his guests mesmerized.

Here’s the detail from the China Tough. US Weak write up:

“Guan Yu [the tough leader] calmly extended his arm for the doctor to proceed. At the time, he was sitting with fellow generals, eating and drinking together. As the doctor cut into his arm, blood flowed profusely, overflowing the basin meant to catch it. Yet Guan Yu continued to eat meat, drink wine, and chat and laugh as if nothing was happening.”

Yep, blood flowed profusely. Just the extra that sets one meal apart from another. The closest approximation in my experience was arriving at a fast food restaurant after a shooting. Quite a mess and the odor did not make me think of a cheeseburger with ketchup.

I expect that members of my team will complain about this blog post. That’s okay. I am a dinobaby, but I think this variation on the China Smart. US Dumb information flow is interesting. Okay, anyone want to pop over for fried squirrel? We can skin, gut, and fry them in one go. My mouth is watering at the thought. If we are lucky, one of the group will have bagged a deer. Now that’s an opportunity to add some of that hoist, skin, cut, and grill to the evening meal. Guan Yu, the tough Chinese leader, would definitely get with the kitchen work.

Stephen E Arnold, May 6, 2025