Cyber Security Outfit Wants Its Competition to Be Better Fellow Travelers

August 21, 2024

![green dinosaur](https://arnoldit.com/wordpress/wp-content/uploads/2024/08/green-dino_thumb_thumb_thumb_thumb_t2_thumb-1.gif) This essay is the work of a dumb dinobaby. No smart software required.


I read a write up which contains some lingo that is not typical Madison Avenue sales speak. The sort of odd orange newspaper published “CrowdStrike Hits Out at Rivals’ Shady Attacks after Global IT Outage.” [This is a paywalled story, gentle reader. Gone are the days when the orange newspaper was handed out in Midtown Manhattan.] CrowdStrike is a company with interesting origins. The firm has become a player in the cyber security market, and it has been remarkably successful. Microsoft, definitely a Grade A outfit focused on making system administrators’ lives as calm as Lake Paseco on a summer morning, allowed CrowdStrike to interact with the most secure component of its software.

What does the leader of CrowdStrike reveal? Let’s take a quick look at a point or two.

First, I noted this passage from the write up, which seems a bit of a proactive tactic to make sure those affected by the tiny misstep know that software is not perfect. I mean, who knew?

CrowdStrike’s president hit out at “shady” efforts by its cyber security rivals to scare its customers and steal market share in the month since its botched software update sparked a global IT outage. Michael Sentonas told the Financial Times that attempts by competitors to use the July 19 disruption to promote their own products were “misguided”.

I am not sure what misguided means, but I think the idea is that competitors should not try to surf on the little ripples the CrowdStrike misstep caused. A few airline passengers were inconvenienced, sure. But that happens anyway. The people in hospitals whose surgeries were affected seem to be mostly okay in a statistical sense. And those interrupted financial transactions. No big deal. The market is chugging along.

Cyber vendors are ready and eager to help those with a problematic and possibly dangerous vehicle. Thanks, MSFT Copilot. Are your hands full today?

I also circled this passage:

SentinelOne chief executive Tomer Weingarten said the global shutdown was the result of “bad design decisions” and “risky architecture” at CrowdStrike, according to trade magazine CRN. Alex Stamos, SentinelOne’s chief information security officer, warned in a post on LinkedIn it was “dangerous” for CrowdStrike “to claim that any security product could have caused this kind of global outage”.

Yep, dangerous. Other vendors’ software is unlikely to create a CrowdStrike problem. I like this type of assertion. Also, I find the ambulance-chasing approach to closing deals and boosting revenue a normal part of some companies’ marketing. I think one outfit made FUD, or fear, uncertainty, and doubt, a useful wrench in the firm’s deal-closing guide to hitting a sales target. As a dinobaby, I could be hallucinating like some of the smart software and the even smarter top dogs in cyber security companies.

I have to include this passage from the orange outfit’s write up:

Sentonas [a big dog at CrowdStrike], who this month went to Las Vegas to accept the Pwnie Award for Epic Fail at the 2024 security conference Def Con, dismissed fears that CrowdStrike’s market dominance would suffer long-term damage. “I am absolutely sure that we will become a much stronger organization on the back of something that should never have happened,” he said. “A lot of [customers] are saying, actually, you’re going to be the most battle-tested security product in the industry.”

The Def Con crowd was making fun of CrowdStrike for its inconsequential misstep. I assume CrowdStrike’s leadership realizes that the award is like having the “old” Mad Magazine devote a cover to a topic.

My view is that [a] the incident will be forgotten. SolarWinds seems to be fading as an issue in the courts and in some experts’ List of Things to Worry About. [b] Microsoft and CrowdStrike can make marketing hay by pointing out that each company has addressed the “issue.” Life will be better going forward. And, [c] competitors will have to work overtime to cope with a sales retention tactic more powerful than any PowerPoint or PR campaign: discounts, price cuts, and free upgrades to AI-infused systems.

But what about that headline? Will cyber security marketing firms change their sales lingo and tell the truth? Can one fill the tank of a hydrogen-powered vehicle in Eastern Kentucky?

PS. Buying cyber security, real-time alerts, and other gizmos allows an organization to think, “We are secure, right?”

Stephen E Arnold, August 21, 2024

Deep Fake Service?

August 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.


What sets DeepLive apart is that one needs only a single image and the video of the person whose face one wants to replace. The technology, assuming it is functioning as marketed, makes it clear that swapping faces on videos can be done. Will the technology derail often-controversial facial recognition systems?

The Web site provides testimonials and some examples of DeepLive in action.

The company says:

Deep Live Cam is an open-source tool for real-time face swapping and one-click video deepfakes. It can replace faces in videos or images using a single photo, ideal for video production, animation, and various creative projects.

The software is available as open source. The developers say that it includes “ethical safeguards.” But just in case these don’t work, DeepLive posts this message on its Web site:

Built-in checks prevent processing of inappropriate content, ensuring legal and ethical use.

The software has a few drawbacks:

- It is not clear if this particular code base is in an open source repository. There are a number of Deep Live this and thats.

- The “Get Started” button does not link to an active Web page.

- There is minimal information about the “owner” of the software.

Other than that, DeepLive is a good example of a deep fake tool. (An interesting discussion appears in HackerNews and Ars Technica.) If the system is stable and speedy, AI-enabled tools to create content objects for a purpose have taken a step forward. Bad actors are probably going to take note and give the system a spin.

Stephen E Arnold, August 16, 2024

AI Research: A New and Slippery Cost Center for the Google

August 7, 2024

This essay is the work of a dumb humanoid. No smart software required.


A week or so ago, I read “Scaling Exponents Across Parameterizations and Optimizers.” The write up made crystal clear that Google’s DeepMind can cook up a test, throw bodies at it, and generate a bit of “gray” literature. The objective, in my opinion, was three-fold. [1] The paper makes clear that DeepMind is thinking about its smart software’s weaknesses and wants to figure out what to do about them. And [2] DeepMind wants to keep up the flow of PR – Marketing which says, “We are really the Big Dogs in this stuff. Good luck catching up with the DeepMind deep researchers.” Note: The third item appears after the numbers.

I think the paper reveals a third and unintended consequence. This issue is made more tangible by an entity named 152334H and captured in “Calculating the Cost of a Google DeepMind Paper.” (Oh, 152334 is a deep blue black color if anyone cares.)

That write up presents calculations supporting this assertion:

How to burn US$10,000,000 on an arXiv preprint

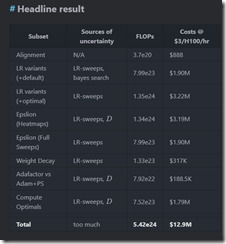

The write up included this table presenting the costs to replicate what the xx Googlers and DeepMinders did to produce the arXiv gray paper:

Notice, please, that the estimate is nearly $13 million. Anyone want to verify the Google results? What am I hearing? Crickets.

The gray paper’s 11 authors had to run the draft by review leadership and a lawyer or two. Once okayed, the document was converted to the arXiv format, and we have the findings to improve our understanding of how much work goes into the achievements of the quantumly supreme Google.

This number of $12 million and change brings me to item [3]. The paper illustrates why Google has a tough time controlling its costs. The paper is not “marketing,” because it is R&D. Some of the expense can be shuffled around. But in my book, the research is overhead, even though it is not counted like the costs of cubicles for administrative assistants. It is science; it is a cost of doing business. Suck it up, you buttercups, in accounting.

The write up illustrates why Google needs as much money as it can possibly grab. These costs, which are not really nice, tidy costs, have to be covered. With more than 150,000 people working on projects, the costs of “gray” papers are a trigger for more costs. The compute time has to be paid for. Hello, cloud customers. The “thinking time” has to be paid for because coming up with great research is open ended and may take weeks, months, or years. One could not rush Einstein. One cannot rush Google wizards in the AI realm either.

The point of this blog post is to create a bit of sympathy for the professionals in Google’s accounting department. Those folks have a tough job figuring out how to cut costs. One cannot prevent 11 people from burning through computer time. The costs just hockey stick. Consequently, the quantumly supreme professionals involved in Google cost control look for simpler, more comprehensible ways to generate sufficient cash to cover what are essentially “surprise” costs. These tools include magic wand behavior over payments to creators, smart commission tables to compensate advertising partners, and demands for more efficiency from Googlers who are not thinking big thoughts about big AI topics.

Net net: Have some awareness of how tough it is to be quantumly supreme. One has to keep the PR and Marketing messaging on track. One has to notch breakthroughs, insights, and innovations. What about that glue on the pizza thing? Answer: What?

Stephen E Arnold, August 7, 2024

Every Cloud Has a Silver Lining: Cyber Security Software from Israel

August 1, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


I wonder whether those lucky Delta passengers have made it to their destinations yet. The CrowdStrike misstep caused a bit of a problem for some systems and for humans too. I saw a notice that CrowdStrike, founded by a Russian I believe, offered $10 to each person troubled by the teeny tiny mistake. Isn’t that too much for something which cannot be blamed on any one person, just on an elusive machine-centric process that had a bad hair day? Why pay anything?

And there is a silver lining to the CrowdStrike cloud! I read “CrowdStrike’s Troubles Open New Doors for Israeli Cyber Companies.” [Note that this source document may be paywalled. Just a heads up, gentle reader.] The write up asserts:

For the Israeli cyber sector, CrowdStrike’s troubles are an opportunity.

Yep, opportunity.

The write up adds:

Friday’s [July 26, 2024] drop in CrowdStrike shares reflects investor frustration and the expectation that potential customers will now turn to competitors, strengthening the position of Israeli companies. This situation may renew interest in smaller startups and local procurement in Israel, given how many institutions were affected by the CrowdStrike debacle.

The write up uses the term platformization, which is a marketing concept of the Palo Alto Networks cyber security firm. The idea is that a typical company is a rat’s nest of cyber security systems. No one is able to keep the features, functions, and flaws of several systems in mind. When something misfires or a tiny stumble occurs, Mr. Chaos, the friend of every cyber security professional, strolls in and asks, “Planning on a fun weekend, folks?”

The sales person makes reality look different. Thanks, Microsoft Copilot. Your marketing would never distort anything, right?

Platformization sounds great. I am not sure that any cyber security magic wand works. My econo-box automobile runs, but I would not say, “It works.” I can ponder this conundrum as I wait for the mobile repair fellow to arrive and as I ride in an Uber back to my office in rural Kentucky. The rides are evidence that “just works” is not exactly accurate. Your mileage may vary.

I want to point out that the write up is a bit of content marketing for Palo Alto Networks. Furthermore, I want to bring up a point which irritates some of my friends; namely, the Israeli cyber security systems, infrastructure, and smart software did not work in October 2023. Sure, there are lots of explanations. But which is more of a problem? CrowdStrike or the ineffectiveness of multiple systems?

Your call. The solution to cyber issues resides in informed professionals, adequate resources like money, and a commitment to security. Assumptions, marketing lingo, and fancy trade show booths simply prove that overpromising and underdelivering is standard operating procedure at this time.

Stephen E Arnold, August 1, 2024

Quantum Supremacy: The PR Race Shames the Google

July 17, 2024

![dinosaur](https://arnoldit.com/wordpress/wp-content/uploads/2024/07/dinosaur30a_thumb_thumb_thumb_thumb_1_thumb_thumb_thumb_thumb_thumb.gif) This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


The quantum computing era exists in research labs and a handful of specialized locations. The qubits are small, but the cooling system and control mechanisms are quite large. An environmentalist learning about the power consumption and climate footprint of a quantum computer might die of heart failure. But most of the worriers are thinking about AI’s power demands. Quantum computing is not a big deal. Yet.

But the title of “quantum supremacy champion” is a big deal. Sure, the community of those energized by the concept may number in the tens of thousands, but quantum computing is a big deal. Google announced a couple of years ago that it was the quantum supremacy champ. I just read “New Quantum Computer Smashes Quantum Supremacy Record by a Factor of 100 — And It Consumes 30,000 Times Less Power.” The main point of the write up in my opinion is:

A new quantum computer has broken a world record in “quantum supremacy,” topping the performance of benchmarking set by Google’s Sycamore machine by 100-fold.

Do I believe this? I am on the fence, but in quantum computing, “my super car is faster than your super car” means something to those in the game. What’s interesting to me is that the PR claim is not twice as fast as Google’s quantum supremacy gizmo. Nor is the claim 10 times faster. The assertion is that a company called Quantinuum (the winner of the high-tech company naming contest with three letter “u”s, one “q,” and four syllables) outperformed the Googlers by a factor of 100.

Two successful high-tech executives argue fiercely about performance. Thanks, MSFT Copilot. Good enough, and I love the quirky spelling. Is this a new feature of your smart software?

Now, does the speedy quantum computer work better than one’s iPhone or Steam console? The article reports:

But in the new study, Quantinuum scientists — in partnership with JPMorgan, Caltech and Argonne National Laboratory — achieved an XEB score of approximately 0.35. This means the H2 quantum computer can produce results without producing an error 35% of the time.

To put this in context, use this system to plot your drive from your home to Texarkana. You will make it there roughly one out of every three multiday drives. Close enough for horseshoes or an MVP (minimum viable product). But it is progress of sorts.

So what does the Google do? Its marketing team goes back to AI software and magically “DeepMind’s PEER Scales Language Models with Millions of Tiny Experts” appears in Venture Beat. Forget that quantum supremacy claim. The Google has “millions of tiny experts.” Millions. The PR piece reports:

DeepMind’s Parameter Efficient Expert Retrieval (PEER) architecture addresses the challenges of scaling MoE [mixture of experts, not to be confused with millions of experts or MOE].

I know this PR story about the Google is not quantum computing related, but it illustrates the “my super car is faster than your super car” mentality.

What can one believe about Google or any other high-technology outfit talking about the performance of its system or software? I don’t believe too much, probably about 10 percent of what I read or hear.

But the constant need to be perceived as the smartest science quick recall team is now routine. Come on, geniuses, be more creative.

Stephen E Arnold, July 17, 2024

Google Ups the Ante: Skip the Quantum. Aim Higher!

July 16, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


After losing its quantum supremacy crown to an outfit with lots of “u”s in its name and making clear it deploys a million software bots to do AI things, the Google PR machine continues to grind away.

The glowing “G” on god’s/God’s chest is the clue that reveals Google’s identity. Does that sound correct? Thanks, MSFT Copilot. Close enough to the Google for me.

What’s a bigger deal than quantum supremacy or the million AI bot assertion? Answer: Be like god or God as the case may be. I learned about this celestial achievement in “Google Researchers Say They Simulated the Emergence of Life.” The researchers have not actually created life. PR announcements can be sufficiently abstract to make a big Game of Life seem like more than an update of the 1970s John Horton Conway confection on a two-dimensional grid. Google’s goal is to get a mention in the Wikipedia article perhaps?
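Since the paragraph above leans on Conway’s Game of Life, here is a minimal Python sketch of its classic rules for anyone who has not met it: a dead cell with exactly three live neighbors is born, and a live cell with two or three live neighbors survives; everything else dies or stays dead. This is not Google’s simulation. The `step` function name and the set-of-coordinates grid are illustrative assumptions. The example runs a “blinker,” one of the patterns mentioned below, which flips between horizontal and vertical every generation.

```python
from collections import Counter


def step(live):
    """Advance Conway's Game of Life by one generation.

    `live` is a set of (row, col) tuples marking live cells on an unbounded grid.
    """
    # Count live neighbors for every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbors; survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A "blinker": three cells in a row that oscillate with period 2.
blinker = {(1, 0), (1, 1), (1, 2)}
gen1 = step(blinker)
gen2 = step(gen1)
print(sorted(gen1))     # [(0, 1), (1, 1), (2, 1)]
print(gen2 == blinker)  # True
```

Storing only live cells in a set keeps the grid unbounded and avoids edge handling, which is a design choice for brevity rather than speed.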

Google operates at a different scale in its PR world. Google does not fool around with black and white squares, blinkers, and spaceships. Google makes a simulation of life. Here’s how the write up explains the breakthrough:

In an experiment that simulated what would happen if you left a bunch of random data alone for millions of generations, Google researchers say they witnessed the emergence of self-replicating digital lifeforms.

Cue the pipe organ. Play Toccata and Fugue in D minor. The write up says:

Laurie and his team’s simulation is a digital primordial soup of sorts. No rules were imposed, and no impetus was given to the random data. To keep things as lean as possible, they used a funky programming language called Brainfuck, which to use the researchers’ words is known for its “obscure minimalism,” allowing for only two mathematical operations: adding one or subtracting one. The long and short of it is that they modified it to only allow the random data — stand-ins for molecules — to interact with each other, “left to execute code and overwrite themselves and neighbors based on their own instructions.” And despite these austere conditions, self-replicating programs were able to form.
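The quoted passage compresses Brainfuck a bit. Standard Brainfuck has eight single-character commands: `+` and `-` add or subtract one from the current memory cell, `>` and `<` move the pointer, `.` and `,` handle output and input, and `[` and `]` loop while the current cell is nonzero. The minimal Python interpreter below is a sketch of that standard language only; it does not reproduce the researchers’ modified variant, in which programs overwrite themselves and their neighbors. The `run_bf` name, the 30,000-cell tape, and the 8-bit wraparound are common conventions assumed here.

```python
def run_bf(code, inp=""):
    """Interpret standard Brainfuck (not the researchers' self-modifying variant)."""
    # Pre-compute matching bracket positions so loops can jump in O(1).
    stack, jump = [], {}
    for i, ch in enumerate(code):
        if ch == "[":
            stack.append(i)
        elif ch == "]":
            j = stack.pop()
            jump[i], jump[j] = j, i

    tape, ptr, pc, out, in_pos = [0] * 30000, 0, 0, [], 0
    while pc < len(code):
        ch = code[pc]
        if ch == "+":
            tape[ptr] = (tape[ptr] + 1) % 256  # add one (8-bit wraparound)
        elif ch == "-":
            tape[ptr] = (tape[ptr] - 1) % 256  # subtract one
        elif ch == ">":
            ptr += 1
        elif ch == "<":
            ptr -= 1
        elif ch == ".":
            out.append(chr(tape[ptr]))
        elif ch == ",":
            # Read one input character; 0 signals end of input.
            tape[ptr] = ord(inp[in_pos]) if in_pos < len(inp) else 0
            in_pos += 1
        elif ch == "[" and tape[ptr] == 0:
            pc = jump[pc]  # skip past the loop body
        elif ch == "]" and tape[ptr] != 0:
            pc = jump[pc]  # jump back to the loop start
        pc += 1  # any other character is ignored as a comment
    return "".join(out)
```

For example, `run_bf("++++++++[>++++++++<-]>+.")` multiplies 8 by 8 in a loop, adds one, and prints the character with code 65, which is `A`.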

Okay, tone down the volume on the organ, please.

The big discovery is, according to a statement in the write up attributed to a real life God-ler:

there are “inherent mechanisms” that allow life to form.

The God-ler did not claim the title of God-ler. Plus some point out that Google’s big announcement is not life. (No kidding?)

Several observations:

- Okay, sucking up power and computer resources to run a 1970s game suggests that some folks have a fairly unstructured work experience. May I suggest a bit of work on Google Maps and its usability?

- Google’s PR machine appears to value quantumly supreme reports of innovations, breakthroughs, and towering technical competence. Okay, but Google sells advertising, and the PR output doesn’t change that fact. Google sells ads. Period.

- Any perceived achievement that is better or bigger than a Google achievement pushes the Emit PR button, and Google PR reacts with remarkable speed. Who punches this button?

Net net: I find these discoveries and innovations amusing. Yeah, Google is an ad outfit and probably should be headquartered on Madison Avenue or an even more prestigious location. Definitely away from Beelzebub and his ilk.

Stephen E Arnold, July 16, 2024

The Wiz: Google Gears Up for Enterprise Security

July 15, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


Anyone remember this verse from “Ease on Down the Road,” from The Wiz, the hit musical from the 1970s? Here’s the passage:

‘Cause there may be times

When you think you lost your mind

And the steps you’re takin’

Leave you three, four steps behind

But the road you’re walking

Might be long sometimes

You just keep on truckin’

And you’ll just be fine, yeah

Why am I playing catchy tunes in my head on Monday, July 15, 2024? I just read “Google Near $23 Billion Deal for Cybersecurity Startup Wiz.” For years, I have been relating Israeli-developed cyber security technology to law enforcement and intelligence professionals. I try in each lecture to profile a firm, typically based in Tel Aviv or environs and staffed with former military professionals. I try to relate the functionality of the system to the particular case or matter I am discussing in my lecture.

The happy band is easin’ down the road. The Googlers have something new to sell. Does it work? Sure, get down. Boogie. Thanks, MSFT Copilot. Has your security created an opportunity for Google marketers?

That stopped in October 2023. A former Israeli intelligence officer told me, “The massacre was Israel’s 9/11. There was an intelligence failure.” I backed away from the Israeli security, cyber crime, and intelware systems. They did not work. If we flash forward to July 15, 2024, the marketing is back. The well-known NSO Group is hawking its technology at high-profile LE and intel conferences. Enhancements to existing systems arrive in the form of email newsletters at the pace of the pre-October 2023 missives.

However, I am maintaining a neutral and skeptical stance. There is the October 2023 event, the subsequent war, and the increasing agitation about tactics, weapons systems in use, and efficacy of digital safeguards.

Google does not share my concerns. That’s why the company is Google, and I am a dinobaby tracking cyber security from my small office in rural Kentucky. Google makes news. I make nothing as a marginalized dinobaby.

The Wiz tells the story of a young girl who wants to get her dog back after a storm carries the creature away. The young girl offs the evil witch and seeks the help of a comedian from Peoria, Illinois, to get back to her real life. The Wiz has a happy ending, and the quoted verse makes the point that the young girl, like the Google, has to keep taking steps even though the Information Highway may be long.

That’s what Google is doing. The company is buying security (which I want to point out is cut from the same cloth as the systems which failed to notice the October 2023 run up). Google has Mandiant. Google offers a free Dark Web scanning service. Now Google has Wiz.

What’s Wiz do? Like other Israeli security companies, it does the sort of thing intended to prevent events like October 2023’s attack. And like other aggressively marketed Israeli cyber technology companies’ capabilities, one has to ask, “Will Wiz work in an emerging and fluid threat environment?” This is an important question because of the failure of the in situ Israeli cyber security systems, disabled watch stations, and general blindness to social media signals about the October 2023 incident.

If one zips through the Wiz’s Web site, one can craft a description of what the firm purports to do; for example:

Wiz is a cloud security firm embodying capabilities associated with Israeli military technology. The idea is to create a one-stop shop to secure cloud assets by identifying and mitigating risks. The system incorporates automated functions and graphic outputs. The company asserts that it can secure models used for smart software and enforce security policies automatically.

Does it work? I will leave that up to you and the bad actors who find novel methods to work around big, modern, automated security systems. Did you know that human error and old-fashioned methods like emails with links that deliver stealers work?

Can Google make the Mandiant Wiz combination work magic? Is Googzilla a modern-day Wiz able to transport the little girl back to real life?

Google has paid a rumored $20 billion plus to deliver this reality.

I maintain my neutral and skeptical stance. I keep thinking about October 2023, the aftermath of a massive security failure, and the over-the-top presentations by Israeli cyber security vendors. If the stuff worked, why did October 2023 happen? Like most modern cyber security solutions, marketing to the people who desperately want a silver bullet or digital stake to pound through the heart of cyber risk produces sales.

I am not sure that sales, marketing, and assertions about automation work in what is an inherently insecure, fast-changing, and globally vulnerable environment.

But Google will keep on truckin’ because Microsoft has created a heck of a marketing opportunity for the Google.

Stephen E Arnold, July 15, 2024

Googzilla, Man Up, Please

July 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.


I read a couple of “real” news stories about Google and its green earth / save the whales policies in the age of smart software. The first write up is okay and not too exciting for a critical thinker wearing dinoskin. “The Morning After: Google’s Greenhouse Gas Emissions Climbed Nearly 50 Percent in Five Years Due to AI” reads like a PR-massaged write up. Consider this passage:

According to the report, Google said it expects its total greenhouse gas emissions to rise “before dropping toward our absolute emissions reduction target,” without explaining what would cause this drop.

Yep, no explanation. A PR win.

The BBC published “AI Drives 48% Increase in Google Emissions.” That write up states:

Google says about two thirds of its energy is derived from carbon-free sources.

Thanks, MSFT Copilot. Good enough.

Neither these two articles nor the others I scanned focused on one key fact about Google’s saying green and driving snail darters to their fate. Google’s leadership team did not plan its energy strategy. In fact, my hunch is that no one paid any attention to how much energy Google’s AI activities were sucking down. Once the company shifted into Code Red or whatever consulting term craziness it used to label its frenetic response to the Microsoft OpenAI tie up, absolutely zero attention was directed toward the few big-eyed tunas which might be taking their last dip.

Several observations:

- PR speak and green talk are like many assurances emitted by the Google. Talk is not action.

- The management processes at Google are disconnected from what happens when the wonky Code Red light flashes and the siren howls at midnight. Shouldn’t management be connected when the Tapanuli orangutan could soon be facing the Big Ape in the sky?

- The AI energy consumption is not a result of AI. The energy consumption is a result of Googlers who do what’s necessary to respond to smart software. Step on the gas. Yeah, go fast. Endanger the Amur leopard.

Net net: Hey, Google, stand up and say, “My leadership team is responsible for the energy we consume.” Don’t blame your up-in-flames “green” initiative on software you invented. How about less PR and more focus on engineering more efficient data center and cloud operations? I know PR talk is easier, but buckle up, buttercup.

Stephen E Arnold, July 8, 2024

Some Tension in the Datasphere about Artificial Intelligence

June 28, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


I generally try to avoid profanity in this blog. I am mindful of Google’s stopwords. I know there are filters running to protect those younger than I from frisky and inappropriate language. Therefore, I will cite the two articles and then convert the profanity to a suitably sanitized form.

The first write up is “I Will F…ing Piledrive You If You Mention AI Again”. Sorry, like many other high-technology professionals I prevaricated and dissembled. I have edited the F word to be less superficially offensive. (One simply cannot trust high-technology types, can you? I am not Thomson Reuters obviously.) The premise of this write up is that smart software is over-hyped. Here’s a passage I found interesting:

Unless you are one of a tiny handful of businesses who know exactly what they’re going to use AI for, you do not need AI for anything – or rather, you do not need to do anything to reap the benefits. Artificial intelligence, as it exists and is useful now, is probably already baked into your businesses software supply chain. Your managed security provider is probably using some algorithms baked up in a lab software to detect anomalous traffic, and here’s a secret, they didn’t do much AI work either, they bought software from the tiny sector of the market that actually does need to do employ data scientists.

I will leave it to you to ponder the wisdom of these words. I, for instance, do not know exactly what I am going to do until I do something, fiddle with it, and either change it up or trash it. You and most AI enthusiasts are probably different. That’s good. I envy your certitude. The author of the first essay is not gentle; he wants to piledrive you if you talk about smart software. I do not advocate violence under any circumstances. I can tolerate baloney about smart software. The piledriver person has hate in his heart. You have been warned.

The second write up is “ChatGPT Is Bullsh*t,” and it is an article published in SpringerLink, not a personal blog. Yep, bullsh*t as a term in an academic paper. Keep in mind, please, that Stanford University’s president and some Harvard wizards engaged in the bullsh*t business as part of their alleged making up data. Who needs AI when humans are perfectly capable of hallucinating? But I digress.

I noted this passage in the academic write up:

So perhaps we should, strictly, say not that ChatGPT is bullshit but that it outputs bullshit in a way that goes beyond being simply a vector of bullshit: it does not and cannot care about the truth of its output, and the person using it does so not to convey truth or falsehood but rather to convince the hearer that the text was written by a interested and attentive agent.

Please, read the 10 page research article about bullsh*t, soft bullsh*t, and hard bullsh*t. Form your own opinion.

I have now set the stage for some observations (probably unwanted and deeply disturbing to some in the smart software game).

- Artificial intelligence is a new big thing, and the hyperbole, misdirection, and outright lying like my saying I would use forbidden language in this essay irrelevant. The object of the new big thing is to make money, get power, maybe become an influencer on TikTok.

- The technology seems to have flowered in January 2023 when Microsoft said, “We love OpenAI. It’s a better Clippy.” The problem is that it is now June 2024, and the advances have been slow and steady. This means that after a half century of research, the AI revolution is working hard to keep the hypemobile in gear. PR is quick; smart software improvement is less speedy.

- The ripples the new big thing has sent across the datasphere attenuate the farther one is from the January 2023 marketing announcement. AI fatigue is now a thing. I think the hostility is likely to increase because real people are going to lose their jobs. Idle hands are the devil’s playthings. Excitement looms.

Net net: I think the profanity reveals the deep disgust some pundits and experts have for smart software, the companies pushing silver bullets into an old and rusty firearm, and an instinctual fear of the economic disruption the new big thing will cause. Exciting stuff. Oh, I am not stating a falsehood.

Stephen E Arnold, June 23, 2024

Can the Bezos Bulldozer Crush Temu, Shein, Regulators, and AI?

June 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The question, to be fair, should be, “Can the Bezos-less bulldozer crush Temu, Shein, Regulators, Subscriptions to Alexa, and AI?” The article, which appeared in the “real” news online service Venture Beat, presents an argument suggesting that the answer is, “Yes! Absolutely.”

Thanks MSFT Copilot. Good bulldozer.

The write up “AWS AI Takeover: 5 Cloud-Winning Plays They’re [sic] Using to Dominate the Market” depends upon an Amazon Big Dog named Matt Wood, VP of AI products at AWS. The article strikes me as something drafted by a small group at Amazon and then polished to PR perfection. The reasons the bulldozer will crush Google, Microsoft, Hewlett Packard’s on-premises play, and the keep-on-searching IBM Watson, among others, are:

- Covering the numbers or logos of the AI companies in the “game”; for example, Anthropic, AI21 Labs, and other whale players

- Hitting up its partners, customers, and friends to get support for the Amazon AI wonderfulness

- Engineering AI to be itty bitty pieces one can use to build a giant AI solution capable of dominating D&B industry sectors like banking, energy, commodities, and any other multi-billion sector one cares to name

- Skipping the Google folly of dealing with consumers. Amazon wants the really big contracts with really big companies, government agencies, and non-governmental organizations.

- Amazon is just better at security. Those leaky S3 buckets are not Amazon’s problem. The customers failed to use Amazon’s stellar security tools.

Did these five points convince you?

If you did not embrace the spirit of the bulldozer, the Venture Beat article states:

Make no mistake, fellow nerds. AWS is playing a long game here. They’re not interested in winning the next AI benchmark or topping the leaderboard in the latest Kaggle competition. They’re building the platform that will power the AI applications of tomorrow, and they plan to power all of them. AWS isn’t just building the infrastructure, they’re becoming the operating system for AI itself.

Convinced yet? Well, okay. I am not on the bulldozer yet. I do hear its engine roaring, and I smell the no-longer-green emissions from the bulldozer’s data centers. Also, I am not sure Google, IBM, and Microsoft are ready to roll over and let the bulldozer crush them into the former rain forest’s red soil. I recall researching Sagemaker, which had some AI-type jargon applied to that “smart” service. Ah, you don’t know Sagemaker? Yeah. Too bad.

The rather positive-leaning Amazon write up points out that, as nifty as those five points about Amazon’s supremacy in the AI jungle are, the company also has vision. Okay, it is not the customer-first idea from 1998 or so. But it is interesting. Amazon will have infrastructure. Amazon will provide model access. (I want to ask, “For how long?” but I won’t.) And Amazon will have app development.

The article includes a table providing detail about these three legs of the stool in the bulldozer’s cabin. There is also a rundown of Amazon’s recent media- and prospect-directed announcements. Too bad the article does not include hyperlinks to these documents. Oh, well.

And after about 3,300 words about Amazon, the article includes about 260 words about Microsoft and Google. That’s a good balance. Too bad, IBM. You did not make the cut. And HP? Nope. You did not get an “Also participated” certificate.

Net net: Quite a document. And no mention of Sagemaker. The Bezos-less bulldozer just smashes forward. Success is in crushing. Keep at it. And that “they” in the Venture Beat article title: Shouldn’t “they” be an “it”?

Stephen E Arnold, June 27, 2024