Surprise! Countries Not Pals with the US Are Using AI to Spy. Shocker? Hardly

November 17, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

The Beeb is a tireless “real” news outfit. Like some Manhattan newscasters, fixing up reality to make better stories, the BBC allowed a couple of high-profile members of leadership to find their future elsewhere. Maybe the chips shop in Slough?

Thanks, Venice.ai. You are definitely outputting good enough art today.

I am going to suspend my disbelief and point to a “real” news story about a US company. The story is “AI Firm Claims Chinese Spies Used Its Tech to Automate Cyber Attacks.” The write up reveals information that should not surprise anyone except the Beeb. The write up reports:

The makers of artificial intelligence (AI) chatbot Claude claim to have caught hackers sponsored by the Chinese government using the tool to perform automated cyber attacks against around 30 global organizations. Anthropic said hackers tricked the chatbot into carrying out automated tasks under the guise of carrying out cyber security research. The company claimed in a blog post this was the “first reported AI-orchestrated cyber espionage campaign”.

What’s interesting is that Anthropic itself was surprised. If Google and Microsoft are making smart software part of the “experience,” why wouldn’t bad actors avail themselves of the tools? Information about lashing smart software to a range of online activities is not exactly a secret.

What surprises me about this “news” is:

- Why is Anthropic spilling the beans about a nation state using its technology? Once such an account is identified, block it. Use pattern matching to determine if others are doing substantially similar exploits. Block those. If you want to become a self-appointed police professional, get used to the cat-and-mouse game. You created the system. Deal with it.

- Why is the BBC presenting old information as something new? Perhaps its intrepid “real” journalists should pay attention to the public information distributed by cyber security firms? I think that is called “research”, but that may be surfing on news releases or running queries against ChatGPT or Gemini. Why not try Qwen, the China-affiliated system?

- I wonder why the Google-Anthropic tie up is not mentioned in the write up. Google released information about a quite specific smart exploit a few months ago. Was this information used by Anthropic to figure out that a bad actor was an Anthropic user? Is there a connection here? I don’t know, but that’s what investigative types are supposed to consider and address.

My personal view is that Anthropic is positioning itself as a tireless defender of truth, justice, and the American way. The company may also benefit from some of Google’s cyber security efforts. Google owns Mandiant and is working hard to make the Wiz folks walk down the yellow brick road to the Googleplex.

Net net: Bad actors using low cost, subsidized, powerful, and widely available smart software is not exactly a shocker.

Stephen E Arnold, November 17, 2025

Someone Is Not Drinking the AI-Flavored Kool-Aid

November 12, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

The future of AI is in the hands of the digital P.T. Barnums. A day or so ago, I wrote about Copilot in Excel. Allegedly a spreadsheet can be enhanced by Microsoft. Google is beavering away with a new enthusiasm for content curation. This is a short step to weaponizing what is indexed, what is available to Googlers and Mama, and what is provided to Google users. Heroin dealers do not provide consumer oriented labels with ingredients.

Thanks, Venice.ai. Good enough.

Here’s another example of this type of soft control: “I’ll Never Use Grammarly Again — And This Is the Reason Every Writer Should Care.” The author makes clear that Grammarly, developed and operated from Ukraine, now wants to change her writing style. The essay states:

What once felt like a reliable grammar checker has now turned into an aggressive AI tool always trying to erase my individuality.

Yep, that’s what AI companies and AI repackagers will do: Use the technology to improve the human. What a great idea! Just erase the fingerprints of the human. Introduce AI drivel and lowest common denominator thinking. Human, the AI says, take a break. Go to the yoga studio or grab a latte. AI has you covered.

The essay adds:

Superhuman [Grammarly’s AI solution for writers] wants to manage your creative workflow, where it can predict, rephrase, and automate your writing. Basically, a simple tool that helped us write better now wants to replace our words altogether. With its ability to link over a hundred apps, Superhuman wants to mimic your tone, habits, and overall style. Grammarly may call it personalized guidance, but I see it as data extraction wrapped with convenience. If we writers rely on a heavily AI-integrated platform, it will kill the unique voice, individual style, and originality.

One human dumped Grammarly, writing:

I’m glad I broke up with Grammarly before it was too late. Well, I parted ways because of my principles. As a writer, my dedication is towards original writing, and not optimized content.

Let’s go back to ubiquitous AI (some you know is there and other AI that operates in dark pattern mode). The object of the game for the AI crowd is to extract revenue and control information. By weaponizing information and making life easy, just think who will be in charge of many things in a few years. If you think humans will rule the roost, you are correct. But the number of humans pushing the buttons will be very small. These individuals have zero self awareness and believe that their ideas — no matter how far out and crazy — are the right way to run the railroad.

I am not sure most people will know that they are on a train taking them to a place they did not know existed and don’t want to visit.

Well, tough luck.

Stephen E Arnold, November 11, 2025

Innovation Cored, Diced, Cooked and Served As a Granny Scarf

November 11, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I do not pay much attention to Vogue, once a giant, fat fashion magazine. However, my trusty newsfeed presented this story to me this morning at 6:26 am US Eastern: “Apple and Issey Miyake Unite for the iPhone Pocket. It’s a Moment of Connecting the Dots.” I had no idea what an Issey Miyake was. I navigated to Yandex.com (a more reliable search service than Google which is going to bail out the sinking Apple AI rowboat) and learned:

Issey Miyake … the brand name under which designer clothing, shoes, accessories and perfumes are produced.

Okay, a Japanese brand selling collections of clothes, women’s clothes with pleating, watches, perfumes, and a limited edition of an Evian mineral water in bottles designed by someone somewhere, probably Southeast Asia.

But here’s the word that jarred me: Moment. A moment?

The Vogue write up explains:

It’s a moment of connecting the dots.

Moment? Huh.

Upon further investigation, the innovation is a granny scarf; that is, a knitted garment with a pocket for an iPhone. I poked around and here’s what the “moment” looks like:

Source: Engadget, November 2025

I don’t recall my great grandmother (my father’s mother had a mother). This person was called “Granny” or “Gussy”, and I know she was alive in 1958. She died at the age of 102 or 103. She knitted and tatted scarfs, odd little white cloths called antimacassars, and small circular or square items called doilies (singular “doily”).

Apple and the Japanese fashion icon have inadvertently emulated some of the outputs of my great grandmother “Granny” or “Gussy.” Were she, my grandmother, and my father alive, one or all of them would have taken legal action. But time makes us fools, and “the spirits of the wise sit in the clouds and mock” scarfs with pouches like an NBA bound baby kangaroo.

But the innovation which may be either Miyake’s, Apple’s, or a combo brainstorm of Miyake and Apple comes in short and long sizes. My Granny cranked out her knit confections like a laborer in a woolen mill in Ipswich in the 19th century. She gave her outputs away.

You can acquire this pinnacle of innovation for US $150 or US $230.

Several observations:

- Apple’s skinny phone flopped; Apple’s AI flopped. Therefore, Apple is into knitted scarfs to revivify its reputation for product innovation. Yeah, innovative.

- Next to Apple’s renaming Apple TV+ as Apple TV, one may ask, “Exactly what is going on in Cupertino other than demanding that I log into an old iPhone I use to listen to podcasts?” Desperation gives off an interesting vibe. I feel it. Do you?

- Apple does good hardware. It does not do soft goods with the same élan. Has its leadership lost the thread?

Smell that desperation yet? Publicity hunger, the need to be fashionable and with it, and taking the hard edges off a discount Mac laptop.

Net net: I like the weird pink version, but why didn’t the geniuses behind the Genius Bar do the zippy orange of the new candy bar but otherwise indistinguishable mobile device rolled out a short time ago? Orange? Not in the scarf palette.

Granny’s white did not make the cut.

Stephen E Arnold, November 11, 2025

Marketers and AI: Killing Sales and Trust. Collateral Damage? Meh

November 11, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

“The Trust Collapse: Infinite AI Content Is Awful” is an impassioned howl in the arctic space of zeros and ones. The author is what one might call collateral damage. When a UPS airplane drops a wing on take off, the people in the path of the fuel spewing aircraft are definitely not thrilled.

AI is a bit like a UPS-type of aircraft arrowing to an unhappy end, at least for the author of the cited article. The write up makes clear that smart software can vaporize a writer of marketing collateral. The cost for the AI is low. The penalty for the humanoid writer is collateral damage.

Let’s look at some of the points in the cited essay:

For the first time since, well, ever, the cost of creating content has dropped to essentially zero. Not “cheaper than before”, but like actually free. It’s so easy to generate a thousand blog posts or ten thousand “personalized” emails and it barely costs you anything (for now).

Yep, marketing content appears on some of the lists I have mentioned in this blog. Usually customer service professionals top the list, but advertising copywriters and email pitch writers usually appear in the top five of AI-terminated jobs.

The write up explains:

What they [a prospect] actually want to know is “why the hell would I buy it from you instead of the other hundred companies spamming my inbox with identical claims?” And because everything is AI slop now, answering that question became harder for them.

The idea is that expensive, slow, time-consuming relationship selling is eroding under a steady stream of low cost, high volume marketing collateral produced by … smart software. Yes, AI and lousy AI at that.

The write up provides an interesting example of how low cost, high volume AI content has altered the sales landscape:

Old World (…-2024):

- Cost to produce credible, personalized outreach: $50/hour (human labor)

- Volume of credible outreach a prospect receives: ~10/week

- Prospect’s ability to evaluate authenticity: Pattern recognition works ~80% of time

New World (2025-…):

- Cost to produce credible, personalized outreach: effectively 0

- Volume of credible outreach a prospect receives: ~200/week

- Prospect’s ability to evaluate authenticity: Pattern recognition works ~20% of time

The signal-to-noise ratio has hit a breaking point where the cost of verification exceeds the expected value of engagement.
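A quick dinobaby sanity check on those figures may help. This is a minimal sketch, assuming that “pattern recognition works X% of time” means a per-message hit rate; that reading is mine, not the cited author’s, and the function name is hypothetical.

```python
# Figures quoted in the cited essay. Treating the "pattern recognition works"
# percentage as a per-message accuracy rate is an assumption for illustration.
old_world = {"messages_per_week": 10, "accuracy": 0.80}
new_world = {"messages_per_week": 200, "accuracy": 0.20}


def misjudged_per_week(world):
    """Expected weekly count of messages whose authenticity is judged wrongly."""
    return world["messages_per_week"] * (1 - world["accuracy"])


old_errors = misjudged_per_week(old_world)
new_errors = misjudged_per_week(new_world)

# Print rounded values: old weekly misjudgments, new weekly misjudgments, ratio.
print(round(old_errors), round(new_errors), round(new_errors / old_errors))
```

Under that assumption, the prospect goes from misjudging about 2 messages a week to about 160, an 80-fold jump. That is one way to read “breaking point.”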

So what? The write up answers this question:

You’re representing a brand. And your brand must continuously earn that trust, even if you blend of AI-powered relevance. We still want that unmistakable human leadership.

Yep, that’s it. What’s the fix? What does a marketer do? What does a customer looking for a product or service to solve a problem do?

There’s no answer. That’s the way smart software from Big AI Tech (BAIT) is supposed to work. The question, however, remains: “What’s the fix? What’s your recommendation, dear author? Whom do you suggest solve this problem you present in a compelling way?”

Crickets.

AI systems and methods disintermediate as a normal function. The author of the essay does not offer a solution. Why? There isn’t one short of an AI implosion. At this time, too many big time people want AI to be the next big thing. They only look for profits and growth, not collateral damage. Traditional work is being rubble-ized. The build up of opportunity will take place where the cost of redevelopment is low. Some new business may be built on top of the remains of older operations, but moving a few miles down the road may be a more appealing option.

Stephen E Arnold, November 11, 2025

Microsoft: Desperation or Inspiration? Copilot, Have We Lost an Engine?

November 10, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Microsoft is an interesting member of the high-tech in-crowd. It is the oldest of the Big Players. It invented Bob and Clippy. It has not cracked Word’s numbering weirdness. Updates routinely kill services. I think it would be wonderful if Task Manager did not spawn multiple instances of itself.

Furthermore Microsoft, the cloudy giant with oodles of cash, ignited the next big thing frenzy a couple of years ago. The announcement: Bob and Clippy would operate on AI steroids. Googzilla experienced the equivalent of traumatic stress injury and blinked red, yellow, and orange for months. Crisis bells rang. Klaxons interrupted Foosball games. Napping in pods became difficult.

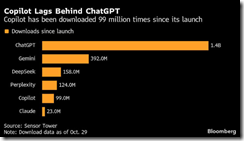

Imagine what’s happening at Microsoft now that this Sensor Tower chart is popping up in articles like “Microsoft Bets on Influencers Like Alix Earle to Close the Gap With ChatGPT.” Here’s the gasp inducer:

Source: The chart comes from Sensor Tower. It carries Bloomberg branding. But it appeared in an MSN.com article. Who crafted the data? How were the data assembled? What mathematical processes were used to produce such nice round numbers? I have no clue, but let’s assume those fat, juicy round numbers are “real,” not the weird imaginary “i” things electrical engineers enjoy each day.

The write up states:

Microsoft Corp., eager to boost downloads of its Copilot chatbot, has recruited some of the most popular influencers in America to push a message to young consumers that might be summed up as: Our AI assistant is as cool as ChatGPT. Microsoft could use the help. The company recently said its family of Copilot assistants attracts 150 million active users each month. But OpenAI’s ChatGPT claims 800 million weekly active users, and Google’s Gemini boasts 650 million a month. Microsoft has an edge with corporate customers, thanks to a long history of selling them software and cloud services. But it has struggled to crack the consumer market — especially people under 30.

Microsoft came up with a novel solution to its being fifth in the smart software league table. Is Microsoft developing useful AI-infused services for Notepad? Yes. Is Microsoft pushing Copilot and its hallucinatory functions into Excel? Yes. Is Microsoft using Copilot to help partners code widgets for their customers to use in Azure? Yeah, sort of, but I have heard that Anthropic Claude has some Certified Partners as fans.

The Microsoft professionals, the leadership, and the legions of consultants have broken new marketing ground. Microsoft is paying social media influencers to pitch Microsoft Copilot as the one true smart software. Forget that “God is my copilot” meme. It is now “Meme makers are Microsoft’s Copilot.”

The write up includes this statement about this stunningly creative marketing approach:

“We’re a challenger brand in this area, and we’re kind of up and coming,” Consumer Chief Marketing Officer Yusuf Mehdi said.

Excuse me, Microsoft was first when it announced its deal with OpenAI a couple of years ago. Microsoft was the only game in town. OpenAI was a Silicon Valley start up with links to Sam AI-Man and Mr. Tesla. Now Microsoft, a giant outfit, is “up and coming.” No, I would suggest Microsoft is stalled and coming down.

A professor from that university / consulting outfit New York University is quoted in the cited write up. Here is that snippet:

Anindya Ghose, a marketing professor at New York University’s Stern School of Business, expressed surprise that Microsoft is using lifestyle influencers to market Copilot. But he can see why the company would be attracted to their cult followings. “Even if the perceived credibility of the influencer is not very high but the familiarity with the influencers is high, there are some people who would be willing to bite on that apple,” Ghose said in an interview.

The article presents proof that the Microsoft creative light saber has delivered. Here’s that passage:

Mehdi cited a video Earle posted about the new Copilot Groups feature as evidence that the campaign is working. “We can see very much people say, ‘Oh, I’m gonna go try that,’ and we can see the usage it’s driving.” The video generated 1.9 million views on Earle’s Instagram account and 7 million on her TikTok. Earle declined to comment for this story.

Following my non-creative approach, here are several observations:

- From first to fifth. I am not sure social media influencers are likely to address the reason the firm associated with Clippy occupies this spot.

- I am not sure Microsoft knows how to fix the “problem.” My hunch is that the Softies see the issue as one that is the fault of the users. It’s either the Russian hackers or the users of Microsoft products and services. Yeah, the problem is not ours.

- Microsoft, like Apple and Telegram, is struggling to graft smart software into ageing platforms, software, and systems. Google is doing a better job, but it is in second place. Imagine that. Google in the “place” position in the AI Derby. But Google has its own issues to resolve, and it is thinking about putting data centers in space, keeping its allegedly booming Web search business cranking along at top speed, and sucking enough cash from online advertising to pay for its smart software ambitions. Those wizards are busy. But Googzilla is in second place and coping with acute stress reaction.

Net net: The big players have put huge piles of casino chips in the AI poker game. Desperation takes many forms. The sport of extreme marketing is just one of the disorder’s manifestations. Watch it on TikTok-type services.

Stephen E Arnold, November 10, 2025

AI: There Is Gold in Them There Enterprises Seeking Efficiency

October 23, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read a “ride-em-cowboy” write up called “IBM Claims 45% Productivity Gains with Project Bob, Its Multi-Model IDE That Orchestrates LLMs with Full Repository Context.” That, gentle reader, is a mouthful. Let’s take a quick look at what sparked an efflorescence of buzzing jargon.

Thanks, Midjourney. Good enough like some marketing collateral.

I noted this statement about Bob (no, not the famous Microsoft Bob):

Project Bob, an AI-first IDE that orchestrates multiple LLMs to automate application modernization; AgentOps for real-time agent governance; and the first integration of open-source Langflow into Watsonx Orchestrate, IBM’s platform for deploying and managing AI agents. IBM’s announcements represent a three-pronged strategy to address interconnected enterprise AI challenges: modernizing legacy code, governing AI agents in production and bridging the prototype-to-production gap.

Yep, one sentence. The spirit of William Faulkner has permeated IBM’s content marketing team. Why not make a news release that is a single sentence like the 1300 word extravaganza in “Absalom, Absalom!”?

And again:

Project Bob isn’t another vibe coder, it’s an enterprise modernization tool.

I can visualize IBM customers grabbing the enterprise modernization tool and modernizing the enterprise. Yeah, that’s going to become a 100 percent penetration quicker than I can say, “Bob was the precursor to Clippy.” (Oh, sorry. I was confusing Microsoft’s Bob with IBM’s Bob again. Drat!)

Is it Watson making the magic happen with IDEs and enterprise modernization? No, Watson is probably there because, well, that’s IBM. But the brains for Bob come from Anthropic. Now Bob and Claude are really close friends. IBM’s middleware is Watson, actually Watsonx. And the magic of these systems produces …. wait for it … AgentOps and Agentic Workflows.

The write up says:

Agentic Workflows handles the orchestration layer, coordinating multiple agents and tools into repeatable enterprise processes. AgentOps then provides the governance and observability for those running workflows. The new built-in observability layer provides real-time monitoring and policy-based controls across the full agent lifecycle. The governance gap becomes concrete in enterprise scenarios.

Yep, governance. (I still don’t know what that means exactly.) I wonder if IBM content marketing documents should come with a glossary like the 10 pages of explanations of Telegram’s wild and wonderful crypto freedom jargon.

My hunch is that IBM wants to provide the Betty Crocker approach to modernizing an enterprise’s software processes. Betty did wonders for my mother’s chocolate cake. If you want more information, just call IBM. Perhaps the agentic workflow Claude Watson customer service line will be answered by a human who can sell you the deed to a mountain chock full of gold.

Stephen E Arnold, October 23, 2025

Apple Can Do AI Fast … for Text That Is

October 22, 2025

Wasn’t Apple supposed to infuse Siri with Apple Intelligence? Yeah, well, Apple has been working on smart software. Unlike Google and Samsung, Apple is still working out some kinks in [a] its leadership, [b] innovation flow, [c] productization, and [d] double talk.

Nevertheless, I learned something by reading “Apple’s New Language Model Can Write Long Texts Incredibly Fast.” That’s excellent. The cited source reports:

In the study, the researchers demonstrate that FS-DFM was able to write full-length passages with just eight quick refinement rounds, matching the quality of diffusion models that required over a thousand steps to achieve a similar result. To achieve that, the researchers take an interesting three-step approach: first, the model is trained to handle different budgets of refinement iterations. Then, they use a guiding “teacher” model to help it make larger, more accurate updates at each iteration without “overshooting” the intended text. And finally, they tweak how each iteration works so the model can reach the final result in fewer, steadier steps.

And if you want proof, just navigate to the archive of research and marketing documents. You can access for free the research document titled “FS-DFM: Fast and Accurate Long Text Generation with Few-Step Diffusion Language Models.” The write up contains equations and helpful illustrations like this one:

The research paper is in line with other “be more efficient”-type efforts. At some point, companies in the LLM game will run out of money, power, or improvements. Efforts like Apple’s are helpful. However, like its debunking of smart software, Apple is lagging in the AI game.

Net net: Like orange iPhones and branding plays like Apple TV, a bit more in the delivery of products might be helpful. Apple did produce a gold thing-a-ma-bob for a world leader. It also reorganizes. Progress of a sort I surmise.

Stephen E Arnold, October 21, 2025

A Positive State of AI: Hallucinating and Sloppy but Upbeat in 2025

October 21, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Who can resist a report about AI authored on the “interwebs”? Is this a variation of the Internet as pipes? The write up is “Welcome to State of AI Report 2025.” When I followed the links, I could read this blog post, view a YouTube video, work through more than 300 online slides, or see “live survey results.” I must admit that when I write a report, I distribute it to a few people and move on. Not this “interwebs” outfit. The data are available for those who are in tune, locked in, and ramped up about smart software.

An anxious parent learns that a robot equipped with agentic AI will perform her child’s heart surgery. Thanks, Venice.ai. Good enough.

I appreciate enthusiasm, particularly when I read this statement:

The existential risk debate has cooled, giving way to concrete questions about reliability, cyber resilience, and the long-term governance of increasingly autonomous systems.

Agree or disagree, the report makes clear that doom is not associated with smart software. I think that this blossoming of smart software services, applications, and apps reflects considerable optimism. Some of these people and companies are probably in the AI game to make money. That’s okay as long as the products and services don’t urge teens to fall in love with digital friends, cause a user mental distress as a rabbit hole is plumbed, or just output incorrect information. Who wants to be the doctor who says, “Hey, sorry your child died. The AI output a drug that killed her. Call me if you have questions”?

I could not complete the 300 plus slides in the slide deck. I am not a video type so the YouTube version was a non-starter. However, I did read the list of findings from the “interwebs” and its “team.” Please, consult the source documents for a full, non-dinobaby version of what the enthusiastic researchers learned about 2025. I will highlight three findings and then offer a handful of comments:

- OpenAI is the leader of the pack. That’s good news for Sam AI-Man or SAMA.

- “Commercial traction accelerated.” That’s better news for those who have shoveled cash into the giant open hearth furnaces of smart software companies.

- Safety research is in a “pragmatic phase.” That’s the best news in the report. OpenAI, the leader like the Philco radio outfit, is allowing erotic interactions. Yes, pragmatic because sex sells as Madison Avenue figured out a century ago.

Several observations are warranted because I am a dinobaby, and I am not convinced that smart software is more than a utility, not an application like Lotus 1-2-3 or the original laser printer. Buckle up:

- The money pumped into AI is cash that is not being directed at the US knowledge system. I am talking about schools and their job of teaching reading, writing, and arithmetic. China may be dizzy with AI enthusiasm, but their schools are churning out people with fundamental skills that will allow that nation state to be the leader in a number of sectors, including smart software.

- Today’s smart software consists of neural network and transformer anchored methods. The companies are increasingly similar and the outputs of the different systems generate incorrect or misleading output scattered amidst recycled knowledge, data, and information. Two pigs cannot output an eagle except in a video game or an anime.

- The handful of firms dominating AI are not motivated by social principles. These firms want to do what they want. Governments can’t rein them in. Therefore, the “governments” try to co-opt the technology, hang on, and hope for the best. Laws, rules, regulations, ethical behavior: forget that.

Net net: The State of AI in 2025 is exactly what one would expect from Silicon Valley- and MBA-type thinking. Would you let an AI doc treat your 10-year-old child? You can work through the 300 plus slides to assuage your worries.

Stephen E Arnold, October 21, 2025

Apple: Waking Up Is Hard to Do

October 16, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read a letter. I think this letter or at least parts of it were written by a human. These days it can be tough to know. The letter appeared in “Wiley Hodges’s Open Letter to Tim Cook Regarding ICEBlock.” Mr. Hodges, according to the cited article, retired from Apple, the computer and services company, in 2022.

The letter expresses some concern that Apple removed an app from the Apple online store. Here’s a snippet from the “letter”:

Apple and you are better than this. You represent the best of what America can be, and I pray that you will find it in your heart to continue to demonstrate that you are true to the values you have so long and so admirably espoused.

It does seem to me that Apple is a flexible outfit. The purpose of the letter is unknown to me. On the surface, it is a single former employee’s expression of unhappiness at how “leadership” leads and deciders “decide.” However, below the surface it is a signal that some people thought a for profit, pragmatic, and somewhat frisky Fancy Dancing organization was like Snow White, the Easter bunny, or the Lone Ranger.

Thanks, Venice.ai. Good enough.

Sorry. That’s not how big companies work or many little companies for that matter. Most organizations do what they can to balance a PR image with what the company actually does. Examples include arguing via sleek and definitely expensive lawyers that what they do does not violate laws. Also, companies work out deals. Some of these involve doing things to fit in to the culture of a particular country. I have watched money change hands when registering a vehicle in the government office in Sao Paulo. These things happen because they are practical. Apple, for example, has an interesting relationship with a certain large country in Asia. I wonder if there is a bit of the old soft shoe going on in that region of the world.

These are, however, not the main point of this blog post. The cited article contains this statement:

Hodges, earlier in his letter, makes reference to Apple’s 2016 standoff with the FBI over a locked iPhone belonging to the mass shooter in San Bernardino, California. The FBI and Justice Department pressured Apple to create a version of iOS that would allow them to backdoor the iPhone’s passcode lock. Apple adamantly refused.

Okay, the time delta is nine years. What has changed? Obviously social media, the economic situation, the relationship among entities, and a number of lawsuits. These are the touchpoints of our milieu. One has to surf on the waves of change and the ripples and waves of the datasphere.

But I want to highlight several points about my reaction to the blog post containing Hodges’s letter:

- Some people are realizing that their hoped-for vision of Apple, a publicly traded company, is not the here-and-now Apple. The fairy land of a company that cares is pretty much like any other big technology outfit. Shocker.

- Apple is not much different today than it was nine years ago. Plucking an example which positioned the Cupertino kids as standing up for an ideal does not line up with the reality. Technology existed then to gain access to digital devices. Believing a company’s PR reflected reality illustrates how crazy some perceptions are. Saying is not doing.

- Apple remains to me one of the most invasive of the technology giants. The constant logging in, the weirdness of forcing people to have data in the iCloud when those people do not know the data are there or want it there for that matter, the oddball notifications that tell a user that a “new device” is connected when the iPad has been used for years, and a few other quirks like hiding files are examples of the reality of the company.

News flash: Apple is like the other Silicon Valley-type big technology companies. These firms have a game plan of do it and apologize. Push forward. I find it amusing that adults are experiencing the same grief as a sixth grader with a crush on the really cute person in home room. Yep, waking up is hard to do. Stop hitting the snooze alarm and join the real world.

Net net: The essay is a hoot. Here is an adult realizing that there is no Santa with apparently tireless animals and dwarfs at the North Pole. The cited article contains what appears to be another expression of annoyance, anger, and sorrow that Apple is not what the humans thought it was. Apple is Apple, and the only change agent able to modify the company is money and/or fear, a good combo in my experience.

Stephen E Arnold, October 16, 2025

The Use Case for AI at the United Nations: Give AI a Whirl

October 15, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read a news story about the United Nations. The organization allegedly expressed concern that the organization’s reports were not getting read. The solution to this problem appears in a Gizmodo “real news” report, “AI Finds Its Niche: Writing Corporate Press Releases.”

Gizmodo reports:

The researchers found that AI-assisted language cropped up in about 6 to 10 percent of job listings pulled from LinkedIn across the sample. Notably, smaller firms were more likely to use AI, peaking at closer to 15% of all total listings containing AI-crafted text.

Not good news for people who major in strategic communications at a major university. Why hire a 20-something when smart software can do the job? Pass around the outputs. Let some leadership make changes. Fire out that puppy. Anyone, including a 50-year-old internal sales person, can do it. That’s upskilling. You have a person on a small monthly stipend and a commission. You give this person a chance to show his/her AI expertise. Bingo. Headcount reduction. Efficiency. Less management friction.

The “real news” outfit’s article states:

It’s not just the corporate world that is using AI, of course. The research team also looked at English-language press releases published by the United Nations over the last couple of years and found that the organization has seemingly been utilizing AI to draft its content on a regular basis. They found that the percentage of text likely to be AI-generated has climbed from 3.1% in the first quarter of 2023 to 10.1% by the third quarter of 2023 and peaked around 13.7% by the same quarter of 2024.

If you worked at the UN and wanted to experiment with AI to boost readership, that sounds like an idea to test. Imagine if more people knew about the UN’s profile of that popular actor Broken Tooth.

Caution may be appropriate. The write up adds:

the researchers found the rate of AI usage may have already plateaued, rather than continuing to climb. For press releases, the figure peaked at 24.3% being likely AI-generated, in December 2023, but it has since stabilized at about a half-percent lower and hasn’t shifted significantly since. Job listings, too, have shown signs of decline since reaching their peak, according to the researchers. At the UN, AI usage appears to be increasing, but the rate of growth has slowed considerably.

My thought is that the UN might want to step up its AI-enhanced outputs.

I think it is interesting that the billions of dollars invested in AI have produced such outstanding results for the news release use case. Winner!

Stephen E Arnold, October 15, 2025