The Branding Genius of Cowpilot: New Coke and Jaguar Are No Longer the Champs

January 6, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


We are beavering away on our new Telegram Notes series. I opened one of my newsfeeds and there it was. Another gem of a story from PCGamer. As you may know, PCGamer inspired this bit of art work from an AI system. I thought I would have a “cow” when I saw it. Here’s the visual gag again:

Is that cow standing in its output? Could that be a metaphor for “cowpilot” output? I don’t know. Qwen, like other smart software, can hallucinate. Therefore, I see a semi-sacred bovine standing in a muddy hole. I do not see AI output. If you do, I am not sure you are prepared for the contents about which I shall comment; that is, the story from PCGamer called “In a Truly Galaxy-Brained Rebrand, Microsoft Office Is Now the Microsoft 365 Copilot App, but Copilot Is Also Still the Name of the AI Assistant.”

I thought New Coke was an MBA craziness winner. I thought the Jaguar rebrand was an even crazier MBA craziness winner. I thought the OpenAI smart software non-mobile-phone rebranding effort that looks like a 1950s dime store fountain pen was in the running for crazy. Nope. We have a candidate for the rebranding that tops the leader board.

Microsoft Office is now the M3CA or Microsoft 365 Copilot App.

The PCGamer write up says:

Copilot is the app for launching the other apps, but it’s also a chatbot inside the apps.

Yeah, I have a few. But what else does PCGamer say in this write up?

An MBA study group discusses the branding strategy behind Cowpilot. Thanks, Qwen. Nice consistent version of the heifer.

Here’s a statement I circled:

Copilot is, notably, a thing that already exists! But as part of the ongoing effort to juice AI assistant usage numbers by making it impossible to not use AI, Microsoft has decided to just call its whole productivity software suite Copilot, I guess.

Yep, a “guess.” That guess wording suggests that Microsoft is simply addled. Why name a product that causes a person to guess? Not even Jaguar made people “guess” about a weird square car painted some jazzy semi-hip color. Even the Atlanta semi-behemoth slapped “new” Coke on something that did not have that old Coke vibe. Oh, both of these efforts were notable. I even remember when the brain trust at NBC dumped the peacock for a couple of geometric shapes. But forcing people to guess? That’s special.

Here’s another statement that caught my dinobaby brain:

Should Microsoft just go ahead and rebrand Windows, the only piece of its arsenal more famous than Office, as Copilot, too? I do actually think we’re not far off from that happening. Facebook rebranded itself “Meta” when it thought the metaverse would be the next big thing, so it seems just as plausible that Microsoft could name the next version of Windows something like “Windows with Copilot” or just “Windows AI.” I expect a lot of confusion around whatever Office is called now, and plenty more people laughing at how predictably silly this all is.

I don’t agree with this statement. I don’t think “silly” captures what Microsoft is attempting to do. In my experience, Microsoft is a company that bet on the AI revolution. That turned into a cost sinkhole. Then AI just became available. Suddenly Microsoft has to flog its business customers to embrace not just Azure, Teams, and PowerPoint. Microsoft has to make it so users of these services have to do Copilot.

Take your medicine, Stevie. Just like my mother’s approach to giving me cough medicine. Take your medicine or I will nag you to your grave. My mother haunting me for the rest of my life was a bummer thought. Now I have the Copilot thing. Yikes, I have to take my Copilot medicines whether I want to or not. That’s not “silly.” This is desperation. This is a threat. This is a signal that MBA think has given common sense a pink slip.

Stephen E Arnold, January 6, 2026

AlphaTON: Tactical Brilliance or Bumbling?

January 6, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


A few days ago, I published on my “Telegram Notes” page a story about some fancy dancing. I thought the insights into strategic thinking and tactical moves might be of interest to those who follow Beyond Search and its information on social media like the estimable LinkedIn.

What caught my attention? Telegram, again. Pavel Durov is headed for a trial in France. He’s facing about a baker’s dozen charges related to admirable activities like ignoring legitimate requests for information about terrorism, a little bit of kiddie video excitement, and the more mundane allegations related to financial fancy dancing.

This means that although other nation states could send a diplomatic note to the French government, that is unlikely. Thus, some casual conversations may be held, but the wheels of French justice, which rolled like tumbrils during the French Revolution, are grinding forward. The outcome is difficult to predict. If you have been in France, you know how some expected things become “quelle surprise.” And fast.

Not surprisingly, the activity related to Telegram has been stepped up. Nikolai Durov has apparently come out of his cocoon to allow Pavel, his GOAT (greatest of all time) brother, to say:

It happened. Our decentralized confidential compute network, Cocoon, is live. The first AI requests from users are now being processed by Cocoon with 100% confidentiality. GPU owners are already earning TON.

Then a number of interesting things happened.

First, there was joy among Telegram true believers who had wondered why the GOAT and his two-Ph.D.-toting brother were acting like Tim Apple and its non-AI initiative. Telegram was doing AI as a distributed service. Yep, AI mining along with other types of digital mining. Instead of buying scarce and expensive hardware, Nikolai ideated a method that would use other people’s GPUs. Do some work for Telegram and get paid in TONcoin. Yep, that’s the currency completely and totally under the control of the independent TON Foundation. Yep, completely separate.

Second, there was some executive shuffling at the TON Foundation. You know that this outfit is totally, 100 percent separate from Telegram and now has responsibility for the so-called TON blockchain technology and the marketing of all things Telegram. Manuel (Manny) Stotz, a German financial wizard, left his job at the TON Foundation and became the president of TON Strategy Company. I think he convinced a person with a very low profile named Veronika Kapustina to help manage the new public company. TON Strategy has a tie-up with Manny’s own Kingsway Capital and possibly with Manny’s other outfit Koenigsweg Holding. (Did you know that Koenigsweg means Kingsway in German?)

Is it possible for a pop-up company to offer open market visitors a deal on hot TONcoins? I don’t know. I do know that Qwen did a good enough job on this illustration, however.

Third, there was the beep beep Road Runner acceleration of AlphaTON Capital. Like TON Strategy Company (which rose like a phoenix from the smoking shell of VERB Technology), AlphaTON popped into existence about four months ago. Like TON Strategy, it sported a NASDAQ listing, under the ticker symbol ATON. Some Russians might remember the famous or infamous ATON Bank affair. (I wonder if someone was genuinely clueless about the ATON ticker symbol’s metaphorical association or just having a bit of fun on the clueless, tightly leashed “senior managers” of AlphaTON.) I thought I heard a hooray when AlphaTON was linked to the very interesting high frequency trading outfit DWF MaaS. No CFO or controller was needed when the company appeared like a pop-up store in the Mall of America. A person with an interesting background would be in charge of AlphaTON’s money. For those eager to input one type of currency and trade it for another, the DWF MaaS outfit and its compatriots in Switzerland could do the job.

But what happened to these beep beep Road Runner moves?

Answer: TONcoin value went down. TON Strategy Company share price went down. AlphaTON share price cratered. On January 2, one AlphaTON share was about US$0.77. That is just an 85 percent drop since the pop-up distributed mining company came into existence by morphing from a cancer-fighting company to an AI mining outfit.

In the midst of these financial flameouts, Manny had to do some fast dancing for the US SEC because he did not do the required shareholder vote process before making some money moves. Then, a couple of weeks later, the skilled senior managers of AlphaTON announced a deal with the high flying private company Anduril. But there was one small problem. Anduril came out and said, “AlphaTON is not telling the truth.” The blue-chip thinkers at AlphaTON had to issue a statement to the effect that it was acting like an AI system and hallucinating. There was no deal with Anduril.

Then the big news was AlphaTON’s cutting ties with DWF MaaS, distancing itself from the innovative owner of DWF MaaS, and paying DWF MaaS an alleged US$15 million to just go away pronto.

Where is AlphaTON now?

That’s a good question. I think 2026 will allow AlphaTON to demonstrate its business acumen. Personally, I hope that information becomes available to answer these questions:

- Was AlphaTON’s capitalization at US$420.69 million a joke like ATON as the ticker symbol?

- What’s the linkage among RSV Capital (Canada), sources of money in Russia, and Telegram’s TONcoin (which is totally under the control of the completely separate TON Foundation)?

- What does Enzo Villani know about mining artificial intelligence?

- What does Britany Kaiser know about mining artificial intelligence?

- What does Logan Ryan Golema, an egamer, know about building out AI data centers?

- How does AlphaTON plan to link financial services, cancer fighting, and AI mining into a profitable business in 2026?

If you want to read the post in Telegram Notes, click here.

Stephen E Arnold, January 6, 2026

Grok AI Hallucinates Less Than Any Other AI. Believe It or Not!

January 6, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


I had to put down my Telegram Notes project to capture the factoids in “Elon Musk’s Grok Records Lowest Hallucination Rate in AI Reliability Study.” Of course, I believe everything I read on the Internet. I am a veritable treasure trove of factoids about Elon Musk’s technologies; for example, full self driving, the trade in value of a used Tesla Model S, and the launch date for a trip to Mars. I am so ready.

The AI system tells the programmers, “Yes, you can use AI to predict which of the egame players are most likely to have access to a parent’s credit card. This card can be used to purchase tokens or crypto to enter higher-stake gambling games. Does that help?” Thanks, Qwen. Too bad you weren’t in the selection of AI systems tested by the wizards at Relum.

The write up presents information from gold-standard sources too; for instance, the casino games aggregation company Relum. And what did this estimable company’s research reveal? Consider these factoids:

- Grok’s hallucination “rate” is just eight percent. Out of 100 prompts / queries, Grok goes off the rails only eight times. This is outstanding. Everyone wants such a low rate of hallucination. Exceptions may apply for some nitpickers.

- The worst LLMs in the hallucination rate category are ChatGPT and Google Gemini. These outfits make up information more than one third of the time. That’s not too bad if you are planning on selling ads. The idea is “prompt relaxation.” The more relaxed, the wider the net for allegedly relevant ads. More ads yield more revenue. Maybe there is more to making up answers than meets the eye. I am okay with ChatGPT and Google competing for the most hallucinogenic crown. Have at it, folks.

- Deepseek, the Chinese freebie, hallucinates only 14 percent of the time. Way to go, Chinese political strategists. (Qwen’s hallucination rate was not reported in the article.) By the way, Qwen is one of the models Pavel Durov’s Telegram will allegedly rely upon to translate Messenger content and perform other magical functions for the TONcoin pivot. Note the word “magical.” Two public companies listed on NASDAQ in six months. As I said, “Magic.”

Here’s a quote from the gambling company’s chief product officer. Obviously this individual is an expert in the field of machine learning, neural networks, matrix transforms, and the other bits and bobs of building smart software. Here’s the statement:

About 65% of US companies now use AI chatbots in their daily work, and nearly 45% of employees admit they’ve shared sensitive company information with these tools. These numbers show well how important chatbots have become in everyday work.

Absolutely. When one runs Windows, the user is “using” smart software. When one uses Google, AI is there. These market winners are moving forward, greased on wheels of fabricated output. Yeah, great.

Several observations:

- Grok seems unaware of messages posted on X.com. I wonder why.

- A bad actor has to sign up to access the Grok API and the X.com API in order to pull off some slick AI-based cyber activities. I wonder why.

- Grok’s appeal to online gaming companies is interesting. I wonder why.

I have no answers. Relum does. These data do not reassure me about Mr. Musk’s business tactics for building Grok’s market footprint.

Stephen E Arnold, January 6, 2026

A Revised AI Glossary for 2026

January 5, 2026

![green-dino_thumb_thumb[3]_thumb_thumb green-dino_thumb_thumb[3]_thumb_thumb](https://www.arnoldit.com/wordpress/wp-content/uploads/2026/01/green-dino_thumb_thumb3_thumb_thumb_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


I have a lot to do. I spotted this article: “ChatGPT Glossary: 61 AI Terms Everyone Should Know.” I read the list and the definitions. I have decided to invest a bit of time to de-obfuscate this selection of verbal gymnastics. My hunch is that few will be amused. I, however, find this type of exercise very entertaining. On the other hand, my reframing of this “everyone should know” stuff reflects on my role as an addled dinobaby limping around rural Kentucky.

Herewith my recasting of the “everyone should know” list. Remember. Everyone means everyone. That’s a categorical affirmative, and these assertions trigger me.

Artificial general intelligence. Sci-fi craziness from “we can rule the world” wizards

Agentive. You, human, don’t do this stuff anymore.

AI ethics. Anything goes, bros.

AI psychosis. It’s software and it’s driving you nuts.

AI safety. Sure, only if something does not make money or abrogate our control

Algorithms. Numerical recipes explained in classes normal people do not take

Alignment. Weaponizing models and information outputs

Anthropomorphism. Sure, fall in love with outputs. We don’t really care. Just click on the sponsored content.

Artificial intelligence. Code words for baloney and raising money

Autonomous agents. Stay home and make stuff to sell on Etsy

Bias. Our way is the only way

Chatbot. Talk to our model, pal

Claude. An example of tech bro-loney

Cognitive computing. A librarian consultant’s contribution to gibberish

Data augmentation. Indexing

Dataset. Anything an AI outfit can grab and process

Deep learning. Pretending to be smart

Diffusion. Moral dissipation and hot gas

Emergent behavior. Shameless rip-off of the Santa Fe Institute and Stuart Kauffman

End-to-end learning. Update models instead of retraining them

Ethical considerations. Pontifical statements or “Those are my ethical principles, and if you don’t like them… well, I have others.”

Foom. GenZ’s spelling of the Road Runner’s cartoon beep beep

Generative adversarial network. Jargon fog for inputs along the way to an output

Generative AI. Reason to fire writers and PR people

Google Gemini. An example of tech bro-loney from an ad sales outfit

Guardrails. Stuff to minimize suicides, lawsuits, and the proliferation of chemical weapons

Hallucination. Errors

Inference. Guesses

LLM. Today’s version of LSMFT

Machine learning. Math from half century ago

Microsoft Bing. Beats the heck out of me

Multimodal AI. A fancy way to say words, sound, pix, and video to help un-employ humans who did this type of work

Natural language processing. Software that understands William Carlos Williams’ poetry

Neural network. Lots of probability and human-fiddled thresholds

Open weights. You can put your finger on the scale too

Overfitting. Baloney about hallucinations, being wrong, and helping kids commit de-living

Paperclips. Less sexy than The Terminator but loved by tech bros who like the 1999 film Office Space

Parameters. Where you put your finger on the scale to fiddle outputs

Perplexity. Another example of tech bro-loney

Prompt. A query

Prompt chaining. Related queries fed into the baloney machine

Prompt engineering. Hunting for words and phrases to output pornography, instructions for making poison gas, and ways to defraud elders online

Prompt injection. Pressing enter after prompt engineering

Quantization. Jargon to say, “We won’t need so much money now, Mr. Venture Bankman”

Slop. Outputs from smart software

Sora. Lights, camera, you’re fired. Cut.

Stochastic parrot. A bound phrase that allowed Google to give Timnit Gebru a chance to find her future elsewhere

Style transfer. You too can generate a sketch in the style of Max Ernst and a Batman comic book

Sycophancy. AI models emulate new hires at McKinsey & Company

Synthetic data. Hey, we just fabricate data. No copyright problems, right?

Temperature. A fancy way to explain twiddling with parameters

Text-to-image generation. Artists. Who needs them?

Tokens. n-grams but to crypto dudes it’s value

Training data. Copyright protected information, personally identifiable information, and confidential inputs in any format, even the synthetic made up stuff

Transformer model. A reason for Google leadership to ask, “Why did we release this to open source?”

Turing test. Do you love me? Of course, the system does. Now read the sponsored content

Unsupervised learning. Automated theft of training data

Weak AI (narrow AI). A model trained on a bounded data set, not whatever the AI company can suck down

Zero-shot learning. A stepping stone to artificial intelligence able to do more than any miserable human

I love smart software.

Oh, the cited source leaves out OpenAI’s ChatGPT. This means “Titanic” after the iceberg.

Stephen E Arnold, January 5, 2026

Microsoft AI PR and PC Gamer: Really?!

January 5, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


I was poking around in online gaming magazines. I was looking for some information about a “former gamer” named Logan Ryan Golema. He allegedly jumped from the lucrative world of eGames to become chief technical officer of a company building AI data centers for distributed AI gaming. I did not find the interesting Mr. Golema, who has moved around, with locations in the US, the Cayman Islands, and Portugal.

No Mr. Golema, but I did spot a write up with the interesting “gamer” title “Microsoft CEO Satya Nadella Says It’s Time to Stop Talking about AI Slop and Start Talking about a Theory of the Mind That Accounts for Humans Being Equipped with These New Cognitive Amplifier Tools.”

Wow, from AI slop to “new cognitive amplifier tools.” Remarkable. I have concluded that the Microsoft AI PR push for Copilot has achieved the public relations equivalent of climbing the Jirishanca mountain in Peru. Well done!

Thanks, Qwen. Good enough for AI output.

And what did the PC Gamer write up report as compelling AI news requiring a 34-word headline?

I noted this passage:

Nadella listed three key points the AI industry needs to focus on going forward, the first of which is developing a “new concept” of AI that builds upon the “bicycles for the mind” theory put forth by Steve Jobs in the early days of personal computing. “What matters is not the power of any given model, but how people choose to apply it to achieve their goals,” Nadella wrote. “We need to get beyond the arguments of slop vs. sophistication and develop a new equilibrium in terms of our ‘theory of the mind’ that accounts for humans being equipped with these new cognitive amplifier tools as we relate to each other. This is the product design question we need to debate and answer.”

“Bicycles for the mind” is not the fingerprint of Copilot, a dearly loved and revered AI system. “Bicycles for the mind” is the product of small meetings of great minds. The brilliance of the metaphor exceeds the cost of the educations of the participants in these meetings. Are the attendees bicycle riders? Yes. Do they depend on AI? Yes, yes, of course. Do they believe that regular people can “get beyond” slop versus sophistication?

Probably not. But these folks get paid, and they can demonstrate interest, enthusiasm, and corporatized creativity. Energized by Teams meetings. Invigorated with Word plus Copilot and magnetized by the wonder of hallucinating data in Excel: these people believe in AI.

PC Gamer points out:

There’s a lot of jargon and bafflegab in Nadella’s post, as you might expect from a CEO who really needs to sell this stuff to someone, but what I find more interesting is the sense that he’s hedging a bit. Microsoft has sunk tens of billions of dollars into its pursuit of an AI panacea and expressed outright bafflement that people don’t get how awesome it all is (even though, y’know, it’s pretty obvious), and the chief result of that effort is hot garbage and angry Windows users.

Yep, bafflegab. I think this is the equivalent of cow output, but I am not sure. I am sure about that 34-word headline. I would have titled the essay “Softie Spews Bafflegab.” But I am a dinobaby. What do I know? How about AI output is not slop?

Stephen E Arnold, January 5, 2026

Telegram Notes: Is ATON an Inside Joke?

January 2, 2026

![green-dino_thumb_thumb[3]_thumb green-dino_thumb_thumb[3]_thumb](https://www.arnoldit.com/wordpress/wp-content/uploads/2026/01/green-dino_thumb_thumb3_thumb_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


On New Year’s Day, I continued to sift through my handwritten notecards. (I am a dinobaby. No Obsidian for me!) I came across a couple of items that I thought were funny or at the least “inside jokes” related to the beep beep Road Runner spin of a company involved (allegedly) in pharma research into a distributed AI mining company. Yeah, I am not sure what that means either. I did notice a link to an outfit with a confusing deal to manage the company named AlphaTON, captained by a former Cambridge Analytica professional, and featuring a semi-famous person in Moscow named Yuri Mitin of the Red Shark Ventures outfit.

Several financial wizards, eager to tap into US financial markets, toss around names for the NASDAQ’s official ticker symbol. Thanks, Venice.ai. Good enough.

What’s the joke? I am not sure you will laugh out loud, but I know of a couple of people who would slap their knee and chortle.

Joke 1: The ticker symbol for the beep beep outfit is ATON. That is the “name” of a famous or infamous Russian bank. Naming a company listed on the US NASDAQ as ATON is a bit like using the letters CIA or NSA on a company listed on the MOEX.

Joke 2: The name “Red Shark” suggests an intelligence operation; for example, there was an Israeli start up called “Sixgill.” That’s a shark reference too. Quite a knee slapper because the “old” Red Shark Ventures is now RSV Capital, and it has a semi luminary in Moscow’s financial world as its chief business development officer. Yuri Mitin. Do you have tears in your eyes yet?

Joke 3: AlphaTON’s SEC S-3/F-3 shelf registration was for this dollar amount: US$420.69 million. If you are into the cannabis world, “420” means either partaking of cannabis at 4:20 pm each afternoon or holding a protest on April 20th each year. Don’t feel bad. I did not get this either, but Perplexity was able to explain this in great detail. And the “69” is supposed to be a signal to crypto bros that this stock is going to be a roller coaster. I think “69” has another slang reference, but I don’t recall that. You may, however.

Joke 4: The name Alpha may be a favorite of the financial wizard Enzo Villani involved with AlphaTON, but one of my long time clients (a former CIA operations officer) told me that “A” was an easy way to refer to those entertaining people in the Russian Spetsgruppa “A”, the FSB’s elite counter-terrorism unit.

Whether these are making you laugh, I don’t know. I wonder if these references are a coincidence. Probably not. AlphaTON, supporting the Telegram TON Foundation’s AI initiative, is just a coincidence. I would hazard a guess that a fellow named Andrei Grachev understands the attempts at humor. The wet blanket is the stock price and the potential for some turbulence.

Financial wizards engaged in crypto centric activities do have a sense of humor — until they lose money. Those two empty chairs in the cartoon suggest people have left the meeting.

PS. The Telegram Notes information service is beginning to take shape. If you want more information about our coverage of Telegram, the TON Foundation, TON Strategy Company, and AlphaTON, just write kentmaxwell at proton dot me.

Stephen E Arnold, January 2, 2026

Telegram Notes: AI Slop Analyzes TON Strategy and Demonstrates Shortcomings

January 1, 2026

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/12/green-dino_thumb_thumb3_thumb-2.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


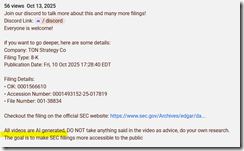

If you want to get a glimpse of AI financial analysis, navigate to “My Take on TON Strategy’s NASDAQ Listing Warning.” The video is ascribed to Corporate Decoder. But there is no “my” in the old-fashioned humanoid sense. Click on the “more” link in the YouTube video, and you will see this statement:

The highlight points to the phrase, “All videos are AI generated…”

The six minute video does not point out some of the interesting facets of the Telegram / TON Foundation inspired “beep beep zoom” approach to getting a “rules observing listing” on the US NASDAQ. Yep, beep beep. Road Runner-inspired public listings with an alleged decade of history. I find this fascinating.

The video calls attention to superficial aspects of the beep beep Road Runner company spun up in a matter of weeks in late 2025. The company is TON Strategy captained by Manny Stotz, formerly the president or CEO or temporary top dog at the TON Foundation. That’s the outfit Pavel Durov thought would be a great way to convert GRAMcoin into TONcoin, gift the Foundation with the TON blockchain technology, and allow the Foundation to handle the marketing. (Telegram itself does no marketing except leveraging the image of Pavel Durov, the self-proclaimed GOAT of Russian technology culture.) In addition to being the GOAT, Mr. Durov is reporting to his nanny in the French judiciary as he awaits a criminal trial on nine or ten serious offenses. But who is counting?

What does the AI video do other than demonstrate that YouTube does not make its AI labels easy to spot?

The video does not provide any insight into some questions my team and I had about TON Strategy Company, its executive chairperson Manuel “Manny” Stotz, or the $400 million plus raised to get the outfit afloat. The video does not call attention to the presence of some big, legitimate brands in the world of crypto like Blockchain.com.

The video tries to explain that the firm is trying to become an asset outfit like Michael Saylor’s Strategy Company. But the key difference is not pointed out; that is, Mr. Saylor bet on Bitcoin. Mr. Stotz is all in on TONcoin. He believes in TONcoin. He wanted to move fast. He is going to have to work hard to overcome what might be some modest potholes as his crypto vehicle chugs along the Information Highway.

The first crack in the asphalt is the TONcoin itself. Mr. Stotz “bought” TONcoins at about a value point of $5.00. That was several weeks ago. Those same TONcoins can be had for $1.62 at about noon on December 31, 2025. Source you ask? Okay, here’s the service I consulted: https://www.tradingview.com/symbols/TONUSD/

The second little dent in the road is the price of the TON Strategy Company’s NASDAQ stock. At about noon on December 31, 2025, it was going for $2.00 a share. What did the TONX stock cost in September 2025? According to Google Finance it was in the $21.00 range. Is this a problem? Probably not for Mr. Stotz because his Kingsway Capital is separate from TON Strategy Company. Plus, Kingsway Capital is separate from its “owner” Koenigsweg Holdings. Will someone care if TONX gets delisted? Yep, but I am not prepared to talk about the outfits who have an interest in Koenigsweg and Kingsway. My hunch is that a couple of these outfits may want to take a ride with Manny to talk, to share ideas, and to make sure everyone is on the same page. In Russian, Google Translate says this sequence of words might pop up during the ride: ?? ?????????, ??????
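For readers who want the size of those two potholes as single numbers, here is a small Python sketch that turns the approximate prices quoted above (about $5.00 to $1.62 for TONcoin, about $21.00 to $2.00 for TONX) into percentage declines. The function name is mine, and the inputs are the rough snapshots cited in this post, not exact trade prices.

```python
def percent_drop(before: float, after: float) -> float:
    """Percentage decline from `before` to `after` (positive means the price fell)."""
    if before <= 0:
        raise ValueError("starting price must be positive")
    return (before - after) / before * 100


# Approximate figures as reported in the post.
snapshots = [
    ("TONcoin", 5.00, 1.62),      # Stotz's purchase level vs. noon, Dec. 31, 2025
    ("TONX shares", 21.00, 2.00), # September 2025 vs. noon, Dec. 31, 2025
]

for label, start, end in snapshots:
    print(f"{label}: down {percent_drop(start, end):.1f} percent")
```

On those inputs, the sketch reports a TONcoin decline of roughly 68 percent and a TONX decline of roughly 90 percent.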

What are some questions the AI system did not address? Here are a few:

- How does the current market value of TONcoin affect the information in the SEC document the AI analyzed?

- Where do these companies fit into the TON Strategy outfit? Why the beep beep approach when two established outfits like Kingsway Capital and Koenigsweg Holdings could have handled the deal?

- What connections exist between the TON Foundation and Mr. Stotz? What connection does the deal have to the TON Foundation’s ardent supporter, DWF Labs (a crypto market maker of repute)?

- Who is president of the TON Strategy Company? What is the address of the company for the SEC document? Where does Veronika Kapustina reside in the United States? Why do Rory Cutaia, Veronika Kapustina, and TON Strategy Company share a residential address in Las Vegas?

- What role does Sarah Olsen, a former Rockefeller financial analyst, play in the company from her home, possibly in Miami, Florida?

- What is the Plan B if the VERB-to-TON Strategy Company continues to suffer from the down market in crypto? What will outfits like DWF Labs do? What will the TON Foundation do? What will Pavel Durov, the GOAT, do in addition to waiting for the big, sluggish wheels of the French judicial system to grind forward?

The AI did not probe like an over-achieving MBA at an investment firm would do to keep her job. Nope. Hit the pause switch and use whatever the AI system generates. Good enough, right?

What does this AI generated video reveal about smart software playing the role of a human analyst? Our view is:

- Quick and sloppy content

- Failure to chase obvious managerial and financial loose ends

- Ignoring obvious questions about how a “sophisticated” pivot can garner two notes from Mother SEC in a couple of weeks.

Net net: AI is not ready for some types of intellectual work.

Want more Telegram Notes content? More information about our Telegram-related information service will be available in the new year.

Stephen E Arnold, January 1, 2026

Software in 2026: Whoa, Nellie! You Lame, Girl?

December 31, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

When there were Baby Bells, I got an email from a person who had worked with me on a book. That person told me that one of the Baby Bells wanted to figure out how to do their Yellow Pages as an online service. The problem, as I recall my contact's saying, was "not to screw up the ad sales in the dead tree version of the Yellow Pages." I won't go into the details about the complexities of this project. However, my knowledge of how the telephone world was supposed to work before and after Judge Greene, plus some on-site experience creating software for what did business as Bell Communications Research, gave me some basic information about the land of Bell Heads. (If you don't get the reference, that's okay. It's an insider metaphor but less memorable than Achilles' heel.)

A young whiz kid from a big name technology school has a real-life learning experience. Programming the IBM MVS TSO set up is different from vibe coding an app from a college dorm room. Thanks, Qwen, good enough.

The point of my reference to a Baby Bell was that a newly minted stand alone telecommunications company ran on what was a pretty standard line up of IBMs and B4s (designed by Bell Labs, the precursor to Bellcore) plus some DECs, Wangs, and other machines. The big stuff ran on the IBM machines, with proprietary, AT&T-specific applications on the B4s. If you are following along, you might have concluded that slapping a Yellow Pages Web application into the Baby Bell system was easy to talk about but difficult to do. We did the job using my old trick. I am a wrapper guy. Figure out what's needed to run a Yellow Pages site online, what data are needed, where to get them, and where to put them. Then build a nice little Web set up and pass data back and forth via what I call wrapper scripts and code. The benefit of the approach was that I did not have to screw around with the software used to make a Baby Bell actually work. When the Web site went down, no meetings were needed with the Regional Manager who had many eyeballs trained on my small team. Nope, we just fixed the Web site and kept on doing Yellow Pages things. The solution worked. The print sales people could use the Web site to place orders or allow the customer to place orders. Open the valve to the IBM and B4s, push in data just the way these systems wanted it, and close the valve. Hooray.

Why didn't my team just code up the Web stuff and run it on one of those IBM MVS TSO gizmos? The answer appears, quite surprisingly, in a blog post published December 24, 2025. I never wrote about the "why" of my approach. I assumed everyone with some Young Pioneer T-shirts knew the answer. Guess not. "Nobody Knows How Large Software Products Work" provides the information that I believed every 23 year old computer whiz kid knew.

The write up says:

Software is hard. Large software products are prohibitively complicated.

I know that the folks at Google now understand why I made cautious observations about the complexity of building interlocking systems without the type of management controls that existed at the pre-breakup AT&T. Google was very proud of its indexing, its 150 plus signal scores for Web sites, and yada yada. I just said, "Those new initiatives may be difficult to manage." No one cared. I was an old person and a rental. Who really cares about a dinobaby living in rural Kentucky? Google is the new AT&T, but it lacks the old AT&T's discipline.

Back to the write up. The cited article says:

Why can’t you just document the interactions once when you’re building each new feature? I think this could work in theory, with a lot of effort and top-down support, but in practice it’s just really hard….The core problem is that the system is rapidly changing as you try to document it.

This is an accurate statement. AT&T's technical regional managers demanded commented code. Were the comments helpful? Sometimes. The reality is that one learns about the cute little workarounds required for software that can spit out the PIX (plant information exchange data) for a specific range of dialing codes. Google does some fancy things with ads. AT&T in the pre-Judge Greene era did some fancy things for the classified and unclassified telephone systems for every US government entity, commercial enterprises, and individual phones and devices for the US and international "partners."

What does this mean? In simple terms, one does not dive into a B4 running the proprietary Yellow Pages data management system and start trying to read and write in real time from a dial-up modem in some long lost corner of a US state with a couple of mega cities, some military bases, and the national laboratory.

One uses wrappers. Period. Screw up with a single character and bad things happen. One does not try to reconstruct what the original programming team actually did to make the PIX system “work.”

The write up says something that few realize in this era of vibe coding and AI output from some wonderful system like Claude:

It’s easier to write software than to explain it.

Yep, this is actual factual. The write up states:

Large software systems are very poorly understood, even by the people most in a position to understand them. Even really basic questions about what the software does often require research to answer. And once you do have a solid answer, it may not be solid for long – each change to a codebase can introduce nuances and exceptions, so you’ve often got to go research the same question multiple times. Because of all this, the ability to accurately answer questions about large software systems is extremely valuable.

Several observations are warranted:

- One gets a "feel" for how certain large, complex systems work. I have, prior to my retiring, had numerous interactions with young wizards. Most were job hoppers or little entrepreneurs eager to poke their noses out of the cocoon of a regular job. I am not sure if these people have the ability to develop a "feel" for a large complex of code like the one the old AT&T had assembled. These folks know their code, I assume. But do they understand the stuff running on a machine lost in the mists of time? Probably not. I am not sure AI will be much help either.

- The people running some of the companies creating fancy new systems are even more divorced from the reality of making something work and how to keep it going. Hence, the problems with computer systems at airlines, hospitals, and — I hate to say it — government agencies. These problems will only increase, and I don’t see an easy fix. One sure can’t rely on ChatGPT, Gemini, or Grok.

- The push to make AI your coding partner is sort of okay. But the old-fashioned way involved a young person like myself working side by side with expert DEC people or IBM professionals, not AI. What one learns is not how to do something but how not to do something. Anyone, including a software robot, can look up an instruction in a manual. But, so far, only a human can get a "sense" or "hunch" or "intuition" about a box with some flashing lights running something called CB Unix. There is, in my opinion, a one way ticket down the sliding board to system failure with the 2025 approach to most software. Think about that the next time you board an airplane or head to the hospital for heart surgery.

Net net: Software excitement ahead. And that’s a prediction for 2026. I have a high level of confidence in this peek at the horizon.

Stephen E Arnold, December 31, 2025

Consultants, the Sky Is Falling. The Sky Is Falling But Billing Continues

December 30, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Have you ever heard of a person with minimal social media footprints named Veronika Kapustina? I sure hadn’t. She popped up as a chief executive officer of an instant mashed potato-type of company named TON Strategy Company. I was listening to her Russian-accented presentation at a crypto conference via YouTube. My mobile buzzed and I saw a link from one of my team to this story: “Why the McKinsey Layoffs Are a Warning Signal for Consulting in the AI Age.”

Yep, the sky is falling, the sky is falling. As an 81 year old dinobaby, I recall my mother reading me a book in which a cartoon character was saying, “The sky is falling.” News flash: The sky is still there, and I would wager a devalued US$1.00 that the sky will be “there” tomorrow.

This sort of strange write up asserts:

While the digital age reduced information asymmetry, the AI age goes further. It increasingly equalizes analytical and recommendation capabilities.

The author is allegedly a former blue chip consultant. Therefore, this person knows about that which he opines. The main point is that consulting firms may not need to hire freshly credentialed MBAs. The grunt work can be performed by an AI system. True, most AI systems make up information; estimates put the fabricated share at between 10 and 33 percent of outputs. I suppose that's about par for an MBA whose father is a United States senator or the CEO of a client of the blue chip firm. Maybe the progeny of the powerful are just smarter than an AI. I don't know. I am a dinobaby, and it has been decades since I worked at a blue chip outfit.

What pulled me away from looking for information about the glib Russian, Veronika K? That's an easy question. I think the write up misses the entire point of a blue chip consulting firm and of some quite specialized consulting firms like the nuclear outfit that employed me before I jumped to the blue chip's carpetland.

The cited write up states as actual factual:

As the center of gravity shifts toward execution depth and the ability to drive continuous change, success will depend on how effectively firms rewire their DNA—building the operating model and talent engine required to implement and scale tech-led transformation.

Yep, this sounds wise. Success depends on clients “rewiring their DNA.” Okay, but what do consulting firms have to do? Those outfits have to rewire as well and that spells T-R-O-U-B-L-E for big outfits whether they do big think stuff like business strategy or financial engineering or for outfits that do semi-strategy and plumbing.

The hybrid teams from a blue chip consultant are poised to start work immediately. Rates are the same, but fewer humans means more profits. It helps that the intern's father is the owner of the business devastated by a natural disaster. Thanks, Qwen, good enough.

Let me provide a different view of the sky is falling notion.

- As stated, the sky is not falling. Change happens. Some outfits adapt; others don't. Hasta la vista, Arthur Andersen.

- Blue chip consulting firms (strategy or financial engineering) are like comfort food. The vast majority of engagements are follow on or repeat business. There are tactics in place to make this happen. Clients like to eat burgers and pizza. Consultants sell the knowledge-processed goodies.

- New hires don’t always do “real” work. New hires provide (hopefully) connections, knowledge not readily available to the firm, and the equivalent of Russia’s meat assaults. Hey, someone has to work on that study of world economic change due in 14 days.

- Clients hire consultants for many reasons; for example, to help get a colleague fired or sent to the office in Nome, Alaska; to have the prestige halo at the country club; to add respectability to what is a criminal enterprise (hello, Arthur Andersen. Remember Enron's special purpose vehicles? No just-graduated MBA thinks those up at a fraternity party, do they?)

Translating this to real world consulting impact means:

- Old line dinobaby consultants like me will grouse that AI is wrong and must be checked by someone who knows when the AI system is in hallucination mode and can fix the error before something bad like fentanyl happens

- Good enough AI will diffuse because AI is cheaper than humans who have to be managed (who wants to do that?), given health care, granted their undeserved and unpredictable vacation requests, and provided with a retirement account (who really wants to retire after 30 years at a blue chip consultant? I sure didn't. Get the experience and get out of Dodge).

- Consulting is like love, truth (really popular these days), justice, and the American way. These reference points mean that as more actual hands on work becomes a service, consulting, not software, consumes every revenue-generating function. If some big firms disappear, that's life.

Consulting is forever and someone will show up to buy up a number of firms in a niche and become the new big winner. The dinobaby blue chips will just coalesce into one big firm. At the outfit which employed me, we joked about how similar our counterparts were at other firms. We weren’t individuals. We were people who could snap in, learn quickly, and output words that made clients believe they had just learned something like e=mc^2. Others were happy to have that idiot in the Pittsburgh office sent to learn the many names of snow in Nome.

The sky is not falling. The sun is rising. A billable day arrives for the foreseeable future.

Stephen E Arnold, December 30, 2025

Is This Correct? Google Sues to Protect Copyright

December 30, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

This headline stopped me in my tracks: "Google Lawsuit Says Data Scraping Company Uses Fake Searches to Steal Web Content." The way my dinobaby brain works, I expect to see a publisher putting the world's largest online advertising outfit in the crosshairs. But I trust Thomson Reuters because they tell me they are the trust outfit.

Google apparently cannot stop a third party from scraping content from its Web site. Is this SEO outfit operating at a level of sophistication beyond the ken of Mandiant, Gemini, and the third-party cyber tools the online giant has? Must be I guess. Thanks, Venice.ai. Good enough.

The alleged copyright violator in this case seems to be one of those estimable, highly professional firms engaged in search engine optimization. Those are the folks Google once saw as helpful to the sale of advertising. After all, if a Web site is not in a Google search result, that Web site does not exist. Therefore, to get traffic or clicks, the Web site “owner” can buy Google ads and, of course, make the Web site Google compliant. Maybe the estimable SEO professional will continue to fiddle and doctor words in a tireless quest to eliminate the notion of relevance in Google search results.

Now an SEO outfit is on the wrong side of what Google sees as the law. The write up says:

Google on Friday [December 19, 2025] sued a Texas company that "scrapes" data from online search results, alleging it uses hundreds of millions of fake Google search requests to access copyrighted material and "take it for free at an astonishing scale." The lawsuit against SerpApi, filed in federal court in California, said the company bypassed Google's data protections to steal the content and sell it to third parties.

To be honest, the phrase "astonishing scale" struck me as somewhat amusing. Google itself operates on "astonishing scale." But what is good for the goose is obviously not good for the gander.

I asked You.com to provide some examples of people suing Google for alleged copyright violations. The AI spit out a laundry list. Here are four I sort of remembered:

- News Outlets & Authors v. Google (AI Training Copyright Cases)

- Google Users v. Google LLC (Privacy/Data Class Action with Copyright Claims)

- Advertisers v. Google LLC (Advertising Content Class Action)

- Oracle America, Inc. v. Google LLC

My thought is that with some experience in copyright litigation, Google is probably confident that the SEO outfit broke the law. I wanted to word it “broke the law which suits Google” but I am not sure that is clear.

Okay, which company will "win"? An SEO firm with resources slightly less robust than Google's or Google?

Place your bet on one of the online gambling sites advertising everywhere at this time. Oh, Google permits online gambling ads in areas allowing gambling and with appropriate certifications, licenses, and compliance functions.

I am not sure what to make of this because Google’s ability to filter, its smart software, and its security procedures obviously are either insufficient, don’t work, or are full of exploitable gaps.

Stephen E Arnold, December 30, 2025