Oracle: Pricked by a Rose and Still Bleeding

April 15, 2025

How disappointing. DoublePulsar documents a senior tech giant’s duplicity in “Oracle Attempt to Hide Serious Cybersecurity Incident from Customers in Oracle SaaS Service.” Blogger Kevin Beaumont cites reporting by Bleeping Computer as he tells us someone going by rose87168 announced in March that they had breached certain Oracle services. The hacker offered to remove individual companies’ data for a price. They also invited Oracle to email them to discuss the matter. The company, however, immediately denied there had been a breach. It should know better by now.

Rose87168 responded by releasing evidence of the breach, piece by piece. For example, they shared a recording of an internal Oracle meeting, with details later verified by Bleeping Computer and Hudson Rock. They also shared the code for Oracle configuration files, which proved to be current. Beaumont writes:

“In data released to a journalist for validation, it has now become 100% clear to me that there has been cybersecurity incident at Oracle, involving systems which processed customer data. … All the systems impacted are directly managed by Oracle. Some of the data provided to journalists is current, too. This is a serious cybersecurity incident which impacts customers, in a platform managed by Oracle. Oracle are attempting to wordsmith statements around Oracle Cloud and use very specific words to avoid responsibility. This is not okay. Oracle need to clearly, openly and publicly communicate what happened, how it impacts customers, and what they’re doing about it. This is a matter of trust and responsibility. Step up, Oracle — or customers should start stepping off.”

In an update to the original post, Beaumont notes some linguistic sleight of hand employed by the company:

“Oracle rebadged old Oracle Cloud services to be Oracle Classic. Oracle Classic has the security incident. Oracle are denying it on ‘Oracle Cloud’ by using this scope — but it’s still Oracle cloud services that Oracle manage. That’s part of the wordplay.”

However, it seems the firm has finally admitted to at least some users that the breach was real. Just not in black and white. We learn:

“Multiple Oracle cloud customers have reached out to me to say Oracle have now confirmed a breach of their services. They are only doing so verbally, they will not write anything down, so they’re setting up meetings with large customers who query. This is similar behavior to the breach of medical PII in the ongoing breach at Oracle Health, where they will only provide details verbally and not in writing.”

So much for transparency. Beaumont pledges to keep investigating the breach and Oracle’s response to it. He invites us to follow his Mastodon account for updates.

Cynthia Murrell, April 15, 2025

The EU Rains on the US Cloud Parade

March 3, 2025

At least one European has caught on. Dutch blogger Bert Hubert is sounding the alarm to his fellow Europeans in the post, "It Is No Longer Safe to Move Our Governments and Societies to US Clouds." Governments and organizations across Europe have been transitioning systems to American cloud providers for reasons of cost and ease of use. Hubert implores them to prioritize security instead. He writes:

"We now have the bizarre situation that anyone with any sense can see that America is no longer a reliable partner, and that the entire large-scale US business world bows to Trump’s dictatorial will, but we STILL are doing everything we can to transfer entire governments and most of our own businesses to their clouds. Not only is it scary to have all your data available to US spying, it is also a huge risk for your business/government continuity. From now on, all our business processes can be brought to a halt with the push of a button in the US. And not only will everything then stop, will we ever get our data back? Or are we being held hostage? This is not a theoretical scenario, something like this has already happened."

US firms have been wildly successful in building reliance on their products around the world. So much so, we are told, that some officials would rather deny reality than switch to alternative systems. The post states:

"’Negotiating with reality’ is for example the letter three Dutch government ministers sent last week. Is it wise to report every applicant to your secret service directly to Google, just to get some statistics? The answer the government sent: even if we do that, we don’t, because ‘Google cannot see the IP address‘. This is complete nonsense of course, but it’s the kind of thing you tell yourself (or let others tell you) when you don’t want to face reality (or can’t)."

Though Hubert does not especially like Microsoft tools, for example, he admits Europeans are accustomed to them and have "become quite good at using them." But that is not enough reason to leave data vulnerable to "King Trump," he writes. Other options exist, even if they may require a bit of effort to implement. Security or convenience: pick one.

Cynthia Murrell, March 3, 2025

Researchers Raise Deepseek Security Concerns

February 25, 2025

What a shock. It seems there are some privacy concerns around Deepseek. We learn from the Boston Herald, “Researchers Link Deepseek’s Blockbuster Chatbot to Chinese Telecom Banned from Doing Business in US.” Byron Tau, formerly of the Wall Street Journal and now with the AP, writes:

“The website of the Chinese artificial intelligence company Deepseek, whose chatbot became the most downloaded app in the United States, has computer code that could send some user login information to a Chinese state-owned telecommunications company that has been barred from operating in the United States, security researchers say. The web login page of Deepseek’s chatbot contains heavily obfuscated computer script that when deciphered shows connections to computer infrastructure owned by China Mobile, a state-owned telecommunications company.”

If this is giving you déjà vu, dear reader, you are not alone. This scenario seems much like the uproar around TikTok and its Chinese parent company ByteDance. But it is actually worse. ByteDance’s direct connection to the Chinese government is, as of yet, merely hypothetical. China Mobile, on the other hand, is known to have direct ties to the Chinese military. We learn:

“The U.S. Federal Communications Commission unanimously denied China Mobile authority to operate in the United States in 2019, citing ‘substantial’ national security concerns about links between the company and the Chinese state. In 2021, the Biden administration also issued sanctions limiting the ability of Americans to invest in China Mobile after the Pentagon linked it to the Chinese military.”

It was Canadian cybersecurity firm Feroot Security that discovered the code. The AP then had the findings verified by two academic cybersecurity experts. Might similar code be found within TikTok? Possibly. But, as the article notes, the information users feed into Deepseek is a bit different from the data TikTok collects:

“Users are increasingly putting sensitive data into generative AI systems — everything from confidential business information to highly personal details about themselves. People are using generative AI systems for spell-checking, research and even highly personal queries and conversations. The data security risks of such technology are magnified when the platform is owned by a geopolitical adversary and could represent an intelligence goldmine for a country, experts warn.”

Interesting. But what about CapCut, the ByteDance video thing?

Cynthia Murrell, February 25, 2025

Google and Personnel Vetting: Careless?

February 20, 2025

No smart software required. This dinobaby works the old fashioned way.

The Sundar & Prabhakar Comedy Show pulled another gag. This one did not delight audiences the way Prabhakar’s AI presentation did, nor does it outdo Google’s recent smart software gaffe. It is, however, a bit of a hoot for an outfit with money, smart people, and smart software.

I read the decidedly non-humorous news release from the Department of Justice titled “Superseding Indictment Charges Chinese National in Relation to Alleged Plan to Steal Proprietary AI Technology.” The write up, dated February 4, 2025, states:

A federal grand jury returned a superseding indictment today charging Linwei Ding, also known as Leon Ding, 38, with seven counts of economic espionage and seven counts of theft of trade secrets in connection with an alleged plan to steal from Google LLC (Google) proprietary information related to AI technology. Ding was initially indicted in March 2024 on four counts of theft of trade secrets. The superseding indictment returned today describes seven categories of trade secrets stolen by Ding and charges Ding with seven counts of economic espionage and seven counts of theft of trade secrets.

Mr. Ding, obviously a Type A worker, appears to have been quite industrious at the Google. He was not working for the online advertising giant; he was working for another entity. The DoJ news release describes his setup this way:

While Ding was employed by Google, he secretly affiliated himself with two People’s Republic of China (PRC)-based technology companies. Around June 2022, Ding was in discussions to be the Chief Technology Officer for an early-stage technology company based in the PRC. By May 2023, Ding had founded his own technology company focused on AI and machine learning in the PRC and was acting as the company’s CEO.

What technology caught Mr. Ding’s eye? The write up reports:

Ding intended to benefit the PRC government by stealing trade secrets from Google. Ding allegedly stole technology relating to the hardware infrastructure and software platform that allows Google’s supercomputing data center to train and serve large AI models. The trade secrets contain detailed information about the architecture and functionality of Google’s Tensor Processing Unit (TPU) chips and systems and Google’s Graphics Processing Unit (GPU) systems, the software that allows the chips to communicate and execute tasks, and the software that orchestrates thousands of chips into a supercomputer capable of training and executing cutting-edge AI workloads. The trade secrets also pertain to Google’s custom-designed SmartNIC, a type of network interface card used to enhance Google’s GPU, high performance, and cloud networking products.

At least Mr. Ding validated the importance of some of Google’s sprawling technical insights. That’s a plus, I assume.

One of the more colorful items in the DoJ news release concerned “evidence.” The DoJ says:

As alleged, Ding circulated a PowerPoint presentation to employees of his technology company citing PRC national policies encouraging the development of the domestic AI industry. He also created a PowerPoint presentation containing an application to a PRC talent program based in Shanghai. The superseding indictment describes how PRC-sponsored talent programs incentivize individuals engaged in research and development outside the PRC to transmit that knowledge and research to the PRC in exchange for salaries, research funds, lab space, or other incentives. Ding’s application for the talent program stated that his company’s product “will help China to have computing power infrastructure capabilities that are on par with the international level.”

Mr. Ding did not use Google’s cloud-based presentation program. I found the explicit desire to “help China” interesting. How did Google’s Googley interview process, run by Googley people, fail to notice any indicators of Mr. Ding’s loyalties? Googlers are very confident of their Googliness, which obviously tolerates an insider threat who conveys data to a nation state known to be adversarial in its view of the United States.

I am a dinobaby, and I find this type of employee insider threat at Google surprising. Google bought Mandiant. Google has internal security tools. Google has a very proactive stance about its security capabilities. However, in this case, I wonder if a Googler ever noticed that Mr. Ding used PowerPoint, not the Google-approved presentation program. No true Googler would use PowerPoint, an archaic, third-party program Microsoft bought eons ago and has managed to pump full of steroids for decades.

Yep, the tell — Googlers who use Microsoft products. Sundar & Prabhakar will probably integrate a short bit into their act in the near future.

Stephen E Arnold, February 20, 2025

A Failure Retrospective

February 3, 2025

Every year has tech failures, and some of them join the zeitgeist as cultural phenomena, like Windows Vista, Windows Me, Apple’s Pippin game console, chatbots, etc. PC Mag runs down the flops in “Yikes: Breaking Down the 10 Biggest Tech Fails of 2024.” The list starts with Intel’s horrible year, with its booted CEO and poor chip performance. It follows up with the Salt Typhoon hack, which proved (not that TikTok hadn’t already taught us this) that China is spying on every US citizen, with a focus on bigwigs.

National Public Data lost 272 million Social Security numbers to a hacker. That was a great summer day for the hacker, but the summer travel season became a nightmare when a faulty CrowdStrike kernel update grounded more than 2,700 flights and practically locked down US borders. Microsoft’s Recall, an AI search tool that took snapshots of user activity so they could be recalled later, was also a concern. What if passwords and other sensitive information were recorded?

The fabulous Internet Archive was hacked and taken down by a bad actor protesting the Israel-Gaza conflict. That makes us worry about preserving Internet and other important media history. Rabbit and Humane released AI-powered hardware that was supposed to offer a hands-free way to use a digital assistant, but both devices failed. JuiceBox ended software support for its EV chargers, while OpenAI gave its Voice Mode feature a voice that sounded remarkably like Scarlett Johansson’s. She retained lawyers, and OpenAI pulled the voice.

The worst of the worst is this:

“Days after he announced plans to acquire Twitter in 2022, Elon Musk argued that the platform needed to be “politically neutral” in order for it to “deserve public trust.” This approach, he said, “effectively means upsetting the far right and the far left equally.” In March 2024, he also pledged to not donate to either US presidential candidate, but by July, he’d changed his tune dramatically, swapping neutrality for MAGA hats. “If we want to preserve freedom and a meritocracy in America, then Trump must win,” Musk tweeted in September. He seized the @America X handle to promote Trump, donated millions to his campaign, shared doctored and misleading clips of VP Kamala Harris, and is now working closely with the president-elect on an effort to cut government spending, which is most certainly a conflict of interest given his government contracts. Some have even suggested that he become Speaker of the House since you don’t have to be a member of Congress to hold that position. The shift sent many X users to alternatives like BlueSky, Threads, and Mastodon in the days after the US election.”

It doesn’t matter what Musk’s political beliefs are. He has no right to participate in politics.

Whitney Grace, February 3, 2025

National Security: A Last Minute Job?

January 20, 2025

On its way out the door, the Biden administration has enacted a prudent policy. Whether it will persist long under the new administration is anyone’s guess. The White House Briefing Room released a “Fact Sheet: Ensuring U.S. Security and Economic Strength in the Age of Artificial Intelligence.” The rule it describes provides six key mechanisms governing the diffusion of U.S. technology. The statement specifies:

“In the wrong hands, powerful AI systems have the potential to exacerbate significant national security risks, including by enabling the development of weapons of mass destruction, supporting powerful offensive cyber operations, and aiding human rights abuses, such as mass surveillance. Today, countries of concern actively employ AI – including U.S.-made AI – in this way, and seek to undermine U.S. AI leadership. To enhance U.S. national security and economic strength, it is essential that we do not offshore this critical technology and that the world’s AI runs on American rails. It is important to work with AI companies and foreign governments to put in place critical security and trust standards as they build out their AI ecosystems. To strengthen U.S. security and economic strength, the Biden-Harris Administration today is releasing an Interim Final Rule on Artificial Intelligence Diffusion. It streamlines licensing hurdles for both large and small chip orders, bolsters U.S. AI leadership, and provides clarity to allied and partner nations about how they can benefit from AI. It builds on previous chip controls by thwarting smuggling, closing other loopholes, and raising AI security standards.”

The six mechanisms specify 18 key allies to which no restrictions apply and create a couple of trusted statuses other entities can attain. They also support cooperation between governments on export controls, clean energy, and technology security. As for “countries of concern,” the rule seeks to ensure certain advanced technologies do not make it into their hands. See the briefing for more details.

The measures add to previous security provisions, including the October 2022 and October 2023 chip controls. We are assured they were informed by conversations with stakeholders, bipartisan members of Congress, industry representatives, and foreign allies over the previous 10 months. Sounds like it was a lot of work. Let us hope it does not soon become wasted effort.

Cynthia Murrell, January 20, 2025

Racers, Start Your Work Around Engines

January 16, 2025

Prepared by a still-alive dinobaby.

Companies are now prohibited from sending our personal information to specific, hostile nations. Because tech firms must be forced to exercise common sense, apparently. TechRadar reports, "US Government Says Companies Are No Longer Allowed to Send Bulk Data to these Nations." The restriction is the final step in implementing Executive Order 14117, which President Biden signed nearly a year ago. It is to take effect at the beginning of April.

The rule names six countries the DoJ says have “engaged in a long-term pattern or serious instances of conduct significantly adverse to the national security of the United States or the security and safety of U.S. persons”: China, Cuba, Iran, North Korea, Russia, and Venezuela. Writer Benedict Collins tells us:

"The Executive Order is aimed at preventing countries generally hostile to the US from using the data of US citizens in cyber espionage and influence campaigns, as well as building profiles of US citizens to be used in social engineering, phishing, blackmail, and identity theft campaigns. The final rule sets out the threshold for transactions of data that carry an unacceptable level of risk, alongside the different classes of transactions that are prohibited, restricted or exempt. Companies that violate the order will face civil and criminal penalties."

The restriction covers geolocation data; personal identifiers like social security numbers; biometric identifiers; personal health data; personal financial information; and data on our very cells. The agency clarifies some activities that are not prohibited:

"The DoJ also outlined the final rule does not apply to ‘medical, health, or science research or the development and marketing of new drugs’ and ‘also does not broadly prohibit U.S. persons from engaging in commercial transactions, including exchanging financial and other data as part of the sale of commercial goods and services with countries of concern or covered persons, or impose measures aimed at a broader decoupling of the substantial consumer, economic, scientific, and trade relationships that the United States has with other countries.’"

So, outside those exceptions, the idea is that US firms will not be sending our personal data to these hostile countries. That is the theory. However, organizations gather data from mobile phone apps, from exfiltrated mobile phone records, from “gray” data aggregators. How does one find entities providing conduits for information outflows? A bit of sleuthing on Telegram or searches on Dark Web search engines provide a number of contact points. Are the data reliable, accurate, and timely? Bad data are plentiful, but by acquiring or assembling information, bad actors send out their messages. Volume and human nature work.

Cynthia Murrell, January 16, 2025

Be Secure Like a Journalist

January 9, 2025

This is an official dinobaby post.

If you want to be secure like a journalist, Freedom.press has a how-to for you. The write up “The 2025 Journalist’s Digital Security Checklist” provides text combined with a sort of interactive design. For example, if you want to know more about an item on a checklist, just click the plus sign and the recommendations appear.

There are several sections in the document. Each addresses a specific security vector or issue. These are:

- Assess your risk

- Set up your mobile to be “secure”

- Protect your mobile from unwanted access

- Secure your communication channels

- Guard your documents from harm

- Manage your online profile

- Protect your research whilst browsing

- Avoid getting hacked

- Set up secure tip lines

Most of the suggestions are useful. However, I would strongly recommend that any mobile phone user download this presentation from the December 2024 Chaos Computer Club meeting held after Christmas. There are some other suggestions which may be of interest to journalists, but these regard specific software such as Google’s Chrome browser, Apple’s wonderful iCloud, and Microsoft’s oh-so-secure operating system.

The best way for a journalist to be secure is to be a “ghost.” That implies some type of zero profile identity, burner phones, and other specific operational security methods. These, however, are likely to land a “real” journalist in hot water with either an employer or an outfit like a professional organization. A clever journalist would gain access to sock puppet control software in order to manage a number of false personas at one time. Plus, there are old chestnuts like certain Dark Web services. Are these types of procedures truly secure?

In my experience, the only secure computing device is one that is unplugged in a locked room. The only secure information is that which one knows and has not written down or shared with anyone. Every time I meet a journalist unaware of specialized tools and services for law enforcement or intelligence professionals, I know I can make that person squirm if I describe one of the hundreds of services about which journalists know nothing.

For starters, watch the CCC video. Another tip: Choose carefully the country in which you publish certain information under your name. Very carefully.

Stephen E Arnold, January 9, 2025

Insider Threats: More Than Threat Reports and Cumbersome Cyber Systems Are Needed

November 13, 2024

Sorry to disappoint you, but this blog post is written by a dumb humanoid. The art? We used MidJourney.

With actionable knowledge becoming increasingly concentrated, is it a surprise that bad actors go where the information is? One would think that organizations with high-value information would be more vigilant when it comes to hiring people from other countries, using faceless gig worker systems, or relying on an AI-infused résumé on LinkedIn. (Yep, that is a Microsoft entity.)

The fact is that big technology outfits are supremely confident in their ability to do no wrong. Billions in revenue will boost one’s confidence in a firm’s management acumen. The UK newspaper Telegraph published “Why Chinese Spies Are Sending a Chill Through Silicon Valley.”

The write up says:

In recent years the US government has charged individuals with stealing technology from companies including Tesla, Apple and IBM and seeking to transfer it to China, often successfully. Last year, the intelligence chiefs of the “Five Eyes” nations clubbed together at Stanford University – the cradle of Silicon Valley innovation – to warn technology companies that they are increasingly under threat.

Did the technology outfits get the message?

The Telegraph article adds:

Beijing’s mission to acquire cutting edge tech has been given greater urgency by strict US export controls, which have cut off China’s supply of advanced microchips and artificial intelligence systems. Ding, the former Google employee, is accused of stealing blueprints for the company’s AI chips. This has raised suspicions that the technology is being obtained illegally. US officials recently launched an investigation into how advanced chips had made it into a phone manufactured by China’s Huawei, amid concerns it is illegally bypassing a volley of American sanctions. Huawei has denied the claims.

Because some non-US engineers and professionals have skills needed by high-flying outfits, both those already aloft and those working in their hangars to launch a breakthrough product or service, US companies put them through human resources and interview processes. However, many hires are made because a body is needed, someone knows the candidate, or the applicant is willing to work for less money than an equivalent person with a security clearance, for instance.

The result is that most knowledge centric organizations have zero idea about the security of their information. Remember Edward Snowden? He was visible. Others are not.

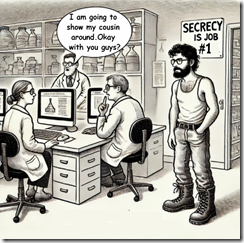

Let me share an anecdote without mentioning names or specific countries and companies.

A business colleague hailed from an Asian country. He maintained close ties with his family in his country of origin. He had a couple of cousins who worked in the US. I was at his company, which provided computer equipment to the firm at which I was working in Silicon Valley. He explained to me that a certain “new” technology was going to be released later in the year. He gave me an overview of this “secret” project. I asked him where the data originated. He looked at me and said, “My cousin. I even got a demo and saw the prototype.”

I want to point out that this was not a hire. The information flowed along family lines. Sharing the information was okay because of the closeness of the family. I later learned the information was secret. I realized that an HR interview process is not going to keep secrets within an organization.

I ask companies whose cyber security software has an insider threat identification capability, “How do you deal with family or high-school relationship information channels?”

The answer? Blank looks.

Most of the whiz bang HR methods and most of the cyber security systems don’t work. Cultural blind spots are a problem. Maybe smart software will prevent knowledge leakage. I think that some hard thinking needs to be applied to this problem. The Telegraph write up does not tackle the job. I would assert that most organizations have fooled themselves. Billions and arrogance have interesting consequences.

Stephen E Arnold, November 13, 2024

Secure Phones Keep Appearing

October 31, 2024

The KDE community has developed an open source interface for mobile devices called Plasma Mobile. It allegedly turns any phone into a virtual fortress, promising a “privacy-respecting, open source and secure phone ecosystem.” This project is based on the original Plasma for desktops, an environment focused on security and flexibility. As with many open-source projects, Plasma Mobile is an imperfect work in progress. We learn:

“A pragmatic approach is taken that is inclusive to software regardless of toolkit, giving users the power to choose whichever software they want to use on their device. … Plasma Mobile is packaged in multiple distribution repositories, and so it can be installed on regular x86 based devices for testing. Have an old Android device? postmarketOS, is a project aiming to bring Linux to phones and offers Plasma Mobile as an available interface for the devices it supports. You can see the list of supported devices here, but on any device outside the main and community categories your mileage may vary. Some supported devices include the OnePlus 6, Pixel 3a and PinePhone. The interface is using KWin over Wayland and is now mostly stable, albeit a little rough around the edges in some areas. A subset of the normal KDE Plasma features are available, including widgets and activities, both of which are integrated into the Plasma Mobile UI. This makes it possible to use and develop for Plasma Mobile on your desktop/laptop. We aim to provide an experience (with both the shell and apps) that can provide a basic smartphone experience. This has mostly been accomplished, but we continue to work on improving shell stability and telephony support. You can find a list of mobile friendly KDE applications here. Of course, any Linux-based applications can also be used in Plasma Mobile.”

KDE states its software is “for everyone, from kids to grandparents and from professionals to hobbyists.” However, it is clear that being an IT professional would certainly help. Is Plasma Mobile as secure as they claim? Time will tell.

Cynthia Murrell, October 31, 2024