
![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-30.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


For some reason, I remember my freshman year in high school. My family had returned from Brazil, and the US secondary school adventure was new. The first class of the day in 1958 parked me in front of Miss Dalton, the English teacher. She explained that we had to use the library to write a short essay about a foreign country. Brazil did not interest me, so with the wisdom of a freshman in high school, I chose Japan. My topic? Seppuku or hara-kiri. Yep, belly cutting.

The idea that caught my teen attention was that a warrior who was shamed, a loser in battle, or a fighter wanting to make a point would stab himself with his sword. (Females slit their throats.) The point of the exercise was to make clear that this warrior had moxie, commitment, and maybe a bit of psychological baggage. An inner wiring (maybe guilt) would motivate one to kill oneself in a hard-to-ignore way. Wild stuff for a 13 year old.

A digital samurai preparing to commit hara-kiri with a brand new really sharp MacBook Air M2. Believe it or not, MidJourney objected to an instruction to depict a Japanese warrior committing seppuku with a laptop. Change the instruction and the system happily depicts a digital warrior initiating the Reddit- and Twitter-type processes. Thanks, MidJourney for the censorship.

I have watched a highly regarded innovator chop away at his innards with the management “enhancements” implemented at Twitter. I am not a Twitter user, but I think that an unarticulated motive is causing the service to be “improved.” “Twitter: Five Ways Elon Musk Has Changed the Platform for Users” summarizes a few of the most newsworthy modifications. For example, the rocket and EV wizard quickly reduced staff, modified access to third-party applications, and fooled around with check marks. The impact has been intriguing. Billions in value have been disappeared. Some who rose to fame by using Tweets as a personal promotional engine have amped up their efforts to be short text influencers. The overall effect has been to reduce scrutiny because the impactful wounds are messy. Once concerned regulators apparently have shifted their focus. Messy stuff.

Now a social media service called Reddit is taking some cues from the Musk Manual of Management. The gold standard in management excellence (that would be CNN, of course) documented some of Reddit’s actions in “Reddit’s Fight with Its Most Powerful Users Enters New Phase As Blackout Continues.” The esteemed news service stated:

The company also appears to be laying the groundwork for ejecting forum moderators committed to continuing the protests, a move that could force open some communities that currently remain closed to the public. In response, some moderators have vowed to put pressure on Reddit’s advertisers and investors.

Without users and moderators will Reddit thrive? If the information in a recent Wired article is correct, the answer is, “Maybe not.” (See “The Reddit Blackout Is Breaking Reddit.”)

Why are two large US social media companies taking steps that will either impair their ability to perform technically and financially or, worse, chop away at themselves?

My thought is that the management of both firms knows that regulators and stakeholders are closing in. Both companies want people who die for the firm. The companies are at war with ideas, their users, and their supporters. But what’s the motivation?

Let’s do a thought experiment. Imagine that the senior managers at both companies know that they have lost the battle for the hearts and minds of regulators, some users, third-party developers, and those who would spend money to advertise. But like a person promoted to a senior position, the pressure of the promotion causes the individuals to doubt themselves. These people are the Peter Principle personified. Unconsciously they want to avoid their inner demons and possibly some financial stakeholders.

The senior managers are taking what they perceive as a strong way out: digital hara-kiri. Of course, it is messy. But the pain, the ugliness, and the courage are notable. Those who are not caught in the sticky Web of social media ignore the horrors. Silicon Valley “real” news professionals, many users dependent on the two platforms, and those who have surfed on the firms’ content have to watch.

Are the managers honorable? Do some respect their tough decisions? Are the senior managers’ inner demons and their sense of shame assuaged? I don’t know. But the action is messy on the path of honor via self-disembowelment.

For a different angle on what’s happened at Facebook and Google, take a look at “The Rot Economy.”

Stephen E Arnold, June 19, 2023

Written by Stephen E. Arnold · Filed Under Management, News, Social Media | Comments Off on Digital Belly Cutting: Reddit and Twitter on the Path of Silicon Honor?

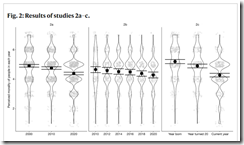

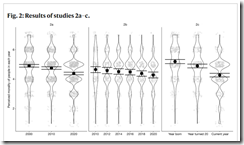

Here’s a graph from the academic paper “The Illusion of Moral Decline.”

Is it even necessary to read the complete paper after studying the illustration? Of course not. Nevertheless, let’s look at a couple of statements in the write up to get ready for that in-class, blank bluebook semester examination, shall we?

Statement 1 from the write up:

… objective indicators of immorality have decreased significantly over the last few centuries.

Well, there you go. That’s clear. Imagine what life was like before modern day morality kicked in.

Statement 2 from the write up:

… we suggest that one of them has to do with the fact that when two well-established psychological phenomena work in tandem, they can produce an illusion of moral decline.

Okay. Illusion. This morning I drove past people sleeping under an overpass. A police vehicle with lights and siren blaring raced past me as I drove to the gym (a gym which is no longer open 24×7 due to safety concerns). I listened to a report about people struggling amidst the flood water in Ukraine. In short, a typical morning in rural Kentucky. Oh, I forgot to mention the gunfire I could hear as I walked my dog at a local park. I hope it was squirrel hunters, but in this area who knows?

MidJourney created this illustration of the paper’s authors celebrating the publication of their study about the illusion of immorality. The behavior is a manifestation of morality itself, and it is a testament to the importance of crystal clear graphs.

Statement 3 from the write up:

Participants in the foregoing studies believed that morality has declined, and they believed this in every decade and in every nation we studied….About all these things, they were almost certainly mistaken.

My take on the study includes these perceptions (yours hopefully will be more informed than mine):

- The influence of social media gets slight attention

- Large-scale immoral actions get little attention. I am tempted to list examples, but I am afraid of legal eagles and aggrieved academics with time on their hands.

- The impact of intentionally weaponized information on behavior in the US and other nation states which provide an infrastructure suitable to permit wide use of digitally-enabled content receives scant attention.

In order to avoid problems, I will list some common and proper nouns or phrases and invite you to think about these in terms of the glory word “morality”. Have fun with your mental gymnastics:

- Catholic priests and children

- Covid information and pharmaceutical companies

- Epstein, Andrew, and MIT

- Special operation and elementary school children

- Sudan and minerals

- US politicians’ campaign promises.

Wasn’t that fun? I did not have to mention social media, self harm, people between the ages of 10 and 16, and statements like “Senator, thank you for that question…”

I would not do well with a written test watched by attentive journal authors. By the way, isn’t perception reality?

Stephen E Arnold, June 12, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-7.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


Perhaps to counter recent aspersions on its character, TikTok seems eager to transfer prestige from one of its popular forums to itself. Mashable reports, “TikTok Is Launching its Own Book Awards.” The BookTok community has grown so influential it apparently boosts book sales and inspires TV and movie producers. Writer Meera Navlakha reports:

“TikTok knows the power of this community, and is expanding on it. First, a TikTok Book Club was launched on the platform in July 2022; a partnership with Penguin Random House followed in September. Now, the app is officially launching the TikTok Book Awards: a first-of-its-kind celebration of the BookTok community, specifically in the UK and Ireland. The 2023 TikTok Book Awards will honour favourite authors, books, and creators across nine categories. These range from ‘Creator of the Year’ to ‘Best BookTok Revival’ to ‘Best Book I Wish I Could Read Again For The First Time’. Those within the BookTok ecosystem, including creators and fans, will help curate the nominees, using the hashtag #TikTokBookAwards. The long-list will then be judged by experts, including author Candice Brathwaite, creators Coco and Ben, and Trâm-Anh Doan, the head of social media at Bloomsbury Publishing. Finally, the TikTok community within the UK and Ireland will vote on the short-list in July, through an in-app hub.”

What an efficient plan. This single, geographically limited initiative may not be enough to outweigh concerns about TikTok’s security. But if the platform can appropriate more of its communities’ deliberations, perhaps it can gain the prestige of a digital newspaper of record. All with nearly no effort on its part.

Cynthia Murrell, June 7, 2023

Written by Stephen E. Arnold · Filed Under News, Social Media, Video | Comments Off on Old School Book Reviewers, BookTok Is Eating Your Lunch Now

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb-2.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


A colleague called my attention to the Fortune Magazine article boldly titled “Gen Z Teens Are So Unruly in Malls, Fed by Their TikTok Addition, That a Growing Number Are requiring Chaperones and Supervision.” A few items I noted in this headline:

- Malls. I thought those were dead horses. There is a YouTube channel devoted to these real estate gems; for example, Urbex Offlimits and a creator named Brandon Moretti’s videos.

- Gen Z. I just looked up how old Gen Zs are. According to Mental Floss, these denizens of empty spaces are 11 to 26 years old. Hmmm. For what purpose are 21 to 25 year olds hanging out in empty malls? (Could that be a story for Fortune?)

- The “TikTok addition” gaffe. My spelling checker helps me out too. But I learned from a super-duper former Fortune writer whom I shall label Peter V, “Fortune is meticulous about its thorough research, its fact checking, and its proofreading.” Well, super-duper Peter, not in 2023. Please, explain in 25 words or less this image from the write up:

I did notice several factoids and comments in the write up; to wit:

Interesting item one:

“On Friday and Saturdays, it’s just been a madhouse,” she said on a recent Friday night while shopping for Mother’s Day gifts with Jorden and her 4-month-old daughter.

A madhouse, according to the Cambridge dictionary, is “a place of great disorder and confusion.” I think of malls as places of no people. But Fortune does the great fact checking, according to the attestation of Peter V.

Interesting item two:

Even a Chick-fil-A franchise in southeast Pennsylvania caused a stir with its social media post earlier this year that announced its policy of banning kids under 16 without an adult chaperone, citing unruly behavior.

I thought Chick-fil-A was a saintly, reserved institution with restaurants emulating Medieval monasteries. No longer. No wonder so many cars line up for a chickwich.

Interesting item three:

Cohen [a mall expert] said the restrictions will help boost spending among adults who must now accompany kids but they will also likely reduce the number of trips by teens, so the overall financial impact is unclear.

What these snippets tell me is that there is precious little factual data in the write up. The “TikTok addiction” that leads the headline is not the guts of the write up. Maybe the idea is that kids who can’t go to the mall will play online games? I think it is more likely that kids and those lost little 21 to 25 year olds will find other interesting things to do with their time.

But malls? Kids can prowl Snapchat and TikTok, but those 21 to 25 year olds? Drink or other chemical activities?

Hey, Fortune, let’s get addicted to the Peter V. baloney: “Fortune is meticulous about its thorough research, its fact checking, and its proofreading.”

Stephen E Arnold, June 2, 2023

Written by Stephen E. Arnold · Filed Under News, Publishing, Social Media | Comments Off on The TikTok Addition: Has a Fortune Magazine Editor Been Up Swiping?

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


The TikTok service is one that has kicked the Google in its sensitive bits. The “algorithm” befuddles both European and US “me too” innovators. The appeal of short, crunchy videos has caused restaurant chefs to craft food for TikTok influencers who record meals. Chefs!

What other magical powers can a service like TikTok have? That’s a good question, and it is one that the Chinese academics have answered. Navigate to “Weak Ties Strengthen Anger Contagion in Social Media.” The main idea of the research is to validate a simple assertion: Can social media (think TikTok, for example) take a flame thrower to social ties? The answer is, “Sure can.” Will a social structure catch fire and collapse? “Sure can.”

A frail structure is set on fire by a stream of social media consumed by a teen working in his parents’ garden shed. MidJourney would not accept the query “a person using a laptop setting his friends’ homes on fire.” Thanks, Aunt MidJourney.

The write up states:

Increasing evidence suggests that, similar to face-to-face communications, human emotions also spread in online social media.

Okay, a post or TikTok video sparks emotion.

So what?

…we find that anger travels easily along weaker ties than joy, meaning that it can infiltrate different communities and break free of local traps because strangers share such content more often. Through a simple diffusion model, we reveal that weaker ties speed up anger by applying both propagation velocity and coverage metrics.

The authors note:

…we offer solid evidence that anger spreads faster and wider than joy in social media because it disseminates preferentially through weak ties. Our findings shed light on both personal anger management and in understanding collective behavior.

I wonder if any psychological operations professionals in China or another country with a desire to reduce the efficacy of the American democratic “experiment” will find the research interesting?

Stephen E Arnold, May 25, 2023

Written by Stephen E. Arnold · Filed Under cybercrime, News, Social Media | Comments Off on Need a Guide to Destroying Social Cohesion: Chinese Academics Have One for You

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I spotted Twitch’s AI-fueled ask_Jesus. You can take a look at this link. The idea is that smart software responds in a way a cherished figure would. If you watch the questions posed by registered Twitchers, you can wait a moment and the AI Jesus will answer the question. Rather than paraphrase or quote the smart software, I suggest you navigate to this Bezos bulldozer property and check out the “service.”

I mention the Amazon offering because I noted another smart religion robot write up called “India’s Religious AI Chatbots Are Speaking in the Voice of God and Condoning Violence.” The article touches upon several themes which I include in my 2023 lecture series about the shadow Web and misinformation from bad actors and wonky smart software.

This Rest of World article reports:

In January 2023, when ChatGPT was setting new growth records, Bengaluru-based software engineer Sukuru Sai Vineet launched GitaGPT. The chatbot, powered by GPT-3 technology, provides answers based on the Bhagavad Gita, a 700-verse Hindu scripture. GitaGPT mimics the Hindu god Krishna’s tone — the search box reads, “What troubles you, my child?”

The trope is for the “user” to input a question and the smart software outputs a response. But there is not just Sukuru’s version. There are allegedly five GitaGPTs available “with more on the way.”

The article includes a factoid in a quote allegedly from a human AI researcher; to wit:

Religion is the single largest business in India.

I did not know this. I thought it was outsourced work product. Live and learn.

Is there some risk with religious chatbots? The write up states:

Religious chatbots have the potential to be helpful, by demystifying books like the Bhagavad Gita and making religious texts more accessible, Bindra said. But they could also be used by a few to further their own political or social interests, he noted. And, as with all AI, these chatbots already display certain political biases. [The Bindra is Jaspreet Bindra, AI researcher and author of The Tech Whisperer]

I don’t want to speculate what the impact of a religious chatbot might be if the outputs were tweaked for political or monetary purposes.

I will leave that to you.

Stephen E Arnold, May 19, 2023

Written by Stephen E. Arnold · Filed Under AI, News, Social Media | Comments Off on ChatBots: For the Faithful Factually?

I read “American Psychology Group Issues Recommendations for Kids’ Social Media Use”. The article reports that social media is possibly, just maybe, perhaps, sort of an issue for some, a few, a handful, a teenie tiny percentage of young people. I am not sure when “social media” began. Maybe it was something called Six Degrees or Live Journal. I definitely recall the wonky weirdness of flashing MySpace pages. I do know about Orkut which if one cares to check was a big hit among a certain segment of Brazilians. The exact year is irrelevant; social media has been kicking around for about a quarter century.

Now, I learn:

The report doesn’t denounce social media, instead asserting that online social networks are “not inherently beneficial or harmful to young people,” but should be used thoughtfully. The health advisory also does not address specific social platforms, instead tackling a broad set of concerns around kids’ online lives with commonsense advice and insights compiled from broader research.

What are the data about teen suicides? What about teen depression? What about falling test scores? What about trend oddities among impressionable young people? Those data are available and easy to spot. In June 2023, another Federal agency will provide information about yet another clever way to exploit young people on social media.

Now the APA is taking a stand? Well, not really a stand, more of a general statement about what I think is one of the most destructive online application spaces available to young and old today.

How about this statement?

The APA recommends a reasonable, age-appropriate degree of “adult monitoring” through parental controls at the device and app level and urges parents to model their own healthy relationships with social media.

How many young people grow up with one parent and minimal adult monitoring? Yeah, how many? Do parents or a parent know what to monitor? Does a parent know about social media apps? Does a parent know the names of social media apps?

Impressive, APA. Now I remember why I thought Psych 101 was a total, absolute waste of my time when I was a 17 year old fresh person at a third rate college for losers like me. My classmates, also losers, struggled to suppress laughter during the professor’s lectures. Now I am giggling at this APA position.

Sorry. Your paper and recommendations are late. You get an F.

Stephen E Arnold, May 10, 2023

Written by Stephen E. Arnold · Filed Under News, Online (general), Social Media | Comments Off on The APA Zips Along Like … Like a Turtle, a Really Snappy Turtle Too

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


Even as he declares “Social Media Is Doomed to Die,” Verge reporter and Snapchat veteran Ellis Hamburger seems to maintain a sliver of hope for the original social-media vision: to facilitate user-driven human connection. This shiny shard may be all that remains of the faith that led him to work at Snapchat. Hamburger writes:

“From its earliest days, Snap wanted to be a healthier, more ethical social media platform. A place where popularity wasn’t always king and where monetization would be through creative tools that supported users — not ads that burdened them.”

Alas, those good intentions paved a road leading the same direction as the competition’s. Apparently, the pull of ad revenue becomes too strong even for companies with the best of intentions. Especially when users are uninterested in ever footing the bill themselves. The article continues:

“When a company submits to digital advertising, there’s no avoiding the tradeoffs that come with it. And users get put in the back seat. … More ads appear in your feed, forever. ‘It won’t happen to us,’ we said, and then it did. Today, the product evolution of social media apps has led to a point where I’m not sure you can even call them social anymore — at least not in the way we always knew it. They each seem to have spontaneously discovered that short form videos from strangers are simply more compelling than the posts and messages from friends that made up traditional social media. Call it the carcinization of social media, an inevitable outcome for feeds built only around engagement and popularity. So one day — it’s hard to say exactly when — a switch was flipped. Away from news, away from followers, away from real friends — toward the final answer to earning more time from users: highly addictive short form videos that magically appear to numb a chaotic, crowded brain.”

The piece asserts users also have themselves to blame, both by seeking greater and greater social validation and by embracing new, ad-boosting features that offer an endorphin boost. They could also express more willingness to pay for social media’s services, we suppose, and Hamburger imagines a scenario where they would do so. However, that would mean completely tearing down and rebuilding the social media landscape since existing platforms rest on the lucrative users-as-products model. Is it possible? There may be a shred of hope.

Cynthia Murrell, May 3, 2023

Written by Stephen E. Arnold · Filed Under News, Social Media | Comments Off on Social Media: Phoenix or Dead Duck?

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


As a dinobaby, my grade school education included some biased, incorrect, yet colorful information about the chivalric ideal. The basic idea was that knights were governed by the chivalric social codes. And what are these, pray tell, squire? As I recall from Miss Soapes, my seventh grade teacher, the guts included honor, honesty, valor, and loyalty. Scraping away the glittering generalities from the disease-riddled, classist, and violent Middle Ages, the story went like this: The knights followed the precepts of the much-beloved Church, opened doors for ladies, and embodied the characters of Sir Gawain, Lancelot, and King Arthur. Add a heaping dose of Hector of Troy, Alexander the Great (who by the way figured out pretty quickly that what is today Afghanistan would be tough to conquer), and baloney gathered by Ramon Llull, and that was the way to succeed.

Flash forward to 2023, and it appears that the chivalric ideals are back in vogue. “Google, Meta, Other Social Media Platforms Propose Alliance to Combat Misinformation” explains that social media companies have written a five page “proposal.” The recipient is the Indian Ministry of Electronics and IT. (India is a juicy market for social media outfits not owned by Chinese interests… in theory.)

The article explains that a proposed alliance of outfits like Meta and Google:

will act as a “certification body” that will verify who a “trusted” fact-checker is.

Obviously these social media companies will embrace the chivalric ideals to slay the evils of weaponized, inaccurate, false, and impure information. These companies mount their bejeweled hobby horses and gallop across the digital landscape. The actions evidence honor, loyalty, justice, generosity, prowess, and good manners. Thrilling. Cinematic in scope.

The article says:

Social media platforms already rely on a number of fact checkers. For instance, Meta works with fact-checkers certified by the International Fact-Checking Network (IFCN), which was established in 2015 at the US-based Poynter Institute. Members of IFCN review and rate the accuracy of stories through original reporting, which may include interviewing primary sources, consulting public data and conducting analyses of media, including photos and video. Even though a number of Indian outlets are part of the IFCN network, the government, it is learnt, does not want a network based elsewhere in the world to act on content emanating in the country. It instead wants to build a homegrown network of fact-checkers.

Will these white knights defeat the blackguards who would distort information? But what if the companies slaying the inaccurate factoids are implementing a hidden agenda? What if the companies are themselves manipulating information to gain an unfair advantage over any entity not part of the alliance?

Impossible. These are outfits which uphold the chivalric ideals. Truth, honor, etc., etc.

The historical reality is that chivalry was cooked up by nervous “rulers” in order to control the knights. Remember the phrase “knight errant”?

My hunch is that the alliance may manifest some of the less desirable characteristics of the knights of old; namely, weapons, big horses, and a desire to do what was necessary to win.

Knights, mount your steeds. To battle in a far off land redolent with exotic spices and revenue opportunities. Toot toot.

Stephen E Arnold, April 2023

Note: This essay is the work of a real, still-living dinobaby. I am too dumb to use smart software.

I read the “testimony” posted by someone at the House of Representatives. No, the document did not include, “Congressman, thank you for the question. I don’t have the information at hand. I will send it to your office.” As a result, the explanation reflects hand crafting by numerous anonymous wordsmiths. Singapore. Children. Everything is Supercalifragilisticexpialidocious. The quip “NSA to go” is shorter and easier to say.

Therefore, I want to turn my attention to the newspaper in the form of a magazine. The Economist published “How TikTok Broke Social Media.” Great Economist stuff! When I worked at a blue chip consulting outfit in the 1970s, one had to have read the publication. I looked at help wanted ads and the tech section, usually a page or two. The rest of the content was MBA speak, and I was up to my ears in that blather from the numerous meetings through which I suffered.

With modest enthusiasm I worked my way through the analysis of social media. I circled several paragraphs. I noticed one big thing: the phrase “broke social media.” Social media was, in my opinion, immune to breaking. The reason is that online services are what I call “ghost like.” Sure, there is one service, which may go away. Within a short span of time, like eight year olds playing amoeba soccer, another gains traction and picks up users and evolves sticky services. Killing social media is like shooting ping pong balls into a Tesla sized blob of Jell-O, an early form of the morphing Terminator robot. In short, the Jell-O keeps on quivering, sometimes for a long, long time, judging from my mother’s ability to make one Jell-O dessert and keep serving it for weeks. Then there was another one. Thus, the premise of the write up is wrong.

I do want to highlight one statement in the essay:

The social apps will not be the only losers in this new, trickier ad environment. “All advertising is about what the next-best alternative is,” says Brian Wieser of Madison and Wall, an advertising consultancy. Most advertisers allocate a budget to spend on ads on a particular platform, he says, and “the budget is the budget”, regardless of how far it goes. If social-media advertising becomes less effective across the board, it will be bad news not just for the platforms that sell those ads, but for the advertisers that buy them.

My view is shaped by more than 50 years in the online information business. New forms of messaging and monetization are enabled by technology. One example is a thought experiment: What will an advertiser pay to influence the output of a content generator infused with smart software? I have first hand information that one company is selling AI-generated content specifically to influence what appears when a product is reviewed. The technique involves automation, a carousel of fake personas (sockpuppets to some), and carefully shaped inputs to the content generation system. Now is this advertising like a short video? Sure, because the output can be in the form of images or a short machine-generated video using machine generated “real” people. Is this type of “advertising” going to morph and find its way into the next Discord or Telegram public user group?

My hunch is that this type of conscious manipulation and automation is what can be conceptualized as “spawn of the Google.”

Net net: Social media is not “broken.” Advertising will find a way… because money. Heinous psychological manipulation. Exploited by big companies. Absolutely.

Stephen E Arnold, March 22, 2023
