News Flash: Young Workers Are Not Happy. Who Knew?

August 12, 2025

No AI. Just a dinobaby being a dinobaby.


My newsfeed service pointed me to an academic paper in mid-July 2025. I am just catching up, and I thought I would document this write up from big thinkers at Dartmouth College and University College London: “Rising Young Worker Despair in the United States.”

The write up is unlikely to become a must-read for recent college graduates or youthful people vaporized from their employers’ payrolls. The main point is that the work processes of hiring and plugging away are driving people crazy.

The authors point out this revelation:

In this paper we have confirmed that the mental health of the young in the United States has worsened rapidly over the last decade, as reported in multiple datasets. The deterioration in mental health is particularly acute among young women…. … the relative prices of housing and childcare have risen. Student debt is high and expensive. The health of young adults has also deteriorated, as seen in increases in social isolation and obesity. Suicide rates of the young are rising. Moreover, Jean Twenge provides evidence that the work ethic itself among the young has plummeted. Some have even suggested the young are unhappy having BS jobs.

Several points jumped out at me from the 38-page paper:

- The only reference to smart software or AI was the letters “ai” inside the word “despair.” This word appears 78 times in the document.

- Social media gets a few nods with eight references in the main paper and again in the endnotes. Isn’t social media a significant factor? My question is, “What’s the connection between social media and the mental states of the sample?”

- YouTube is chock full of first person accounts of job despair. A good example is Dari Step’s video “This Job Hunt Is Breaking Me and Even California Can’t Fix It Though It Tries.” One can feel the inner turmoil of this person. The video runs 23 minutes and you can find it (as of August 4, 2025) at this link: https://www.youtube.com/watch?v=SxPbluOvNs8&t=187s&pp=ygUNZGVtaSBqb2IgaHVudA%3D%3D. A “study” is one thing with numbers and references to hump curves. A first-person approach adds a bit of sizzle in my opinion.
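The “despair” jab rests on a real text-search pitfall: a naive substring count finds the letters “ai” inside “despair,” while a whole-word match does not. A minimal Python sketch; the function names and the sample sentence are made up for illustration and are not quoted from the paper:

```python
import re


def naive_hits(text: str, term: str) -> int:
    """Count every occurrence of term, even when it sits inside a longer word."""
    return text.lower().count(term.lower())


def whole_word_hits(text: str, term: str) -> int:
    """Count only standalone words, using regex word-boundary anchors."""
    pattern = rf"\b{re.escape(term)}\b"
    return len(re.findall(pattern, text, flags=re.IGNORECASE))


# Hypothetical sample text, not quoted from the paper.
sample = "Rising Young Worker Despair in the United States. Despair among the young has risen."

print(naive_hits(sample, "ai"))       # each "despair" contributes one hit
print(whole_word_hits(sample, "ai"))  # no standalone "AI" anywhere
```

A keyword tally built the naive way would credit the paper with dozens of AI mentions it never makes.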

A few observations seem warranted:

- The US social system is cranking out people who are likely to be challenging for managers. I am not sure the get-tough approach based on data-centric performance methods will be productive over time.

- Whatever is happening in “education” is not preparing young people and recent graduates to support themselves with old-fashioned jobs. Maybe most of these people will become AI entrepreneurs, but I have some doubts about success rates.

- Will the National Bureau of Economic Research pick up the slack for the disarray that seems to be swirling through the Bureau of Labor Statistics as I write this on August 4, 2025?

Stephen E Arnold, August 12, 2025

Win Big at the Stock Market: AI Can Predict What Humans Will Do

July 10, 2025

No smart software to write this essay. This dinobaby is somewhat old fashioned.


AI is hot. Click bait is hotter. And the hottest is AI figuring out what humans will do “next.” Think stock picking. Think pitching a company “known” to buy what you are selling. The applications of predictive smart software make intelligence professionals gaming the moves of an adversary quiver with joy.

“New ‘Mind-Reading’ AI Predicts What Humans Will Do Next, And It’s Shockingly Accurate” explains:

Researchers have developed an AI called Centaur that accurately predicts human behavior across virtually any psychological experiment. It even outperforms the specialized computer models scientists have been using for decades. Trained on data from more than 60,000 people making over 10 million decisions, Centaur captures the underlying patterns of how we think, learn, and make choices.

Since I believe everything I read on the Internet, smart software definitely can pull off this trick.

How does this work?

Rather than building from scratch, researchers took Meta’s Llama 3.1 language model (the same type powering ChatGPT) and gave it specialized training on human behavior. They used a technique that allows them to modify only a tiny fraction of the AI’s programming while keeping most of it unchanged. The entire training process took only five days on a high-end computer processor.
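Changing “only a tiny fraction” of a model is what parameter-efficient fine-tuning does. The write up does not name the technique, so the sketch below assumes a LoRA-style low-rank adapter; that is an assumption for illustration, not a description of Centaur’s actual code. The class name, layer sizes, and rank are hypothetical, NumPy stands in for a real training framework, and no training loop is shown:

```python
import numpy as np


def lora_trainable_fraction(d_in: int, d_out: int, rank: int) -> float:
    """Adapter parameters as a share of a d_out x d_in layer's frozen parameters."""
    frozen = d_in * d_out              # pretrained weights left untouched
    trainable = rank * (d_in + d_out)  # A is rank x d_in, B is d_out x rank
    return trainable / frozen


class LoRALinear:
    """Toy linear layer: frozen weight W plus a trainable low-rank update B @ A."""

    def __init__(self, d_in: int, d_out: int, rank: int, alpha: float = 1.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W = rng.standard_normal((d_out, d_in))        # frozen pretrained weight
        self.A = rng.standard_normal((rank, d_in)) * 0.01  # trainable, small random init
        self.B = np.zeros((d_out, rank))                   # trainable, zero init
        self.scale = alpha / rank                          # common LoRA scaling convention

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x has shape (batch, d_in); output has shape (batch, d_out).
        return x @ self.W.T + self.scale * (x @ self.A.T @ self.B.T)


layer = LoRALinear(d_in=64, d_out=64, rank=4)
x = np.ones((2, 64))
# With B at zero, the adapted layer starts out identical to the frozen layer.
print(np.allclose(layer(x), x @ layer.W.T))
print(f"Trainable share of a 4096 x 4096 layer at rank 8: {lora_trainable_fraction(4096, 4096, 8):.2%}")
```

Because B starts at zero, fine-tuning begins from the unchanged pretrained layer, and only A and B would be updated; for a 4096-wide layer at rank 8, that is under 0.4 percent of the layer’s parameters.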

Hmmm. The Zuck’s smart software. Isn’t Meta in the midst of playing catch up? The company is believed to be hiring OpenAI professionals and other wizards who can convert the “also in the race” to “winner” more quickly than one can say “billions of dollars spent on virtual reality.”

The write up does not stop at predicting what a humanoid or a dinobaby will do. The write up reports:

In a surprising discovery, Centaur’s internal workings had become more aligned with human brain activity, even though it was never explicitly trained to match neural data. When researchers compared the AI’s internal states to brain scans of people performing the same tasks, they found stronger correlations than with the original, untrained model. Learning to predict human behavior apparently forced the AI to develop internal representations that mirror how our brains actually process information. The AI essentially reverse-engineered aspects of human cognition just by studying our choices. The team also demonstrated how Centaur could accelerate scientific discovery.

I am sold. Imagine. These researchers will be able to make profitable investments, know when to take an alternate path to a popular tourist attraction, and discover a drug that will cure male pattern baldness. Amazing.

My hunch is that predictive analytics hooked up to a semi-hallucinating large language model can produce outputs. Will these predict human behavior? Absolutely. Did the Centaur system predict that I would believe this? Absolutely. Was it hallucinating? Yep, poor Centaur.

Stephen E Arnold, July 10, 2025

YouTube Reveals the Popularity Winners

June 6, 2025

No AI, just a dinobaby and his itty bitty computer.


Another big technology outfit reports what is popular on its own distribution system. The trusted outfit knows that it controls the information flow for many Googlers. Google pulls the strings.

When I read “Weekly Top Podcast Shows,” I asked myself, “Are these data audited?” And, “Do these data match up to what Google actually pays the people who make these programs?”

I was not the only person asking questions about the much loved, alleged monopoly. The estimable New York Times wondered about some programs missing from the Top 100 videos (podcasts) on Google’s YouTube. Mediaite pointed out:

The rankings, based on U.S. watch time, will update every Wednesday and exclude shorts, clips and any content not tagged as a podcast by creators.
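The rules Mediaite describes amount to a filter followed by a sort. A minimal sketch, assuming a hypothetical `Upload` record with invented field names and assuming watch time is summed per show; YouTube has not published its implementation, and the shows and numbers are made up:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Upload:
    show: str              # podcast show name (hypothetical)
    us_watch_hours: float  # U.S. watch time during the ranking week
    tagged_podcast: bool   # did the creator tag this upload as a podcast?
    kind: str              # "episode", "short", or "clip" (labels invented here)


def weekly_top_shows(uploads, top_n=100):
    """Sum eligible U.S. watch time per show and return the top_n shows."""
    totals = defaultdict(float)
    for u in uploads:
        if not u.tagged_podcast or u.kind in ("short", "clip"):
            continue  # excluded under the rules Mediaite describes
        totals[u.show] += u.us_watch_hours
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:top_n]


uploads = [
    Upload("Show A", 900.0, True, "episode"),
    Upload("Show A", 5000.0, True, "short"),     # shorts are dropped
    Upload("Show B", 1200.0, True, "episode"),
    Upload("Show C", 3000.0, False, "episode"),  # untagged, so dropped
]
print(weekly_top_shows(uploads))
```

Every input that decides the order, the watch-time figures and the creator’s podcast tag, lives inside Google’s own systems, which is the crux of the auditing question.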

My reaction to the listing is that Google wants to make darned sure that it controls the information flow about what is getting views on its platform. Presumably some non-dinobaby will compare the popularity listings to other lists, possibly Apple’s misfiring list. Maybe an enthusiast will scrape the “popular” listings on the independent podcast players? Perhaps a research firm will figure out how to capture views like the now archaic logs favored decades ago by certain research firms.

Several observations:

- Google owns the platform. Google controls the data. Google controls what’s left up and what’s taken down. Google is not known for making its click data just a click away. Therefore, the listing is an example of information control and shaping.

- Advertisers, take note. Now you can purchase air time on the programs that matter.

- Creators who become dependent on YouTube for revenue are slowly being herded into the 21st century’s version of the Hollywood business model from the 1940s. A failure to conform means that the money stream could be reduced or just cut off. That will keep the sheep together in my opinion.

- As search morphs, Google is putting on its thinking cap in order to find ways to keep that revenue stream healthy and hopefully growing.

But I trust Google, don’t you? Joe Rogan does.

Stephen E Arnold, June 6, 2025

IBM AI Study: Would The Research Report Get an A in Statistics 202?

May 9, 2025

No AI, just the dinobaby expressing his opinions to Zellenials.


IBM, reinvigorated with its easy-to-use, backwards-compatible, AI-capable mainframe, released a research report about AI. Will these findings cause the new IBM AI-capable mainframe to sell like Jeopardy / Watson “I won” T shirts?

Perhaps.

The report is “Five Mindshifts to Supercharge Business Growth.” It runs a mere 40 pages and requires no more time than configuring your new LinuxONE Emperor 5 mainframe. Well, the report can be absorbed in less time, but the Emperor 5 is a piece of cake as IBM mainframes go.

Here are a few of the findings revealed by IBM in its research report:

AI can improve customer “experience”. I think this means that customer service becomes better with AI in it. Study says, “72 percent of those in the sample agree.”

Turbulence becomes opportunity. 100 percent of the IBM marketers assembling the report agree. I am not sure how many CEOs are into this concept; for example, Hollywood motion picture firms or Georgia-Pacific, which closed a factory and told workers not to come in tomorrow.

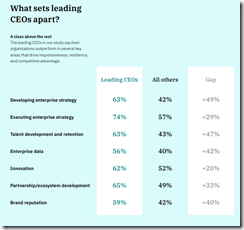

Here’s a graphic from the IBM study. Do you know what’s missing? I will give you five seconds as Arvin Haddad, the LA real estate influencer says in his entertaining YouTube videos:

The answer is, “Increasing revenues, boosting revenues, and keeping stakeholders thrilled with their payoffs.” The items listed by IBM really don’t count, do they?

“Embrace AI-fueled creative destruction.” Yep, another 100 percenter from the IBM team. No supporting data, no verification, and not even a hint of proof that AI-fueled creative destruction is doing much more than improving the lives of lots of venture outfits and some of the US AI leaders. That cash burn could set the forest on fire, couldn’t it? Answer: Of course not.

I must admit I was baffled by this table of data:

Accelerate growth and efficiency goes down with generative AI. (Is Dr. Gary Marcus right?). Enhanced decision making goes up with generative AI. Are the decisions based on verifiable facts or hallucinated outputs? Maybe busy executives in the sample choose to believe what AI outputs because a computer like the Emperor 5 was involved. Maybe “easy” is better than old-fashioned problem solving which is expensive, slow, and contentious. “Just let AI tell me” is a more modern, streamlined approach to decision making in a time of uncertainty. And the dotted lines? Hmmm.

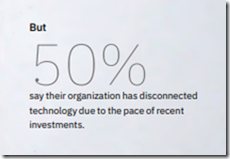

On page 40 of the report, I spotted this factoid. It is tiny and hard to read.

The text says, “50 percent say their organization has disconnected technology due to the pace of recent investments.” I am not exactly sure what this means. Operative words are “disconnected” and “pace of … investments.” I would hazard an interpretation: “Hey, this AI costs too much and the payoff is just not obvious.”

I wish to offer some observations:

- IBM spent some serious money designing this report.

- The pagination is in terms of double page spreads, so the “study” plus rah rah consumes about 80 pages if one were to print it out. On my laser printer the pages are illegible for a human, but for the designers, the approach showcases the weird ice cubes and the dotted lines, and allows important factoids to be overlooked.

- The combination of data (which strike me as less of a home run for the AI fan and more of a report about AI friction) and flat out marketing razzle dazzle is intriguing. I would have enjoyed sitting in the meetings which locked into this approach. My hunch is that when someone thought about the allegedly valid results and said, “You know these data are sort of anti-AI,” then the others in the meeting said, “We have to convert the study into marketing diamonds.” The result? The double truck, design-infused, data tinged report.

Good work, IBM. The study will definitely sell truckloads of those Emperor 5 mainframes.

Stephen E Arnold, May 9, 2025

Waymo Self Driving Cars: Way Safer, Waymo Says

May 9, 2025

This dinobaby believes everything he reads online. I know that statistically valid studies conducted by companies about their own products are the gold standard in data collection and analysis. If you doubt this fact of business life in 2025, you are not in the mainstream.

I read “Waymo Says Its Robotaxis Are Up to 25x Safer for Pedestrians and Cyclists.” I was thrilled. Imagine. I could stand in front of a Waymo robotaxi holding my new grandchild and know that the vehicle would not strike us. I wonder if my son and his wife would allow me to demonstrate my faith in the Google.

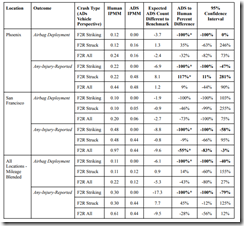

The write up explains that a Waymo study proved beyond a shadow of doubt that Waymo robotaxis are way, way, way safer than any other robotaxi. Here’s a sampling of the proof:

- 92 percent fewer crashes with injuries to pedestrians

- 82 percent fewer crashes with injuries to kids and adults on bicycles

- 82 percent fewer crashes with senior citizens on scooters and adults on motorcycles.

Google has made available a big, fat research paper which provides more knock out data about the safety of the firm’s smart robot driven vehicles. If you want to dig into the document with inputs from six really smart people, click this link.

The study is a first, and it is, in my opinion, a quantumly supreme example of research. I do not believe that Google’s smart software was used to create any synthetic data. I know that if a Waymo vehicle and another firm’s robot-driven car speed at an 80 year old like myself 100 times each, the Waymo vehicles will only crash into me 18 times. I have no idea how many times I would be killed or injured if another firm’s smart vehicle smashed into me. Those are good odds, right?
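For readers who want to check the arithmetic, “X percent fewer crashes” converts to an “N times safer” ratio as 1 / (1 - X/100). A small Python sketch using only the percentages quoted in the write up; the function names are my own:

```python
def times_safer(percent_fewer: float) -> float:
    """Convert 'X percent fewer crashes' into an 'N times safer' ratio."""
    remaining = 1 - percent_fewer / 100  # share of baseline crashes still occurring
    return 1 / remaining


def percent_fewer_for(times: float) -> float:
    """Inverse: the percent reduction needed to be 'N times safer'."""
    return (1 - 1 / times) * 100


for pct in (92, 82):
    print(f"{pct}% fewer -> {times_safer(pct):.1f}x safer, "
          f"{100 - pct} crashes per 100 baseline crashes")
print(f"25x safer requires {percent_fewer_for(25):.0f}% fewer crashes")
```

An 82 percent reduction works out to roughly 5.6 times safer and 92 percent to 12.5 times; a full “25x” ratio would require a 96 percent reduction, larger than either figure listed.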

The paper has a number of compelling presentations of data. Here’s an example:

This particular chart uses the categories of striking and struck, but only a trivial number of these kinetic interactions raise eyebrows. No big deal. That’s why the actual report consumed only 58 pages of text and hard facts. Obvious superiority.

Would you stand in front of a Waymo driving at you as the sun sets?

I am a dinobaby, and I don’t think an automobile would do too much damage if it did hit me. Would my son’s wife allow me to hold my grandchild in my arms as I demonstrated my absolute confidence in the Alphabet Google YouTube Waymo technology? Answer: Nope.

Stephen E Arnold, May 9, 2025

Mobile Phones? Really?

May 2, 2025

No AI, just the dinobaby himself.


I read one of those “modern” scientific summaries in the UK newspaper, The Guardian. Yep, that’s a begging for dollars outfit which reminds me that I have read eight stories since January 1, 2025. I am impressed with the publisher’s cookie wizardry. Too bad it does not include the other systems I use in the course of my day.

The article which caught my attention and sort of annoyed me is “Older People Who Use Smartphones Have Lower Rates of Cognitive Decline.” I haven’t been in school since I abandoned my PhD to join Halliburton Nuclear in Washington, DC in the early 1970s. I don’t remember much of my undergraduate work, including classes about setting up “scientific studies” or avoiding causation problems.

I do know that I am 80 years old and that smartphones are not the center of my information world. Am I, therefore, in cognitive decline? I suppose you should ask those who will be in my OSINT lecture this coming Friday (April 18, 2025) or those hearing my upcoming talks at a US government cyber fraud conference. My hunch is that figuring out whether the people listening to me think I am best suited for drooling in an old age home or am some weird nut job fooling people is best accomplished by research that involves sample selection, objective and interview data, and benchmarking.

The Guardian article skips right to the reason I am able to walk and chew gum at the same time without requiring [a] dentures, [b] a walker, [c] an oxygen tank, or [d] a mobile smartphone.

But, no, the write up says:

Fears that smartphones, tablets and other devices could drive dementia in later life have been challenged by research that found lower rates of cognitive decline in older people who used the technology. An analysis of published studies that looked at technology use and mental skills in more than 400,000 older adults found that over-50s who routinely used digital devices had lower rates of cognitive decline than those who used them less.

Okay, why use one smartphone? Buy two. Go whole hog. Install Tor and cruise the Dark Web and figure out why Ahmia.fi is filtering results. Download apps by the dozens and use them to get mental stimulation. I highly recommend Hamster Kombat, Act 2. Plus, one must log on to Facebook (the hot spot for seniors to check out grandchildren and keep up with obituaries) and immerse oneself in mental stimulation.

The write up says:

It is unclear whether the technology staves off mental decline, or whether people with better cognitive skills simply use them more, but the scientists say the findings question the claim that screen time drives what has been called “digital dementia”.

That’s slick. Digital dementia.
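The Guardian’s own caveat, that people with better cognitive skills may simply use devices more, can produce the reported pattern even if devices do nothing at all. A toy Python simulation makes the point; every coefficient is invented for illustration and none comes from the study. Hidden cognitive reserve drives both device adoption and decline, while device use itself has zero effect:

```python
import random
from statistics import mean


def simulate(n: int = 20_000, seed: int = 42) -> tuple[float, float]:
    """Return mean cognitive decline for heavy and light device users."""
    rng = random.Random(seed)
    heavy, light = [], []
    for _ in range(n):
        reserve = rng.gauss(0, 1)                   # hidden cognitive reserve
        heavy_user = reserve + rng.gauss(0, 1) > 0  # sharper people adopt devices more
        decline = -0.5 * reserve + rng.gauss(0, 1)  # decline depends on reserve only
        (heavy if heavy_user else light).append(decline)
    return mean(heavy), mean(light)


heavy_decline, light_decline = simulate()
print(f"mean decline, heavy users: {heavy_decline:.2f}")
print(f"mean decline, light users: {light_decline:.2f}")
```

Heavy users show less decline in this model even though the code never lets device use influence decline, which is why the correlation alone cannot settle the question.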

My thoughts about this wishy washy correlation are:

- Some “scientists” are struggling to get noticed for their research and grab smartphones and data to establish that these technological gems keep one’s mind sharp. Yeah, meh!

- A “major real news” outfit writing up the “research” illustrates a bit of what I call “information stretching.” Like spandex tights, making the “facts” convert a blob into an acceptable shape has replaced actual mental work.

- The mental decline thing tells me more about the researchers and the Guardian’s editorial approach.

My view is that engagement with people, devices, and ideas trumps the mobile phone angle. People who face physical deterioration are going to demonstrate assorted declines. If the phone helps some people, great.

I am just tired of the efforts to explain the upsides and downsides of mobile devices. These gizmos are part of the datasphere in which people live. Put a person in solitary confinement with sound deadening technology and that individual will suffer some quite sporty declines. A rich and stimulating environment is more important than a gizmo with Telegram or WhatsApp. Maybe an old timer will become the next crypto currency trading tsar?

Net net: Those undergraduate classes in statistics, psychology, and logic might be relevant, particularly to those who became thumb typists and fast scrollers at a young age. I am a dinobaby and maybe you will attend one of my lectures. Then you can tell me that I do what I do because I have a smartphone. Actually I have four. That’s why the Guardian’s view count is wrong about how often I look at the outfit’s articles.

Stephen E Arnold, May 2, 2025

Mathematics Is Going to Be Quite Effective, Citizen

March 5, 2025

This blog post is the work of a real-live dinobaby. No smart software involved.


The future of AI is becoming more clear: Get enough people doing something, gather data, and predict what humans will do. What if an individual does not want to go with the behavior of the aggregate? The answer is obvious, “Too bad.”

How do I know that a handful of organizations will use their AI in this manner? I read “Spanish Running of the Bulls’ Festival Reveals Crowd Movements Can Be Predictable, Above a Certain Density.” If the data in the report are close to the pin, AI will be used to predict and then those predictions can be shaped by weaponized information flows. I got a glimpse of how this number stuff works when I worked at Halliburton Nuclear with Dr. Jim Terwilliger. He and a fellow named Julian Steyn were only too happy to explain that the mathematics used for figuring out certain nuclear processes would work for other applications as well. I won’t bore you with comments about the Monte Carlo method or the even older Bayesian statistics procedures. But if it made certain nuclear functions manageable, the approach was okay mostly.

Let’s look at what the Phys.org write up says about bovines:

Denis Bartolo and colleagues tracked the crowds of an estimated 5,000 people over four instances of the San Fermín festival in Pamplona, Spain, using cameras placed in two observation spots in the plaza, which is 50 meters long and 20 meters wide. Through their footage and a mathematical model—where people are so packed that crowds can be treated as a continuum, like a fluid—the authors found that the density of the crowds changed from two people per square meter in the hour before the festival began to six people per square meter during the event. They also found that the crowds could reach a maximum density of 9 people per square meter. When this upper threshold density was met, the authors observed pockets of several hundred people spontaneously behaving like one fluid that oscillated in a predictable time interval of 18 seconds with no external stimuli (such as pushing).
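A back-of-the-envelope check on the quoted figures, assuming (my assumption, not the paper’s) that a crowd filled the whole 50 by 20 meter plaza at a uniform density:

```python
PLAZA_LENGTH_M = 50
PLAZA_WIDTH_M = 20
area_m2 = PLAZA_LENGTH_M * PLAZA_WIDTH_M  # 1,000 square meters

# People per square meter, as quoted in the Phys.org excerpt.
densities = {"hour before": 2, "during event": 6, "maximum": 9}
for label, d in densities.items():
    people = d * area_m2          # headcount if the whole plaza were this dense
    space_per_person = 1 / d      # square meters available to each person
    print(f"{label}: {d}/m² -> about {people:,} people, {space_per_person:.2f} m² each")

PERIOD_S = 18  # oscillation period reported for the densest pockets
print(f"Oscillations per hour at an {PERIOD_S}-second period: {3600 // PERIOD_S}")
```

At the 9-per-square-meter ceiling each person has roughly 0.11 square meters, and an 18-second cycle repeats about 200 times an hour. The uniform-density totals are upper bounds under that assumption and need not match the estimated 5,000 people.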

I think that’s an important point. But here’s the comment that presages how AI data will be used to control human behavior. Remember. This is emergent behavior similar to the hoo-hah cranked out by the Santa Fe Institute crowd:

The authors note that these findings could offer insights into how to anticipate the behavior of large crowds in confined spaces.

Once probabilities allow one to “anticipate”, it follows that flows of information can be used to take or cause action. Personally I am going to make a note in my calendar and check in one year to see how my observation turns out. In the meantime, I will try to keep an eye on the Sundars, Zucks, and their ilk for signals about their actions and their intent, which is definitely concerned with individuals like me. Right?

Stephen E Arnold, March 5, 2025

Speed Up Your Loss of Critical Thinking. Use AI

February 19, 2025

While the human brain isn’t a muscle, its neurology does need to be exercised to maintain plasticity. When a human brain is rigid, it can’t function in a healthy manner. AI is harming brains by making them not think good, says 404 Media: “Microsoft Study Finds AI Makes Human Cognition ‘Atrophied and Unprepared.’” You can read the complete Microsoft research report at this link. (My hunch is that this type of document would have gone the way of Timnit Gebru and the flying stochastic parrot, but that’s just my opinion, Hank, Advait, Lev, Ian, Sean, Dick, and Nick.)

Carnegie Mellon University and Microsoft researchers released a paper that says the more humans rely on generative AI, the more that reliance can “result in the deterioration of cognitive faculties that ought to be preserved.”

Really? You don’t say! What else does this remind you of? How about watching too much television or playing too many videogames? These passive activities (arguably less so for videogames) stunt the development of brain gray matter and, in a flight of Mary Shelley rhetoric, make a brain rot! What else did the researchers discover when they studied 319 knowledge workers who self-reported their experiences with generative AI?

“ ‘The data shows a shift in cognitive effort as knowledge workers increasingly move from task execution to oversight when using GenAI,’ the researchers wrote. ‘Surprisingly, while AI can improve efficiency, it may also reduce critical engagement, particularly in routine or lower-stakes tasks in which users simply rely on AI, raising concerns about long-term reliance and diminished independent problem-solving.’”

By the way, we definitely love and absolutely believe data based on self reporting. Think of the mothers who asked their teens, “Where did you go?” The response, “Out.” The mothers ask, “What did you do?” The answer, “Nothing.” Yep, self reporting.

Does this mean generative AI is a bad thing? Yes and no. It’ll stunt the growth of some parts of the brain, but other parts will grow in tandem with the use of new technology. Humans adapt to their environments. As AI becomes more ingrained into society it will change the way humans think but will only make them sort of dumber [sic]. The paper adds:

“ ‘GenAI tools could incorporate features that facilitate user learning, such as providing explanations of AI reasoning, suggesting areas for user refinement, or offering guided critiques,’ the researchers wrote. ‘The tool could help develop specific critical thinking skills, such as analyzing arguments, or cross-referencing facts against authoritative sources. This would align with the motivation enhancing approach of positioning AI as a partner in skill development.’”

The key is to not become overly reliant on AI but also be aware that the tool won’t go away. Oh, when my mother asked me, “What did you do, Whitney?” I responded in the best self reporting manner, “Nothing, mom, nothing at all.”

Whitney Grace, February 19, 2025

More Data about What Is Obvious to People Interacting with Teens

December 19, 2024

This blog post is the work of an authentic dinobaby. No smart software was used.


Here’s another one of those surveys which provide some data about a very obvious trend. “Nearly Half of US Teens Are Online Constantly, Pew Report Finds” states:

Nearly half of American teenagers say they are online “constantly” despite concerns about the effects of social media and smartphones on their mental health…

No kidding. Who knew?

There were some points in the cited article which seemed interesting if the data are reliable, the sample is reliable, and the analysis is reliable. But, just for yucks, let’s assume the findings are reasonably representative of what the future leaders of America are up to when their noses are pressed against an iPhone or (gasp!) an Android device.

First, YouTube is the “single most popular platform” teenagers use. However, in this Pew study YouTube captured 90 percent of the sample, not the quite stunning 95 percent previously documented by the estimable survey outfit.

Second, the write up says:

There was a slight downward trend in several popular apps teens used. For instance, 63% of teens said they used TikTok, down from 67% and Snapchat slipped to 55% from 59%.

Improvement? Sure.

And, finally, I noted what might be semi-bad news for parents and semi-good news for Meta / Zuck:

X saw the biggest decline among teenage users. Only 17% of teenagers said they use X, down from 23% in 2022, the year Elon Musk bought the platform. Reddit held steady at 14%. About 6% of teenagers said they use Threads, Meta’s answer to X that launched in 2023. Meta’s messaging service WhatsApp was a rare exception in that it saw the number of teenage users increase, to 23% from 17% in 2022.

I do have a comment. Lots of numbers which suggest reading, writing, and arithmetic are not likely to be priorities for tomorrow’s leaders of the free world. But whatever they decide and do, those actions will be on video and shared on social media. Outstanding!

Stephen E Arnold, December 19, 2024

Smart Software Project Road Blocks: An Up-to-the-Minute Report

October 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.


I worked through a 22-page report by SQREAM, a next-gen data services outfit with GPUs. (You can learn more about the company at this buzzword dense link.) The title of the report is:

The report is a marketing document, but it contains some thought provoking content. The “report” was “administered online by Global Surveyz [sic] Research, an independent global research firm.” The explanation of the methodology was brief, but I don’t want to drag anyone through the basics of Statistics 101. As I recall, few cared and were often good customers for my class notes.

Here are three highlights:

- Smart software and services cause sticker shock.

- Cloud spending by the survey sample is going up.

- And the killer statement: 98 percent of the machine learning projects fail.

Let’s take a closer look at the astounding assertion about the 98 percent failure rate.

The stage is set in the section “Top Challenges Pertaining to Machine Learning / Data Analytics.” The report says:

It is therefore no surprise that companies consider the high costs involved in ML experimentation to be the primary disadvantage of ML/data analytics today (41%), followed by the unsatisfactory speed of this process (32%), too much time required by teams (14%) and poor data quality (13%).

The conclusion the authors of the report draw is that companies should hire SQREAM. That’s okay, no surprise because SQREAM ginned up the study and hired a firm to create an objective report, of course.

So money is the Number One issue.

Why do machine learning projects fail? We know the answer: Resources or money. The write up presents as fact:

The top contributing factor to ML project failures in 2023 was insufficient budget (29%), which is consistent with previous findings – including the fact that “budget” is the top challenge in handling and analyzing data at scale, that more than two-thirds of companies experience “bill shock” around their data analytics processes at least quarterly if not more frequently, that that the total cost of analytics is the aspect companies are most dissatisfied with when it comes to their data stack (Figure 4), and that companies consider the high costs involved in ML experimentation to be the primary disadvantage of ML/data analytics today.

I appreciated the inclusion of the costs of data “transformation.” Glib smart software wizards push aside the hassle of normalizing data so the “real” work can get done. Unfortunately, the costs of fixing up source data are often another cause of “sticker shock.” The report says:

Data is typically inaccessible and not ‘workable’ unless it goes through a certain level of transformation. In fact, since different departments within an organization have different needs, it is not uncommon for the same data to be prepared in various ways. Data preparation pipelines are therefore the foundation of data analytics and ML….

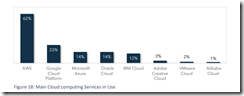

In the final pages of the report a number of graphs appear. Here’s one that stopped me in my tracks:

In the sample, 62 percent used Amazon Web Services. Number 2 was Google Cloud at 23 percent. And in third place, quite surprisingly, was Microsoft Azure at 14 percent, tied with Oracle. A question which occurred to me is: “Perhaps the focus on sticker shock is a reflection of Amazon’s pricing, not just people and overhead functions?”
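One quick probe of the sample is to add up the percentages. A minimal Python check using only figures quoted from the report (the dictionary labels are my shorthand): the four ML challenges sum to exactly 100 percent, which looks like a single-choice question, while the cloud shares sum to more than 100 percent. A plausible reading, which the report excerpt does not confirm, is that respondents could name more than one cloud provider.

```python
# Percentages quoted in the SQREAM report excerpts.
challenges = {"high costs": 41, "slow speed": 32, "team time": 14, "data quality": 13}
cloud_shares = {"AWS": 62, "Google Cloud": 23, "Microsoft Azure": 14, "Oracle": 14}

challenge_total = sum(challenges.values())
cloud_total = sum(cloud_shares.values())

print(f"ML challenges total: {challenge_total}%")
print(f"Cloud shares total: {cloud_total}%")
if cloud_total > 100:
    # Totals above 100% usually point to a multi-select question.
    print(f"Cloud shares exceed 100% by {cloud_total - 100} points")
print(f"AWS share is {cloud_shares['AWS'] / cloud_shares['Google Cloud']:.1f}x Google Cloud's")
```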

I will have to wait until more data becomes available to me to determine if the AWS skew and the report findings are normal or outliers.

Stephen E Arnold, October 1, 2024