YouTube and Its Content Play

March 31, 2013

Quite an interesting write up this: “Lessons Learned from YouTube’s $300 M Hole.” First, the “m” means millions. Second, the write up provides a useful thumbnail about Google’s content play for YouTube. The idea, if I understand it, was to enlist fresh thinking and get solid content from some “big names”.

The innovative approach elicited this comment in the write up:

There were a lot of recipients of this money, and many of them were major media companies trying their hand at online video that received some fat checks, up to $5M a piece, to launch TV-like channels. What we all found out is that, no matter how hard you push them and how much money you spend on them, YouTube doesn’t work like TV…and funding it that way is daft.

If the article is accurate, one channel earned back money. The other hundred or so did not.

The lessons learned from Google’s “daft” approach struck me as confirmation of the observations I offered in a report to a former client about Thomson Reuters’ “what the heck” leap into the great Gangnam style of YouTube; namely:

- Viewership and Google money are correlated

- Online video is different from “real” TV shows

- Meeting the needs of users is different from meeting the needs of advertisers

The conclusion of the write up struck me as illustrative of the Google approach:

With regards to the last lesson, allow me to submit to any YouTube employees out there that the ad agency doesn’t have the power in this equation. YouTube is a young company, it does not need to convert 100% of its value to dollars. Please, let the advertisers figure out for themselves how to tackle this very new medium instead of trying to shape the medium to meet their needs. Seems to me, that’s the strategy that got Google where it is today.

In short, Google may have lost contact with what made it successful.

Stephen E Arnold, March 31, 2013

Predictive Analytics Becoming an Important Governance Tool

March 31, 2013

Many of our cities need help right now. Those destined for default may need software to help with planning the future, and predictive analytics may be the answer. That is but one area where analytics could help our municipalities; GCN examines the relationship between such software and government agencies in “Analytics: Predicting the Future (and Past and Present).”

Though police are still a long way from the predictive power of 2002’s “Minority Report,” notes writer Rutrell Yasin, police departments in a number of places are using analytics software to forecast trouble. And the advantages are not limited to law enforcement.

The article begins with a basic explanation of “predictive analytics,” but quickly moves on to some illustrations. Miami-Dade County, Florida, for example, uses products from IBM to manage water resources, traffic, and crime. One key advantage—interdepartmental collaboration. See the article for the details on that county’s use of this technology.

Though perhaps not the most popular of applications, predictive analysis is also now being used to enhance tax collection. So far, the IRS and the Australian Taxation Office have embraced the tools, but certainly more tax agencies must be eyeing the possibilities. Any tax cheats out there: you have been warned.

Leave it to the CIA’s head technology guy to capture the essence of the predictive analysis picture as we move into the future. Yasin writes:

“The real power of big data analytics will be unlocked when analytic tools are in the hands of everybody, not just among data scientists who will tell people how to use it, according to Gus Hunt, the CIA’s CTO, during a recent seminar on Big Data in Washington, D.C.

“‘We are going to have to get analytics and visualization [tools] that are so dead-simple easy to use, anybody can take advantage of them, anybody can use them,’ Hunt said.”

Are we there yet?

Cynthia Murrell, March 31, 2013

Sponsored by ArnoldIT.com, developer of Augmentext

Big Data and the New Mainframe Era

March 30, 2013

Short honk. Navigate to either your paper copy of the Wall Street Journal or the electronic version of “Demand Surges for Supercomputers.” The estimable Wall Street Journal asserts:

Sales of supercomputers priced at $500,000 and higher jumped 29% last year to $5.6 billion, research firm IDC estimated. That contrasted with demand for general-purpose servers, which fell 1.9% to $51.3 billion, the firm said.

Most folks assume that nifty cloud services are just the ticket for jobs requiring computational horsepower. Maybe not? For the cheerleaders for big data, the hyperbole about crunching bits every which way may usher in a new era of (hang on, gentle reader) the 21st century mainframe.

Amazon, Google, and Rackspace type number crunching solutions may not be enough for some applications. If big iron continues to sell along with big storage, I may dust off my old JCL reference book and brush up on DASDs.

Stephen E Arnold, March 30, 2013

Wolters Kluwer Sheds AccessData Interest

March 30, 2013

A group that specializes in software and services for the legal realm is shifting gears, we learn from “Wolters Kluwer Divests Its Minority Interest in AccessData Group” at Marketwire. Wolters Kluwer is jettisoning its 25 percent interest in AccessData Group, in a move they say will allow them to refocus. The press release states:

“The sale of its minority interest in AccessData Group reinforces Wolters Kluwer Corporate Legal Services’ (CLS) focus on its core legal services markets. CLS will continue to strengthen its position as the partner of choice for legal professionals around the world, supporting them in managing corporate compliance, legal entities, lien portfolios, brands, and legal spend.

“AccessData Group was formed in 2010 by combining the businesses of AccessData and Summation, formerly part of CLS. AccessData Group operates in the digital investigation and litigation support market. Following this 2010 merger, Wolters Kluwer remained as a strategic investor to support the integration of Summation and AccessData.”

Wolters Kluwer Corporate Legal Services strives to stay on top of the newest tools for ensuring transparency, accountability, and accuracy. It began long, long ago, in 1892, as the CT Corporation and was bought just over a century later by the Wolters Kluwer Group.

Digital forensics, eDiscovery & litigation support, and cyber security are AccessData’s areas of expertise. The company was founded in 1987 and is headquartered in Lindon, Utah. AccessData employs about 400 souls and maintains offices in the U.K., Australia, and several major U.S. cities.

Cynthia Murrell, March 30, 2013

Promise Best Practices: Encouraging Theoretical Innovation in Search

March 29, 2013

The photo below shows the goodies I got for giving my talk at Cebit in March 2013. I was hoping for a fat honorarium, expenses, and a dinner. I got a blue bag, a pen, a notepad, a 3.72 gigabyte thumb drive, and numerous long walks. The questionable hotel in which I stayed had no shuttle. Hitchhiking looked quite dangerous. Taxis were as rare as an educated person in Harrod’s Creek, and I was in the same city as Leibniz Universität. Despite my precarious health, I hoofed it to the venue, which was eerily deserted. I think only 40 percent of the available space was used by Cebit this year. The hall in which I found myself reminded me of an abandoned subway stop in Manhattan with fewer signs.

The PPromise goodies. Stuffed in my bag were hard copies of various PPromise documents. The bulkiest of these in terms of paper were also on the 3.72 gigabyte thumb drive. Redundancy is a virtue, I think.

Finally on March 23, 2013, I got around to snapping the photo of the freebies from the PPromise session and reading a monograph with this moniker:

Promise Participative Research Laboratory for Multimedia and Multilingual Information Systems Evaluation. FP7 ICT 2009.4.3, Intelligent Information Management. Deliverable 2.3 Best Practices Report.

The acronym should be “PPromise,” not “Promise.” The double “P” makes searching for the group’s information much easier in my opinion.

If one takes the first letter of “Promise Participative Research Laboratory for Multimedia and Multilingual Information Systems Evaluation” one gets PPromise. I suppose the single “P” was an editorial decision. I personally like “PP” but I live in a rural backwater where my neighbors shoot squirrels with automatic weapons and some folks manufacture and drink moonshine. Some people in other places shoot knowledge blanks and talk about moonshine. That’s what makes search experts and their analyses so darned interesting.

To point out the vagaries of information retrieval, my search for a publicly accessible version of the PPromise document returned a somewhat surprising result.

A couple more queries did the trick. You can get a copy of the document without the blue bag, the pen, the notepad, the 3.72 gigabyte thumb drive, and the long walk at http://www.promise-noe.eu/documents/10156/086010bb-0d3f-46ef-946f-f0bbeef305e8.

So what’s in the Best Practices Report? Straightaway you might not know that the focus of the whole PPromise project is search and retrieval. Indexing, anyone?

Let me explain what PPromise is or was, dive into the best practices report, and then wrap up with some observations about governments in general and enterprise search in particular.

GitHub Gets Some Love from Microsoft

March 29, 2013

GitHub is a big deal, the best possible brand of social media for developers, enabling open source code sharing and collaboration. Mac and Linux operating systems have long embraced GitHub, but acceptance by Microsoft has been a bit more reluctant. However, Wired shares some good news in its article, “Microsoft Windows Gets More Love From Git.”

The article has this to say about the latest change for Windows developers:

“The site is the home to more than 4.5 million open source projects, letting software coders share and collaborate on software code, and sometimes, people share other stuff too. But Atlassian — the Australian company behind popular developer tools like JIRA — . . . is rolling out a tool that will compete with GitHub’s Windows client: SourceTree, a visual tool for working with GitHub, BitBucket, Stash or any other code repository based on the code-management tools Git or Mercurial. SourceTree has long been available for Macintosh OS X, but as of Tuesday, Windows developers can download the public beta.”

This is the most recent example of the softening of relations between the two. A few months ago, Microsoft integrated support for Git, the code-management tool underlying GitHub, into Visual Studio. So what does this mean? Open source is here to stay, and Microsoft is scrambling to get on board. It also means that Microsoft is likely to start losing ground quickly in the enterprise search arena to smart open source or open core solutions like LucidWorks. Microsoft has never really been a leader in innovation, but now it seems to be just hoping to stay in the game.

Emily Rae Aldridge, March 29, 2013

Sponsored by ArnoldIT.com, developer of Beyond Search

Google Adds Another AI Academic to the Mix

March 29, 2013

A recent career move from prominent algorithm designer Geoffrey Hinton has big implications, according to blogger Mohammed AlQuraishi’s post, “What Hinton’s Google Move Says About the Future of Machine Learning.” Hinton, an esteemed computer science professor at the University of Toronto, recently sold his neural-network machine-learning startup DNNresearch to Google. Though not transferring full-time to Google, the scientist reportedly plans to help the company implement his brainchild while remaining on at the university.

This development follows Google’s pursuit of several other gifted academic minds that specialize in large-scale machine learning. AlQuraishi says these moves indicate the future of machine learning and artificial intelligence. He writes:

“Machine learning in general is increasingly becoming dependent on large-scale data sets. In particular, the recent successes in deep learning have all relied on access to massive data sets and massive computing power. I believe it will become increasingly difficult to explore the algorithmic space without such access, and without the concomitant engineering expertise that is required. The behavior of machine learning algorithms, particularly neural networks, is dependent in a nonlinear fashion on the amount of data and computing power used. An algorithm that appears to be performing poorly on small data sets and short training times can begin to perform considerably better when these limitations are removed. This has in fact been in a nutshell the reason for the recent resurgence in neural networks.”

It is also the reason, the article asserts, that research in the area is destined to move from academia to the commercial sector. The piece goes on to compare what is happening now with the last century’s shift, when the development of computers moved from universities, supported by government funding, to the industrial sector. It is a thought-provoking comparison; the one-page article is worth a look.

Is AlQuraishi right? Are the machine-learning breakthroughs about to shift to the corporate realm? If so, will that be good or bad for consumers? Stay tuned.

Cynthia Murrell, March 29, 2013

Penning a Bestseller Not as Profitable as It Might Seem

March 29, 2013

So you wanna be a professional writer? You might not want to make Amazon’s bestseller list your marker of success; author Patrick Wensink lets us in on how little his place on that roster did for him financially in Salon’s “My Amazon Bestseller Made Me Nothing.” In fact, as he tells it, making less than one would like on literary success is a burden most authors must bear.

After Wensink’s novel, “Broken Piano for President,” garnered media attention and joined such entries on Amazon’s bestseller list as “Hunger Games” and “Bossypants,” some folks assumed he was suddenly raking in the dough. He writes:

“I can sort of see why people thought I was going to start wearing monogrammed silk pajamas and smoking a pipe.

“But the truth is, there’s a reason most well-known writers still teach English. There’s a reason most authors drive dented cars. There’s a reason most writers have bad teeth. It’s not because we’ve chosen a life of poverty. It’s that poverty has chosen our profession.

“Even when there’s money in writing, there’s not much money.”

Wensink reports that, before taxes, he made just $12,000 on the 4,000 copies Amazon sold of his popular book. He isn’t complaining, he says—it is much more than he has made on any single project in the past.

And that is exactly the problem; I suppose I’ll have to find another way to make my millions.

Cynthia Murrell, March 29, 2013

Thomson Reuters: The Pointy End of a Business Sector

March 28, 2013

Thomson Reuters has been a leader in professional publishing for many years. I lost track of the company after the management shake up which accompanied the departure of Michael Brown and some other top executives. Truth be told, I was involved in work for the US government, and it was new, exciting, and relevant. My work for publishing companies trying to surf the digital revolution reminded me of my part time job air hammering slag at Keystone Steel & Wire Company.

I read the Wall Street Journal’s “Data Don’t Add Up for Thomson Reuters.” (This online link can go dead or to a pay wall without warning, and I don’t have an easy way to update links in this free blog. So, there you go.) You can find the story in the printed version of the newspaper or online if you have a subscription. The printed version appears on page C-10 of the March 28, 2013 edition.

The main point is that Thomson Reuters has not been able to grow organically by selling more information to professionals or by buying promising companies and surfing on surging revenue streams. This is an important point, and I will return to it in a moment. The Wall Street Journal story said:

Shares of Thomson Reuters remain 13% below where they were when the deal closed in April 2008, partly reflecting difficulty integrating two large, international companies.

The article runs through other challenges which range from Bloomberg to Dow Jones, from ProQuest to LexisNexis. The article is short, so the list of challenges has been truncated to a handful of big names.

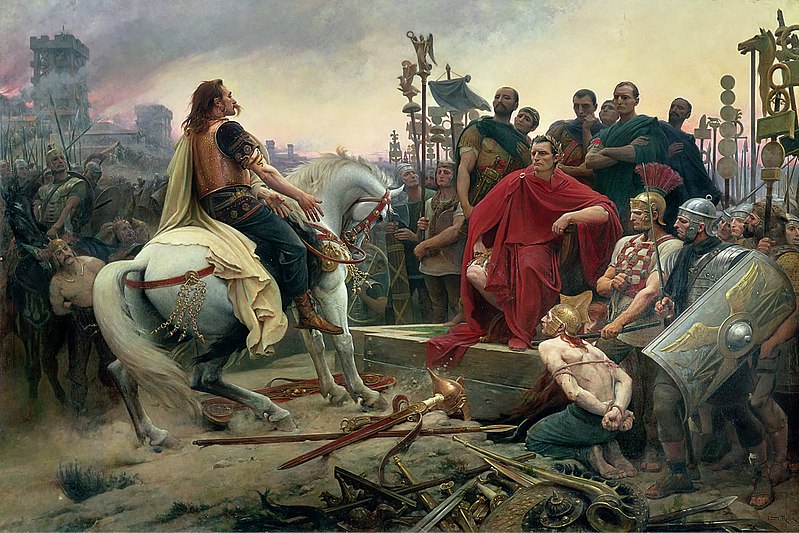

Do the professional publishing companies have access to talent on a par with Julius Caesar’s capabilities? In my opinion, without management of exceptional skill, professional publishing companies will be sucked through the rip in the fabric of credibility which Thomson Reuters’ pointed spear has created: Flat earnings, more wrenching cost cutting, and products which confuse customers and do not increase revenue and profits. Image from Wikipedia Vercingetorix write up.

But let’s set aside Thomson Reuters. I want to look at the Thomson Reuters situation as the pointy end of a spear. The idea is that Thomson Reuters has worked hard for 20 or 30 years to be the best managed, smartest, and most technologically adept company in the professional publishing sector. With hundreds of brands and almost total saturation of certain markets like trademark and patent information, legal information, and data for wheeler dealers, Thomson Reuters has been trying hard, very hard, to make the right moves. Is time running out?

Consider the professional publishing sector, which includes outfits as diverse as Cambridge Scientific Abstracts, Ebsco Electronic Publishing, Elsevier, and Wolters Kluwer, to name a few outfits with hundreds of millions in revenue. Each of these companies shares some components:

- Information is “must have” as opposed to nice to have

- Information is for-fee, not free

- Customer segments are not spending in the way the analysts predicted

- Deals have not delivered significant new revenue

- Management shifts replace executives with similar, snap in type people. Innovative and disruptive folks find themselves sitting alone at company meetings.

MongoDB Upgrades to Enterprise

March 28, 2013

MongoDB is a go-to in the NoSQL database realm. The product has steadily gained more and more followers for its ability to house large amounts of data across several computer servers. The company behind Mongo, 10gen, is upping the game and appealing to the broader (and harder to please) enterprise crowd. Read the full details in the Wired article, “NoSQL Database MongoDB Reaches Beyond Software Coders.”

The article states:

“But the company that develops Mongo — 10gen — is hoping to reach beyond the developers and into big businesses. On Tuesday, with this in mind, the company unveiled the ‘enterprise edition’ of the database that’s specifically designed for use in the business world. The version of the database includes a few tools you won’t find in the open source code. It’s an approach known as ‘open core’ — building proprietary features on an open source foundation.”

The open core model is a successful one. In fact, part of Mongo’s latest news is their partnership with LucidWorks, a company that pioneered the open core model. LucidWorks specializes in enterprise search through Apache Lucene and Solr. When the storage power of MongoDB’s NoSQL meets the search and discovery function of LucidWorks, enterprises are sure to find a winning combination.

Emily Rae Aldridge, March 28, 2013
