Another Poobah Insight: Marketing Is an Opportunity

March 21, 2012

Please, read the entire write up “Marketing Is the Next Big Money Sector in Technology.” When you read it, you will want to forget the following factoids:

- Google has been generating significant revenue from online ad services for about a decade

- Facebook is working to monetize every single one of its 800 million plus users with a range of marketing services

- Start ups in and around marketing are flourishing as the scrub brush search engine optimizers of yore bite the dust. A good example is the list of exhibitors at this conference.

The hook for the story is a quote from an azure chip consultancy. The idea is that as traditional marketing methods flame out, crash, and burn, digital marketing is the future. So the direct mail of the past will become the spam email of the future, I predict. Imagine.

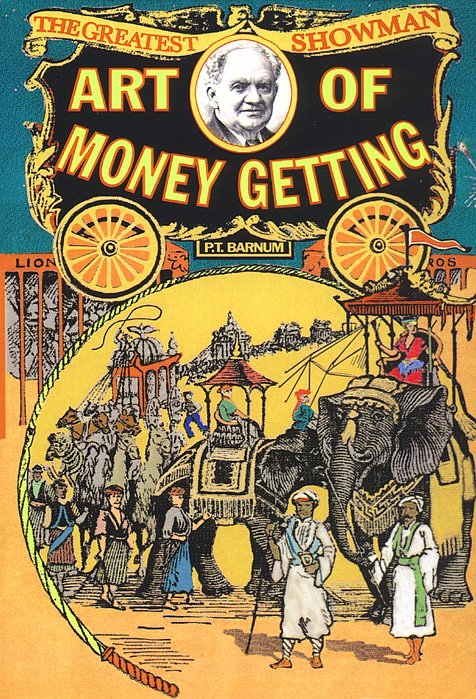

Marketing will chew up an organization’s information technology budget. The way this works is that since “everyone” will have a mobile device, the digital pitches will know who, what, where, why, and how a prospect thinks, feels, and expects. The revolution is on its way, and there’s no one happier than a Madison Avenue executive who contemplates the riches from the intersection of technology, hapless prospects, and good old fashioned hucksterism. The future looks like a digital P.T. Barnum, I predict.

Are Search Vendors Embracing Desperation PR?

March 12, 2012

The addled goose is in recovery mode. I have been keeping my feathers calm and unruffled. I am maintaining a low profile. I have undertaken no travel for 2.5 months. I just float amidst the detritus of my pond filled with mine drainage run off. I don’t send spam. I don’t make sales calls. I don’t talk on the phone unless someone pays me. In short, I am out of gas, at the end of the trail, and ready for my goose to be cooked, but I want to express an opinion about desperation marketing as practiced by public relations professionals and PR firms’ search related clients.

A mine drainage pond. I stay here. I mind my business. Unlike AtomicPR and Voce Communications type firms, I don’t spam. In general, I bristle at desperation marketing, sales, and public relations.

Imagine my reaction when I get unsolicited email from a PR firm such as Porter Novelli. This Porter Novelli PR firm is my newest plight. Mercifully AtomicPR has either removed me from its spam list or figured out that I sell time just like an attorney but write about PR spam with annoying regularity.

The Porter Novelli outfit owns something called Voce Communications. Voce thinks I am a “real” journalist. I have been called many things, but “journalist” is a recent and inaccurate appellation. The only problem is that I sell time. In my opinion, “real” journalists mostly look for jobs, pretend to be experts on almost anything in the dictionary, worry about getting fired, or browse the franchise ads looking for the next Taco Bell type opportunity.

Spamming me and then reprimanding me for charging for my time are characteristics of what I call desperation marketing. In the last six months, desperation marketing has become the latest accoutrement of the haute couture in PR saucisson. Desperation PR is either in vogue or a concomitant of what some describe as a “recovering economy.”

Here’s the scoop: I received an email wanting me to listen to some corporate search engine big wigs tell me about their latest and greatest software widget. The idea, even when I am paid to endure such pression de gonflage, is tiring. When someone wants me to participate in a webinar, the notion is downright crazy. I usually reply, “Go away.”

To the Voce “expert” I fired off an email pointing the spammer to my cv (www.arnoldit.com/sitemap.html) and the About page of this blog. Usually the former political science major or failed middle school teacher replies, “We saw that you were a journalist in a list of bloggers.” After this lame comment, the PR Sasha or Trent moves on. No such luck with Voce’s laser-minded desperation PR pro.

Here’s what I received:

[Bunny Rabbit] on behalf of EntropySoft. We have not yet had an opportunity to work together formally yet, but I wanted to reach out to you to see if we can arrange a conversation for you with the executives at EntropySoft – a company that I know you are familiar with given your recent conversation with BA Insights (which is an EntropySoft partner that uses our connector technology) and I know that you have mentioned EntropySoft in past articles for KMWorld.

The advantage that EntropySoft brings to the market is that through the use of its connector technology, EntropySoft can help companies make sense of unstructured data (or as you describe it – tsunami!) ensuring that IT teams can not only access or connect to just about everything worth connecting to in the KM universe, but that they can also act on it. Each EntropySoft connection is bi-directional: teams can access and act on everything. So from a SharePoint standpoint, EntropySoft’s connectors can now connect to everything in SharePoint (we just made news on this last week at the SPTechConference in San Francisco, see press release below) enabling search through FAST for SharePoint or the SharePoint Search Server Portal engine, as well as any other enterprise content management systems.

Do you have some available time on your calendar in the next week to have a phone conversation with EntropySoft? Please let me know what works for you.

Okay, I sure do know about EntropySoft. I have French clients. The two top chiens at EntropySoft know me and find me less than delectable. In the last year, the company has gunned its engine with additional financing, but for me, the outfit provides code widgets which hook one system to another. Useful stuff, but I am not going to flap my feathers in joy about this type of technology. Connectors are available from Oracle, open source projects, and outfits in Germany and India. Connector technology is important, but it is like many utility-centric technologies: out of sight and out of mind until the exception folder overflows. Then connectors get some attention. How often do you think about exporting an RTF 1.6 file from FrameMaker? Exactly. Connectors. There but not the belle of the ball at the technology prom.

A book promoted on the Voce Communications Web site. I was not offered a “free” copy. I bet the book is for sale, just like my time. What would happen if I called the author and asked for a free copy? Hmm.

More Allegations about Fast Search Impropriety

March 8, 2012

With legions of Microsoft Certified Resellers singing the praises of the FS4SP (formerly the Fast Search & Transfer search and retrieval system), sour notes are not easily heard. I don’t think many users of FS4SP know or care about the history of the company, its university-infused technology, or the machinations of the company’s senior management and Board of Directors. Ancient history.

I learned quite a bit in my close encounters with the Fast ESP technology. No, ESP does not mean extra sensory perception. ESP allegedly meant the enterprise search platform. Fast Search, before its purchase by Microsoft, was a platform, not a search engine. The idea was that the collection of components would be used to build applications in which search was an enabler. The idea was a good one, but search based applications required more than a PowerPoint to become a reality. The 64 bit Exalead system, developed long before Dassault acquired Exalead, was one of the first next generation, post Google systems to have a shot at delivering a viable search based application. (The race for SBAs, in my opinion, is not yet over, and there are some search vendors like PolySpot which are pushing in interesting new directions.) Fast Search was using marketing to pump up license deals. In fact, the marketing arm was more athletic than the firm’s engineering units. That, in my view, was the “issue” with Fast Search. Talk and demos were good. Implementation was a different platter of herring five ways.

Fast Search block diagram circa 2005. The system shows semantic and ontological components and asserts information on demand and content publishing functions, all in addition to search and retrieval. Similar systems are marketed today, but hybrid content manipulation systems are often a work in progress in 2012. © Fast Search & Transfer

I once ended up with an interesting challenge resulting from a relatively large-scale, high-profile search implementation. Now you may have larger jobs than I typically get, but I was struggling with the shift from Inktomi to the AT&T Fast search system in order to index the public facing content of the US federal government.

Inktomi worked reasonably well, but the US government decided in its infinite wisdom to run a “free and open competition.” The usual suspects responded to the request for proposal and statement of work. I recall that “smarter than everyone else” Google ignored the US government’s requirements.

This image is from a presentation by Dr. Lervik about Digital Libraries, no date. The slide highlights the six key functions of the Fast Search search engine. These are extremely sophisticated functions. In 2012, only a few vendors can implement a single system with these operations running in the core platform. In fact, the wording could be used by search vendor marketers today. Fast Search knew where search was heading, but the future still has not arrived because writing about a function is different from delivering that function in a time and resource window which licensees can accommodate. © Fast Search & Transfer

Fast Search, with the guidance of savvy AT&T capture professionals, snagged the contract. That was a fateful procurement. Fast Search yielded to a team from Vivisimo and Microsoft. Then Microsoft bought Fast Search, and the US government began its shift to open source search. Another consequence is that Google, as you may know, never caught on in the US Federal government in the manner that I and others assumed the company would. I often wonder what would have happened if Google’s capture team had responded to the statement of work instead of pointing out that the requirements were not interesting.

Exogenous Complexity 5: Fees for Online Content

March 7, 2012

I wanted to capture some thoughts sparked by some recent articles about traditional publishing. If you believe that the good old days are coming back for newspapers and magazines, stop reading. If you want to know my thoughts about the challenges many, many traditional publishers face, soldier on. Want to set me straight? Please use the comments section of this Web log. —Stephen E Arnold

Introduction

I would have commented on the Wall Street Journal’s “Papers Put Faith in Pay Walls” on Monday, March 5, 2012. Unfortunately, the dead tree version of the newspaper did not arrive until this morning (March 6, 2012). I was waiting to find out how long it would take the estimable Wall Street Journal to get my print subscription to me in rural Kentucky. The answer? A day as in “a day late and a dollar short.”

Here’s what I learned on page B5:

As more newspapers close the door on free access to their websites [sic], some publishers are still waiting for paying customers to pour in.

No mention of the alleged calisthenics which News Corp.’s staff have undertaken in order to get a story. But the message for me was clear. Newspapers, like most businesses dependent on resource-rich, non-digital methods of generating revenue, have to do something. In the case of the alleged actions at News Corp., I am hypothesizing that almost anything seems to be worth considering.

Is online to blame? Are dark forces of 12 year olds who download content the root of the challenges? Is technology going to solve another problem or just add to the existing challenges?

Making money online is a tough, thankless task. A happy quack to http://www.calwatchdog.com/tag/sisyphus/

My view is that pay walls are just one manifestation of the wrenching dislocations demographic preferences, technology, financial larking, and plain old stubbornness unleash. The Wall Street Journal explains several pay wall plans; for example, the Wall Street Journal is $207 a year versus the New York Times’s fee of $195.

The answer for me is that I did not miss the hard copy Wall Street Journal too much. I dropped the New York Times print subscription and I seem to be doing okay without that environmentally-hostile bundle of cellulose and chemically-infused ink. Furthermore, I don’t use either the Wall Street Journal or the New York Times online. The reason is that edutainment, soft features, recycled news releases, and sensationalism do not add value to my day in Harrod’s Creek, Kentucky. When I look at an aggregation of stories, sometimes I click and see a full text article from one of these two newspapers. Sometimes I get the story. Sometimes I get asked to sign up. If the story displays, fine. If not, I click to another tab.

When I worked at Halliburton NUS and then at Booz, Allen & Hamilton, reading the Wall Street Journal and the New York Times was part of the “package.” Now I am no longer a blue chip “package.” I am okay with that repositioning. The upside is that I don’t fool with leather briefcases, ties, and white shirts unless I have to attend a funeral. At my own, I have no doubt I will be decked out in my “real” job attire. The downside is that I am getting old and, at age 67, less and less interested in MBA wackiness. For now, I am okay with tan pants, a cheap nylon shirt, a worn Reebok warm up jacket, and whatever information I can view on my computing device.

Newspaper publishing has not adjusted to age as I have. Here’s a factoid from my notes about online revenue:

When printed content shifts to digital form, the online version shifts from “must have” to “nice to have”. As a result, the revenues from online cost more to generate and despite the higher costs, the margins suck. Publishers don’t like to accept the fact that the shift to online alters the value of the content. Publishers have high fixed costs, and online thrives when costs are driven as low as possible.

Net net: Higher costs and lower revenue are the status quo for most traditional publishers. Sure, there are exceptions, but these are often on a knife edge of survival. Check out the hapless Thomson Corp. Just don’t take the job of CEO because it is a revolving door peppered with logos of Thomson and Reuters and financial results which are deteriorating. Think Thomson is a winner? Jump to Wolters Kluwer, Pearson, or almost any other “real” publishing outfit. These are interesting environments for lawyers and accountants. Journalists are not quite as sanguine as those with golden parachutes and a year or two to “fix up” the balance sheets.

Ontoprise GmbH: Multiple Issues Says Wikipedia

March 3, 2012

Now Wikipedia is a go-to resource for Google. I heard from one of my colleagues that Wikipedia turns up as the top hit on a surprising number of queries. I don’t trust Wikipedia, but then I don’t trust any encyclopedia produced by volunteers. Volunteers often participate in a spoofing fiesta.

Note: I will be using this symbol when I write about subjects which trigger associations in my mind about use of words, bound phrases, and links to affect how results may be returned from Exalead.com, Jike.com, and Yandex.ru, among other modern Web indexing services either supported by government entities or commercial organizations.

I was updating my list of Overflight companies. We have added five companies to a new Overflight service called, quite imaginatively, Taxonomy Overflight, and we are going through the process of figuring out if the outfits are in business or putting on a vaudeville act for paying customers.

The first five companies are:

We will be adding to the Taxonomy Overflight another group of companies on March 4, 2012. I have not yet decided how to “score” each vendor. For enterprise search Overflight, I use a goose method. Click here for an example: Overflight about Autonomy. Three ducks. Darned good.

I wanted to mention one quite interesting finding. We came across a company doing business as Ontoprise. The firm’s Web site is www.ontoprise.de. We are checking to see which companies have legitimate Web sites, no matter how sparse.

We noted that the Wikipedia entry for Ontoprise carried this somewhat interesting “warning”:

The gist of this warning is to give me a sense of caution, if not wariness, with regard to this company, which offers products that deliver “ontologies.” The company’s research is called “Ontorule,” which has a faintly ominous sound to me. Convera, before it experienced financial stress, chose product names that read like science fiction but were less dogmatic than Ontoprise’s language choices. So I cannot correlate Convera and Ontoprise on anything other than my personal “semantic” baloney detector. But Convera went south in a rather unexpected business action.

Exogenous Complexity 4: SEO and Big Data

February 29, 2012

Introduction

In the interview with Dr. Linda McIsaac, founder of Xyte, Inc., I learned that new analytic methods reveal high-value insights about human behavior. You can read the full interview in my Search Wizards Speak series at this link. The approach is called Xyting, and it relies on sophisticated analytic methods.

One example of the type of data which emerge from the Xyte method is this set of insights about Facebook users:

- Consumers who are most in tune with the written word are more likely to use Facebook. These consumers are the most frequent Internet users and use Facebook primarily to communicate with friends and connect with family.

- They like to keep their information up-to-date, meet new people, share photos, follow celebrities, share concerns, and solve people problems.

- They like to learn about and share experiences about new products. Advertisers should key in on this important segment because they are early adopters. They lead trends and influence others.

- The population segment that most frequents Facebook has a number of characteristics; for example, showing great compassion for others, wanting to be emotionally connected with others, having a natural intuition about people and how to relate to them, adapting well to change, embracing technology such as the Internet, and enjoying gossip and messages delivered in story form and liking to read and write.

- Facebook constituents are emotional, idealistic and romantic, yet can rationalize through situations. Many do not need concrete examples in order to comprehend new ideas.

I am not into social networks. Sure, some of our for-free content is available via social media channels, but where I live in rural Kentucky yelling down the hollow works quite well.

I read “How The Era Of ‘Big-Data’ Is Changing The Practice Of Online Marketing” and came away confused. You should work through the text, graphs, charts, and lingo yourself. I got a headache because most of the data struck me as slightly off center from what an outfit like Xyte has developed. More about this difference in a moment.

The thrust of the argument is that “big data” is now available to those who would generate traffic to client Web sites. Big data is described as “a torrent of digital data.” The author continues:

large sets of data that, when mined, could reveal insight about online marketing efforts. This includes data such as search rankings, site visits, SERPs and click-data. In the SEO realm alone at Conductor, for example, we collect tens of terabytes of search data for enterprise search marketers every month.

Like most SEO baloney, there are touchstones and jargon aplenty. For example, SERP, click data, enterprise search, and others. The intent is to suggest that one can pay a company to analyze big data and generate insights. The insights can be used to produce traffic to a Web page, make sales, or produce leads which can become sales. In a lousy business environment, such promises appeal to some people. As with most search engine optimization pitches, the desperate marketer may embrace the latest and greatest one. Little wonder there are growing numbers of unemployed professionals who failed to deliver the sales their employer wanted. The notion of desperation marketing fosters service businesses which claim to deliver sales and presumably job security for those who hire the SEO “experts.” I am okay with this type of business, and I am indifferent to the hollowness of the claims.

What interests me is this statement:

From our vantage point at Conductor, the move to the era of big data has been catalyzed by several distinct occurrences:

- Move to Thousands of Keywords: The old days of SEO involved tracking your top fifty keywords. Today, enterprise marketers are tracking up to thousands of keywords as the online landscape becomes increasingly competitive, marketers advance down the maturity spectrum and they work to continuously expand their zone of coverage in search.

- Growing Digital Assets: A recent Conductor study showed universal search results are now present in 8 out of 10 high-volume searches. The prevalence of digital media assets (e.g. images, video, maps, shopping, PPC) in the SERPs require marketers to get innovative about their search strategy.

- Multiple Search Engines: Early days of SEO involved periodically tracking your rank on Google. Today, marketers want to expand not just to Yahoo and Bing, but also to the dozens of search engines around the world as enterprise marketers expand their view to a global search presence.

All the above factors combined mean there are significant opportunities for an increase in both the breadth and volume of data available to search professionals.

Effective communication, in my experience, is not measured in “thousands of key words.” The notion of expanding the “zone of coverage” means that meaning is diffused. Of course, the intent of the key words is not getting a point across. The goal is to get traffic and make sales. This is the 2012 equivalent of the old America Online carpet bombing of CD ROMs years ago. Good business for CD ROM manufacturers, I might add. Erosion of meaning opens the door to some exogenous complexity excitement, I assert.

Exogenous Complexity 3: Being Clever

February 24, 2012

I just submitted my March 2012 column to Enterprise Technology Management, published in London by IMI Publishing. In that column I explored the impact of Google’s privacy stance on the firm’s enterprise software business. I am not letting any tiny cat out of a big bag when I suggest that the blowback might be a thorn in Googzilla’s extra large foot.

In this essay, I want to consider exogenous complexity in the context of the consumerization of information technology and, by extension, on information access in an organization. The spark for my thinking was the write up “Google, Safari and Our Final Privacy Wake-Up Call.”

Here’s a clever action. MIT students put a red truck on top of the dome. For more see http://radioboston.wbur.org/2011/04/06/mit-hacks.

If you do not have an iPad or an iPhone or an Android device, you will want to stop reading. Consumerization of information technology boils down to employees and contract workers who show up with mobile devices (yes, including laptops) at work. In the brave new world, the nanny instincts of traditional information technology managers are little more than annoying nags from a corporate mom.

The reality is that when consumer devices enter the workplace, three externalities occur in my experience.

First, security is mostly ineffective. Clever folks then exploit vulnerable systems. I think this is why clever people say that the customer is to blame. So clever exploits cluelessness. Clever is exogenous for the non clever. There are some actions an employer can take; for example, confiscating personal devices before the employee enters the work area. This works in certain law enforcement, intelligence, and a handful of other environments; for example, fabrication facilities in electronics or pharmaceuticals. Mobile devices have cameras and can “do” video. “Secret” processes can become un-secret in a trice. In the free flowing, disorganized craziness of most organizations, personal devices are ignored or overlooked. For example, even in a monitored financial trading environment, a professional can send messages outside the firm while the bank’s security and monitoring systems remain happily ignorant. Dropping a truly secure box around a workplace is expensive and beyond the core competency of most information technology professionals.

Second, employees blur information which is “for work” with information which is “for friends, lovers, or acquaintances.” The exogenous factor is political. To fix the problem, rules are framed. The more rules applied to a flawed system, the greater the likelihood that clever people will exploit systems which ignore the rules. Clever actions, therefore, increase. In short, this is a variation of the Facebook phenomenon in which a posting can reach many people quickly or lie dormant until the data load explodes like a long forgotten Fourth of July firecracker. As people chase the fire, clever folks exploit the fire. Information time bombs are not thought about by most senior managers, but they are on the radar of those involved in a legal matter and in the minds of some disgruntled programmers. The half life of information is less well understood by most professionals than the difference between a uranium based reactor and a thorium based reactor. Work and life information are blended, and in my opinion, the compound is a dangerous one.

Third, vendors focusing on consumerizing information technology spur adoption of devices and practices which cannot be easily controlled. The data-Hoovering processes, therefore, can suck up information which is proprietary, of high value, and potentially damaging to the information owner. Information is not “like sand grains.” Some information is valueless; other information commands a high price. In fact, modern content processing and data analytic systems can take fragments of information and “fuse” them. To most people these amalgams are of little interest. But to someone with specialized knowledge, the fused data are not gold nuggets; the fused data are a chunky rosy diamond, maybe a Pink Panther. As a result, an exogenous factor increases the flow of high value data through uncontrolled channels.

A happy quack to Gunaxin. You can see how clever, computer situations, and real life blend in this “pranking” poster. I would have described the wrapping of equipment in plastic as “clever.” But I am the fume hood guy, Woodruff High School, 1958 to 1962. Image source: http://humor.gunaxin.com/five-funny-prank-fails/48387

Now, let’s think about being clever. When I was in high school, I was one of a group of 25 students who were placed in an “advanced” program. Part of the program included attending universities for additional course work. I ended up at the University of Illinois at age 15. I went back to regular high school, did some other Fancy Dan learning programs, and eventually graduated. My specialty was tricking students in “regular” chemistry into modifying their experiments to produce interesting results. One of these suggestions resulted in a fume hood catching fire. Another dispersed carbon strands through the school’s ventilation system. I thought I was clever, but eventually Mr. Shepherd, the chemistry teacher, found out that I was the “clever” one. I sat in the hall for the balance of the semester. I adapted quickly, got an A, and became semi-famous. I was already sitting in the hall for writing essays filled with double entendres. Sigh. Clever has its burdens. Some clever folks just retreat into a private world. The Internet is ideal for providing an environment in which isolated clever people can find a “friend.” Once a couple of clever folks hook up, the result is lots of clever activity. Most of the clever activity is not appreciated by the non clever. There is the social angle and the understanding angle. In order to explain a clever action, one has to be somewhat clever. The non clever have no clue what has been done, why, when, or how. There is a general annoyance factor associated with any clever action. So, clever usually gets masked or shrouded in something along the lines of “Gee, I am sorry” or “Goodness gracious, I did not think you would be annoyed.” Apologies usually work because the non clever believe the person saying “I’m sorry” really means it. Nah. I never meant it. I did not pay for the fume hood or the air filter replacement. Clever, right?

What happens when folks with the type of academic experience I had go to work in big companies? Well, it is sink or swim. I have been fortunate because my “real” work experiences began at Halliburton Nuclear Services and continued at Booz, Allen & Hamilton when it was a solid blue chip firm, not the azure chip outfit it is today. I was surrounded by nuclear engineers whose idea of socializing was arguing about Monte Carlo code and nuclear fuel degradation at the local exercise club. At Booz, Allen the environment was not as erudite as the nuclear outfit, but there were lots of bright people who were actually able to conduct a normal conversation. Nevertheless, the Type As made life interesting for one another, senior managers, clients, and family. Ooops. At the Booz, Allen I knew, one’s family was one’s colleagues. Most spouses had no idea about the odd ball world of big time consulting. There were exceptions. Some folks married a secretary or colleague. That way the spouse knew what work was like. Others just married the firm, converting “quality time” into two days with the dependents at a posh resort.

So clever usually causes one to seek out other clever people or find a circle of friends who appreciate the heat generated by aluminum powder in an oxygen rich environment. When a company employs clever people, it is possible to generalize:

Clever people do clever things.

What’s this mean in search and information access? You probably already know that clever people often have a healthy sense of self worth. There is also arrogance, a most charming quality among other clever people. The non-clever find the arrogance “thing” less appealing.

Let’s talk about information access.

Let’s assume that a clever person wants to know where a particular group of users navigate via a mobile device or a traditional browser. Clever folks know about persistent cookies, workarounds for default privacy settings, spoofing built in browser functions, or installation of rogue code which resets certain user selected settings on a heartbeat or restart. Now those in my advanced class would get a kick out of these types of actions. Clever people appreciate the work of clever people. When the work leaves the “non advanced” in a clueless state, the fun curve does the hockey stick schtick. So clever enthuses those who are clever. The unclever are, by definition, clueless and not impressed. For really nifty clever actions, the unclever get annoyed, maybe mad. I was threatened by one student when the Friday afternoon fume hood event took place. Fortunately my debate coach intervened. Hey, I was winning and a broken nose would have imperiled my chances at the tournament on Saturday.

Now more exogenous complexity. Those who are clever often ignore unintended consequences. I could have been expelled, but I figured my getting into big trouble would have created problems with far reaching implications. I won a State Championship in the year of the fume hood. I won some silly scholarship. I published a story in the St. Louis Post-Dispatch called “Burger Boat Drive In.” I had a poem in a national anthology. So, I concluded that a little sport in regular chemistry class would not have any significant impact. I was correct.

However, when clever people do clever things in a larger arena, then the assumptions have to be recalibrated. Clever people may not look beyond their cube or outside their computer’s display. That’s when the exogenous complexity thing kicks in.

So Google’s clever folks allegedly did some workarounds. But the workaround allowed Microsoft to launch an attack on Google. Then the media picked up on the workaround and the Microsoft pushback. The event allowed me to raise the question, “So workers bring their own consumerized device to work. What’s being tracked? Do you know? Answer: Nope.” What’s Google do? Apologize. Hey, this worked for me with the fume hood event, but on a global stage, when organizations are pretty much lost in space when it comes to control of information, effective security, and managing crazed 20-somethings: wow.
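The persistent cookies mentioned above are just cookies that carry an expiry, so the browser keeps them after the session ends and sends them back on later visits. A minimal sketch using Python’s standard `http.cookies` module shows the difference; the cookie names and values are hypothetical, and this illustrates the general mechanism only, not any specific workaround attributed to Google.

```python
from http.cookies import SimpleCookie

def build_cookie_headers():
    """Return Set-Cookie values for one session cookie and one persistent cookie."""
    jar = SimpleCookie()
    # Session cookie (hypothetical name): no Expires or Max-Age,
    # so the browser drops it when the session ends.
    jar["session_id"] = "abc123"
    # Persistent cookie (hypothetical name): Max-Age is in seconds,
    # here one year, so it survives browser restarts.
    jar["visitor_id"] = "v-42"
    jar["visitor_id"]["max-age"] = 60 * 60 * 24 * 365
    jar["visitor_id"]["path"] = "/"
    return [morsel.OutputString() for morsel in jar.values()]

for header in build_cookie_headers():
    print("Set-Cookie:", header)
# Set-Cookie: session_id=abc123
# Set-Cookie: visitor_id=v-42; Max-Age=31536000; Path=/
```

The session cookie vanishes when the browser closes; the persistent one keeps coming back for a year unless the user clears it, which is what makes it useful for recognizing a returning visitor.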

In short, the datasphere encourages and rewards exogenous behavior by clever people. Those who are unclever take actions that set off a flood of actions which benefit the clever.

Clever. Good sometimes. Other times. Not so good. But it is better to be clever than unclever. Exogenous factors reward the clever and brutalize the unclever.

Stephen E Arnold, February 24, 2012

Sponsored by Pandia.com

Exogenous Complexity 2: The Search Appliance

February 15, 2012

I noted a story about Fujitsu and its search appliance. What was interesting is that the product is being rolled out in Germany, a country where search and retrieval are often provided by European vendors. In fact, when I hear about Germany, I think about Exorbyte (structured data), Ontoprise (ontologies), SAP (for what it is worth, TREX and Inxight), and Lucene/Solr. I also know that Fabasoft Mindbreeze has some traction in Germany, as does Microsoft with its Fast Search & Transfer solution. Fast operated a translation and technical center in Germany for a while. Reaching farther into Europe, there are solutions in Norway, France, Italy, and Spain. The enterprise search and retrieval vendors in each of these countries have customers in Germany. Even Oracle, with its mixed search history with Germany’s major newspaper, has customers. IBM is on the job as well, although I don’t know if Watson has made the nine hour flight from JFK to Frankfurt yet. Google’s GSA, or Google Search Appliance, has made the trip, and, from what I understand, the results have been okay. Google itself commands more than 90 percent of the Web search traffic.

The key point. The search appliance is supposed to be simple. No complexity. An appliance. A search toaster which my dear, departed mother could operate.

The work is from Steinman Studios. A happy quack to http://steinmanstudios.com/german.html for the image which I finally tracked down.

In short, if your company operates in Germany, you have quite a few choices for a search and retrieval solution. The question becomes, “Why Fujitsu?” My response, “I don’t have a clue.”

Here’s the story which triggered my thoughts about exogenous complexity: “New Fujitsu Powered Enterprise Search Appliance Launched in Europe Through Stordis.” News releases can disappear, so you may have to hunt around for this article if my link is dead.

Built on Fujitsu high performance hardware, the new appliance combines industry leading search software from Perfect Search Corporation with the Fujitsu NuVola Private Cloud Platform, to deliver security and ultimate scalability. Perfect Search’s patented software enables user to search up to a billion documents using a single appliance. The appliance uses unique disk based indexing rather than memory, requiring a fraction of the hardware and reducing overall solution costs, even when compared to open source alternatives solutions…Originally developed by Fujitsu Frontech North America, the PerfectSearch appliance is now being exclusively marketed throughout Europe by high performance technology distributor Stordis. PerfectSearch is the first of a series of new enterprise appliances based on the Fujitsu NuVola Private Cloud Platform that Stordis will be bringing to the European market during 2012.

No problem with the use of a US technology in a Japanese product sold in the German market via an intermediary with which I was not familiar. The Japanese are savvy managers, so this is a great idea.

What’s this play have to do with exogenous complexity?

A Fairy Tale: AOL Was Facebook a Long Time Ago

February 8, 2012

The Wall Street Journal amuses me. A Murdoch property, the newspaper does its best to minimize the best of “real” News Corp. journalism. I appreciate objective editorials which present oracular explanations of meaningful events in the world of “real” business.

A good read is “How AOL—Aka Facebook 1.0—Blew Its Lead” by Jesse Kornbluth. What is interesting is that this is a report from a person with Guccis on the ground. According to my hard copy edition, February 8, 2012, page A15:

Mr. Kornbluth was editorial director of America Online from 1997 to 2003. He now edits Headbutler.com.

I did a quick search on Facebook 3.0 (aka Google) and learned from no less an authority than the Huffington Post that Mr. Kornbluth edits a blog which is a “cultural concierge service.” He is a “real” journalist and has been a contributing editor for Vanity Fair and New York, and a contributor to the New Yorker, the New York Times, etc.

The addled goose is still in recovery mode, sort of like a very old restore from the now disappeared Fastback program. Thinking of old software and AOL, I think in 1999 America Online was in hog heaven in terms of stock price. I recall shares coming in the $40 to $100 range. The accounting issues of 1993 were behind the company. The merger with Time Warner was a done deal by mid January 2000. The $350 billion was a nice round number. The New York Times marked the 10th anniversary in its “analysis” on January 11, 2010, with the story “How the AOL-Time Warner Merger Went So Wrong.”

Now I learn that AOL was Facebook 1.0. I had forgotten about AOL’s chat rooms. When I think of chat rooms, I recall CompuServe, but I was never into AOL despite the outstanding marketing campaign with the jazzy CD ROMs that seemed to be everywhere. Here’s Mr. Kornbluth’s Facebook parallel:

Exogenous Complexity 1: Search

January 31, 2012

I am now using the phrase “exogenous complexity” to describe systems, methods, processes, and procedures which are likely to fail due to outside factors. This initial post focuses on indexing, but I will extend the concept to other content centric applications in the future. Disagree with me? Use the comments section of this blog, please.

What is an outside factor?

Let’s think about value adding indexing, content enrichment, or metatagging. The idea is that unstructured text contains entities, facts, bound phrases, and other identifiable elements. A key word search system is mostly blind to the meaning of a number in the form nnn nn nnnn, which in the United States is the pattern for a Social Security Number. There are similar patterns in Federal Express tracking numbers, financial identifiers, and other types of sequences. The idea is that a system will recognize these strings and tag them appropriately; for example:

nnn nn nnnn Social Security Number
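Here is a minimal Python sketch of that pattern-based tagging, assuming a plain regular expression for the “nnn nn nnnn” shape. Accepting hyphens as well as spaces is my assumption, and the sample text is made up; real enrichment products use far more elaborate rule sets.

```python
import re

# The "nnn nn nnnn" shape; allowing "-" as well as " " is an assumption.
SSN_PATTERN = re.compile(r"\b\d{3}[- ]\d{2}[- ]\d{4}\b")

def tag_entities(text):
    """Return (matched string, tag) pairs for every pattern hit in text."""
    return [(m.group(), "Social Security Number") for m in SSN_PATTERN.finditer(text)]

doc = "Applicant 123 45 6789 and co-applicant 987-65-4321, order 12345."
print(tag_entities(doc))
# [('123 45 6789', 'Social Security Number'), ('987-65-4321', 'Social Security Number')]
```

Note that the tagger checks only the shape of the string. It cannot tell a real Social Security Number from any other nine digit string laid out the same way.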

Thus, a query for Social Security Numbers will return strings of digits matching the pattern. The same logic can be applied to certain entities: with the help of a knowledge base, Bayesian numerical recipes, and other techniques such as synonym expansion, a system can determine that a query for Obama residence should return White House and that a query for White House should return links to the Obama residence.
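A toy version of the synonym expansion step might look like the Python sketch below. The hand-built “synonym ring” is a hypothetical stand-in for a knowledge base, and it replaces the Bayesian machinery entirely with a fixed lookup; the documents are invented for the example.

```python
# Hypothetical synonym ring standing in for a knowledge base.
SYNONYM_RINGS = [
    {"white house", "obama residence"},
]

def expand_query(query):
    """Return the query plus every synonym in any ring that contains it."""
    q = query.lower().strip()
    terms = {q}
    for ring in SYNONYM_RINGS:
        if q in ring:
            terms |= ring
    return terms

def search(query, documents):
    """Return ids of documents containing any expanded term (substring match)."""
    terms = expand_query(query)
    return [doc_id for doc_id, text in documents.items()
            if any(term in text.lower() for term in terms)]

docs = {
    "d1": "The state dinner was held at the White House.",
    "d2": "Reporters gathered outside the Obama residence.",
    "d3": "Search appliances shipped in Germany.",
}
print(search("Obama residence", docs))  # ['d1', 'd2']
print(search("White House", docs))      # ['d1', 'd2']
```

A fixed ring like this is correct only while the facts it encodes hold. When the White House gets a new occupant, an outside factor quietly turns the expansion into a source of false drops.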

One wishes that value added indexing systems were as predictable as a kabuki drama. What vendors of next generation content processing systems participate in is a kabuki which leads to failure two thirds of the time. A tragedy? It depends on whom one asks.

The problem is that companies offering automated solutions to value adding indexing, content enrichment, or metatagging are likely to fail for three reasons:

First, there is the issue of humans who use language in unexpected or what some poets call “fresh” or “metaphoric” ways. English is synthetic in that any string of sounds can be used in quite unexpected ways. Whether it is the use of the name of the fruit “mango” as a code name for software or the conversion of a noun like information into a verb like informationize, which appears in Japanese government English language documents, the automated system may miss the boat. When the boat is missed, continued iterations try to arrive at the correct linkage, but as anyone who has used fully automated systems or who paid attention in math class knows, recovery from an initial error can be time consuming and sometimes difficult. Therefore, an automated system, no matter how clever, may find itself fooled by the stream of content flowing through its content processing work flow. The user pays the price because false drops mean more work, and the suggestions are not just off the mark; they are difficult for a human to figure out. You can get the inside dope on why poor suggestions are an issue in Thinking, Fast and Slow.
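To see how a “fresh” use of language trips up an automated tagger, here is a deliberately naive dictionary lookup in Python. The lexicon and tag names are hypothetical stand-ins for a vendor’s knowledge base; the sketch shows only that a lookup-driven system confidently mislabels a code name and has nothing useful to say about a coined verb.

```python
# Hypothetical lexicon standing in for a vendor's knowledge base.
LEXICON = {
    "mango": "FRUIT",
    "will": "AUX",
    "the": "DET",
    "ministry": "NOUN",
}

def naive_tag(tokens):
    """Tag each token by dictionary lookup; unknown words get UNKNOWN."""
    return [(tok, LEXICON.get(tok.lower(), "UNKNOWN")) for tok in tokens]

# In this sentence "Mango" is a software code name, not a fruit.
sentence = "Mango will informationize the ministry".split()
print(naive_tag(sentence))
# [('Mango', 'FRUIT'), ('will', 'AUX'), ('informationize', 'UNKNOWN'), ('the', 'DET'), ('ministry', 'NOUN')]
```

Any later step that trusts the FRUIT tag inherits the error, which is the recovery problem described above.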