Semantic: Scholar and Search

September 1, 2021

The new three musketeers could be named Semantic, Scholar, and Search. What’s missing is a digital d’Artagnan. What are the three valiant mousquetaires up to? Fixing search for scholarly information.

To learn why smart software goes off the rails, navigate to “Building a Better Search Engine for Semantic Scholar.” The essay documents how a group of guardsmen fixed up search which is sort of intelligent and sort of sensitive to language ambiguities like “cell”: A biological cell or “cell” in wireless call admission control. Yep, English and other languages require context to figure out what someone might be trying to say. Less tricky for bounded domains, but quite interesting for essay writing or tweets.

Please, read the article because it makes clear some of the manual interventions required to make search deliver objective, on point results. The essay is important because it talks about issues most search and retrieval “experts” prefer to keep under their kepis. Imagine what one can do with the knobs and dials in this system to generate non-objective and off point results. That would be exciting in certain scholarly fields I think.

Here are some quotes which suggest that Fancy Dan algorithmic shortcuts, like those enabled by Snorkel-type solutions, have limits; for example:

Quote A

The best-trained model still makes some bizarre mistakes, and posthoc correction is needed to fix them.

Meaning: Expensive human and maybe machine processes are needed to get the model outputs back into the realm of mostly accurate.

Quote B

Here’s another:

Machine learning wisdom 101 says that “the more data the better,” but this is an oversimplification. The data has to be relevant, and it’s helpful to remove irrelevant data. We ended up needing to remove about one-third of our data that didn’t satisfy a heuristic “does it make sense” filter.

Meaning: Rough sets may be cheaper to produce but may be more expensive in the long run. Why? The outputs are just wonky, at odds with what an expert in a field knows, or just plain wrong. Does this make you curious about black box smart software? If not, it should.
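For the curious, here is a minimal sketch of what a “does it make sense” filter could look like. The rule used (keep a query and title pair only when they share at least one word) and the sample pairs are my own assumptions for illustration, not the heuristic the Semantic Scholar team describes.

```python
def makes_sense(query, title):
    """Hypothetical sanity check: the query and title share at least one word."""
    query_terms = set(query.lower().split())
    title_terms = set(title.lower().split())
    return bool(query_terms & title_terms)


def filter_training_pairs(pairs):
    """Keep only the (query, title) pairs that pass the sanity check."""
    return [(query, title) for query, title in pairs if makes_sense(query, title)]


# Invented training pairs; the third one is the sort of noise a filter should drop.
RAW_PAIRS = [
    ("cell admission control", "Call admission control in wireless cell networks"),
    ("cell biology", "Advances in cell membrane biology"),
    ("cell biology", "A survey of medieval French poetry"),
]

kept = filter_training_pairs(RAW_PAIRS)
print(f"kept {len(kept)} of {len(RAW_PAIRS)} pairs")
```

A real filter would need more than word overlap, which is exactly where the expensive human judgment comes in.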

Quote C

And what about this statement:

The model learned that recent papers are better than older papers, even though there was no monotonicity constraint on this feature (the only feature without such a constraint). Academic search users like recent papers, as one might expect!

Meaning: The three musketeers like their information new, fresh, and crunchy. From my point of view, this is a great reason to delete the backfiles. Even though “old” papers may contain high value information, the new breed wants recent papers. Give ‘em what they want and save money on storage and other computational processes.
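A monotonicity constraint simply says that a score may never drop as a feature value rises. The sketch below checks that property for two toy scoring functions; both functions and the sample years are invented for illustration and have nothing to do with the actual Semantic Scholar ranker.

```python
def is_monotone_non_decreasing(score_fn, feature_values):
    """Return True if score_fn never decreases as the feature value increases."""
    scores = [score_fn(value) for value in sorted(feature_values)]
    return all(lower <= higher for lower, higher in zip(scores, scores[1:]))


def recency_score(year):
    """Toy score that always rewards newer papers."""
    return 0.1 * (year - 2000)


def quirky_score(year):
    """Toy score that peaks in 2010 and falls off on either side."""
    return -abs(year - 2010)


YEARS = [2001, 2005, 2010, 2015, 2021]

recency_is_monotone = is_monotone_non_decreasing(recency_score, YEARS)
quirky_is_monotone = is_monotone_non_decreasing(quirky_score, YEARS)
print(recency_is_monotone, quirky_is_monotone)
```

The quoted passage describes a model that landed on the first kind of behavior for recency without being forced to.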

Net Net

My hunch is that many people think that search is solved. What’s the big deal? Everything is available on the Web. Free Web search is great. But commercial search systems like LexisNexis and Compendex with for-fee content are chugging along.

A free and open source approach is a good concept. The trajectory of innovation points to a need for continued research and innovation. The three musketeers might find themselves replaced with a more efficient and unmanageable force like smart software trained by the Légion étrangère drunk on digital pastis.

Stephen E Arnold, September 1, 2021

SEO Relevance Destroyers and Semantic Search

August 18, 2021

Search Engine Journal describes to SEO professionals how the game has changed since the early days, when it was all about keywords and backlinks, in “Semantic Search: What it Is & Why it Matters.” Writer Aleh Barysevich emphasizes:

“Now, you need to understand what those keywords mean, provide rich information that contextualizes those keywords, and firmly understand user intent. These things are vital for SEO in an age of semantic search, where machine learning and natural language processing are helping search engines understand context and consumers better. In this piece, you’ll learn what semantic search is, why it’s essential for SEO, and how to optimize your content for it.”

Semantic search strives to comprehend each searcher’s intent, a query’s context, and the relationships between words. The increased use of voice search adds another level of complexity. Barysevich traces Google’s semantic search evolution from 2012’s Knowledge Graph to 2019’s BERT. SEO advice follows, including tips like these: focus on topics instead of keywords, optimize site structure, and continue to offer authoritative backlinks. The write-up concludes:

“Understanding how Google understands intent in intelligent ways is essential to SEO. Semantic search should be top of mind when creating content. In conjunction, do not forget about how this works with Google E-A-T principles. Mediocre content offerings and old-school SEO tricks simply won’t cut it anymore, especially as search engines get better at understanding context, the relationships between concepts, and user intent. Content should be relevant and high-quality, but it should also zero in on searcher intent and be technically optimized for indexing and ranking. If you manage to strike that balance, then you’re on the right track.”

Or one could simply purchase Google ads. That’s where traffic really comes from, right?

Cynthia Murrell, August 17, 2021

Milvus and Mishards: Search Marches and Marches

August 13, 2021

I read “How We Used Semantic Search to Make Our Search 10x Smarter.” I am fully supportive of better search. Smarter? Maybe.

The write up comes from Zilliz which describes itself this way: The developer of Milvus “the world’s most advanced vector database, to accelerate the development of next generation data fabric.”

The system has a search component which is Elasticsearch. The secret sauce behind the 10x claim is a group of value adding features; for instance, similarity and clustering.

The idea is that a user enters a word or phrase and the system gets related information without entering a string of synonyms or a particularly precise term. I was immediately reminded of Endeca without the MBAs doing manual fiddling and the computational burden the Endeca system and method imposed on constrained data sets. (Anyone remember the demo about wine?)
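Here is a minimal sketch of the similarity idea, assuming documents and queries have already been converted to vectors. The three-number “embeddings” and the document titles are made up for illustration; a system like Milvus works with far larger vectors and purpose-built indexes, and nothing here uses its API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_search(query_vec, index, top_k=2):
    """Brute-force search: score every document and return the top_k ids."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:top_k]]


# Hypothetical three-dimensional embeddings, invented for this example.
TOY_INDEX = {
    "red wine from Bordeaux": [0.9, 0.1, 0.0],
    "merlot tasting notes": [0.8, 0.2, 0.1],
    "vector database benchmarks": [0.0, 0.1, 0.9],
}

# A query vector that points in the "wine" direction.
results = similarity_search([1.0, 0.0, 0.0], TOY_INDEX, top_k=2)
print(results)
```

The query never mentions “merlot,” yet the merlot document ranks second because its vector points in nearly the same direction. That is the no-synonyms-required pitch in miniature.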

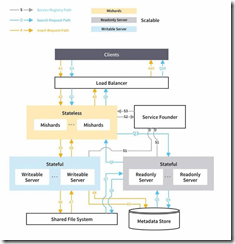

This particular write up includes some diagrams which reveal how the system operates. The diagrams like the one shown below are clear, but I

The idea is “similarity search.” If you want to know more, navigate to https://zilliz.com. Ten times smarter. Maybe.

Stephen E Arnold, August 13, 2021

The Semantic Web Identity Crisis? More Like Intellectual Cotton Candy?

February 22, 2021

“The Semantic Web identity Crisis: In Search of the Trivialities That Never Were” is a 5,700 word essay about confusion. The write up asserts that those engaged in Semantic Web research have an “ill defined sense of identity.” What I liked about the essay is its point that semantic progress has been made, but covering the last 20 percent of the journey after completing the first 80 percent is going to be difficult. I would add that making the Semantic Web “work” may be impossible.

The write up explains:

In this article, we make the case for a return to our roots of “Web” and “semantics”, from which we as a Semantic Web community—what’s in a name—seem to have drifted in search for other pursuits that, however interesting, perhaps needlessly distract us from the quest we had tasked ourselves with. In covering this journey, we have no choice but to trace those meandering footsteps along the many detours of our community—yet this time around with a promise to come back home in the end.

Does the write up “come back home”?

In order to succeed, we will need to hold ourselves to a new, significantly higher standard. For too many years, we have expected engineers and software developers to take up the remaining 20%, as if they were the ones needing to catch up with us. Our fallacy has been our insistence that the remaining part of the road solely consisted of code to be written. We have been blind to the substantial research challenges we would surely face if we would only take our experiments out of our safe environments into the open Web. Turns out that the engineers and developers have moved on and are creating their own solutions, bypassing many of the lessons we already learned, because we stubbornly refused to acknowledge the amount of research needed to turn our theories into practice. As we were not ready for the Web, more pragmatic people started taking over.

From my point of view, it looks as if the Semantic Web thing is like a flashy yacht with its rudders and bow thrusters stuck in one position. The boat goes in circles. That would drive the passengers and crew bonkers.

Stephen E Arnold, February 22, 2021

Where Did You Say “Put the Semantic Layer”?

February 10, 2021

Eager to add value to their pricey cloud data-warehouses, cloud vendors are making a case for processing analytics right on their platforms. Providers of independent analytics platforms note such an approach falls short for the many companies that have data in multiple places. VentureBeat reports, “Contest for Control Over the Semantic Layer for Analytics Begins in Earnest.” Writer Michael Vizard tells us:

“Naturally, providers of analytics and business intelligence (BI) applications are treating data warehouses as another source from which to pull data. Snowflake, however, is making a case for processing analytics in its data warehouse. For example, in addition to processing data locally within its in-memory server, Alteryx is now allowing end users to process data directly in the Snowflake cloud. At the same time, however, startups that enable end users to process data using a semantic layer that spans multiple clouds are emerging. A case in point is Kyligence, a provider of an analytics platform for Big Data based on open source Apache Kylin software.”

Alteryx itself acknowledges the limitations of data-analysis solutions that reside on one cloudy platform. The write-up reports:

“Alteryx remains committed to a hybrid cloud strategy, chief marketing officer Sharmila Mulligan said. Most organizations will have data that resides both in multiple clouds and on-premises for years to come. The idea that all of an organization’s data will reside in a single data warehouse in the cloud is fanciful, Mulligan said. ‘Data is always going to exist in multiple platforms,’ she said. ‘Most organizations are going to wind up with multiple data warehouses.’”

Kyligence is one firm working to capitalize on that decentralization. Its analytics platform pulls data from multiple platforms in an online analytical processing database. The company has raised nearly $50 million, and is releasing an enterprise edition of Apache Kylin that will run on AWS and Azure. It remains to be seen whether data warehouses can convince companies to process data on their platforms, but the push is clearly part of the current trend—the pursuit of a never-ending flow of data.

Cynthia Murrell, February 10, 2021

SEO Semantics and the Vibrant Vivid Vees

January 29, 2021

Years ago, one of the executives at Vivisimo, which was acquired by IBM, told me about the three Vees. These were the Vees of Vivisimo’s metasearch system. The individual, who shall remain nameless, whispered: Volume, Velocity, and Variety. He smiled enigmatically. In a short time, the three Vees were popping up in the context of machine learning, artificial intelligence, and content discovery.

The three Vivisimo Vees seem to capture the magic and mystery of digital data flows. I am not on that wheezing bus in Havana.

Volume is indeed a characteristic of online information. Even if one has a trickle of Word documents to review each day, the individual reading, editing, and commenting on a report has a sense that there are more Word documents flying around than the handful in this morning’s email. But in the context of our datasphere, no one knows how much digital data exists, what it contains, who has access, etc. Volume is a fundamental characteristic of today’s datasphere. The only way to contain data is to pull the plug. That is not going to happen unless there is something larger than Google. Maybe a massive cyber attack?

The second Vee is variety. From the point of view of the Vivisimo person, variety referred to the content that a text centric system processed. Text, unlike a tidy database file, is usually a mess. Because text lacks structure, transform and load outfits have been working for decades to convert the messy into the orderly or at least pull out certain chunks so that one can extract key words, dates, and maybe entities with reasonable accuracy. Today there is a lot of variety; however, for every new variant old ones become irrelevant. At best, the variety challenge is like a person in a raft trying to paddle to keep from being swamped with intentional and unintentional content types. How about those encrypted messages? Another hurdle for the indexing outfit: Decryption, metadata extraction and assignment, and processing throughput. So the variety Vee is handled by focusing on a subset of content. Too bad for those who think that “all” information is online.

The third Vee is a fave among the real time crowd. The idea is that streams and flows of data in real time can be processed on the fly, patterns identified, advanced analytics applied, and high value data emitted. This notion is a good one when working in a print shop in the 17th century. Those workflows don’t make any sense when figuring out the stream of data produced by an unidentified drone which may be weaponized. Furthermore, if a monitoring device notes a several millisecond pattern before a person’s heart attack, that’s not too helpful when the afflicted individual falls over dead a second later. What is “real time”? Answer: There are many types, so the fix is to focus, narrow, winnow, and go for a high probability signal. Sometimes it works; sometimes it doesn’t.

The three Vees are a clever and memorable marketing play. A company can explain how its system manages each of these issues for a particular customer use case. The one-size-fits-all idea is not what generates information processing revenues. Service fees, subscriptions, and customization are the money spinners.

The write up “The Four V’s of Semantic Search” adds another Vee to the Vivisimo three: Veracity. I don’t want to argue “truth” because in the datasphere for every factoid on one side of an argument, even a Bing search can generate counter examples. What’s interesting is that this veracity Vee is presented as part of search engine optimization using semantic techniques. Here’s a segment I circled:

The fourth V is about how accurate the information is that you share, which speaks about your expertise in the given subject and to your honesty. Google cares about whether the information you share is true or not and real or not, because this is what Googles [sic] audience cares about. That’s why you won’t usually get search results that point to the fake news sites.

Got that. Marketing hoo hah, sloganeering, and word candy — just like the three Vivisimo Vees.

Stephen E Arnold, January 29, 2021

Marketing Insight or Marketing Desperation?

January 6, 2021

A couple of weeks ago, I became aware of a shift in techno babble. Here are some examples and their sources:

Fire-and-forget. Shoot a missile and smart software does the rest… when necessary. Source: War News

Hyperedge replacement graph grammars (HRGs). A baffler. Source: Something called NEURIPS

Performative. I think this means go fast or complete a task in a better way. Source: Mashable

Proceleration. Source: The Age of Earthquakes

Tangential content. The idea is that information does not have to be related; for example, if you write about car polish for a living, including articles about zebras is a good thing. Source: Next Web

Transition from pets to cattle. Moving from the status of a beloved poodle to a single, soon to be eaten bovine. Source: Amazon AWS

Fascinating terminology. Time for digital detox and maybe red tagging. No, I don’t know what these terms mean either. I assume that vendors of smart software which can learn without human fiddling know these terms and many more because of experience intelligence platforms.

Stephen E Arnold, January 6, 2021

Expert System Has Embraced the AI Revolution

November 19, 2020

It’s official. Expert System S.p.A. (Italy) is now Expert.ai. I know because the firm’s Web site displays this message:

Expert System has moved along a business path like one of those Amalfi coast cliff side roads: Breathtaking turns, chilling confrontations with other vehicles, and a lack of guard rails.

Repositioning a big rig is a thrill for sure.

The company’s tag line is:

It’s time to make all data actionable.

Yep, “all.” Even video, encrypted messages among employees, and confidential compensation data? Sure, “all.”

Plus, the firm has tweaked its description of its focus to assert:

Expert.ai is the premier artificial intelligence platform for language understanding. Its unique hybrid approach to NL combines symbolic human-like comprehension and machine learning to transform language-intensive processes into practical knowledge, providing the insight required to improve decision making throughout organizations.

Vendors of search and content processing widgets are responding to today’s business environment with marketing. Expert System was founded in 1989 in Modena, Italy.

Premier too.

Stephen E Arnold, November 19, 2020

Fixing Language: No Problem

August 7, 2020

Many years ago I studied with a fellow who was the world’s expert on the morpheme -burger. Yep, hamburger, cheeseburger, dumbburger, nothingburger, and so on. He was Dr. Lev Sudek. (I think that was his last name, but after 50 years former teachers blur in my mind like a smidgen of mustard on a stupidburger.) I do recall his lecture on Indo-European languages, the importance of Sanskrit, and the complexity of Lithuanian nouns. (Why Lithuanian? Many, many inflections.) Those languages evolving or de-volving from Sanskrit or ur-Sanskrit differentiated among male, female, singular, neuter, plural, and others. I am thinking 16 for nouns but again I am blurring the Sriracha on the Incredible burger.

This morning, as I wandered past the Memoryburger Restaurant, I spotted “These Are the Most Gender-Biased Languages in the World (Hint: English Has a Problem).” The write up points out that Carnegie Mellon analyzed languages and created a list of biased languages. What are the languages with an implicit problem regarding bias? Here is a list of the top 10 gender abusing, sexist pig languages:

- Danish

- German

- Norwegian

- Dutch

- Romanian

- English

- Hebrew

- Swedish

- Mandarin

- Persian

English is number 6, and if I understand Fast Company’s headline, English has a problem. Apparently Chinese and Persian do too, but the write up tiptoes around these linguistic land mines. Go with the Covid ridden, socially unstable, and financially stressed English speakers. Yes, ignore the Danes, the Germans, Norwegians, Dutch, and Romanians.

So what’s the fix for the offensive English speakers? The write up dodges this question, narrowing to algorithmic bias. I learned:

The implications are profound: This may partially explain where some early stereotypes about gender and work come from. Children as young as 2 exercise these biases, which cannot be explained by kids’ lived experiences (such as their own parents’ jobs, or seeing, say, many female nurses). The results could also be useful in combating algorithmic bias.

Profound indeed. But the French have a simple, logical, and “c’est top” solution. The Académie Française. This outfit is the reason why an American draws a sneer when asking where the computer store is in Nimes. The Académie Française does not want anyone trying to speak French to use a disgraced term like computer.

How’s that working out? Hashtag and Franglish are chugging right along. That means that legislating language is not getting much traction. You can read a 290 page dissertation about the dust up. Check out “The Non Sexist Language Debate in French and English.” A real thriller.

The likelihood of enforcing specific language and usage changes on the 10 worst offenders strikes me as slim. Language changes, and I am not sure the morpheme -burger expert understood decades ago how politicallycorrectburgers could fit into an intellectual menu.

Stephen E Arnold, August 7, 2020

Twitch: Semantic Search Stream to Lure Gamers, Trolls, and Gals?

July 31, 2020

Amazon Twitch may be more versatile than providing the young at heart with hours of sophisticated content. There are electronic games, trolls (lots of trolls armed with weird icons), and what appear to be females.

Now Twitch will be moving along the content spectrum with the addition of a stream about semgrep. If you are not on a first name basis, semgrep is a semantic search thing. You can join in for free, no waiting rooms, and no big technical hurdles. I suppose one could create a lecture about semantic methods in TikTok 30-second videos which might be a first for the non-invasive, controversial app. Nah, go for Twitch. Skip YouTube and Facebook. Go Bezos bulldozer.

Navigate to https://twitch.tv and go to the jeanqasaur stream. The time on July 31, 2020? The show begins at 4 pm US Eastern time.

The program is definitely perceived by some as super important. A motivated semantic wizard posted a message on the TweetedTimes.com semantic page. Here’s what the message looks like:

DarkCyber’s suggestions:

- Do not become distracted by Raj recruiting, Bad Bunny, or Celestial Fitness. Keep your eye on the grep as it were.

- Sign up because Amazon wants you to be part of the family. Prime members may receive extra Bezos bucks somewhere down the line.

- Exercise good grammar, be respectful, and keep your clothes on. Twitch banned SweetSaltyPeach who reinvented herself as RachelKay, Web developer, fashion model, and gamer icon. You may have to reincarnate yourself too.

- Avoid the lure of Animal Crossing Arabia II.

Stephen E Arnold, July 31, 2020