Amazon Statistical Factoid: House Brands

July 18, 2022

I read “Amazon Slashing Private-Label Selection amid Weak Sales: Report.” The source is the estimable Fox News, which, like the Wall Street Journal, is affiliated with the fantastic Murdoch information potentate. Within the Fox-ish story is a factoid, which I am not able to absorb without some of that faux salt stuff sold to some with cardiac issues.

Here is the factoid. Believe it or not.

As of 2020, Amazon’s private-label business offered 45 house brands accounting for 243,000 products.

As I understand it, the idea for slapping a private label on a product is to capture sales that would otherwise go to a merchant. That merchant may make more money than some outfits I know. One of those unnamed outfits simply approaches the manufacturer, places a big order with a special label, and offers the product at a price that delivers the cash to the “me too” merchant.

I assume that successful outfits like Mother Teresa’s original fundraising organization and Amazon-like companies would never attempt to get more money and discriminate against the poor and downtrodden. Nope, never. Ever.

Let’s assume that Amazon is changing its house brand policy. Okay, why? What are some possible reasons?

- Amazon wants to reduce its costs and rethink how it interacts with the rock-solid third-party sellers apparatus. (I love plurals which I can spell apparati, albeit incorrectly.)

- Amazon wants to amass some fungible evidence that it is a really equitable outfit, eager to operate in a fair, transparent manner.

- Amazon’s forecast team senses trouble ahead.

I think Amazon may be facing some headwinds: Prime Day doldrums, assorted legal hassles about certain products, and backlash potential from regulators.

Yeah, and some less Fox-y news is here.

Stephen E Arnold, July 18, 2022

When It Comes to AI, Who Is Wrong? Sorry, Who Is Right? Who Is on First? I Don’t Know.

July 15, 2022

When I read revelations about alleged issues with smart software I think about the famous Abbott & Costello routine “Who’s on First?” If you are not familiar with this comedy classic you can find a version at this link.

I read “30% of Google’s Emotions Dataset is Mislabeled.” Who says, “I don’t know.”

The write up asserts:

Last year, Google released their “GoEmotions” dataset: a human-labeled dataset of 58K Reddit comments categorized according to 27 emotions. The problem? A whopping 30% of the dataset is severely mislabeled! (We tried training a model on the dataset ourselves, but noticed deep quality issues. So we took 1000 random comments, asked Surgers whether the original emotion was reasonably accurate, and found strong errors in 308 of them.) How are you supposed to train and evaluate machine learning models when your data is so wrong?
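The arithmetic behind the “30%” headline is easy to check. Here is a minimal sketch, assuming the 1,000 comments were a simple random sample and that each comment was judged as either acceptable or strongly wrong. The function name `wilson_interval` and the choice of a Wilson score interval are mine, not the cited article’s; the figures 308, 1,000, and 58K come from the quoted passage.

```python
import math


def wilson_interval(errors: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is about 95%)."""
    p = errors / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


# Figures quoted in the write up; the sampling assumptions are mine.
sample_size = 1000
flagged = 308
dataset_size = 58_000

rate = flagged / sample_size
low, high = wilson_interval(flagged, sample_size)

print(f"observed error rate: {rate:.1%}")
print(f"approximate 95% interval: {low:.1%} to {high:.1%}")
print(
    "implied mislabeled comments: "
    f"{low * dataset_size:,.0f} to {high * dataset_size:,.0f}"
)
```

Under those assumptions, the sample supports an error rate in the neighborhood of 28 to 34 percent, so “30%” is a fair rounding. It says nothing about whether the reviewers’ own judgments were correct.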

Who? Surgers. What? Yes, what’s in charge of synthetic data? What? Yes. I don’t know. Okay, I don’t know what’s going on in this write up.

Wow.

The article contains some examples of humans mislabeling data. Today these labels are metadata, not index terms or classification codes. Metadata. “I never metadata I didn’t like.” Really.

The article in my opinion is actually pro-Google. Why?

Why’s responsible for Google and its goal of eliminating as many humans as possible from a process once deemed appropriate for subject matter experts. SMEs are too expensive and slow for today’s metadata mavens.

What’s the fix? Synthetic data which relies on only a few humans and eventually (one theorizes) no humans at all. Really? Yes, Really works with Snorkel-type technology.

I enjoyed this statement from the cited article:

If you want to deploy ML models that work in the real world, it’s time for a focus on high-quality datasets over bigger models – just listen, after all, to Andrew Ng’s focus on data-centric AI. Hopefully Google learns this too! Otherwise those big, beautiful traps may get censored into oblivion, and all the rich nuances of language and humor with it…

What? Yeah, I know. What’s in charge of synthetic data. The idea is that Google’s whopper approach to smart software resolves these issues and others as well. What’s “high quality”? I bet you didn’t know quality requires Google scoring algorithms. What? In the manager’s seat.

Stephen E Arnold, July 15, 2022

TikTok: Slipping and Dipping or Plotting and Planning?

July 15, 2022

I read “TikTok Aborts Europe, US Expansion Ambitions Shortly After US Senate Inquiry.” Surprising? Not really. TikTok and its ByteDance Ltd. “partner” are, it appears, rethinking how to capitalize on TikTok’s popularity among the most avid, short attention span clickers. The article explains that TikTok is not too keen on selling via its baby super app. The reasons, according to the cited article and the estimable orange newspaper, are “internal problems and failure to gain traction with consumers.”

With the management savvy of the Chinese government, it seems to me that resolving “internal problems” was a straightforward process. Identify the dissenter and let the re-education camps work their magic. The problem with “traction” is that I don’t see much hard evidence that a super app which bundles promoting, buying and selling is unpopular with consumers. The TikTok generation is pretty happy following an influencer and buying whatever the person pitches: Coffee, wellness stuff, makeup, and “so cute” gym clothes.

For me the news story is too far from the horseshoe stake of credibility. I think we have a PR play engineered to get people to say, “See, TikTok is a company which recognizes that it cannot do everything.”

I am skeptical. Here are three reasons I spelled out to my colleagues at lunch today:

- TikTok denizens are selling and are unlikely to stop. At some point, ByteDance is going to want a piece of the action.

- TikTok is becoming a super app. Its users will demand additional functionality. If it is not delivered, the clever little clickers will create add ins. Will ByteDance sit on its hands and fail to monetize enhancements and extensions to the TikTok app?

- TikTok does not want to be shut down; therefore, cooing and trying to avoid getting in trouble with US and European regulators is a high priority. Why? The data are priceless.

Net net: Will TikTok do the adulting to behave in a non-capitalistic manner? Pick one: [a] No or [b] No. This is less of a company versus company action and more of a government playing Go against an opponent playing checkers.

Stephen E Arnold, July 15, 2022

Google Smart Software: Is This an Important Statement?

July 15, 2022

I read “Human-Centered Mechanism Design with Democratic AI.” The paper continues Google’s PR campaign to win the smart software war. Like the recent interview with a DeepMind executive on the exciting Lex Fridman podcast and YouTube programs, the message is clear: The Google’s smart software is not “alive.” (Interesting PR speak begins about 1 hour and 20 minutes into the two hour plus interview.) The subtext is, in my opinion, “Nope, no problem. We have smart software lassoed with our systems and methods.” Okay, I think I understand framing, filtering, and messaging designed to permit online advertising to be better, faster, and maybe cheaper.

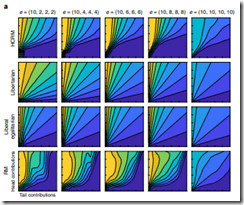

This most recent contribution uses language many previous Googley papers do not; for example, “human” and “democratic.” The article includes graphics which I must confess I found a bit difficult to figure out. Here’s an illustrative image which baffled me:

The Google and its assorted legal eagles created this image from the data mostly referenced in the cited article. Yes, Google and attendant legal eagles, you are the ultimate authorities for this image from the cited article in Nature.

Those involved with business intelligence will marvel at Google’s use of different types of visualizations to make absolutely crystal clear the researchers’ insights, findings, and data.

Great work.

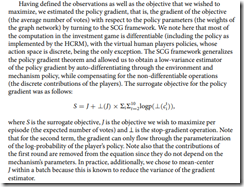

I did note one passage on page nine of the Nature article:

Here is the operative language used to explain some of the democratic methods:

- Defined

- Objective

- We wished to maximize

- Estimated

- Auto-differentiating

- We chose

Net net: Researchers at the Google determine and then steer the system. Human-centered design meshes with the Snorkel and synthetic data methods, I presume. And bias? Where there are humans, there may be bias. How human-centered were the management decisions about certain staff in the Google smart software units?

Stephen E Arnold, July 15, 2022

Never Enough! Even Google Needs To Buy Data

July 15, 2022

Google needs to buy data. Say what? That seems to be a contrary sentence, but The Verge explains in “Google Is Paying The Wikimedia Foundation For Better Access To Information.” It would make sense that Google would buy large datasets to feed/instruct its AI projects, but why would the search engine need to buy Wikimedia Foundation information? Google is one of the first Wikimedia Enterprise customers and the company wants to buy its data to be the most accurate and up-to-date search engine. The Wikimedia Foundation is the non-profit organization that operates Wikipedia.

Google’s crawlers already scrape Wikipedia’s pages, but scraped copies can be outdated and inaccurate. With the deal, Google will no longer need to rely on free data dumps and free APIs. Wikimedia Enterprise customers are given access to proprietary APIs that recycle and process information at higher rates. Google has loved Wikipedia for years:

“Although you may not notice it, Google uses Wikimedia’s services in a number of ways. The most obvious is within its “knowledge panels,” which appear on the side of search results pages when you look up the people, places, or things within Google’s massive database. Wikipedia is one of the sources Google frequently uses to populate the information inside these panels. Google also cites Wikipedia in the information panels it adds to some YouTube videos to fight misinformation and conspiracy theories (although it didn’t really inform Wikimedia of its plans to do so ahead of time).”

Google has not explained how it will use the Wikimedia Enterprise data, but we can surmise that Wikipedia will be cited more and pushed to the top of search results more. The Internet Archive also is a Wikimedia Enterprise customer.

Whitney Grace, July 15, 2022

Facebook, Twitter, Etc. May Have a Voting Issue

July 15, 2022

Former President Donald Trump claimed that any news not in his favor was “fake news.” While Trump’s claim is not true, large amounts of fake news have been swirling around the Internet since his administration and before. It is only getting worse, especially with conspiracy theorists who believe the 2020 election was fixed. Salon shares how the conspiracy theorists are chasing another fake villain: “‘Big Lie’ Vigilantes Publish Targets Online-But Facebook and Twitter Are Asleep At The Wheel.”

People who believe that the 2020 election was stolen from Trump have “ballot mules” in their crosshairs. Ballot mules are accused of illegally dropping off absentee ballots during the previous election. Vigilantes have been encouraged to bring ballot mules to justice. They are using social media to “track them down.”

Facebook, Twitter, TikTok, and other social media platforms have policies that forbid violence, harassment, and impersonation of government officials. The vigilantes posted information about the purported ballot mules, but the pictures do not show them engaging in illegal activity. Luckily none of the “ballot mules” have been harmed.

“Disinformation researchers from the nonpartisan clean-government nonprofit Common Cause alerted Facebook and Twitter that the platforms were allowing users to post such incendiary claims in May. Not only did the claims lack evidence that crimes had been committed, but experts worry that poll workers, volunteers, and regular voters could face unwarranted harassment or physical harm if they are wrongfully accused of illegal election activity…

Emma Steiner, a disinformation analyst with Common Cause who sent warnings to the social-media companies, says the lack of action suggests that tech companies relaxed their efforts to police election-related threats ahead of the 2022 midterms. ‘This is the new playbook, and I’m worried that platforms are not prepared to deal with this tactic that encourages dangerous behavior,’ Steiner said.”

There is also a documentary called 2000 Mules, which Trump has promoted, that claims ballot mules submitted thousands of false absentee ballots. Former Attorney General William Barr and other reputable people debunked the “documentary.” While the 2020 election was not rigged, conspiracy theorists creating and believing misinformation could damage the democratic process and the US’s future.

Whitney Grace, July 15, 2022

Commercializing Cyber Crime with Search and Retrieval

July 14, 2022

I read “Ransomware Gangs Offer Ability to Search Stolen Data.” The write up reports:

Bleeping Computer reported today that the ALPHV/BlackCat ransomware gang was the first to offer the feature, announcing that they have created a searchable database with leaks from nonpaying victims. The hackers said that their stolen data had been fully indexed and that the search feature included support for finding information by filename or by content available in documents and images. The BlackCat ransomware gang claims it is offering the search service to make it easier for cybercriminals to find passwords or other confidential information.

Other alleged bad actors are offering a search function as well. These are Lockbit and Karakurt.

Several observations:

- Commercialization of cyber crime has been a characteristic of some of the more forward-leaning bad actors.

- The availability of open source search makes it easy to add functionality.

- More productization is inevitable; for example, subscriptions to Crime as a Service.

Net net: The focus of crime analysts and investigators may have to widen to include enablers like Internet Service Providers, cloud services, and open source code repositories.

Stephen E Arnold, July 14, 2022

Life Too Crazy? Game-ify It

July 14, 2022

A decade or more ago, one of my project managers explained that NASA was into games. The idea was interesting, but the majority of our work did not involve computer games. Several years ago I mentioned to a concert promoter that online games were generating “music.” That individual, who lived in Nevada, said he had a couple of employees tracking the influence of game music on the music events he organized. In a sense, I have been aware of the game-ification of digital thinking and innovation.

The write up “How the Technology Behind Fortnite Is Being Used to Design IRL Buildings” added another angle to my admittedly shallow understanding of the eGame revolution. The write up explains:

…the technology behind Fortnite and other video games is being adapted for use in the very real world of architecture, urban planning, and development.

Just as Apple imposes the mobile interface on its laptop and desktop computers, the eGame influence is moving beyond a simple way to pass time. (Time, I would point out, that might better be spent reading, volunteering, studying physics, working math problems, or walking in the woods.)

Several thoughts crossed my mind:

- Those who grow up immersed in eGames appear to be interested in pushing the “not really real” idea into areas once decidedly un-game like. Will the “real” world turn into more of a game than it already is? The answer, I surmise, is, “Yes.”

- Hooking up a game engine to an architect leads to what seems a logical link. Plug the game engine into AutoCAD, SolidWorks, something jazzier. Let smart software convert the game world into drawings, specs, and part supplier components or a model for a 3D printer. Seems like a useful project for an architect entrepreneur (assuming that the two words do not generate an oxymoron).

- Create artifacts which make it very clear that one is living in a simulation. Imagine a world in which a smart car will not run over a person standing next to a traffic cone.

What happens if one hooks the ByteDance super app into the eGame software? Answer to some CIA and British intel professionals: Happy Chinese propaganda professionals.

Stephen E Arnold, July 14, 2022

Paper Envisions an Open Science Platform for Chemistry Researchers

July 14, 2022

What could be accomplished if machine learning were harnessed to help scientists connect, collaborate, and build on each other’s findings? A team of researchers ponders “Making the Collective Knowledge of Chemistry Open and Machine Actionable.” Researchers Kevin Maik Jablonka, Luc Patiny, and Berend Smit hope their suggestions will bring the field of chemistry closer to FAIR principles (findable, accessible, interoperable, and reusable). The paper, published by Nature Chemistry, observes:

“Chemical research is still largely centered around paper-based lab notebooks, and the publication of data is often more an afterthought than an integral part of the process. Here we argue that a modular open-science platform for chemistry would be beneficial not only for data-mining studies but also, well beyond that, for the entire chemistry community. Much progress has been made over the past few years in developing technologies such as electronic lab notebooks that aim to address data-management concerns. This will help make chemical data reusable, however it is only one step. We highlight the importance of centering open-science initiatives around open, machine-actionable data and emphasize that most of the required technologies already exist—we only need to connect, polish and embrace them.”

The authors go on to describe how to do just that using structured and open data with semantic tools. In order to make the transition as smooth as possible, the team suggests data capture should be similar to the way chemists already work. Data should also be generated in a standardized format other researchers can easily use. A formal ontology will be important here. For consistency and accessibility, the paper also recommends building a modular data-analysis platform with a common interface and standardized protocols. This open-science platform would replace the hodgepodge of different, often proprietary, tools currently in use. It would also make publication of data a seamless, and centralized, part of the process. See the paper for all the details. The authors conclude:

“We emphasize that the technology is here not only to facilitate the process of publishing data in a FAIR format to satisfy the sponsors, but also to ensure that the combination of chemical data, FAIR principles and openness gives scientists the possibility to harvest all data so that all chemists can have access to the collective knowledge of everybody’s successful, partly successful and even ‘failed’ experiments.”
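To make “machine-actionable” a little more concrete, here is a minimal sketch of what one standardized experiment record might look like when captured as structured data rather than a notebook page. Every class name, field name, and value below is hypothetical and chosen only for illustration; the paper does not prescribe this schema, and a real platform would tie such fields to a formal ontology.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Measurement:
    """One measured quantity with an explicit unit (hypothetical schema)."""
    quantity: str
    value: float
    unit: str


@dataclass
class ExperimentRecord:
    """A single experiment, including 'failed' ones (hypothetical schema)."""
    identifier: str
    compound_smiles: str  # structure written as a SMILES string
    outcome: str  # "successful", "partly successful", or "failed"
    measurements: list[Measurement] = field(default_factory=list)
    license: str = "CC-BY-4.0"


# Illustrative values only; not data from the paper.
record = ExperimentRecord(
    identifier="example-0001",
    compound_smiles="CCO",  # ethanol
    outcome="failed",
    measurements=[Measurement("yield", 0.0, "percent")],
)

# Serialize to JSON so other tools can read the record without custom parsing.
serialized = json.dumps(asdict(record), indent=2, sort_keys=True)
print(serialized)

# Any consumer can load the record back with a standard JSON parser.
restored = json.loads(serialized)
```

The point of the sketch is the one the authors stress: a failed experiment recorded in a shared, explicit format is as findable and reusable as a successful one.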

Cynthia Murrell, July 14, 2022

Bias and Deep Fake Concerns Limit AI Image Applications

July 14, 2022

This is why we can’t have nice things. AI-generated image technology has reached dramatic heights, but even its makers agree giving the public unfettered access is still a very bad idea. CNN Business explains, “AI Made These Stunning Images. Here’s Why Experts Are Worried.” In a nutshell: bias and deep fakes.

OpenAI’s DALL-E 2 and Google’s Imagen have been showing off their impressive abilities, and even venturing into some real-world applications, but within very careful limitations. Reporter Rachel Metz reveals:

“Neither DALL-E 2 nor Imagen is currently available to the public. Yet they share an issue with many others that already are: they can also produce disturbing results that reflect the gender and cultural biases of the data on which they were trained — data that includes millions of images pulled from the internet. The bias in these AI systems presents a serious issue, experts told CNN Business. The technology can perpetuate hurtful biases and stereotypes. They’re concerned that the open-ended nature of these systems — which makes them adept at generating all kinds of images from words — and their ability to automate image-making means they could automate bias on a massive scale. They also have the potential to be used for nefarious purposes, such as spreading disinformation. ‘Until those harms can be prevented, we’re not really talking about systems that can be used out in the open, in the real world,’ said Arthur Holland Michel, a senior fellow at Carnegie Council for Ethics in International Affairs who researches AI and surveillance technologies. … Holland Michel is also concerned that no amount of safeguards can prevent such systems from being used maliciously, noting that deepfakes — a cutting-edge application of AI to create videos that purport to show someone doing or saying something they didn’t actually do or say — were initially harnessed to create faux pornography.”

That is indeed perfectly awful. So, for now, both OpenAI and Google Research are mostly sticking to animals and other cute subjects while prohibiting the depiction of humans or anything that might be disturbing. Even so, bias can creep in. For example, Imagen was tasked with depicting oil paintings of a “royal raccoon” king and queen. What could go wrong? Alas, the AI demonstrated its Western bias by interpreting “royal” in a distinctly European style. Oh well. At least the regal raccoons are cuties, just not in one’s attic.

Cynthia Murrell, July 14, 2022