If Math Is Running Out of Problems, Will AI Help Out the Humans?

July 26, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read “Math Is Running Out of Problems.” The write up appeared in Medium and when I clicked I was not asked to join, pay, or turn a cartwheel. (Does Medium think 80-year-old dinobabies can turn cartwheels? The answer is, “Hey, doofus, if you want to read Medium articles, pay up.”)

Thanks, MSFT Copilot. Good enough, just like smart security software.

I worked through the free essay, which is a reprise of an earlier essay on the topic of running out of math problems. The reason few cared about the topic is that most people cannot make change. Thinking about a world without math problems is an intellectual task which takes time away from scamming the elderly, doom scrolling, generating synthetic information, or watching reruns of I Love Lucy.

The main point of the essay in my opinion is:

…take a look at any undergraduate text in mathematics. How many of them will mention recent research in mathematics from the last couple decades? I’ve never seen it.

“New math problems” is an oxymoron.

I think the author is correct. As specialization becomes more desirable to a person, leaving the rest of the world behind is a consequence. But the issue arises in other disciplines. Consider artificial intelligence. That jazzy phrase embraces a number of mathematical premises, but it boils down to a few chestnuts, roasted, seasoned, and mixed with some interesting ethanols. (How about that wild and crazy Sir Thomas Bayes?)

My view is that as the apparent pace of information flow erodes social and cultural structures, the quest for “new” pushes a frantic individual to come up with a novelty. The problem with a novelty is that it takes one’s eye off the ball and ultimately the game itself. The present state of affairs in math was evident decades ago.

What’s interesting is that this issue is not new. In the early 1980s, Dialog Information Services hosted a mathematics database called xxx. The person representing the MATHFILE database (now called MathSciNet) told me in 1981:

We are having a difficult time finding people to review increasingly narrow and highly specialized papers about an almost unknown area of mathematics.

Flash forward to 2024. The problem is finally getting attention, and no one seems to care?

Several observations:

- Like smart software, maybe humans are running out of high-value information? Chasing ever smaller mathematical “insights” may be a reminder that humans and their vaunted creativity have limits, hard limits.

- If the premise of the paper is correct, the issue should be evident in other fields as well. I would suggest the creation of a “me too” index. The idea is that, for a period of history, one can calculate how many knock-off ideas grab the coattails of an innovation. My hunch is that the state of most modern technical insight is high on the me too index. No, I am not counting “original” TikTok-type information objects.

- The fragmentation which seems apparent to me in mathematics and that interesting field of mathematical physics mirrors the fragmentation of certain cultural precepts; for example, ethical behavior. Why is everything “so bad”? The answer is, “Specialization.”
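How might the “me too” index be calculated? Here is a minimal sketch, assuming someone has already labeled each idea, paper, or product in a period as either an innovation or a knock-off (the labeling is the hard part and is not addressed here). The `Item` type, the `me_too_index` name, and the “knock-offs per innovation” ratio are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Item:
    year: int          # when the idea, paper, or product appeared
    is_knockoff: bool  # hypothetical label: True if it rides an earlier innovation's coattails


def me_too_index(items: Iterable[Item], start: int, end: int) -> Optional[float]:
    """Knock-offs per innovation for items dated start..end (inclusive).

    Returns None when the period contains no innovations, since the ratio is undefined.
    """
    in_period = [item for item in items if start <= item.year <= end]
    innovations = sum(1 for item in in_period if not item.is_knockoff)
    knockoffs = len(in_period) - innovations
    if innovations == 0:
        return None
    return knockoffs / innovations
```

A value of 3.0 would mean three knock-offs for every original item in the period; a rising value across periods would support the hunch above.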

Net net: The pursuit of the ever more specialized insight hastens the erosion of larger ideas and cultural knowledge. We have come a long way in four decades. The direction is clear. It is not just a math problem. It is a now problem and it is pervasive. I want a hat that says, “I’m glad I’m old.”

Stephen E Arnold, July 26, 2024

Social Scoring Is a Thing and in Use in the US and EU Now

April 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Social scoring is a thing.

The EU AI regulations are not too keen on slapping an acceptability number on people or a social score. That’s a quaint idea because the mechanisms for doing exactly that are available. Furthermore, these are not controlled by the EU, and they are not constrained in a meaningful way in the US. The availability of mechanisms for scoring a person’s behaviors chug along within the zippy world of marketing. For those who pay attention to policeware and intelware, many of the mechanisms are implemented in specialized software.

Will the two match up? Thanks, MSFT Copilot. Good enough.

There’s a good rundown of the social scoring tools in “The Role of Sentiment Analysis in Marketing.” The content focuses on using “emotional” and behavioral signals to sell stuff. However, the software and data sets yield high value information for other purposes. For example, an individual with access to data about a person’s (or a cluster of persons’) video viewing and Web site browsing can make some interesting observations about that person or group.

Let me highlight some of the software mentioned in the write up. There is an explanation of the discipline of “sentiment analysis.” A person engaged in business intelligence, investigations, or planning a disinformation campaign will have to mentally transcode the lingo into a more practical vocabulary, but that’s no big deal. The write up then explains how “sentiment analysis” makes it possible to push a person’s buttons. The information makes clear that a service with a TikTok-type recommendation system or feed of “you will probably like this” can exert control over an individual’s ideas, behavior, and perception of what’s true or false.
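For those who want to transcode the lingo, here is a minimal sketch of one common flavor of sentiment analysis: lexicon-based scoring. The tiny `LEXICON`, the negation rule, and the function names are hypothetical; commercial tools such as those profiled below use far larger curated lexicons or trained models, and nothing here describes any vendor’s actual method. Note how easily a per-message score rolls up into a per-person score.

```python
import re
from typing import Dict, List

# Hypothetical toy lexicon: word -> polarity. Real systems use thousands of entries or a model.
LEXICON: Dict[str, int] = {"love": 2, "great": 2, "good": 1, "bad": -1, "awful": -2, "hate": -2}
NEGATORS = {"not", "never", "no"}


def sentiment_score(text: str) -> float:
    """Average polarity of lexicon words in text; a negator flips the immediately following word."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total, hits, negate = 0, 0, False
    for token in tokens:
        if token in NEGATORS:
            negate = True
            continue
        if token in LEXICON:
            polarity = LEXICON[token]
            total += -polarity if negate else polarity
            hits += 1
        negate = False  # negation only reaches the next token
    return total / hits if hits else 0.0


def profile_score(posts: List[str]) -> float:
    """Mean sentiment across one person's (or one group's) posts: a crude behavioral score."""
    return sum(sentiment_score(post) for post in posts) / len(posts) if posts else 0.0
```

For example, `profile_score(["I love this great product", "not good"])` averages a message scored 2.0 and one scored -1.0.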

The guts of the write up is a series of brief profiles of eight applications available to a marketer, PR team, or intelligence agency’s software developers. The products described include:

- Sprout Social. Yep, it’s wonderful. The company wrote the essay I am writing about.

- Reputation. Hello, social scoring for “trust” or “influence.”

- Monkeylearn. What’s the sentiment of content? Monkeylearn can tell you.

- Lexalytics. This is an old-timer in sentiment analysis.

- Talkwalker. A content scraper with analysis and filter tools. The company is not “into” over-the-transom inquiries.

If you have been thinking about the EU’s AI regulations, you might formulate an idea that existing software may irritate some regulators. My team and I think that AI regulations may bump into companies and government groups already using these tools. Working out the regulatory interactions between AI regulations and what has been a reasonably robust software and data niche will be interesting.

In the meantime, ask yourself, “How many intelware and policeware systems implement either these tools or similar tools?” In my AI presentation at the April 2024 US National Cyber Crime Conference, I will provide a glimpse of the future by describing a European company whose system includes some of these functions. Regulations control neither technology nor innovation.

Stephen E Arnold, April 9, 2024

Why Humans Follow Techno Feudal Lords and Ladies

December 19, 2023

This essay is the work of a dumb dinobaby. No smart software required.

“Seduced By The Machine” is an interesting blend of humans’ willingness to follow the leader and Silicon Valley revisionism. The article points out:

We’re so obsessed by the question of whether machines are rising to the level of humans that we fail to notice how the humans are becoming more like machines.

I agree. The write up offers an explanation, although it arrives a little late because the Internet has been around for decades:

Increasingly we also have our goals defined for us by technology and by modern bureaucratic systems (governments, schools, corporations). But instead of providing us with something equally rich and well-fitted, they can only offer us pre-fabricated values, standardized for populations. Apps and employers issue instructions with quantifiable metrics. You want good health – you need to do this many steps, or achieve this BMI. You want expertise? You need to get these grades. You want a promotion? Hit these performance numbers. You want refreshing sleep? Better raise your average number of hours.

A modern high-tech pied piper leads many to a sanitized Burning Man? Sounds like fun. Look at the funny outfit. The music is a TikTok hit. The followers are looking forward to their next “experience.” Thanks, MSFT Copilot. One try for this cartoon. Good enough again.

The idea is that technology offers a short cut. Who doesn’t like a short cut? Do you want to write music in the manner of Herr Bach or do you want to do the loop and sample thing?

The article explores the impact of metrics; that is, the idea of letting Spotify make clear what a hit song requires. Now apply that malleability and success incentive to getting fit, getting start up funding, or any other friction-filled task. Let’s find some Teflon, folks.

The write up concludes with this:

Human beings tend to prefer certainty over doubt, comprehensibility to confusion. Quantified metrics are extremely good at offering certainty and comprehensibility. They seduce us with the promise of what Nguyen calls “value clarity”. Hard and fast numbers enable us to easily set goals, justify decisions, and communicate what we’ve done. But humans reach true fulfilment by way of doubt, error and confusion. We’re odd like that.

Hot button alert! Uncertainty means risk. Therefore, reduce risk. Rely on an “authority,” “data,” or “research.” What if the authority sells advertising? What if the data are intentionally poisoned (a somewhat trivial task according to watchers of disinformation outfits)? What if the research is made up? (I am thinking of the Stanford University president and the Harvard ethics whiz. Both allegedly invented data; both found themselves in hot water. But no one seems to have cared.)

With smart software (despite its hyperbolic marketing and its role as the next really Big Thing) finding its way into a wide range of business and specialized systems, the message is: just trust the machine output. I went for a routine check up. One machine reported I was near death. The doctor was recommending a number of immediate remediation measures. I pointed out that the data came from a single somewhat older device. No one knew who verified its accuracy. No one knew if the device was repaired. I noted that I was indeed still alive and asked if the somewhat nervous looking medical professional would get a different device to gather the data. Hopefully that will happen.

Is it a positive when the new pied piper of Hamelin wants to have control in order to generate revenue? Is it a positive when education produces individuals who do not ask, “Is the output accurate?” Some day, dinobabies like me will indeed be dead. Will the willingness of humans to follow the pied piper be different?

Absolutely not. This dinobaby is alive and kicking, no matter what the aged diagnostic machine said. Gentle reader, can you identify fake, synthetic, or just plain wrong data? If you answer yes, you may be in the top tier of actual thinkers. Those who are gatekeepers of information will define reality and take your money whether you want to give it up or not.

Stephen E Arnold, December 19, 2023

Predictive Analytics and Law Enforcement: Some Questions Arise

October 17, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

We wish we could prevent crime before it happens. With AI and predictive analytics it seems possible, but Wired reports that “Predictive Policing Software Terrible At Predicting Crimes.” The Plainfield, NJ, police department purchased Geolitica predictive software, and it was not a wise use of taxpayer money. The Markup, a nonprofit investigative organization that wants technology to serve the common good, reported Geolitica’s accuracy:

“We examined 23,631 predictions generated by Geolitica between February 25 and December 18, 2018, for the Plainfield Police Department (PD). Each prediction we analyzed from the company’s algorithm indicated that one type of crime was likely to occur in a location not patrolled by Plainfield PD. In the end, the success rate was less than half a percent. Fewer than 100 of the predictions lined up with a crime in the predicted category, that was also later reported to police.”

The Markup also analyzed predictions for robberies and aggravated assaults in Plainfield; the success rate was 0.6%. Burglary predictions were worse at 0.1%.
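The arithmetic behind “less than half a percent” is simple enough to check. A minimal sketch, using only the figures quoted from The Markup; because the report says “fewer than 100” hits, 99 serves as an upper bound, and `hit_rate` is a hypothetical helper name.

```python
def hit_rate(hits: int, predictions: int) -> float:
    """Share of predictions that matched a later-reported crime in the predicted category."""
    if predictions <= 0:
        raise ValueError("predictions must be positive")
    return hits / predictions


# The Markup: fewer than 100 matches out of 23,631 predictions, so 99 is an upper bound.
upper_bound = hit_rate(99, 23_631)
print(f"{upper_bound:.2%}")  # prints 0.42%
```

Put another way, even at the upper bound, more than 99 of every 100 predictions missed.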

The police weren’t really interested in using Geolitica either. They wanted to be accurate in predicting and reducing crime. The Plainfield, NJ, police department hardly used the software and discontinued the program. Geolitica charged $20,500 for a one-year subscription, then $15,500 for each yearly renewal. Geolitica’s information also had inconsistencies. Police found training and experience to be as effective as the predictions the software offered.

Geolitica will go out of business at the end of 2023. The law enforcement technology company SoundThinking hired Geolitica’s engineering team and will acquire some of its IP too. Police software companies are changing their products and services to manage police department data.

Crime data are important. Where crimes and victimization occur should be recorded and analyzed. Newark, New Jersey, used risk terrain modeling (RTM) to identify areas where aggravated assaults were likely to occur. Analysts used land data and found that vacant lots were significant crime locations.

Predictive methods have value, but they apply to specific use cases. Math is not the answer to some challenges.

Whitney Grace, October 17, 2023

LLM Unreliable? Probably Absolutely No Big Deal Whatsoever For Sure

July 19, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

My team and I are working on an interesting project. Part of that work requires that we grind through papers, journal articles, and self-published (and essentially unverifiable) comments about smart software.

“What do you mean the outputs from the smart software I have been using for my homework deliver the wrong answer?” says this disappointed user of a browser and word processor with artificial intelligence baked in. Is she damning recursion? MidJourney created this emotion-packed image of a person who has learned that she has been accused of plagiarism by her Sociology 215 professor.

Not surprisingly, we come across some wild and crazy information. On rare occasions we come across a paper, mostly ignored, which presents information that confirms many of our tests of smart software. When we do tests, we arrive with specific queries in mind. These relate to the behaviors of bad actors; for example, identifying online services which front for cyber criminals, unmasking bad actors behind systems purpose built to make that unmasking time consuming, and determining what person owns a particular domain engaged in the sale of fullz.

You can probably guess that most of the smart and dumb online finding services are of little or no help. We have to check these, however, simply because we want to be thorough. At a meeting last week, one of my team members, who has a degree in library science, pointed out that the outputs from the services we use were becoming less useful than they were several months ago. I don’t spend too much time testing these services because I am a dinobaby and I run projects. My doing days are over. But I do listen to informed feedback. Her comment was one I had not seen in the Google PR onslaught about its method, the utterances of Sam AI-Man at OpenAI, or from the assorted LinkedIn gurus who post about smart software.

Then I spotted “How Is ChatGPT’s Behavior Changing over Time?”

I think the authors of the paper have documented what my team member articulated to me and others working on a smart software project. The paper states in polite academic prose:

Our findings demonstrate that the behavior of GPT-3.5 and GPT-4 has varied significantly over a relatively short amount of time.

The authors provide some data, a few diagrams, and some footnotes.

What is fascinating is that the most significant item in the journal article, in my opinion, is the use of the word “drifts.” Here’s the specific line:

Monitoring reveals substantial LLM drifts.

Yep, drifts.

What exactly is a drift in a numerical mélange like a large language model, its algorithms, and its probabilistic pulsing? In a nutshell, LLMs are formed by humans and use information created, to some degree, by humans. The idea is that sharp corners are created from decisions and data which may have rounded corners or be the equivalent of a wad of Play-Doh after a kindergartener manipulates the stuff. Layers of numerical recipes are hooked together to output information useful to a human or system.

Those who worked with early versions of the Autonomy Neuro Linguistic black box know about the Play-Doh effect. Train the system on a crafted set of documents (information). Run test queries. Adjust a few knobs and dials afforded by the Autonomy system. Turn it loose on the Word documents and other content for which filters were installed. Then let users run queries.

To be upfront, using the early version of Autonomy in 1999 or 2000 was pretty darned good. However, Autonomy recommended that the system be retrained every few months.

Why?

The answer, as I recall, is that as new data were encountered by the Autonomy Neuro Linguistic engine, the engine had to cope with new words, names of companies, and phrases. Without retraining, the system would use what it had from its initial set up and tuning. Without retraining or recalibration, the Autonomy system would return results which were less useful in some situations. Operate a system without retraining, and the results degrade over time.
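One simple way to see this problem coming is to measure how many words in newly arriving content the system never saw during training, the out-of-vocabulary (OOV) rate. The sketch below is an illustration under assumptions, not Autonomy’s actual method: the tokenizer is naive, and the 5% retraining threshold is a hypothetical value someone would have to tune.

```python
import re
from typing import Iterable, Set

TOKEN = re.compile(r"[a-z0-9']+")


def build_vocabulary(training_docs: Iterable[str]) -> Set[str]:
    """Collect every lowercase token seen in the training set."""
    vocab: Set[str] = set()
    for doc in training_docs:
        vocab.update(TOKEN.findall(doc.lower()))
    return vocab


def oov_rate(new_docs: Iterable[str], vocab: Set[str]) -> float:
    """Fraction of tokens in new content that the training vocabulary never contained."""
    total = unseen = 0
    for doc in new_docs:
        for token in TOKEN.findall(doc.lower()):
            total += 1
            if token not in vocab:
                unseen += 1
    return unseen / total if total else 0.0


def needs_retraining(new_docs: Iterable[str], vocab: Set[str], threshold: float = 0.05) -> bool:
    """Flag retraining when new names, companies, and phrases pile up past the threshold."""
    return oov_rate(new_docs, vocab) > threshold
```

New company names are exactly the tokens such a check catches; a steadily rising OOV rate is one early sign that the “Play-Doh” has been reshaped.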

Math types labor to make inference-hooked and probabilistic systems stay on course. The systems today use tricks that make a controlled vocabulary look like the tool of a dinobaby like me. Without getting into the weeds, the Autonomy system would drift.

And what does the cited paper say? In effect, “LLMs drift too.”
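The monitoring the paper describes boils down to asking a model the same questions at two points in time and comparing the answers. A minimal sketch, assuming you saved each snapshot’s answers to a fixed question set with known correct answers; the function names and the 5-point tolerance are hypothetical, and this is not the paper’s own code.

```python
from typing import Dict, Sequence


def accuracy(answers: Sequence[str], truth: Sequence[str]) -> float:
    """Share of answers that exactly match the known correct answers."""
    if not truth or len(answers) != len(truth):
        raise ValueError("answers and truth must be non-empty and the same length")
    return sum(a == t for a, t in zip(answers, truth)) / len(truth)


def drift_report(old: Sequence[str], new: Sequence[str], truth: Sequence[str],
                 tolerance: float = 0.05) -> Dict[str, object]:
    """Compare two snapshots of one model on the same fixed question set."""
    old_acc, new_acc = accuracy(old, truth), accuracy(new, truth)
    # Answers can change even when accuracy holds steady, so track both.
    changed = sum(o != n for o, n in zip(old, new)) / len(truth)
    return {
        "old_accuracy": old_acc,
        "new_accuracy": new_acc,
        "answers_changed": changed,
        "drifted": abs(new_acc - old_acc) > tolerance,
    }
```

Running such a check on a schedule is cheap; the expensive part is deciding who is responsible when it flags a drift.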

What does this mean? Here’s my dinobaby list of items to keep in mind:

- Smart software, if left to its own devices, will degrade over time; that is, outputs will drift from what the user wants. Feedback from users accelerates the drift because some feedback is, from the smart software’s point of view, spot on even if it is crazy or off the wall. Do this over a period of time and you get what the paper’s authors and my team member pointed out: Degradation.

- Users who know how to look at a system’s outputs and validate or identify off the mark results can take corrective action; that is, ignore the outputs or fix them up. This is not common, and it requires specialized knowledge, time, and mental sharpness. Those who depend on TikTok or a smart system may not have these qualities in equal amounts.

- Entrepreneurs want money, power, or a new Tesla. Bringing up issues about smart software growing increasingly crazy like the dinobaby down the street is not valued. Hence, substantive problems with smart systems will require time, money, and expertise to remediate. Who wants that? Smart software is designed to improve efficiency, reduce costs, and make money. The result is a group of individuals who do PR, not up-to-snuff software.

Will anyone pay attention to this cited journal article? Sure, a few interns and maybe a graduate student or two. But at this time, the trend is that AI works and AI applied to something delivers a solution. Is that solution reliable or is it just good enough? What if the outputs deteriorate in a subtle way over time? What’s the fix? Who is responsible? The engineer who fiddled with thresholds? The VP of product development who dismissed objections about inherent bias in outputs?

I think you may have an answer to these questions. As a dinobaby, I can say, “Folks, I don’t have a clue about fixing up the smart software juggernaut.” I am skeptical of those who say, “Hey, it just works.” Okay, I hope you are correct.

Stephen E Arnold, July 19, 2023

Researchers Break New Ground with a Turkey Baster and Zoom

April 4, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I do not recall much about my pre-school days. I do recall dropping off my two children at their pre-schools at different times. My recollections are fuzzy. I recall horrible finger paintings carried to the automobile and, several times a month, mashed pieces of cake. I recall quite a bit of laughing, shouting, and jabbering about classmates whom I did not know. Truth be told, I did not want to meet these progeny of highly educated, upwardly mobile parents who wore clothes with exposed logos and drove Volvo station wagons. I did not want to meet the classmates. The idea of interviewing pre-kindergarten children struck me as a waste of time and an opportunity to get chocolate Baskin-Robbins cake smeared on my suit. (I am a dinobaby, remember. Dress for success. White shirt. Conservative tie. Yada yada.)

I thought (briefly, very briefly) about the essay in Science Daily titled “Preschoolers Prefer to Learn from a Competent Robot Than an Incompetent Human.” The “real news” article reported without one hint of sarcastic ironical skepticism:

We can see that by age five, children are choosing to learn from a competent teacher over someone who is more familiar to them — even if the competent teacher is a robot…

Okay. How were these data gathered? I absolutely loved the use of Zoom, a turkey baster, and nonsense terms like “fep.”

Fascinating. First, the idea of using Zoom and a turkey baster would never have roamed across this dinobaby’s mind. Second, consider the intuitive leap by the researchers that pre-schoolers who finger-paint would like to undertake this deeply intellectual task with a robot, not a human. The human, from my experience, is necessary to prevent the delightful sprouts from eating the paint. Third, I wonder if the research team’s first year statistics professor explained the concept of a valid sample.

One thing is clear from the research. Teachers, your days are numbered unless you participate in the Singularity with Ray Kurzweil or are part of the school systems’ administrative group riding the nepotism bus.

“Fep.” A good word to describe certain types of research.

Stephen E Arnold, April 4, 2023

More Impressive Than Winning at Go: WolframAlpha and ChatGPT

March 27, 2023

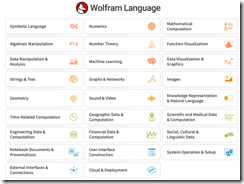

For a rocket scientist, Stephen Wolfram and his team are reasonably practical. I want to call your attention to this article on Dr. Wolfram’s Web site: “ChatGPT Gets Its Wolfram Superpowers.” In the essay, Dr. Wolfram explains how ChatGPT can be augmented with the computational system behind WolframAlpha. The examples in his write up are interesting and instructive. What can the WolframAlpha ChatGPT plug in do? Quite a bit.

I found this passage interesting:

ChatGPT + Wolfram can be thought of as the first truly large-scale statistical + symbolic “AI” system…. in ChatGPT + Wolfram we’re now able to leverage the whole stack: from the pure “statistical neural net” of ChatGPT, through the “computationally anchored” natural language understanding of Wolfram|Alpha, to the whole computational language and computational knowledge of Wolfram Language.
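The quoted passage describes a division of labor: a statistical model handles the language, and a symbolic engine does the computing exactly instead of predicting plausible-looking digits. Here is a minimal sketch of the symbolic half in plain Python, assuming the language model has already turned a question into an arithmetic expression string. `evaluate_exact` is a hypothetical helper, not part of the Wolfram plug in or Wolfram Language, and it handles only integer literals with +, -, *, /, and integer powers.

```python
import ast
import operator
from fractions import Fraction

# Operators the evaluator accepts; anything else is rejected rather than guessed at.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}


def evaluate_exact(expression: str) -> Fraction:
    """Evaluate an arithmetic expression with exact rational arithmetic."""

    def walk(node: ast.AST) -> Fraction:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return Fraction(node.value)
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, (ast.UAdd, ast.USub)):
            value = walk(node.operand)
            return -value if isinstance(node.op, ast.USub) else value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            left, right = walk(node.left), walk(node.right)
            if isinstance(node.op, ast.Pow) and right.denominator != 1:
                raise ValueError("only integer exponents keep the result rational")
            return _BINARY_OPS[type(node.op)](left, right)
        raise ValueError(f"unsupported syntax: {ast.dump(node)}")

    return walk(ast.parse(expression, mode="eval"))
```

For example, `evaluate_exact("1/3 + 1/6")` returns `Fraction(1, 2)`. The design choice mirrors the quote: the statistical part never produces the number, it only hands over the expression.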

The WolframAlpha module works with a range of information needs.

The Wolfram Language module does some numerical cartwheels, and I was impressed. I am not sure how high school calculus teachers will respond to the WolframAlpha – ChatGPT mash up, however.

One helpful aspect of Dr. Wolfram’s essay is that he notes differences between an earlier version of ChatGPT and the one used for the mash up. Navigate to this link and sign up for the plug in.

Stephen E Arnold, March 27, 2023

Making Data Incomprehensible

March 8, 2023

I spotted a short write up with 100 pictures called “1 Dataset 100 Visualizations.” The write up presents a simple table and then presents the same data 100 different ways.

My reactions to the examples are:

- Why are the colors presented with low contrast? Many of the charts’ graphics are incomprehensible. The texts’ illegibility underscores the disconnect between being understood and being in a weird world of incomprehensibility.

- What’s wrong with the basic table? It works. Why create a graph? Oh, I know. To be clever. Nope, not clever. Performative demonstration of numerical expertise perhaps?

- The Wall Street Journal and other “real news” organizations love these types of obscurification. I can visualize the goose bumps which form on the arms of these individuals. The anticipation of making something fuzzy is a thrilling moment.
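Low contrast is not just a matter of taste; it can be measured. A minimal sketch of the WCAG 2.x contrast ratio (relative luminance of each sRGB color, then (L1 + 0.05) / (L2 + 0.05)), assuming colors given as 0 to 255 RGB triples. WCAG level AA asks for at least 4.5:1 for normal-size text and 3:1 for large text; the function names are my own.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def _linearize(channel: int) -> float:
    """Convert one 0-255 sRGB channel to linear light, per the WCAG / sRGB formula."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    r, g, b = (_linearize(value) for value in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: RGB, background: RGB) -> float:
    """Ratio from 1 (no contrast) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


# Mid gray text (#777777) on white lands just under the 4.5:1 AA minimum.
print(round(contrast_ratio((0x77, 0x77, 0x77), (255, 255, 255)), 2))
```

Run a chart’s label and background colors through a check like this before publishing, and some of the incomprehensibility goes away.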

Yikes. Marketing methods to be unclear.

Stephen E Arnold, March 8, 2023

Worthless Data Work: Sorry, No Sympathy from Me

February 27, 2023

I read a personal essay about “data work.” The title is interesting: “Most Data Work Seems Fundamentally Worthless.” I am not sure of the age of the essayist, but the pain is evident in the word choice; for example: Flavor of despair (yes, synesthesia in a modern technology awakening write up!), hopeless passivity (yes, a digital Sisyphus!), essentially fraudulent (shades of Bernie Madoff!), fire myself (okay, self loathing and an inner destructive voice), and much, much more.

But for me, the point is not the author. The big idea is that when it comes to data, most people want a chart and don’t want to fool around with numbers, statistical procedures, data validation, and context of the how, where, and what of the collection process.

Let’s go to the write up:

How on earth could we have what seemed to be an entire industry of people who all knew their jobs were pointless?

Like Elizabeth Barrett Browning, the essayist enumerates the wrongs of data analytics as a vaudeville act:

- Talking about data is not “doing” data

- Garbage in, garbage out

- No clue about the reason for an analysis

- Making marketing and others angry

- Unethical colleagues wallowing in easy money

What’s ahead? I liked these statements which are similar to what a digital Walt Whitman via ChatGPT might say:

I’ve punched this all out over one evening, and I’m still figuring things out myself, but here’s what I’ve got so far… that’s what feels right to me – those of us who are despairing, we’re chasing quality and meaning, and we can’t do it while we’re taking orders from people with the wrong vision, the wrong incentives, at dysfunctional organizations, and with data that makes our tasks fundamentally impossible in the first place. Quality takes time, and right now, it definitely feels like there isn’t much of a place for that in the workplace.

Imagine. Data, and working with data, have an inherent negative impact. We live in a data-driven world. Is that why many processes are dysfunctional? Hey, Sisyphus, what are the metrics on your progress with the rock?

Stephen E Arnold, February 27, 2023

Confessions? It Is That Time of Year

December 23, 2022

Forget St. Augustine.

Big data, data science, or whatever you want to call it was the precursor to artificial intelligence. Tech people pursued careers in the field, but after the synergy and hype wore off, the real work began. According to WD in his RYX,R blog post “Goodbye, Data Science,” the work was tedious, low value, and unfulfilling, and it left little room for career growth.

WD worked as a data scientist for a few years, then quit in pursuit of the higher calling as a data engineer. He will be working on the implementation of data science instead of its origins. He explained why he left in four points:

• “The work is downstream of engineering, product, and office politics, meaning the work was only often as good as the weakest link in that chain.

• Nobody knew or even cared what the difference was between good and bad data science work. Meaning you could suck at your job or be incredible at it and you’d get nearly the same regards in either case.

• The work was often very low value-add to the business (often compensating for incompetence up the management chain).

• When the work’s value-add exceeded the labor costs, it was often personally unfulfilling (e.g. tuning a parameter to make the business extra money).”

WD’s experiences sound like those of everyone who is disenchanted with their line of work. He worked with managers who would not listen when they were told stupid projects would fail. The managers were more concerned with keeping their bosses and shareholders happy. He also mentioned that engineers are full of self-grandeur and scientists are bad at code. He worked with young and older data people who did not know what they were doing.

As a data engineer, WD has more free time, more autonomy, better career advancements, and will continue to learn.

Whitney Grace, December 23, 2022