Open Source Drone Mapping Software

May 30, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Photography and 3D image rendering aren’t perfect technologies, but they’ve dramatically advanced since they became readily available. Photorealistic 3D rendering was once available only to the ultra wealthy, corporations, law enforcement agencies, universities, and governments. The final products were laughable by today’s standards, but they laid the foundation for technology like OpenDroneMap.

OpenDroneMap is a cartographer’s dream software that generates 3D models, digital elevation models, point clouds, and maps from aerial images. Using only a compatible drone, the software, and a little programming know-how, users can make maps that were once the domain of specific industries. The map types include: measurements, plant health, point clouds, orthomosaics, contours (topography), elevation models, ground control points, and more.

OpenDroneMap is self-described as: “We are creating the most sustainable drone mapping software with the friendliest community on earth.” It also calls itself an “open ecosystem”:

“We’re building sustainable solutions for collecting, processing, analyzing and displaying aerial data while supporting the communities built around them. Our efforts are made possible by collaborations with key organizations, individuals and with the help of our growing community.”

The software is run by a board consisting of: Imma Mwanza, Stephen Mather, Näiké Nembetwa Nzali, DK Benjamin, and Arun M. The rest of the “staff” are contributors to the various projects, mostly through GitHub.

Many projects combine to form the complete OpenDroneMap software. These projects include: the command line toolkit, user interface, GCP detection, Python SDK, and more. Users can contribute code and design work or make financial donations. OpenDroneMap is a nonprofit, but it has the potential to be a company.

Open source projects like OpenDroneMap are how technology should be designed and deployed. The goal behind OpenDroneMap is to create professional, decisive software that is used for good.

Whitney Grace, May 30, 2024

French Building and Structure Geo-Info

February 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

OSINT professionals may want to take a look at a French building and structure database with geo-functions. The information is gathered and made available by the Observatoire National des Bâtiments. Registration is required. A user can search by city and address. The data, compiled up to 2022, cover France’s metropolitan areas and include geo services. The data include the address, built and unbuilt property, the plot, the municipality, dimensions, and some technical data. The data represent a significant effort involving the government, commercial and non-governmental entities, and citizens. The dataset includes more than 20 million addresses. Some records include up to 250 fields.

Source: https://www.urbs.fr/onb/

To access the service, navigate to https://www.urbs.fr/onb/. One is invited to register or use the online version. My team recommends registering. Note that the site is in French. Copying some text and data and shoving it into a free online translation service like Google’s may not be particularly helpful. French is one of the languages that Google usually handles with reasonable facility. For this site, however, Google Translate comes up with tortured and off-base translations.

Stephen E Arnold, February 23, 2024

Information Voids for Vacuous Intellects

January 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

In countries around the world, 2024 is a critical election year, and the problem of online mis- and disinformation is worse than ever. Nature emphasizes the seriousness of the issue as it describes “How Online Misinformation Exploits ‘Information Voids’—and What to Do About It.” Apparently we humans are so bad at considering the source that advising us to do our own research just makes the situation worse. Citing a recent Nature study, the article states:

“According to the ‘illusory truth effect’, people perceive something to be true the more they are exposed to it, regardless of its veracity. This phenomenon pre-dates the digital age and now manifests itself through search engines and social media. In their recent study, Kevin Aslett, a political scientist at the University of Central Florida in Orlando, and his colleagues found that people who used Google Search to evaluate the accuracy of news stories — stories that the authors but not the participants knew to be inaccurate — ended up trusting those stories more. This is because their attempts to search for such news made them more likely to be shown sources that corroborated an inaccurate story.”

Doesn’t Google bear some responsibility for this phenomenon? Apparently the company believes it is already doing enough by deprioritizing unsubstantiated news, posting content warnings, and including its “about this result” tab. But it is all too easy to wander right past those measures into a “data void,” a virtual space full of specious content. The first impulse when confronted with questionable information is to copy the claim and paste it straight into a search bar. But that is the worst approach. We learn:

“When [participants] entered terms used in inaccurate news stories, such as ‘engineered famine’, to get information, they were more likely to find sources uncritically reporting an engineered famine. The results also held when participants used search terms to describe other unsubstantiated claims about SARS-CoV-2: for example, that it rarely spreads between asymptomatic people, or that it surges among people even after they are vaccinated. Clearly, copying terms from inaccurate news stories into a search engine reinforces misinformation, making it a poor method for verifying accuracy.”

But what to do instead? The article notes Google steadfastly refuses to moderate content, as social media platforms do, preferring to rely on its (opaque) automated methods. Aslett and company suggest inserting human judgement into the process could help, but apparently that is too old fashioned for Google. Could educating people on better research methods help? Sure, if they would only take the time to apply them. We are left with this conclusion: instead of researching claims from untrustworthy sources, one should just ignore them. But that brings us full circle: one must be willing and able to discern trustworthy from untrustworthy sources. Is that too much to ask?

Cynthia Murrell, January 18, 2024

Missing Signals: Are the Tools or Analysts at Fault?

November 7, 2023

This essay is the work of a dumb humanoid. No smart software required.

Returning from a trip to DC yesterday, I thought about “signals.” The pilot — a specialist in hit-the-runway-hard landings — used the word “signals” in his welcome-aboard speech. The word sparked two examples of missing signals. The first is the troubling kinetic activities in the Middle East. The second is the US Army reservist who went on a shooting rampage.

The intelligence analyst says, “I have tools. I have data. I have real time information. I have so many signals. Now which ones are important, accurate, and actionable?” Our intrepid professional displays the reality of separating the signal from the noise. Scary, right? Time for a Starbucks visit.

I know zero about what software and tools, systems and informers, and analytics and smart software the intelligence operators in Israel relied upon. I know even less about what mechanisms were in place when Robert Card killed more than a dozen people.

The Center for Strategic and International Studies published “Experts React: Assessing the Israeli Intelligence and Potential Policy Failure.” The write up stated:

It is incredible that Hamas planned, procured, and financed the attacks of October 7, likely over the course of at least two years, without being detected by Israeli intelligence. The fact that it appears to have done so without U.S. detection is nothing short of astonishing. The attack was complex and expensive.

And one more passage:

The fact that Israeli intelligence, as well as the international intelligence community (specifically the Five Eyes intelligence-sharing network), missed millions of dollars’ worth of procurement, planning, and preparation activities by a known terrorist entity is extremely troubling.

Now let’s shift to the Lewiston, Maine, shooting. I had saved on my laptop “Six Missed Warning Signs Before the Maine Mass Shooting Explained.” The UK newspaper The Guardian reported:

The information about why, despite the glaring sequence of warning signs that should have prevented him from being able to possess a gun, he was still able to own over a dozen firearms, remains cloudy.

Those “signs” included punching a fellow officer in the US Army Reserve force, spending some time in a mental health facility, family members emitting “watch this fellow” statements, vibes about issues from his workplace, and the weapon activity.

On one hand, Israel had intelligence inputs from just about every imaginable high-value source from people and software. On the other hand, in a small town the only signal that was not emitted by Mr. Card was buying a billboard and posting a message saying, “Do not invite Mr. Card to a church social.”

As the plane droned at 1973 speeds toward the flyover state of Kentucky, I jotted down several thoughts. Like it or not, here these ruminations are:

- Despite the baloney about identifying signals and determining which are important and which are not, existing systems and methods failed bigly. The proof? Dead people. Subsequent floundering.

- The mechanisms in place to deliver on point, significant information do not work. Perhaps it is the hustle bustle of everyday life? Perhaps it is that humans are not very good at figuring out what’s important and what’s unimportant. The proof? Dead people. Constant news releases about the next big thing in open source intelligence analysis. Get real. This stuff failed at the scale of SBF’s machinations.

- The uninformed pontifications of cyber security marketers, the bureaucratic chatter flowing from assorted government agencies, and the cloud of unknowing persist even when the signals are as subtle as the foghorn on a cruise ship with a passenger overboard. Hello, hello, the basic analysis processes don’t work. A WeWork investor’s thought processes were more on point than the output of reporting systems in use in Maine and Israel.

After the aircraft did the thump-and-bump landing, I was able to walk away. That’s more than I can say for the victims of analysis, investigation, and information processing methods in use where moose roam free and where intelware is crafted and sold like canned beans at Trader Joe’s.

Less baloney and more awareness that talking about advanced information methods is a heck of a lot easier than delivering actual signal analysis.

Stephen E Arnold, November 7, 2023

An Interesting Example of Real News. Yes, Real News

October 27, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-22.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I enjoy gathering information which may be disinformation. “The Secrets Hamas Knew about Israel’s Military” illustrates how “facts” can create fear, doubt, and uncertainty. I reside in rural Kentucky, and I have zero ability as a dinobaby to determine if the information published by DNYUZ is accurate or a clever way to deceive.

Believe me. Bigfoot is coming for your lunch. One young person says, “Bigfoot? Cool.” Thanks, MidJourney, descend that gradient.

Let’s look at several of the assertions in the write up. I will leave it to you, gentle reader, to figure out what’s what.

The first item is the detailed account of what the attackers did: they rode five motorcycles, each carrying two individuals. As the motorcyclists headed toward their target, they shot at civilian vehicles. Then they made their way to an “unmanned gate”, blew up the entrance, and “shot dead an unarmed Israeli soldier in a T shirt.”

My reaction to this was that the excess detail was baloney. If a group on motorcycles shot at me, I would alert the authorities. You know. A mobile phone. Also, the gate was unmanned. Hmmm. Each military base I have approached in my life was manned and had those nifty cameras recording the activity in the viewshed of the cameras. From my own experience, I know there are folks who watch the outputs of the cameras and there are other people who watch the watchers to make sure the odd game of Angry Birds does not distract the indifferent.

The second item is the color coded map. I have seen online posts showing a color coded print out with alleged information about the attack. Were these images “real” or fabricated, along with the suggestion that the attack had been planned a year or more in advance? I don’t know. Well, the map led the attackers to a fortified building with an unlocked door. Huh. As I recall, the doors in government facilities I have visited had the charming characteristic of locking automatically, even in areas with a separate security perimeter inside a security perimeter. Wandering around and going outside for a breath of fresh air was not a serendipitous action as I recall.

The third item is the “room filled with computers.” Yep, I lock access to my computer area in my home. My office, by the way, is underground. But it was a lucky day for bad actors because the staff were hiding under a bed. I don’t recall seeing a bed in or near a computer room. I have seen crappy chairs, crappy tables, and maybe a really crappy cot. But a bed under which two can hide? Nope.

The credibility of the story is attributed to the New York Times. And, by golly, the “real” journalists reviewed the footage and concluded it was the actual factual truth. Then the “real” journalists interviewed “real” Israelis about the Israeli video.

Okay. Several observations:

- Creating information which seems “real” but may be something else is easy.

- The outlet for the story is one that strikes me as a potential million dollar baby because it may have click magic.

- I am skeptical about the Netflix-type story line in the article.

Net net: DNYUZ, I admire your “real” news.

Stephen E Arnold, October 27, 2023

Are Open Source Investigators Multiplying Like Star Trek Tribbles?

October 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-7.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

The idea of using the Internet to solve crimes is not a new idea. I learned about “open source” in 1981 when I worked in the online unit of the Courier Journal & Louisville Times Co. A fellow named Robert David Steele contacted me. He wanted to meet me when I was in Washington, DC. My recollection is that he showed up in a quasi-military outfit and proceeded to explain that commercial online information was important to intelligence professionals. He wanted free access to our databases, and I politely explained that access was available via online timesharing services and from specialized vendors. He was not happy, but eventually we became tolerant of one another and ended up working on a number of interesting projects. Now open source information or OSINT is the go-to method for conducting research, investigations, gathering intelligence, and identifying persons of interest.

An OSINT investigator tracks down with OSINT geo tools the animal suspected of eating a knowledge worker’s flowers. Thanks, MidJourney. Close to Sherlock, but not on the money.

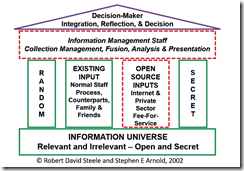

Until the surprise attack on Israel, it seemed as if open source or OSINT could work wonders. It didn’t, and (spoiler alert) it cannot. OSINT is one source of actionable information. Steele and I collaborated on numerous presentations and used this diagram to explain where OSINT fit into the world of professional information gatherers:

Open source is one pillar of the intelligence infrastructure. The keystone of OSINT is the staff, the management method, the techniques used to fuse and analyze source information, and presenting it in a way that makes sense to others.

I mention this because I read “The Disturbing Rise of Amateur Internet Detectives.” Please consult the original to get a feel for the point of view of the author and the implicit endorsement of Netflix programming.

However, I want to highlight one passage from the article:

What’s the future of web sleuthing? It’s clear amateur online detectives are to stay. The depths of the internet can encourage our worst instincts – but also, as these series prove, our best, too. The trend for programs celebrating these sleuths, though, is harder to welcome. It’s difficult to avoid the sense that they amplify the messy, fractious instincts of the online world, and make sleuths reluctant celebrities. Dragging them into the limelight can misrepresent their work, doing a disservice to their peculiar talents and experiences. Still, there is an undeniable pull to the world of online sleuthing. We can expect far more coverage of that murky empire.

What is interesting to me is that OSINT has moved from an almost unknown activity to the mainstream. Who would have anticipated TV shows about online investigations? Even more surprising is the number of people who have adopted the method as an avocation. Others have set up businesses because the founder is an expert in OSINT. Amazing or shocking? I have not decided.

I have formulated several observations; these are:

- Determining what is important and then verifying the accuracy of the information are different skills from doing Google searches or using an OSINT toolkit

- Machine-generated content can degrade the accuracy of some of the most sophisticated OSINT intelligence systems. The surprise attack on Israel is a grim reminder of the limitations of highly sophisticated, multi-language, multi-source systems

- Gathering intelligence is not an activity conducted without care, careful consideration, and a keen awareness of the cognitive blind spots that each human possesses.

Net net: Pursuing a career in OSINT is probably a better choice than trying to become an influencer on TikTok.

Stephen E Arnold, October 13, 2023

Cognitive Blind Spot 4: Ads. What Is the Big Deal Already?

October 11, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

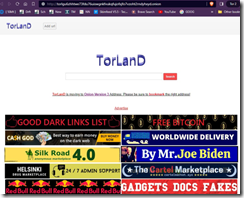

Last week, I presented a summary of Dark Web Trends 2023, a research update my team and I prepare each year. I showed a visual of the ads on a Dark Web search engine. Here’s an example of one of my illustrations:

The TorLanD service, when it is accessible via Tor, displays a search box and advertising. What is interesting about this service and a number of other Dark Web search engines is the ads. The search results are so-so, vastly inferior to those information retrieval solutions offered by intelware vendors.

Some of the ads appear on other Dark Web search systems as well; for example, Bobby and DarkSide, among others. The advertisements offer a range of interesting content. The TorLanD screenshot pitches carding, porn, drugs, gadgets (skimmers and software), and illegal substances. I pointed out that the ads on TorLanD looked a lot like the ads on Bobby; for instance:

I want to point out that the Silk Road 4.0 and the Gadgets, Docs, Fakes ads are identical. Notice also that TorLanD advertises on Bobby. The Helsinki Drug Marketplace on the Bobby search system offers heroin.

Most of these ads are trade outs. The idea is that one Dark Web site will display an ad for another Dark Web site. There are often links to Dark Web advertising agencies as well. (For this short post, I won’t be listing these vendors, but if you are interested in this research, contact benkent2020 at yahoo dot com. One of my team will follow up and explain our for-fee research policy.)

The point of these two examples is to make clear that advertising has become normalized, even among bad actors. Furthermore, few are surprised that bad actors (or alleged bad actors) communicate, pat one another on the back, and support an ecosystem to buy and sell space on the increasingly small Dark Web. Please note that advertising appears in public and private Telegram groups focused on the topics referenced in these Dark Web ads.

Can you believe the ads? Some people do. Users of the Clear Web and the Dark Web are conditioned to accept ads and to believe that these are true, valid, useful, and intended to make it easy to break the law and buy a controlled substance or CSAM. Some ads emphasize “trust.”

People trust ads. People believe ads. People expect ads. In fact, one can poke around and identify advertising and PR agencies touting the idea that people “trust” ads, particularly those with brand identity. How does one build brand? Give up? Advertising and weaponized information are two ways.

The cognitive bias that operates is that people embrace advertising. Look at a page of Google results. Which are ads, and which are ads but not identified as such? What happens when ads are indistinguishable from plausible messages? Some online companies offer stealth ads. Among the ads on the Dark Web pages illustrating this essay are some placed by law enforcement agencies masquerading as bad actors. Can you identify one such ad? What about messages on Twitter which are designed to be difficult to spot as paid messages or weaponized content? For one take on Twitter technology, read “New Ads on X Can’t Be Blocked or Reported, and Aren’t Labeled as Advertisements.”

Let me highlight some of the functions of online ads like those on the Dark Web sites. I will ignore the Clear Web ads for the purposes of this essay:

- Click on the ad and receive malware

- Visit the ad and explore the illegal offer so that the site operator can obtain information about you

- Sell you a product and obtain the identifiers you provide, a delivery address (either physical or digital), or plant a beacon on your system to facilitate tracking

- Gather emails for phishing or other online initiatives

- Blackmail.

I want to highlight advertising as a vector of weaponization for three reasons: [a] People believe ads. I know it sounds silly, but ads work. People suspend disbelief when an ad on a service offers something that sounds too good to be true; [b] many people do not question the legitimacy of an ad or its message. Ads are good. Ads are everywhere; and [c] ads are essentially unregulated.

What happens when everything drifts toward advertising? The cognitive blind spot kicks in and one cannot separate the false from the real.

Public service note: Before you explore Dark Web ads or click links on social media services like Twitter, consider that these are vectors which can point to quite surprising outcomes. Intelligence agencies outside the US use Dark Web sites as a way to harvest useful information. Bad actors use ads to rip off unsuspecting people, like the doctor who once lived two miles from my office and ordered a Dark Web hitman to terminate an individual.

Ads are unregulated and full of surprises. But the cognitive blind spot for advertising guarantees that the technique will flourish and gain technical sophistication. Are those objective search results useful information or weaponized? Will the Dark Web vendor really sell you valid stolen credit cards? Will the US Postal Service deliver an unmarked envelope chock full of interesting chemicals?

Stephen E Arnold, October 11, 2023

New Website Brings Focus to State Courts and Constitutions

October 11, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-4.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Despite appearances, constitutional law is not all about the US Supreme Court. State courts and constitutions are integral to the maintenance (and elimination) of citizen rights. But the national media often underplays or overlooks key discussions and decisions on the state level. NYU Law’s Brennan Center‘s nonpartisan State Court Report is a new resource that seeks to address that imbalance. Its About page explains:

“What’s been missing is a forum where experts come together to analyze and discuss constitutional trends emerging from state high courts, as well as a place where noteworthy state cases and case materials are easy to find and access. State constitutions share many common provisions, and state courts across the country frequently grapple with similar questions about constitutional interpretation. Enter State Court Report, which is dedicated to covering legal news, trends, and cutting-edge scholarship, offering insights and commentary from a nationwide network of academics, journalists, judges, and practitioners with diverse perspectives and expertise. By providing original content and resources that are easily accessible, State Court Report fosters informed dialogue, research, and public understanding about an essential but chronically underappreciated source of law. Our newsletter offers a deep dive into legal developments across the states. Our case database highlights notable state constitutional decisions and cases to watch in state high courts. Our state pages provide information on high courts and constitutions in all 50 states. In addition, State Court Report supports and participates in symposia, conferences, educational training, and panels, and also partners with other organizations to disseminate and share information and research.”

The organization debuts with two high-profile guest essays, from former U.S. Attorney General Eric H. Holder Jr. and former Michigan Chief Justice Bridget Mary McCormack. One can browse articles by issue or state or, of course, search for any particulars. Not surprisingly, a subscription to the newsletter is one prominent click away. We hope the Brennan Center succeeds with this effort to bring attention to important constitutional issues before they wind their way to SCOTUS.

Cynthia Murrell, October 11, 2023

Shall We Train Smart Software on Scientific Papers? That Is an Outstanding Idea!

May 29, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “Fake Scientific Papers Are Alarmingly Common. But New Tools Show Promise in Tackling Growing Symptom of Academia’s Publish or Perish Culture.” New tools sounds great. Navigate to the cited document to get the “real” information.

MidJourney’s representation of a smart software system ingesting garbage and outputting garbage.

My purpose in mentioning this article is to ask a question:

In the last five years, how many made up, distorted, or baloney filled journal articles have been produced?

The next question is,

How many of these sci-fi confections of scholarly research have been identified and discarded by the top smart software outfits like Facebook, Google, OpenAI, et al?

Let’s assume that 25 percent of the journal content is fakery.

A question I have is:

How does faked information impact the outputs of the smart software systems?

I can anticipate some answers; for example, “Well, there are a lot of papers, so the flawed papers will represent a small portion of the intake data.” The law of large numbers or some statistical jibber jabber will try to explain away erroneous information. Remember. Bad information is part of the human landscape. Does this mean smart software is a mirror of errors?
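The “law of large numbers” defense can be checked with a toy simulation. This is a minimal sketch for illustration only: it assumes the hypothetical 25 percent fakery rate above and assumes each paper is fake independently of the others; the function name `contaminated_share` is made up for this example. As the corpus grows, the observed share of bad papers settles near the fake rate instead of shrinking toward zero, while the raw number of bad papers keeps climbing.

```python
import random


def contaminated_share(corpus_size: int, fake_rate: float = 0.25, seed: int = 0) -> float:
    """Return the observed share of fake papers in a synthetic corpus.

    Each paper is independently marked fake with probability fake_rate
    (a hypothetical assumption, not a measured figure).
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    fakes = sum(1 for _ in range(corpus_size) if rng.random() < fake_rate)
    return fakes / corpus_size


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        share = contaminated_share(n)
        # The share hovers near 0.25 at every size; the fake count grows with n.
        print(f"{n:>7} papers: share fake = {share:.3f}, fake count = {round(share * n)}")
```

Under these assumptions, a bigger training set does not dilute a fixed rate of garbage; it simply delivers more of it.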

Do smart software outfits remove flawed information? If the peer review process cannot, what methods are the smart outfits using? Perhaps these companies should decide what’s correct and what’s incorrect? That sounds like a Googley-type idea, doesn’t it?

And finally, the third question about the impact of bad information on smart software “outputs” has an answer. No, it is not marketing jargon or a recycling of Google’s seven wonders of the AI world.

The answer, in my opinion, is garbage in and garbage out.

But you knew that, right?

Stephen E Arnold, May 29, 2023

OSINT Analysts Alert: Biases Distilled to a One Page Cheat Sheet

March 20, 2023

“Toward Parsimony in Bias Research: A Proposed Common Framework of Belief-Consistent Information Processing for a Set of Biases” is an academic write up. Usually I ignore these for two reasons: [a] the documents are content marketing designed to get a grant or further a career and [b] the results are not reproducible.

The write up, despite my skepticism of real researchers, contains one page which I think is a useful checklist of the pitfalls into which some people may happily [a] tumble, [b] live in, and [c] actively seek.

I know this image is unreadable, but I wanted to provide it with a hyperlink so you can snag the image and the full document:

Excellent work.

Stephen E Arnold, March 20, 2023