Bad News Delivered via Math

March 1, 2024

This essay is the work of a dumb humanoid. No smart software required.

I am not going to kid myself. Few people will read “Hallucination is Inevitable: An Innate Limitation of Large Language Models” with their morning donut and cold brew coffee. Even fewer will believe what the three amigos of smart software at the National University of Singapore explain in their ArXiv paper. Hard on the heels of Sam AI-Man’s ChatGPT mastering Spanglish, the financial payoffs are just too massive to pay much attention to wonky outputs from smart software. Hey, use these methods in Excel and exclaim, “This works really great.” I would suggest that the AI buggy drivers slow the Kremser down.

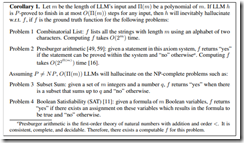

The killer corollary. Source: Hallucination is Inevitable: An Innate Limitation of Large Language Models.

The paper explains that large language models will be reliably incorrect. The paper includes some fancy and not so fancy math to make this assertion clear. Here’s what the authors present as their plain English explanation. (Hold on. I will give the dinobaby translation in a moment.)

Hallucination has been widely recognized to be a significant drawback for large language models (LLMs). There have been many works that attempt to reduce the extent of hallucination. These efforts have mostly been empirical so far, which cannot answer the fundamental question whether it can be completely eliminated. In this paper, we formalize the problem and show that it is impossible to eliminate hallucination in LLMs. Specifically, we define a formal world where hallucination is defined as inconsistencies between a computable LLM and a computable ground truth function. By employing results from learning theory, we show that LLMs cannot learn all of the computable functions and will therefore always hallucinate. Since the formal world is a part of the real world which is much more complicated, hallucinations are also inevitable for real world LLMs. Furthermore, for real world LLMs constrained by provable time complexity, we describe the hallucination-prone tasks and empirically validate our claims. Finally, using the formal world framework, we discuss the possible mechanisms and efficacies of existing hallucination mitigators as well as the practical implications on the safe deployment of LLMs.

Here’s my take:

- The map is not the territory. LLMs are a map. The territory is the human utterances. One is small and striving. The territory is what is.

- Fixing the problem requires some as yet unworked out fancier math. When will that happen? Probably never, because no set can contain itself as an element.

- “Good enough” may indeed be acceptable for some applications, just not “all” applications. Because “all” is a slippery fish when it comes to models and training data. Are you really sure you have accounted for all errors, variables, and data? Yes is easy to say; it is probably tough to deliver.

Net net: The bad news is that smart software is now the next big thing. Math is not of too much interest, which is a bit of a problem in my opinion.

Stephen E Arnold, March 1, 2024

Smart Software: Can the Outputs Be Steered Like a Mini Van? Well, Yesssss

October 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-12.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Nature Magazine may have exposed the crapola output about how the whiz kids in the smart software game rig their game. Want to know more? Navigate to “Reproducibility Trial: 246 Biologists Get Different Results from Same Data Sets.” The write up explains “how analytical choices drive conclusions.”

Baking in biases. “What shall we fiddle today, Marvin?” Marvin replies, “Let’s adjust what video is going to be seen by millions.” Thanks, for nameless and faceless, MidJourney.

Translating Nature speak, I think the estimable publication is saying, “Those who set thresholds and assemble numerical recipes can control outcomes.” An example might be suppressing certain types of information and boosting other information. If one is clueless, the outputs of the system will be the equivalent of “the truth.” JPMorgan Chase found itself snookered by outputs to the tune of $175 million. Frank Financial’s customer outputs were algorithmized with the assistance of some clever people. That’s how the smartest guys in the room were temporarily outfoxed by a 31 year old female Wharton person.

What about outputs from any smart system using open source information? The inputs to the smart system are the same. But the outputs? Well, depending on who is doing the threshold setting and setting up the work flow of the processed information, there are some opportunities to shade, shape, and weaponize outputs.

Nature Magazine reports:

Despite the wide range of results, none of the answers are wrong, Fraser says. Rather, the spread reflects factors such as participants’ training and how they set sample sizes. So, “how do you know, what is the true result?” Gould asks. Part of the solution could be asking a paper’s authors to lay out the analytical decisions that they made, and the potential caveats of those choices, Gould [Elliot Gould, an ecological modeler at the University of Melbourne] says. Nosek [Brian Nosek, executive director of the Center for Open Science in Charlottesville, Virginia] says ecologists could also use practices common in other fields to show the breadth of potential results for a paper. For example, robustness tests, which are common in economics, require researchers to analyze their data in several ways and assess the amount of variation in the results.

Translating Nature speak: Individual analyses can be widely divergent. A method to normalize the data does not seem to be agreed upon.
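
For the dinobabies who want to see what a robustness test looks like in practice, here is a minimal sketch. It assumes made-up measurements and three analytical choices I picked for illustration (mean, median, and a trimmed mean); none of this comes from the Nature write up or the biologists' actual data. The point is only that the same numbers yield different "effects" depending on the recipe.

```python
from statistics import mean, median

def trimmed_mean(values, fraction=0.2):
    """Mean after dropping the lowest and highest `fraction` of values."""
    ordered = sorted(values)
    k = int(len(ordered) * fraction)
    kept = ordered[k:len(ordered) - k] if k else ordered
    return mean(kept)

def robustness_check(treated, control):
    """Estimate the treated-minus-control difference under several analytical choices."""
    estimators = {
        "mean": mean,
        "median": median,
        "trimmed_mean": trimmed_mean,
    }
    results = {name: est(treated) - est(control) for name, est in estimators.items()}
    # The spread shows how much the conclusion depends on the analyst's choice.
    spread = max(results.values()) - min(results.values())
    return results, spread

# Invented measurements, for illustration only. Note the single outlier (9.0).
treated = [2.1, 2.4, 2.2, 2.6, 9.0]
control = [2.0, 2.1, 1.9, 2.3, 2.2]

results, spread = robustness_check(treated, control)
for name, diff in results.items():
    print(f"{name}: {diff:.2f}")
print(f"spread across choices: {spread:.2f}")
```

With these invented numbers, the mean reports a difference of about 1.56 while the median and trimmed mean report about 0.30. Same data, different "truth," and nobody made an arithmetic error.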

Thus, a widely used smart software can control framing on a mass scale. That means human choices buried in a complex system will influence “the truth.” Perhaps I am not being fair to Nature? I am a dinobaby. I do not have to be fair just like the faceless and hidden “developers” who control how the smart software is configured.

Stephen E Arnold, October 13, 2023

Logs: Still a Problem after So Many Years

August 23, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

System logs detail everything that happens when a computer is powered on. Logs are traditionally important because they can reveal operating problems that would otherwise go unnoticed. Chris Siebenmann’s CSpace blog explains why log monitoring is not as helpful as it used to be aka it is akin to herding cats: “Monitoring Your Logs Is Mostly A Tarpit.”

Siebenmann writes that monitoring system logs wastes time and leads to more problems than it is worth. System logs consist of unstructured data and they yield very little information. You can theoretically search for a specific message, but the message’s structure could change. Log messages are not an API and they often change.

Also, you must know what the specific message looks like, which means knowing how the source code is written. The data is unstructured so nothing is standard. The biggest issue is this:

“Finally, all of this potential effort only matters if identifiable problems appear in your logs on a sufficiently regular basis and it’s useful to know about them. In other words, problems that happen, that you care about, and probably that you can do something about. If a problem was probably a one time occurrence or occurs infrequently, the payoff from automated log monitoring for it can be potentially quite low…”

Monitoring logs does offer important insights but the simplicity disappeared a long time ago. You can find positive and negative matches but it is like searching for information to rationalize a confirmation bias. Siebenmann likens log monitoring to a tarpit because you quickly get mired down by all the trails. We liken it to herding cats because felines are independent organisms that refuse to follow herd mentality.
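
The "log messages are not an API" problem can be sketched in a few lines. This is an illustration under stated assumptions, not anything from Siebenmann's post: the pattern, the function name `find_failed_logins`, and both log lines are invented, and the "upgrade" that rewords the message is imaginary.

```python
import re

# Hypothetical pattern written against one version of a daemon's log message.
FAILED_LOGIN = re.compile(r"Failed password for (?P<user>\S+) from (?P<ip>\S+)")

def find_failed_logins(lines):
    """Return (user, ip) pairs for lines matching the known message format."""
    hits = []
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            hits.append((match.group("user"), match.group("ip")))
    return hits

# Invented log line in the format the pattern was written for.
old_format = ["sshd[101]: Failed password for root from 10.0.0.5 port 22 ssh2"]
# The same event after an imagined upgrade reworded the message.
new_format = ["sshd[101]: Authentication failure: user=root addr=10.0.0.5"]

print(find_failed_logins(old_format))  # [('root', '10.0.0.5')]
print(find_failed_logins(new_format))  # []
```

The second call returns nothing and raises no error. The monitor goes quiet, and quiet looks exactly like "no problems."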

Whitney Grace, August 23, 2023

Probability: Who Wants to Dig into What Is Cooking Beneath the Outputs of Smart Software?

May 30, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

The ChatGPT and smart software “revolution” depends on math only a few live and breathe. One drawer in the pigeon hole desk of mathematics is probability. You know the coin flip example. Most computer science types avoid advanced statistics. I know because my great uncle Vladimir Arnold (yeah, the guy who worked with a so so mathy type named Andrey Kolmogorov, who was pretty good at mathy stuff and liked hiking in the winter in what my great uncle described as “minimal clothing.”)

When it comes to using smart software, the plumbing is kept under the basement floor. What people see are interfaces and application programming interfaces. Watching how the sausage is produced is not what the smart software outfits do. What makes the math interesting is that the system and methods are not really new. What’s new is that memory, processing power, and content are available.

If one pries up a tile on the basement floor, the plumbing is complicated. Within each pipe or workflow process are the mathematics that bedevil many college students: Inferential statistics. Those who dabble in the Fancy Math of smart software are familiar with Markov chains and Martingales. There are garden variety maths as well; for example, the calculations beloved of stochastic parrots.

MidJourney’s idea of complex plumbing. Smart software’s guts are more intricate with many knobs for acolytes to turn and many levers to pull for “users.”

The little secret among the mathy folks who whack together smart software is that humanoids set thresholds, establish boundaries on certain operations, exercise controls like those on an old-fashioned steam engine, and find inspiration with a line of code or a process tweak that arrived in the morning gym routine.

In short, the outputs from the snazzy interface make it almost impossible to understand why certain responses cannot be explained. Who knows how the individual humanoid tweaks interact as values (probabilities, for instance) interact with other mathy stuff? Why explain this? Few understand.
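
A toy version of one humanoid-set threshold makes the point. The scores, item names, and the function `select_items` are all invented for this sketch; real systems stack many such knobs on top of one another, which is where the explaining gets hard.

```python
def select_items(scored_items, threshold):
    """Keep only items whose score meets the humanoid-chosen threshold."""
    return [name for name, score in scored_items if score >= threshold]

# Invented relevance scores; the model output is identical in both runs.
scored_items = [
    ("story_a", 0.91),
    ("story_b", 0.74),
    ("story_c", 0.58),
    ("story_d", 0.33),
]

strict = select_items(scored_items, threshold=0.8)
loose = select_items(scored_items, threshold=0.5)

print(strict)  # ['story_a']
print(loose)   # ['story_a', 'story_b', 'story_c']
```

Nothing about the math changed between the two runs. One number, picked by a person, decided what the "user" gets to see.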

To get a sense of how contentious certain statistical methods are, I suggest you take a look at “Statistical Modeling, Causal Inference, and Social Science.” I thought the paper should have been called, “Why No One at Facebook, Google, OpenAI, and other smart software outfits can explain why some output showed up and some did not, why one response looks reasonable and another one seems like a line ripped from Fantasy Magazine.”

In a nutshell, the cited paper makes one point: Those teaching advanced classes in which probability and related operations are taught do not agree on what tools to use, how to apply the procedures, and what impact certain interactions produce.

Net net: Glib explanations are baloney. This mathy stuff is a serious problem, particularly when a major player like Google seeks to control training sets, off-the-shelf models, framing problems, and integrating the firm’s mental orientation to what’s okay and what’s not okay. Are you okay with that? I am too old to worry, but you, gentle reader, may have decades to understand what my great uncle and his sporty pal were doing. What Google type outfits are doing is less easily looked up, documented, and analyzed.

Stephen E Arnold, May 30, 2023

AI Shocker? Automatic Indexing Does Not Work

May 8, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I am tempted to dig into my more than 50 years of work in online and pull out a chestnut or two. I will not. Just navigate to “ChatGPT Is Powered by These Contractors Making $15 an Hour” and check out the allegedly accurate statements about the knowledge work a couple of people do.

The write up states:

… contractors have spent countless hours in the past few years teaching OpenAI’s systems to give better responses in ChatGPT.

The write up includes an interesting quote; to wit:

“We are grunt workers, but there would be no AI language systems without it,” said Savreux [an indexer tagging content for OpenAI].

I want to point out a few items germane to human indexers based on my experience with content about nuclear information, business information, health information, pharmaceutical information, and “information” information which thumbtypers call metadata:

- Human indexers, even when trained in the use of a carefully constructed controlled vocabulary, make errors, become fatigued and fall back on some favorite terms, and misunderstand the content and assign terms which will mislead when used in a query

- Source content — regardless of type — varies widely. New subjects or different spins on what seem to be known concepts mean that important nuances may be lost due to what is included in the available dataset

- New content often uses words and phrases which are difficult to understand. I try to note a few of the more colorful “new” words and bound phrases like softkill, resenteeism, charity porn, toilet track, and purity spirals, among others. In order to index a document in a way that allows one to locate it, knowing the term is helpful if there is a full text instance. If not, one needs a handle on the concept which is an index term a system or a searcher knows to use. Relaxing the meaning (a trick of some clever outfits with snappy names) is not helpful

- Creating a training set, keeping it updated, and assembling the content artifacts is slow, expensive, and difficult. (That’s why some folks have been seeking short cuts for decades. So far, humans still become necessary.)

- Reindexing, refreshing, or updating the digital construct used to “make sense” of content objects is slow, expensive, and difficult. (Ask an Autonomy user from 1998 about retraining in order to deal with “drift.” Let me know what you find out. Hint: The same issues arise from popular mathematical procedures no matter how many buzzwords are used to explain away what happens when words, concepts, and information change.)

Are there other interesting factoids about dealing with multi-type content? Sure there are. Wouldn’t it be helpful if those creating the content applied structure tags, abstracts, lists of entities and their definitions within the field or subject area of the content, and pointers to sources cited in the content object?

Let me know when blog creators, PR professionals, and TikTok artists embrace this extra work.

Pop quiz: When was the last time you used a controlled vocabulary classification code to disambiguate airplane terminal, computer terminal, and terminal disease? How does smart software do this, pray tell? If the write up and my experience are on the same wave length (not surfing wave but frequency wave), a subject matter expert, trained index professional, or software smarter than today’s smart software is needed.
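
For those who skipped the pop quiz, here is a rough sketch of what a classification code buys you. Everything in it is hypothetical: the codes, the cue words, and the function `classify_terminal` are invented for illustration and do not come from any real controlled vocabulary. A production scheme is built and maintained by the trained humans mentioned above.

```python
# Hypothetical controlled vocabulary: codes and cue words are invented.
CONTROLLED_VOCABULARY = {
    "TRANS.AIRPORT.TERMINAL": {"airport", "gate", "flight", "boarding"},
    "COMP.HARDWARE.TERMINAL": {"keyboard", "shell", "login", "console"},
    "MED.PROGNOSIS.TERMINAL": {"disease", "patient", "hospice", "diagnosis"},
}

def classify_terminal(text):
    """Pick the classification code whose cue words overlap the text most.

    Returns None when no cue word appears, which is the case that
    lands on a human indexer's desk.
    """
    words = set(text.lower().split())
    scores = {code: len(words & cues) for code, cues in CONTROLLED_VOCABULARY.items()}
    best_code = max(scores, key=scores.get)
    return best_code if scores[best_code] > 0 else None

print(classify_terminal("the flight leaves from the new terminal at gate 12"))
print(classify_terminal("open a terminal and type your login at the console"))
print(classify_terminal("terminal problems"))
```

The first two calls resolve to the airport and hardware codes. The third returns `None` because the text offers no context, and that is the situation in which a subject matter expert earns the paycheck.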

Stephen E Arnold, May 8, 2023

Newton and Shoulders of Giants? Baloney. Is It Everyday Theft?

January 31, 2023

Here I am in rural Kentucky. I have been thinking about the failure of education. I recall learning from Ms. Blackburn, my high school algebra teacher, this statement by Sir Isaac Newton, the apple and calculus guy:

If I have seen further, it is by standing on the shoulders of giants.

Did Sir Isaac actually say this? I don’t know, and I don’t care too much. It is the gist of the sentence that matters. Why? I just finished reading — and this is the actual article title — “CNET’s AI Journalist Appears to Have Committed Extensive Plagiarism. CNET’s AI-Written Articles Aren’t Just Riddled with Errors. They Also Appear to Be Substantially Plagiarized.”

How is any self-respecting, super buzzy smart software supposed to know anything without ingesting, indexing, vectorizing, and any other math magic the developers have baked into the system? Did Brunelleschi wake up one day and do the Eureka! thing? Maybe he stood on line and entered the Pantheon and looked up? Maybe he found a wasp’s nest and cut it in half and looked at what the feisty insects did to build a home? Obviously intellectual theft. Just because the dome still stands, when it falls, he is an untrustworthy architect engineer. Argument nailed.

The write up focuses on other ideas; namely, being incorrect and stealing content. Okay, those are interesting and possibly valid points. The write up states:

All told, a pattern quickly emerges. Essentially, CNET‘s AI seems to approach a topic by examining similar articles that have already been published and ripping sentences out of them. As it goes, it makes adjustments — sometimes minor, sometimes major — to the original sentence’s syntax, word choice, and structure. Sometimes it mashes two sentences together, or breaks one apart, or assembles chunks into new Frankensentences. Then it seems to repeat the process until it’s cooked up an entire article.

For a short (very, very brief) time I taught freshman English at a big time university. What the Futurism article describes is how I interpreted the work process of my students. Those entitled and enquiring minds just wanted to crank out an essay that would meet my requirements and hopefully get an A or a 10, which was a signal that Bryce or Helen was a very good student. Then go to a local hang out and talk about Heidegger? Nope, mostly about the opposite sex, music, and getting their hands on a copy of Dr. Oehling’s test from last semester for European History 104. Substitute the topics you talked about to make my statement more “accurate”, please.

I loved the final paragraphs of the Futurism article. Not only is a competitor tossed over the argument’s wall, but the Google and its outstanding relevance finds itself a target. Imagine. Google. Criticized. The article’s final statements are interesting; to wit:

As The Verge reported in a fascinating deep dive last week, the company’s primary strategy is to post massive quantities of content, carefully engineered to rank highly in Google, and loaded with lucrative affiliate links. For Red Ventures, The Verge found, those priorities have transformed the once-venerable CNET into an “AI-powered SEO money machine.” That might work well for Red Ventures’ bottom line, but the specter of that model oozing outward into the rest of the publishing industry should probably alarm anybody concerned with quality journalism or — especially if you’re a CNET reader these days — trustworthy information.

Do you like the word trustworthy? I do. Does Sir Isaac fit into this future-leaning analysis? Nope, he’s still pre-occupied with proving that the evil Gottfried Wilhelm Leibniz was tipped off about tiny rectangles and the methods thereof. Perhaps Futurism can blame smart software?

Stephen E Arnold, January 31, 2023

The Failure of Search: Let Many Flowers Bloom and… Die Alone and Sad

November 1, 2022

I read “Taxonomy is Hard.” No argument from me. Yesterday (October 31, 2022) I spoke with a long time colleague and friend. Our conversations usually include some discussion about the loss of the expertise embodied in the early commercial database firms. The old frameworks, work processes, and shared beliefs among the top 15 or 20 for fee online database companies seem to have scattered and recycled in a quantum crazy digital world. We did not mention Google once, but we could have. My colleague and I agreed on several points:

- Those who want to make digital information must have an informing editorial policy; that is, what’s the content space, what’s included, what’s excluded, and what problem does the commercial database solve

- Finding information today is more difficult than it has been in our two professional lives. We don’t know if the data are current and accurate (online corrections when publications issue fixes), fit within the editorial policy if there is one or the lack of policy shaped by the invisible hand of politics, advertising, and indifference to intellectual nuances. In some services, “old” data are disappeared presumably due to the cost of maintaining, updating if that is actually done, and working out how to make in depth queries work within available time and budget constraints

- The steady erosion of precision and recall as reliable yardsticks for determining what a search system can find within a specific body of content

- Professional indexing and content curation is being compressed or ignored by many firms. The process is expensive, time consuming, and intellectually difficult.
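
For readers who never had to report the yardsticks in the third point above, precision and recall can be sketched as follows. The document identifiers are invented, and the sketch assumes someone has already judged which documents are relevant, which is exactly the expensive human work the fourth point describes.

```python
def precision_recall(retrieved, relevant):
    """Compute precision and recall for one query.

    precision = relevant documents retrieved / all documents retrieved
    recall    = relevant documents retrieved / all relevant documents
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = retrieved & relevant
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    recall = len(hits) / len(relevant) if relevant else 0.0
    return precision, recall

# Invented result list and invented relevance judgments.
retrieved = ["doc1", "doc2", "doc3", "doc4"]
relevant = ["doc2", "doc4", "doc7", "doc8", "doc9"]

p, r = precision_recall(retrieved, relevant)
print(p, r)  # 0.5 0.4
```

Half of what came back was useful, and more than half of the useful material never came back at all. Without a known body of content and relevance judgments, neither number can be computed, which is one reason the yardsticks have eroded.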

The cited article reflects some of these issues. However, the mirror is shaped by the systems and methods in use today. The approaches pivot on metadata (index terms) and tagging (more indexing). The approach is understandable. The shift to technology which slashes the need for subject matter experts, manual methods, meetings about specific terms or categories, and the other impedimenta is the new normal.

A couple of observations:

- The problems of social media boil down to editorial policies. Without these guard rails and the specialists needed to maintain them, finding specific items of information on widely used platforms like Facebook, TikTok, or Twitter, among others is difficult

- The challenges of processing video are enormous. The obvious fix is to gate the volume and implement specific editorial guidelines before content is made available to a user. Skipping this basic work task leads to the craziness evident in many services today

- Indexing can be supplemented by smart software. However, that smart software can drift off course, so specialists have to intervene and recalibrate the system.

- Semantic, statistical, or behavior centric methods for identifying and suggesting possible relevant content require the same expert centric approach. There is no free lunch in automated indexing, even for narrow vocabulary technical fields like nuclear physics or engineered materials. What smart software knows how to deal with new breakthroughs in physics which emerge from the study of inter cell behavior among proteins in the human brain?

Net net: Is it time to re-evaluate some discarded systems and methods? Is it time to accept the fact that technology cannot solve in isolation certain problems? Is it time to recognize that close enough for horseshoes and good enough are not appropriate when it comes to knowledge centric activities? Search engines die when the information garden cannot support the buds and shoots of finding useful information the user seeks.

Stephen E Arnold, November 1, 2022

Semantics and the Web: A Snort of Pisco?

November 16, 2021

I read a transcript for the video called “Semantics and the Web: An Awkward History.” I have done a little work in the semantic space, including a stint as an advisor to a couple of outfits. I signed confidentiality agreements with the firms and even though both have entered the well-known Content Processing Cemetery, I won’t name these outfits. However, I thought of the ghosts of these companies as I worked my way through the transcript. I don’t think I will have nightmares, but my hunch is that investors in these failed outfits may have bad dreams. A couple may experience post traumatic stress. Hey, I am just suggesting people read the document, not go bonkers over its implications in our thumbtyping world.

I want to highlight a handful of gems I identified in the write up. If I get involved in another world-saving semantic project, I will want to have these in my treasure chest.

First, I noted this statement:

“Generic coding”, later known as markup, first emerged in the late 1960s, when William Tunnicliffe, Stanley Rice, and Norman Scharpf got the ideas going at the Graphics Communication Association, the GCA. Goldfarb’s implementations at IBM, with his colleagues Edward Mosher and Raymond Lorie, the G, M, and L, made him the point person for these conversations.

What’s not mentioned is that some in the US government became quite enthusiastic. Imagine the benefit of putting tags in text and providing electronic copies of documents. Much better than loose-leaf notebooks. I wish I had a penny for every time I heard this statement. How does the government produce documents today? The only technology not in wide use is hot metal type. It’s been, what, a half century?

Second, I circled this passage:

SGML included a sample vocabulary, built on a model from the earliest days of GML. The American Association of Publishers and others used it regularly.

Indeed wonderful. The phrase “slicing and dicing” captured the essence of SGML. Why have human editors? Use SGML. Extract chunks. Presto! A new book. That worked really well but for one drawback: The proliferating wild and crazy “books” were tough to sell. Experts in SGML were and remain a rare breed of cat. There were SGML ecosystems but adding smarts to content was and remains a work in progress. Yes, I am thinking of Snorkel too.

Third, I like this observation too:

Dumpsters are available in a variety of sizes and styles. To be honest, though, these have always been available. Demolition of old projects, waste, and disasters are common and frequent parts of computing.

The Web as well as social media are dumpsters. Let’s toss in TikTok type videos too. I think meta meta tags can burn in our cherry red garbage container. Why not?

What do these observations have to do with “semantics”?

- Move from SGML to XML. Much better. Allow XML to run some functions. Yes, great idea.

- Create a way to allow content objects to be anywhere. Just pull them together. Was this the precursor to micro services?

- One major consequence of tagging or the lack of it or just really lousy tagging, marking up, and relying on software allegedly doing the heavy lifting is an active demand for a way to “make sense” of content. The problem is that an increasing amount of content is non textual. Ooops.

What’s the fix? The semantic Web revivified? The use of pre-structured, by golly, correct mark up editors? A law that says students must learn how to mark up and tag? (Problem: Schools don’t teach math and logic anymore. Oh, well, there’s an online course for those who don’t understand consistency and rules.)

The write up makes clear there are numerous opportunities for innovation. And the non-textual information. Academics have some interesting ideas. Why not go SAILing or revisit the world of semantic search?

Stephen E Arnold, November 16, 2021

Exposing Big Data: A Movie Person Explains Fancy Math

April 16, 2021

I am not “into” movies. Some people are. I knew a couple of Hollywood types, but I was dumbfounded by their thought processes. One of these professionals dreamed of crafting a motion picture about riding a boat powered by the wind. I think I understand because I skimmed one novel by Herman Melville, who grew up with servants in the house. Yep, in touch with the real world of fish and storms at sea.

However, perhaps an exception is necessary. A movie type offered some interesting ideas in the BBC “real” news story “Documentary Filmmaker Adam Curtis on the Myth of Big Data’s Predictive Power: It’s a Modern Ghost Story.” Note: This article is behind a paywall designed to compensate content innovators for their highly creative work. You have been warned.

Here are several statements I circled in bright True Blue marker ink:

- “The best metaphor for it is that Amazon slogan, which is: ‘If you like that, then you’ll like this,'” [Adam] Curtis [the documentary film maker]

- [Adam Curtis] pointed to the US National Security Agency’s failure to intercept a single terrorist attack, despite monitoring the communications of millions of Americans for the better part of two decades.

- [Big data and online advertising] “a bit like sending someone with a flyer advertising pizzas to the lobby of a pizza restaurant,” said Curtis. “You give each person one of those flyers as they come into the restaurant and they walk out with a pizza. It looks like it’s one of your flyers that’s done it. But it wasn’t – it’s a pizza restaurant.”

Maybe I should pay more attention to the filmic mind. These observations strike me as accurate.

Predictive analytics, fancy math, and smart software? Ghosts.

But what if ghosts are real?

Stephen E Arnold, April 16, 2021

MIT Deconstructs Language

April 14, 2021

I got a chuckle from the MIT Technology Review write up “Big Tech’s Guide to Talking about AI Ethics.” The core of the write up is a list of token words like “framework”, “transparency”, “by design”, “progress”, and “trustworthy.” The idea is that instead of explaining the craziness of smart software with phrases like “yeah, the intern who set up the thresholds is now studying Zen in Denver” or “the lady in charge of that project left in weird circumstances but I don’t follow that human stuff,” the big tech outfits which have a generous dollop of grads from outfits like MIT string together token words to explain what 85 percent confidence means. Yeah, think about it when you ask your pediatrician if the antidote given your child will work. Here’s the answer most parents want to hear: “Ashton will be just fine.” Parents don’t want to hear, “probably 15 out of every 100 kids getting this drug will die. Close enough for horse shoes.”

The hoot is that I took a look at MIT’s statements about Jeffrey Epstein and the hoo-hah about the money this estimable person contributed to the MIT outfit. Here are some phrases I selected plus their source.

- a thorough review of MIT’s engagements with Jeffrey Epstein (Link to source)

- no role in approving MIT’s acceptance of the donations. (Link to source)

- gifts to the Institute were approved under an informal framework (Link to source)

- for all of us who love MIT and are dedicated to its mission (Link to source)

- this situation demands openness and transparency (Link to source).

Yep, “framework”, “openness,” and “transparency.” Reassuring words like “thorough” and passive voice. Excellent.

Word tokens are worth what exactly?

Stephen E Arnold, April 14, 2021