VPNs, Snake Oil, and Privacy

July 2, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Earlier this year, I had occasion to meet a wild and crazy entrepreneur who told me that he had the next big thing in virtual private networks. I listened to the words and tried to convert the brightly-colored verbal storm into something I could understand. I failed. The VPN, as I recall the Energizer Bunny powered start up impresario saying, needed to be reinvented.

Source: https://www.leviathansecurity.com/blog/tunnelvision

I knew that the individual’s knowledge of VPNs was, how shall I phrase it, limited. As an educational outreach, I forwarded to the person who wants to be really, really rich the article “Novel Attack against Virtually All VPN Apps Neuters Their Entire Purpose.” The write up focuses on an exploit which compromises the “secrecy” the VPN user desires. I hope the serial entrepreneur notes this passage:

“The attacker can read, drop or modify the leaked traffic and the victim maintains their connection to both the VPN and the Internet.”

Technical know how is required, but the point is that a VPN is often:

- A tool to capture data about the VPN user and other quite interesting metadata. These data are then used for marketing, search engine optimization, or simple information monitoring.

- A way to get from a VPN hungry customer a credit card which can be billed every month for a long, long time. The customer believes a VPN adds security when zipping around from Web site to online service. Ignorance is bliss, and these VPN customers are usually happy.

- A large-scale industrial operation which sells VPN services to repackagers who buy bulk VPN bandwidth and sell it high. The winner is the “enabler” or specialized hosting provider who delivers a vanilla VPN service on the cheap and ignores what the resellers say and do. At one of the law enforcement / intel conferences I attended I heard someone mention the name of an ISP in Romania. I think the name of this outfit was M247 or something similar. Is this a large scale VPN utility? I don’t know, but I may take a closer look because Romania is an interesting country with some interesting online influencers who are often in the news.

The write up includes quite a bit of technical detail. There is one interesting factoid that I took care to highlight for the VPN oriented entrepreneur:

Interestingly, Android is the only operating system that fully immunizes VPN apps from the attack because it doesn’t implement option 121. For all other OSes, there are no complete fixes. When apps run on Linux there’s a setting that minimizes the effects, but even then TunnelVision can be used to exploit a side channel that can be used to de-anonymize destination traffic and perform targeted denial-of-service attacks. Network firewalls can also be configured to deny inbound and outbound traffic to and from the physical interface. This remedy is problematic for two reasons: (1) a VPN user connecting to an untrusted network has no ability to control the firewall and (2) it opens the same side channel present with the Linux mitigation. The most effective fixes are to run the VPN inside of a virtual machine whose network adapter isn’t in bridged mode or to connect the VPN to the Internet through the Wi-Fi network of a cellular device.

What’s this mean? In a nutshell, Google did something helpful. By design or by accident? I don’t know. You pick the option that matches your perception of the Android mobile operating system.

This passage includes one of those observations which could be helpful to the aspiring bad actor. Run the VPN inside of a virtual machine whose network adapter is not in bridged mode, or connect to the Internet via the Wi-Fi network of a cellular device.
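For readers who want to see why an extra route matters, here is a minimal sketch of longest-prefix route selection, the behavior the quoted passage depends on. DHCP option 121 is the classless static route option; the interface names (`tun0`, `wlan0`), the route tables, and the destination address are hypothetical values chosen for illustration, and real operating systems weigh more than prefix length.

```python
import ipaddress


def pick_route(routes, destination):
    """Return the (network, interface) pair with the longest matching prefix."""
    dest = ipaddress.ip_address(destination)
    matches = [(net, iface) for net, iface in routes if dest in net]
    return max(matches, key=lambda pair: pair[0].prefixlen)


# Hypothetical table after a VPN client installs its default route.
vpn_routes = [
    (ipaddress.ip_network("0.0.0.0/0"), "tun0"),
]

# Hypothetical routes a rogue DHCP server might push via option 121.
# Each is more specific than the VPN's /0, so each wins the lookup.
rogue_routes = [
    (ipaddress.ip_network("0.0.0.0/1"), "wlan0"),
    (ipaddress.ip_network("128.0.0.0/1"), "wlan0"),
]

before = pick_route(vpn_routes, "93.184.216.34")
after = pick_route(vpn_routes + rogue_routes, "93.184.216.34")
print("before:", before[1], "after:", after[1])
```

The VPN tunnel stays up in both cases. Only the interface chosen for the packet changes, which is why the victim may notice nothing.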

Several observations are warranted:

- The idea of a “private network” is not new. A good question to pose is, “Is there a way to create a private network that cannot be detected using conventional traffic monitoring and sniffing tools?” Could that be the next big thing for some online services designed for bad actors?

- The lack of knowledge about VPNs makes it possible for data harvesters and worse to offer free or low cost VPN service and bilk some customers out of their credit card data and money.

- Bad actors are — at some point — going to invest time, money, and programming resources in developing a method to leapfrog the venerable and vulnerable VPN. When that happens, excitement will ensue.

Net net: Is there a solution to VPN trickery? Sure, but that involves many moving parts. I am not holding my breath.

Stephen E Arnold, July 2, 2024

The TikTok Flap: Wings on a Locomotive?

March 20, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I find the TikTok flap interesting. The app was purposeless until someone discovered that pre-teens and those with similar mental architecture would watch short videos on semi-forbidden subjects; for instance, see-through dresses, the thrill of synthetic opioids, updating the Roman vomitorium for a quick exit from parental reality, and the always-compelling self-harm presentations. But TikTok is not just a content juicer; it can provide some useful data in its log files. Cross correlating these data can provide some useful insights into human behavior. Slicing geographically makes it possible to do wonderful things. Apply some filters and a psychological profile can be output from a helpful intelware system. Whether these types of data surfing take place is not important to me. The infrastructure exists and can be used (with or without authorization) by anyone with access to the data.

Like bird wings on a steam engine, the ban on TikTok might not fly. Thanks, MSFT Copilot. How is your security revamp coming along?

What’s interesting to me is that the US Congress took action to make some changes in the TikTok business model. My view is that social media services required pre-emptive regulation when they first poked their furry, smiling faces into young users’ immature brains. I gave several talks about the risks of social media online in the 1990s. I even suggested remediating actions at the open source intelligence conferences operated by Major Robert David Steele, a former CIA professional and conference entrepreneur. As I recall, no one paid any attention. I am not sure anyone knew what I was talking about. Intelligence, then, was not into the strange new thing of open source intelligence and weaponized content.

Flash forward to 2024. After the US government geared up to “ban” TikTok or “force ByteDance” to divest itself of the app, many interesting opinions flooded the poorly maintained and rapidly deteriorating information highway. I want to highlight two of these write ups, their main points, and offer a few observations. (I understand that no one cared 30 years ago, but perhaps a few people will pay attention as I write this on March 16, 2024.)

The first write up is “A TikTok Ban Is a Pointless Political Turd for Democrats.” The language sets the scene for the analysis. I think the main point is:

Banning TikTok, but refusing to pass a useful privacy law or regulate the data broker industry is entirely decorative. The data broker industry routinely collects all manner of sensitive U.S. consumer location, demographic, and behavior data from a massive array of apps, telecom networks, services, vehicles, smart doorbells and devices (many of them *gasp* built in China), then sells access to detailed data profiles to any nitwit with two nickels to rub together, including Chinese, Russian, and Iranian intelligence. Often without securing or encrypting the data. And routinely under the false pretense that this is all ok because the underlying data has been “anonymized” (a completely meaningless term). The harm of this regulation-optional surveillance free-for-all has been obvious for decades, but has been made even more obvious post-Roe. Congress has chosen, time and time again, to ignore all of this.

The second write up is “The TikTok Situation Is a Mess.” This write up eschews the colorful language of the TechDirt essay. Its main point, in my opinion, is:

TikTok clearly has a huge influence over a massive portion of the country, and the company isn’t doing much to actually assure lawmakers that situation isn’t something to worry about.

Thus, the article makes clear its concern about the outstanding individuals serving in a representative government in Washington, DC, the true home of ethical behavior in the United States:

Congress is a bunch of out-of-touch hypocrites.

What do I make of these essays? Let me share my observations:

- It is too late to “fix up” the TikTok problem or clean up the DC “mess.” The time to act was decades ago.

- Virtual private networks and more sophisticated “get around” technology will be tapped by fifth graders so the short form videos about forbidden subjects can be consumed. How long will it take a savvy fifth grader to “teach” her classmates about a point-and-click VPN? Two or three minutes. Will the hungry minds recall the information? Yep.

- The idea that “privacy” has not been regulated in the US is a fascinating point. Who exactly was pro-privacy in the wake of 9/11? Who exactly declined to use Google’s services as information about the firm’s data hoovering surfaced in the early 2000s? I will not provide the answer to this question because Google’s 90 percent plus share of the online search market presents the answer.

Net net: TikTok is one example of software with a penchant for capturing data and retaining those data in a form which can be processed for nuggets of information. One can point to Alibaba.com, CapCut.com, Temu.com or my old Huawei mobile phone which loved to connect to servers in Singapore until our fiddling with the device killed it dead.

Stephen E Arnold, March 20, 2024

Worried about TikTok? Do Not Overlook CapCut

March 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I find the excitement about TikTok interesting. The US wants to play the reciprocity card; that is, China disallows US apps so the US can ban TikTok. How influential is TikTok? US elected officials learned first hand that TikTok users can get messages through to what is often a quite unresponsive cluster of elected officials. But let’s leave TikTok aside.

Thanks, MSFT Copilot. Good enough.

What do you know about the ByteDance cloud software CapCut? Ah, you have never heard of it. That’s not surprising because it is aimed at those who make videos for TikTok (big surprise) and other video platforms like YouTube.

CapCut has been gaining supporters like the happy-go-lucky people who published “how to” videos about CapCut on YouTube. On TikTok, CapCut short form videos have tallied billions of views. What makes it interesting to me is that it wants to phone home, store content in the “cloud”, and provide high-end tools to handle some tricky video situations like weird backgrounds on AI generated videos.

The product CapCut was named (I believe) JianYing or Viamaker (the story varies by source) which means nothing to me. The Google suggests its meanings could range from hard to paper cut out. I am not sure I buy these suggestions because Chinese is a linguistic slippery fish. Is that a question or a horse? In 2020, the app got a bit of shove into the world outside of the estimable Middle Kingdom.

Why is this important to me? Here are my reasons for creating this short post:

- Based on my tests of the app, it has some of the same data hoovering functions of TikTok

- The data of images and information about the users provides another source of potentially high value information to those with access to the information

- Data from “casual” videos might be quite useful when the person making the video has landed a job in a US national laboratory or in one of the high-tech playgrounds in Silicon Valley. Am I suggesting blackmail? Of course not, but a release of certain imagery might be an interesting test of the videographer’s self-esteem.

If you want to know more about CapCut, try these links:

- Download (ideally to a burner phone or a PC specifically set up to test interesting software) at www.capcut.com

- Read about the company CapCut in this 2023 Recorded Future write up

- Learn about CapCut’s privacy issues in this Bloomberg story.

Net net: Clever stuff, but who is paying attention? Parents? Regulators? Chinese intelligence operatives?

Stephen E Arnold, March 18, 2024

Meta Never Met a Kid Data Set It Did Not Find Useful

January 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Adults are ripe targets for data exploitation in modern capitalism. While adults fight for their online privacy, most have rolled over and accepted the inevitable consumer Big Brother. When big tech companies go after monetizing kids, however, that’s when adults fight back like rabid bears. Engadget writes about how Meta is fighting against the federal government about kids’ data: “Meta Sues FTC To Block New Restrictions On Monetizing Kids’ Data.”

Meta is taking the FTC to court to prevent the agency from reopening a landmark $5 billion privacy case from 2020 and to allow the company to monetize kids’ data on its apps. Meta is suing because a federal judge ruled that the FTC can expand the 2020 settlement with new, more stringent rules about how Meta is allowed to conduct business.

Meta claims the FTC is out for a power grab and is acting unconstitutionally, while the FTC reports the claimant has consistently violated the 2020 settlement and the Children’s Online Privacy Protection Act. The FTC wants its new rules to limit Meta’s facial recognition usage and initiate a moratorium on new products and services until a third party audits them for privacy compliance.

Meta is not a huge fan of the US Federal Trade Commission:

“The FTC has been a consistent thorn in Meta’s side, as the agency tried to stop the company’s acquisition of VR software developer Within on the grounds that the deal would deter ‘future innovation and competitive rivalry.’ The agency dropped this bid after a series of legal setbacks. It also opened up an investigation into the company’s VR arm, accusing Meta of anti-competitive behavior.”

The FTC is doing what government agencies are supposed to do: protect citizens from greedy and harmful practices like those of big business. The FTC can enforce laws and force big businesses to pay fines, put leaders in jail, or even shut them down. But regulators have spent decades ramping up to take meaningful action. The result? The thrashing over kiddie data.

Whitney Grace, January 5, 2024

Mastercard and Customer Information: A Lone Ranger?

October 26, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-24.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

In my lectures, I often include a pointer to sites selling personal data. Earlier this month, I explained that the clever founder of Frank Financial acquired email information about high school students from two off-the-radar data brokers. These data were mixed with “real” high school student email addresses to provide a frothy soup of more than a million email addresses. These looked okay. The synthetic information was “good enough” to cause JPMorgan Chase to output a bundle of money to the alleged entrepreneur winners.

A fisherman chasing a slippery eel named Trust. Thanks, MidJourney. You do have a knack for recycling Godzilla art, don’t you?

I thought about JPMorgan Chase when I read “Mastercard Should Stop Selling Our Data.” The article makes clear that Mastercard sells its customers’ (users’?) data. Mastercard is a financial institution. JPMC is a financial institution. One sells information; the other gets snookered by data. I assume that’s the yin and yang of doing business in the US.

The larger question is, “Are financial institutions operating in a manner harmful to themselves (JPMC) and harmful to others (personal data about Mastercard customers (users?))?” My hunch is that today I am living in an “anything goes” environment. Would the Great Gatsby be even greater today? Why not own Long Island and its railroad? That sounds like a plan similar to those of high fliers, doesn’t it?

The cited article has a bias. The Electronic Frontier Foundation is allegedly looking out for me. I suppose that’s a good thing. The article aims to convince me; for example:

the company’s position as a global payments technology company affords it “access to enormous amounts of information derived from the financial lives of millions, and its monetization strategies tell a broader story of the data economy that’s gone too far.” Knowing where you shop, just by itself, can reveal a lot about who you are. Mastercard takes this a step further, as U.S. PIRG reported, by analyzing the amount and frequency of transactions, plus the location, date, and time to create categories of cardholders and make inferences about what type of shopper you may be. In some cases, this means predicting who’s a “big spender” or which cardholders Mastercard thinks will be “high-value”—predictions used to target certain people and encourage them to spend more money.
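The kind of categorization described in that passage does not require exotic technology. Here is a minimal sketch, assuming nothing about Mastercard's actual methods: the transactions, the thresholds (`big_spender_avg`, `frequent_count`), and the category labels are all hypothetical and exist only to show how amount and frequency can be turned into a shopper label.

```python
from collections import defaultdict

# Hypothetical transactions: (cardholder_id, amount_usd).
transactions = [
    ("A", 1200.0), ("A", 950.0),
    ("B", 12.5), ("B", 8.0), ("B", 15.0),
    ("C", 300.0),
]


def segment(rows, big_spender_avg=500.0, frequent_count=3):
    """Label each cardholder from average amount and transaction count."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for holder, amount in rows:
        totals[holder] += amount
        counts[holder] += 1

    labels = {}
    for holder in totals:
        average = totals[holder] / counts[holder]
        if average >= big_spender_avg:
            labels[holder] = "big spender"
        elif counts[holder] >= frequent_count:
            labels[holder] = "frequent small buyer"
        else:
            labels[holder] = "occasional"
    return labels


labels = segment(transactions)
print(labels)
```

Add location, date, and time to each row and the same loop starts producing the inferences the EFF article worries about.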

Are outfits like Chase Visa selling their customer (user) data? (Yep, the same JPMC whose eagle eyed acquisitions team could not identify synthetic data and which enables some Amazon credit card activities.) Also, what about men-in-the-middle like Amazon? The data from its much-loved online shopping, book store, and content brokering service might be valuable to some, I surmise. How much would an entity pay for information about an Amazon customer who purchased item X (a 3D printer) and purchased Kindle books about firearm related topics?

The EFF article uses a word which gives me the willies: Trust. For a time, when I was working in different government agencies, the phrase “trust but verify” was in wide use. Am I able to trust the EFF and its interpretation from a unit of the Public Interest Network? Am I able to trust a report about data brokering? Am I able to trust an outfit like JPMC?

My thought is that if JPMC itself can be fooled by a 31 year old and a specious online app, “trust” is not the word I can associate with any entity’s action in today’s business environment.

This dinobaby is definitely glad to be old.

Stephen E Arnold, October 26, 2023

Google and Its Use of the Word “Public”: A Clever and Revenue-Generating Policy Edit

July 6, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-9.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

If one has the cash, one can purchase user-generated data from more than 500 data publishers in the US. Some of these outfits are unknown. When a liberal Wall Street Journal reporter learns about Venntel or one of these outfits, outrage ensues. I am not going to explain how data from a user finds its way into the hands of a commercial data aggregator or database publisher. Why not Google it? Let me know how helpful that research will be.

Why are these outfits important? The reasons include:

- Direct from app information obtained when a clueless mobile user accepts the Terms of Use. Do you hear the slurping sounds?

- Organizations with financial data and savvy data wranglers who cross correlate data from multiple sources.

- Outfits which assemble real-time or near-real-time user location data. How useful are those data in identifying military locations with a population of individuals who exercise wearing helpful heart and step monitoring devices?

Navigate to “Google’s Updated Privacy Policy States It Can Use Public Data to Train its AI Models.” The write up does not make clear what “public data” are. My hunch is that the Google is not exceptionally helpful with its definitions of important “obvious” concepts. The disconnect is the point of the policy change. Public data or third-party data can be purchased, licensed, used on a cloud service like an Oracle-like BlueKai clone, or obtained as part of a commercial deal with everyone’s favorite online service LexisNexis or one of its units.

A big advertiser demonstrates joy after reading about Google’s detailed prospect targeting reports. Dossiers of big buck buyers are available to those relying on Google for online text and video sales and marketing. The image of this happy media buyer is from the elves at MidJourney.

The write up states with typical Silicon Valley “real” news flair:

By updating its policy, it’s letting people know and making it clear that anything they publicly post online could be used to train Bard, its future versions and any other generative AI product Google develops.

Okay. “the weekend” mentioned in the write up is the 4th of July weekend. Is this a hot news or a slow news time? If you picked “hot”, you are respectfully wrong.

Now back to “public.” Think in terms of Google’s licensing third-party data, cross correlating those data with its log data generated by users, and any proprietary data obtained by Google’s Android or Chrome software, Gmail, its office apps, and any other data which a user clicking one of those “Agree” boxes cheerfully mouses through.

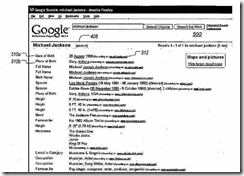

The idea appears, if the information is accurate, in Google patent US7774328 B2. What’s interesting is that this granted patent does not include a quite helpful figure from the patent application US2007 0198481. Here’s the 16 year old figure. The subject is Michael Jackson. The text is difficult to read (write your Congressman or Senator to complain). The output is a machine generated dossier about the pop star. Note that it includes aliases. Other useful data are in the report. The granted patent presents more vanilla versions of the dossier generator, however.

The use of “public” data may enhance the type of dossier or other meaty report about a person. How about a map showing the travels of a person prior to providing a geo-fence about an individual’s location on a specific day and time? Useful for some applications? If these “inventions” are real, then the potential use cases are interesting. Advertisers will probably be interested. Can you think of other use cases? I can.

The cited article focuses on AI. I think that more substantive use cases fit nicely with the shift in “policy” for public data. Have you asked yourself, “What will Mandiant professionals find interesting in cross correlated data?”

Stephen E Arnold, July 6, 2023

Datasette: Useful Tool for Crime Analysts

February 15, 2023

If you want to explore data sets, you may want to take a look at the “open source multi-tool for exploring and publishing data.” The Datasette Swiss Army knife “is a tool for exploring and publishing data.”

The company says,

It helps people take data of any shape, analyze and explore it, and publish it as an interactive website and accompanying API. Datasette is aimed at data journalists, museum curators, archivists, local governments, scientists, researchers and anyone else who has data that they wish to share with the world. It is part of a wider ecosystem of 42 tools and 110 plugins dedicated to making working with structured data as productive as possible.

A handful of demos are available. Worth a look.
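For an analyst who wants something to point the tool at, here is a minimal sketch that builds a small SQLite file with Python's standard library. Datasette serves SQLite databases, so a file like this is a reasonable starting point. The file name `incidents.db`, the table layout, and the three sample rows are hypothetical and are not drawn from any real data set.

```python
import sqlite3


def build_incident_db(path):
    """Create a tiny SQLite database with a hypothetical incidents table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS incidents ("
        "id INTEGER PRIMARY KEY, category TEXT, district TEXT, reported TEXT)"
    )
    conn.execute("DELETE FROM incidents")  # keep reruns from duplicating rows
    conn.executemany(
        "INSERT INTO incidents (category, district, reported) VALUES (?, ?, ?)",
        [
            ("burglary", "North", "2023-01-04"),
            ("fraud", "North", "2023-01-09"),
            ("vandalism", "South", "2023-01-11"),
        ],
    )
    conn.commit()
    return conn


conn = build_incident_db("incidents.db")
by_district = conn.execute(
    "SELECT district, COUNT(*) FROM incidents GROUP BY district ORDER BY district"
).fetchall()
conn.close()
print(by_district)
```

With Datasette installed, running `datasette incidents.db` from a shell should then serve the table for browsing in a Web browser.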

Stephen E Arnold, February 15, 2023

The Internet: Cue the Music. Hit It, Regrets, I Have Had a Few

December 21, 2022

I have been around online for a few years. I know some folks who were involved in creating what is called “the Internet.” I watched one of these luminaries unbutton his shirt and display a tee with the message, “TCP on everything.” Cute, cute, indeed. (I had the task of introducing this individual only to watch the disrobing and the P on everything joke. Tip: It was not a joke.)

Imagine my reaction when I read “Inventor of the World Wide Web Wants Us to Reclaim Our Data from Tech Giants.” The write up states:

…in an era of growing concern over privacy, he believes it’s time for us to reclaim our personal data.

Who wants this? Tim Berners-Lee and a startup. Is this content marketing or a sincere effort to derail the core functionality of ad trackers, beacons, cookies which expire in 99 years, etc., etc.?

The article reports:

Berners-Lee hopes his platform will give control back to internet users. “I think the public has been concerned about privacy — the fact that these platforms have a huge amount of data, and they abuse it,” he says. “But I think what they’re missing sometimes is the lack of empowerment. You need to get back to a situation where you have autonomy, you have control of all your data.”

The idea is that Web 3 will deliver a different reality.

Do you remember this lyric:

Yes, there were times I’m sure you knew

When I bit off more than I could chew

But through it all, when there was doubt

I ate it up and spit it out

I faced it all and I stood tall and did it my way.

The “my” becomes big tech, and “it” is the information highway. There’s no exit, no turnaround, and no real chance of change before I log off for the final time.

Yeah, digital regrets. How’s that working out at Amazon, Facebook, Google, Twitter, and Microsoft among others? Unintended consequences and now the visionaries are standing tall on piles of money and data.

Change? Sure, right away.

Stephen E Arnold, December 21, 2022

TikTok: Algorithmic Data Slurping

November 14, 2022

There are several reasons TikTok rocketed to social-media dominance in just a few years. For example, its user friendly creation tools plus a library of licensed tunes make it easy to create engaging content. Then there was the billion-dollar marketing campaign that enticed users away from Facebook and Instagram. But, according to the Guardian, it was the recommendation engine behind its For You Page (FYP) that really did the trick. Writer Alex Hern describes “How TikTok’s Algorithm Made It a Success: ‘It Pushes the Boundaries.’” He tells us:

“The FYP is the default screen new users see when opening the app. Even if you don’t follow a single other account, you’ll find it immediately populated with a never-ending stream of short clips culled from what’s popular across the service. That decision already gave the company a leg up compared to the competition: a Facebook or Twitter account with no friends or followers is a lonely, barren place, but TikTok is engaging from day one. It’s what happens next that is the company’s secret sauce, though. As you scroll through the FYP, the makeup of videos you’re presented with slowly begins to change, until, the app’s regular users say, it becomes almost uncannily good at predicting what videos from around the site are going to pique your interest.”

And so a user is hooked. Beyond the basics, specifically how the algorithm works is a mystery even, we’re told, to those who program it. We do know the AI takes the initiative. Instead of only waiting for users to select a video or tap a reaction, it serves up test content and tweaks suggestions based on how its suggestions are received. This approach has another benefit. It ensures each video posted on the platform is seen by at least one user, and every positive interaction multiplies its reach. That is how popular content creators quickly amass followers.
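The "serve test content, then adjust" loop can be illustrated with a textbook epsilon-greedy sketch. This is not TikTok's algorithm, which the article says is opaque even to insiders. The class name `EpsilonGreedyFeed`, the three categories, the 20 percent exploration rate, and the simulated user who likes only dance clips are all assumptions made for the example.

```python
import random


class EpsilonGreedyFeed:
    """Mostly show the best-performing category; sometimes test another."""

    def __init__(self, categories, epsilon=0.2, seed=0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shown = {c: 0 for c in categories}
        self.liked = {c: 0 for c in categories}

    def score(self, category):
        # Observed like rate; a category never shown scores 0.0.
        shown = self.shown[category]
        return self.liked[category] / shown if shown else 0.0

    def next_category(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shown))  # test content
        return max(self.shown, key=self.score)  # best guess so far

    def record(self, category, liked):
        self.shown[category] += 1
        if liked:
            self.liked[category] += 1


feed = EpsilonGreedyFeed(["cooking", "dance", "cars"])
for _ in range(500):
    category = feed.next_category()
    # Hypothetical user who reacts positively only to dance clips.
    feed.record(category, liked=(category == "dance"))

print(feed.shown)
```

The occasional random pick is what lets the feed discover an interest the user never declared, and the like rate is what makes the feed narrow in on it afterward.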

Success can be measured in different ways, of course. Though TikTok has captured a record number of users, it is not doing so well in the critical monetization category. Estimates put its 2021 revenue at less than 5% of Facebook’s, and efforts to export its e-commerce component have not gone as hoped. Still, it looks like the company is ready to try, try again. Will its persistence pay off?

Cynthia Murrell, November 14, 2022

TikTok: Allegations of Data Sharing with China! Why?

June 21, 2022

If one takes a long view about an operation, some planners find information about the behavior of children or older, yet immature, creatures potentially useful. What if a teenager puts up a TikTok video presenting allegedly “real” illegal actions? Might that teen in three or four years be a target for soft persuasion? Leaking the video to an employer? No, of course not. Who would take such an action?

I read “Leaked Audio from 80 Internal TikTok Meetings Shows That US User Data Has Been Repeatedly Accessed from China.” Let’s assume that this allegation has a tiny shred of credibility. The financially-challenged Buzzfeed might be angling for clicks. Nevertheless, I noted this passage:

…according to leaked audio from more than 80 internal TikTok meetings, China-based employees of ByteDance have repeatedly accessed nonpublic data about US TikTok users…

Is the audio deeply faked? Could the audio be edited by a budding sound engineer?

Sure.

And what’s with the TikTok “connection” to Oracle? Probably just a coincidence like one of Oracle’s investment units participating in Board meetings for Voyager Labs. A China-linked firm was on the Board for a while. No big deal. Voyager Labs? What does that outfit do? Perhaps it is the Manchester Square office and the delightful restaurants close at hand?

The write up refers to data brokers too. That’s interesting. If a nation state wants app generated data, why not license it? No one pays much attention to “marketing services” which acquire and normalize user data, right?

Buzzfeed tried to reach a wizard at Booz, Allen. That did not work out. Why not drive to Tyson’s Corner and hang out in the Ritz Carlton at lunch time? Get a Booz, Allen expert in the wild.

Yep, China. No problem. Take a longer-term view for creating something interesting like an insider who provides a user name and password. Happens every day and will into the future. Plan ahead I assume.

Real news? Good question.

Stephen E Arnold, June 21, 2022