Google: Good at Quantum and Maybe Better at Discarding Intra-Company Messages

February 28, 2023

Google has already declared quantum supremacy. The supremos have outsupremed themselves, if this story in the UK Independent is accurate:

Okay, supremacy but error problems. Supremacy but a significant shift. Then the word “plague.”

The write up states, in what strikes me as a recyclish Google PR way:

Google researchers say they have found a way of building the technology so that it corrects those errors. The company says it is a breakthrough on a par with its announcement three years ago that it had reached “quantum supremacy”, and represents a milestone on the way to the functional use of quantum computers.

The write up continues:

Dr Julian Kelly, director of quantum hardware at Google Quantum AI, said: “The engineering constraints (of building a quantum computer) certainly are feasible. It’s a big challenge – it’s something that we have to work on, but by no means that blocks us from, for example, making a large-scale machine.”

What seems to be a similar challenge appears in “DOJ Seeks Court Sanctions against Google over Intentional Destruction of Chat Logs.” This write up is less of a rah-rah for the quantum complexity crowd and more about a simpler problem: Retaining employee communications amid the legal issues through which the Google is wading. The write up says:

Google should face court sanctions over “intentional and repeated destruction” of company chat logs that the US government expected to use in its antitrust case targeting Google’s search business, the Justice Department said Thursday [February 23, 2023]. Despite Google’s promises to preserve internal communications relevant to the suit, for years the company maintained a policy of deleting certain employee chats automatically after 24 hours, DOJ said in a filing in District of Columbia federal court. The practice has harmed the US government’s case against the tech giant, DOJ alleged.

That seems clear, certainly clearer than the claim that a result comparing 49 physical qubits with 17 physical qubits is on a par with the quantum supremacy announcement of several years ago.
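Where do 17 and 49 come from? A minimal sketch, assuming the rotated surface code layout used in Google's 2023 error-correction experiment: one logical qubit of code distance d needs d × d data qubits plus d × d − 1 measurement qubits. The function name is hypothetical, chosen for illustration.

```python
def surface_code_qubits(distance: int) -> int:
    """Physical qubits behind one logical qubit in a rotated surface code.

    Assumes distance**2 data qubits plus distance**2 - 1 measure qubits.
    """
    if distance < 1:
        raise ValueError("code distance must be a positive integer")
    data_qubits = distance * distance
    measure_qubits = distance * distance - 1
    return data_qubits + measure_qubits


for d in (3, 5, 7):
    print(d, surface_code_qubits(d))  # 3 -> 17, 5 -> 49, 7 -> 97
```

The qubit bill grows with the square of the code distance, which is why "bigger code, slightly fewer errors" is an expensive kind of progress.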

How can one company be adept at manipulating qubits and mal-adept at saving chat messages? Wait! Wait!

Maybe Google is equally adept: Manipulating qubits and manipulating digital information.

Strike the quantum fluff and focus on the manipulating of information. Is that a breakthrough?

Stephen E Arnold, February 28, 2023

Does a LLaMA Bite? No, But It Can Be Snarky

February 28, 2023

Everyone in Harrod’s Creek knows the name Yann LeCun. The general view is that when it comes to smart software, this wizard wrote or helped write the book. I spotted a tweet thread “LLaMA Is a New *Open-Source*, High-Performance Large Language Model from Meta AI – FAIR.” The link to the Facebook research paper “LLaMA: Open and Efficient Foundation Language Models” explains the innovation for smart software enthusiasts. In a nutshell, the Zuck approach is smaller, faster, and trained only on data available to everyone. Also, it does not require Googzilla-scale hardware for some applications.

That’s the first tip-off that the technical paper has a snarky sparkle. Exactly what data have been used to train Google’s and other large language models? The implicit idea is that while the legal eagles flock to sue over copyright-violating actions, the Zuckers are allegedly flying in clean air.

Here are a few other snarkifications I spotted:

- Use small models trained on more data. The idea is that others train big, Googzilla-sized models on data, some of which is not publicly available

- The Zuck approach uses an “efficient implementation of the causal multi-head attention operator.” The idea is that the Zuck method is more efficient; therefore, better

- In testing performance, the results are all over the place. The reason? The method for determining performance is not very good. Okay, still Meta is better. The implication is that one should trust Facebook. Okay. That’s scientific.

- And cheaper? Sure. There will be fewer legal fees to deal with pesky legal challenges about fair use.
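For readers wondering what a “causal multi-head attention operator” does, here is a naive NumPy sketch, not Meta's code. The causal mask stops each token from looking at tokens that come after it. According to the LLaMA paper, Meta's efficient version (from the xformers library) saves memory and time by not storing the attention weights and by not computing the scores the mask would discard; this sketch computes everything and masks afterward. The weight shapes and function name are illustrative assumptions.

```python
import numpy as np


def causal_multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads):
    """Naive causal multi-head self-attention.

    x: (seq_len, d_model); each weight matrix: (d_model, d_model).
    """
    seq_len, d_model = x.shape
    d_head = d_model // n_heads

    def split(t):
        # (seq_len, d_model) -> (n_heads, seq_len, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    # Scaled dot-product scores: (n_heads, seq_len, seq_len)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    # Causal mask: position i may attend only to positions j <= i
    mask = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)
    # Numerically stable softmax over the key axis
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    # Weighted sum of values, then merge heads back to (seq_len, d_model)
    out = (weights @ v).transpose(1, 0, 2).reshape(seq_len, d_model)
    return out @ w_o


rng = np.random.default_rng(0)
seq_len, d_model, n_heads = 5, 8, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))
y = causal_multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads)
print(y.shape)  # (5, 8)
```

Changing the last token leaves every earlier output untouched; that is the “causal” part. The efficiency claim is about how this arithmetic is scheduled, not about different math.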

What’s my take? Another open source tool will lead to applications built on top of the Zuckbook’s approach.

Now developers and users will have to decide: Can the LLaMA bite? Does Facebook have its wizardly head in the Azure clouds? Will the Sages of Amazon take note?

Tough questions. At first glance, llamas have other means of defending themselves. Teeth may not be needed. Yes, that’s snarky.

Stephen E Arnold, February 28, 2023

Worthless Data Work: Sorry, No Sympathy from Me

February 27, 2023

I read a personal essay about “data work.” The title is interesting: “Most Data Work Seems Fundamentally Worthless.” I am not sure of the age of the essayist, but the pain is evident in the word choice; for example: Flavor of despair (yes, synesthesia in a modern technology awakening write up!), hopeless passivity (yes, a digital Sisyphus!), essentially fraudulent (shades of Bernie Madoff!), fire myself (okay, self loathing and an inner destructive voice), and much, much more.

For me, though, the point is not the author. The big idea is that when it comes to data, most people want a chart and don’t want to fool around with numbers, statistical procedures, data validation, and the context of the how, where, and what of the collection process.

Let’s go to the write up:

How on earth could we have what seemed to be an entire industry of people who all knew their jobs were pointless?

Like Elizabeth Barrett Browning, the essayist enumerates the wrongs of data analytics as a vaudeville act:

- Talking about data is not “doing” data

- Garbage in, garbage out

- No clue about the reason for an analysis

- Making marketing and others angry

- Unethical colleagues wallowing in easy money

What’s ahead? I liked these statements, which are similar to what a digital Walt Whitman via ChatGPT might say:

I’ve punched this all out over one evening, and I’m still figuring things out myself, but here’s what I’ve got so far… that’s what feels right to me – those of us who are despairing, we’re chasing quality and meaning, and we can’t do it while we’re taking orders from people with the wrong vision, the wrong incentives, at dysfunctional organizations, and with data that makes our tasks fundamentally impossible in the first place. Quality takes time, and right now, it definitely feels like there isn’t much of a place for that in the workplace.

Imagine. The data, and working with them, have an inherent negative impact. We live in a data-driven world. Is that why many processes are dysfunctional? Hey, Sisyphus, what are the metrics on your progress with the rock?

Stephen E Arnold, February 27, 2023

Is the UK Stupid? Well, Maybe, But Government Officials Have Identified Some Targets

February 27, 2023

I live in good, old Kentucky, rural Kentucky, according to my deceased father-in-law. I am not an Anglophile. The country kicked my ancestors out in 1575 for not going with the flow. Nevertheless, I am reluctant to slap “even more stupid” on ideas generated by those who draft regulations. A number of experts get involved. Data are collected. Opinions are gathered from government sources and others. The result is a proposal to address a problem.

The write up “UK Proposes Even More Stupid Ideas for Directly Regulating the Internet, Service Providers” makes clear that the government has not been particularly successful with its most recent ideas for updating the UK’s 1990 Computer Misuse Act. The reasons offered are good; for example, reducing cyber crime and conducting investigations. The downside of the ideas is that governments make mistakes. Governmental powers creep outward over time; that is, government becomes more invasive.

The article highlights the changes proposed by the people drafting the modifications:

- Seize domains and Internet Protocol addresses

- Use of contractors for this process

- Restrict algorithm-manufactured domain names

- Ability to go after the registrar and the entity registering the domain name

- Making these capabilities available to other government entities

- A court review

- Mandatory data retention

- Redefining copying data as theft

- Expanded investigatory activities
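Restricting “algorithm-manufactured” domain names implies someone has to spot them first. A minimal sketch of one crude heuristic sometimes used as a starting point: measure the character entropy of the leftmost domain label, since machine-generated names tend to look random. The function names and the 3.5-bit threshold are hypothetical, chosen for illustration; real classifiers use many more signals.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Bits per character of the character distribution in text."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_algorithm_generated(domain: str, threshold: float = 3.5) -> bool:
    """Crude flag: high character entropy in the leftmost label.

    The default threshold is a hypothetical value for illustration.
    """
    label = domain.lower().split(".")[0]
    return shannon_entropy(label) > threshold


for name in ("google.com", "xq7kz9vbt2mw4pl.com"):
    label = name.split(".")[0]
    print(name, round(shannon_entropy(label), 2), looks_algorithm_generated(name))
```

A short random label cannot reach a high entropy score, and a long, oddly spelled brand name can. Writing the rule is easy; enforcing it without collateral damage is not.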

I am not a lawyer, but these proposals are troubling.

I want to point out that whoever drafted the proposal is like a tracking dog with an okay nose. Based on our research for an upcoming lecture to some US government officials, it is clear that domain name registries warrant additional scrutiny. We have identified certain ISPs as active enablers of bad actors because there is no effective oversight of these commercial firms, non-governmental organizations, or non-profit “do good” entities. We have identified transnational telecommunications and service providers who turn a blind eye to the actions of other enterprises in the “chain” which enables Internet access.

The UK proposal seems interesting and a launch point for discussion. The tracking dog has focused attention on one of the “shadow” activities enabled by lax regulators. Hopefully more scrutiny will be directed at the complicated, essentially Wild West territory populated by enablers of criminal activity like human trafficking, weapons sales, contraband and controlled substance marketplaces, domain name fraud, malware distribution, and similar activities.

At least a tracking dog is heading along what might be an interesting path to explore.

Stephen E Arnold, February 27, 2023

MBAs Rejoice: Traditional Forecasting Methods Have to Be Reinvented

February 27, 2023

The excitement among the blue chip consultants will be building in the next few months. The Financial Times (the pink newspaper) has announced “CEOs Forced to Ditch Decades of Forecasting Habits.” But what to use instead? The answer will be crafted by McKinsey, Bain, Booz Allen, et al. Even the azure chip outfits will get on the money train. Imagine: All those people who do budgets have to find a new way. Plugging numbers into Excel and dragging the little square will no longer be enough.

The article reports:

auditing firms worry that the forecasts their corporate clients submit to them for sign-off are impossible to assess.

Uncertainty and risk: These are two concepts known to give some of those in responsible positions indigestion. The article states:

It is not just the traditional variables of financial modeling such as inflation and consumer spending that have become harder to predict. The past few years have also provided some unexpected lessons on how business and society cope with shocks and uncertainty.

Several observations:

- Crafting “different” or “novel” forecasting methods will accelerate the use of smart software in blue chip consulting firms. By definition, MBAs are out of ideas which work in the new reality.

- Senior managers will be making decisions in an environment where the consequences of those decisions create faster turnover in the managerial ranks, as uncertainty morphs into bad decisions for which “someone” must be held accountable.

- Predictive models may replace informed decisions based on experience.

Net net: Heisenberg uncertainty principle accounting marks a new era in budget forecasting and job security.

Stephen E Arnold, February 27, 2023

How about This Intelligence Blindspot: Poisoned Data for Smart Software

February 23, 2023

One of the authors is a Googler. I think this is important because the Google is into synthetic data; that is, machine generated information for training large language models or what I cynically refer to as “smart software.”

The article / maybe reproducible research is “Poisoning Web-Scale Training Datasets Is Practical.” Nine authors, four of whom are Googlers, have concluded that a bad actor, government, rich outfit, or crafty students in Computer Science 301 can inject information into content destined to be used for training. How can this be accomplished? The answer is by humans, by ChatGPT outputs from an engineered query, or by a combination. Why would someone want to “poison” Web accessible or thinly veiled commercial datasets? Gee, I don’t know. Oh, wait, how about controlling information and the framing of issues? Nah, who would want to do that?

The paper’s authors (more than one-third of them bringing that Google goodness) conclude with… No, wait. There are no conclusions. Also, there are no end notes. What there is: a road map explaining the mechanism for poisoning.

One key point for me is the question, “How is poisoning related to the use of synthetic data?”

My hunch is that synthetic data are easier to manipulate than publicly accessible data, which require jumping through hoops to poison. That’s time and resource intensive. The synthetic data angle also makes it more difficult to identify manipulations in the generation of a synthetic data set, which could then be mingled with “live” or allegedly real data.

Net net: Open source information and intelligence may have a blindspot because it is not easy to determine what’s right, accurate, appropriate, correct, or factual. Are there implications for smart machine analysis of digital information? Yep. In my opinion, already flawed systems will be less reliable, and the users may not know why.

Stephen E Arnold, February 23, 2023

A Challenge for Intelware: Outputs Based on Baloney

February 23, 2023

I read a thought-troubling write up “Chat GPT: Writing Could Be on the Wall for Telling Human and AI Apart.” The main idea is:

historians will struggle to tell which texts were written by humans and which by artificial intelligence unless a “digital watermark” is added to all computer-generated material…

I noted this passage:

Last month researchers at the University of Maryland in the US said it was possible to “embed signals into generated text that are invisible to humans but algorithmically detectable” by identifying certain patterns of word fragments.
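The Maryland idea can be illustrated in a few lines. A minimal sketch, assuming a simplified version of the “green list” scheme: a secret key plus the previous token decides whether each next token counts as “green”; a watermarking generator quietly prefers green tokens; a detector counts green tokens and computes a z-score. Unmarked text should land near zero; marked text lands far above a cutoff such as 4. The hash-based `is_green`, the 25 percent green fraction, the key, and the toy generator are hypothetical stand-ins, not the researchers' code.

```python
import hashlib
import math
import random

GAMMA = 0.25  # assumed fraction of tokens that count as "green"
KEY = "hypothetical-secret-key"  # shared by generator and detector


def is_green(prev_token: str, token: str) -> bool:
    """Simplified green-list test: hash the key, previous token, and token.

    Stand-in for using the previous token to seed a vocabulary partition;
    roughly GAMMA of all (previous, next) pairs come out green.
    """
    digest = hashlib.sha256(f"{KEY}|{prev_token}|{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def watermark_z_score(tokens: list[str]) -> float:
    """Standardized distance of the green count from the unmarked expectation."""
    pairs = list(zip(tokens, tokens[1:]))
    t = len(pairs)
    if t == 0:
        return 0.0
    green = sum(is_green(prev, tok) for prev, tok in pairs)
    return (green - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


def toy_text(n: int, vocab: list[str], watermark: bool, seed: int = 0) -> list[str]:
    """Toy generator: random tokens, preferring green ones when watermarking."""
    rng = random.Random(seed)
    tokens = [rng.choice(vocab)]
    for _ in range(n - 1):
        candidates = rng.sample(vocab, len(vocab))
        if watermark:
            # Take the first green candidate; fall back if none is green.
            nxt = next((c for c in candidates if is_green(tokens[-1], c)), candidates[0])
        else:
            nxt = candidates[0]
        tokens.append(nxt)
    return tokens


vocab = [f"w{i}" for i in range(50)]
print(round(watermark_z_score(toy_text(200, vocab, watermark=True)), 1))
print(round(watermark_z_score(toy_text(200, vocab, watermark=False)), 1))
```

Note the catch: the detector only works if it knows the key, and rewording the text breaks up the green pairs.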

Great idea except:

- The US smart software is not the only code a bad actor could use. Germany’s wizards are moving forward with Aleph Alpha

- There is an assumption that “old” digital information will be available. Digital ephemerality applies to everything from information on government Web sites which get minimal traffic to the “old” data that cost-cutting Web indexing outfits see as a drain on profits, not a boon to historians

- Digital watermarks are likely to be like “bulletproof” hosting and advanced cyber security systems: The bullets get through and the cyber security systems are insecure.

What about intelware for law enforcement and intelligence professionals, crime analysts, and as-yet-unreplaced paralegals trying to make sense of available information? GIGO: Garbage in, garbage out.

Stephen E Arnold, February 23, 2023

What Happens When Misinformation Is Sucked Up by Smart Software? Maybe Nothing?

February 22, 2023

I noted an article called “New Research Finds Rampant Misinformation Spreading on WhatsApp within Diasporic Communities.” The source is the Daily Targum. I mention this because the Daily Targum is the Rutgers University student newspaper. The article provides some information about a study of misinformation on that lovable Facebook property WhatsApp.

Several points in the article caught my attention:

- Misinformation on WhatsApp caused people to be killed; Twitter did its part too

- There is an absence of fact checking

- There are no controls to stop the spread of misinformation

What is interesting about studies conducted by prestigious universities is that often the findings are neither novel nor surprising. In fact, nothing about social media companies’ reluctance to spend money or adopt ethical methods is new.

What are the consequences? Nothing much: Abusive behavior, social disruption, and, oh, one more thing, deaths.

Stephen E Arnold, February 22, 2023

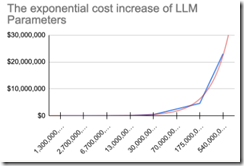

A Different View of Smart Software with a Killer Cost Graph

February 22, 2023

I read “The AI Crowd is Mad.” I don’t agree. I think the “in” word is hallucinatory. Several write ups have described the activities of Google and Microsoft as an “arms race.” I am not sure about that characterization either.

The write up includes a statement with which I agree; to wit:

… when listening to podcasters discussing the technology’s potential, a stereotypical assessment is that these models already have a pretty good accuracy, but that with (1) more training, (2) web-browsing support and (3) the capabilities to reference sources, their accuracy problem can be fixed entirely.

In my 50-plus-year career in online information and systems, some problems keep getting kicked down the road. New technology appears and stubs its toe on one of those cans. Rusted cans can slice the careless sprinter on the Information Superhighway and kill the speedy wizard via the tough-to-see Clostridium tetani bacterium. The surface problem is one thing; the problem which chugs unseen below the surface may be a different beastie. Search and retrieval is one of those “problems” which has been difficult to solve. Just ask someone who frittered away beaucoup bucks improving search. Please, don’t confuse monetization with effective precision and recall.
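For readers who have not spent decades with these two measures, a minimal sketch of the textbook definitions. The document IDs are hypothetical; the example pads a result list with two paid placements to show how monetized slots drag precision down without improving recall.

```python
def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Precision: share of retrieved items that are relevant.
    Recall: share of relevant items that were retrieved."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical result list: two relevant documents plus two sponsored items.
retrieved = {"doc1", "doc2", "ad1", "ad2"}
relevant = {"doc1", "doc2", "doc3", "doc4"}
print(precision_recall(retrieved, relevant))  # (0.5, 0.5)
```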

The write up also includes this statement which resonated with me:

if we can’t trust the model’s outcomes, and we paste-in a to-be-summarized text that we haven’t read, then how can we possibly trust the summary without reading the to-be-summarized text?

Trust comes up frequently when discussing smart software. In fact, the Sundar and Prabhakar script often includes the word “trust.” My response has been and will be “Google = trust? Sure.” I am not willing to trust Microsoft’s Sydney or whatever it is calling itself today. After one update, we could not print. Yep, skill in marketing does not equal reliable software.

But the highlight of the write up is this chart. For the purpose of this blog post, let’s assume the numbers are close enough for horseshoes:

Source: https://proofinprogress.com/posts/2023-02-01/the-ai-crowd-is-mad.html

What the data suggest to me is that training and retraining models is expensive. Google figured this out. The company wants to train using synthetic data. I suppose it will be better than the content generated by organizations purposely pumping misinformation into the public text pool. Many companies have discovered that models, not just queries, can be engineered to deliver results which the super software wizards did not think about too much. (Remember dying from that cut toe on the Information Superhighway?)

The cited essay includes another wonderful question. Here it is:

But why aren’t Siri and Watson getting smarter?

May I suggest some reasons, based on our dabbling with AI-infused machine indexing of business information in 1981:

- Language is slippery, more slippery than an eel in Vedius Pollio’s eel pond. Thus, subject matter experts have to fiddle to make sure the words in content and the words in a query sort of overlap or overlap enough for the searcher to locate the needed information.

- Narrow domains such as scientific, technical, and medical text are easier to index via software. Broad domains like general content are more difficult for the software. A static model and the new content “drift” apart. This is okay as long as the two are steered back together. Who has the time, money, or inclination to admit that software intelligence and human intelligence are not yet the same except in PowerPoint pitch decks and academic papers with mostly non-reproducible results? But who wants narrow domains? Go broad and big or go home.

- The basic math and procedures may be old. Autonomy’s Bayesian method was built on work by a stats-mad guy in the 18th century. What needs to be fiddled with are [a] sequences of procedures, [b] thresholds for a decision point, and [c] software add-ons that work around problems no one knew existed until some smarty pants posts a flub on Twitter, among other issues.
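Autonomy’s technology was widely described as resting on Bayesian inference, the 18th-century work of the Reverend Thomas Bayes. Item [b], thresholds, is easy to see in a minimal sketch: Bayes’ rule turns a term’s likelihoods into a probability that a document is about a topic, and a hand-set threshold turns that probability into an indexing decision. The function names, the probabilities, and the thresholds are hypothetical.

```python
def posterior(prior: float, p_term_given_topic: float, p_term_given_other: float) -> float:
    """P(topic | term appears) via Bayes' rule."""
    evidence = p_term_given_topic * prior + p_term_given_other * (1 - prior)
    return p_term_given_topic * prior / evidence


def assign_topic(prior, p_term_given_topic, p_term_given_other, threshold=0.5):
    """Index the document under the topic only if the posterior clears the threshold."""
    return posterior(prior, p_term_given_topic, p_term_given_other) >= threshold


# Hypothetical numbers: 10% of documents are on the topic; the term appears
# in 80% of on-topic documents and in 10% of the rest.
print(round(posterior(0.10, 0.80, 0.10), 3))  # 0.471
print(assign_topic(0.10, 0.80, 0.10))         # False at threshold 0.5
print(assign_topic(0.10, 0.80, 0.10, 0.4))    # True at threshold 0.4
```

Nudge the threshold from 0.5 to 0.4 and the same evidence produces the opposite decision. That is the kind of fiddling the old math still requires.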

Net net: We are in the midst of a marketing war. The AI part of the dust up is significant, but with the application of flawed smart software to the generation of content which may be incorrect, another challenge awaits: The Edsel and New Coke of artificial intelligence.

Stephen E Arnold, February 22, 2023

Stop ChatGPT Now Because We Are Google!

February 21, 2023

Another week, another jaunt to a foreign country to sound the alarm which says to me: “Stop ChatGPT now! We mean it. We are the Google.”

I wonder if there is a vaudeville poster advertising the show that is currently playing in Europe and the US? What would that poster look like? Would a smart software system generate a Yugo-sized billboard like this:

In my opinion, the message and getting it broadcast via an estimable publication like the Metro.co.uk tabloid-like Web site is high comedy. No, the reality of the Metro article is different. Under the headline “Google Issues Urgent Warning to the Millions of People Using ChatGPT,” the article reports:

A boss at Google has hit out at ChatGPT for giving ‘convincing but completely fictitious’ answers.

And who is the boss? None other than Prabhakar, the other half of the Sundar and Prabhakar management act. What’s ChatGPT doing wrong? Getting too much publicity? Lousy search results have been the gold standard since relevance was kicked to the curb. Advertising is the best way to deliver what the user wants because users don’t know what they want. Now we see the Google: Red alert, reactionary, and high school science club antics.

Yep.

And the outfit which touted that it solved protein folding and achieved quantum supremacy cares about technology and people. The write up includes this line about Google’s concern:

This is the only way we will be able to keep the trust of the public.

I noted this in a LinkedIn post responding to a high-class consultant’s comment about smart software. I replied, “Google trust?”

Several observations:

- Google, like Microsoft, cares about money and market position. The trust thing muddies the waters in my opinion. Microsoft and security? Google and alleged monopoly advertising practices?

- Google is pitching the hallucination angle pretty hard. Does Google mention Forrest Timothy Hayes, the Google executive who died of a drug overdose in the company of a non-technical Google contractor? See this story. Who at Google is hallucinating?

- Google does not know how to respond to Microsoft’s marketing play. Google’s response is to travel outside the US explaining that the sky is falling. What’s falling, I surmise, is Google’s marketing effectiveness.

Net net: My conclusion about Google’s anti-Microsoft ChatGPT marketing play is, “Is this another comedy act being tested on the road before opening in New York City?” This act may knock George Burns and Gracie Allen from top billing. Let’s ask Bard.

Stephen E Arnold, February 21, 2023