Trust in Google and Its Smart Software: What about the Humans at Google?

May 26, 2023

The buzz about Google’s injection of its smart software into its services is crowding out other, more interesting sounds. For example, navigate to “Texas Reaches $8 Million Settlement With Google Over Blatantly False Pixel Ads: Google Settled a Lawsuit Filed by AG Ken Paxton for Alleged False Advertisements for its Google Pixel 4 Smartphone.”

The write up reports:

A press release said Google was confronted with information that it had violated Texas laws against false advertising, but instead of taking steps to correct the issue, the release said, “Google continued its deceptive advertising, prioritizing profits over truthfulness.”

Google is pushing forward with its new mobile devices.

Let’s consider Google’s seven wonders of its software. You can find these at this link or summarized in my article “The Seven Wonders of the Google AI World.”

Let’s consider principle one: Be socially beneficial.

I am wondering how the allegedly deceptive advertising encourages me to trust Google.

Now consider principle four: Be accountable to people.

My recollection is that Google works overtime to avoid being held accountable. The company relies upon its lawyers, its lobbyists, and its marketing to float above the annoyances of nation states. In fact, when greeted with substantive actions by the European Union, Google stalls and does not make available its latest and greatest services. The only accountability seems to be a legal action despite Google’s determined lawyerly push back. Avoiding accountability requires intermediaries because Google’s senior executives are busy working on principles.

Kindergarten behavior.

MidJourney captures the thrill of two young children squabbling over a piggy bank. I wonder if MidJourney knows what is going on in the newly merged Google smart software units.

Google approaches some problems like kids squabbling over a piggy bank.

Net net: The Texas fine makes clear that some do not trust Google. The “principles” are marketing hoo hah. But everyone loves Google, including me, my French bulldog, and billions of users worldwide. Everyone will want a new $1800 folding Pixel, which is just great based on the marketing information I have seen. It has so many features and works wonders.

Stephen E Arnold, May 26, 2023

The Return: IBM Watsonx!

May 26, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-5.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

It is no surprise IBM’s entry into the recent generative AI hubbub is a version of Watson, the company’s longtime algorithmic representative. Techspot reports, “IBM Unleashes New AI Strategy with ‘watsonx’.” The new suite of tools was announced at the company’s recent Think conference. Note “watsonx” is not interchangeable with “Watson.” The older name with the capital letter and no trendy “x” is to be used for tools aimed at individuals rather than for company-wide software. That won’t be confusing at all. Writer Bob O’Donnell describes the three components of watsonx:

“Watsonx.ai is the core AI toolset through which companies can build, train, validate and deploy foundation models. Notably, companies can use it to create original models or customize existing foundation models. Watsonx.data, is a datastore optimized for AI workloads that’s used to gather, organize, clean and feed data sources that go into those models. Finally, watsonx.governance is a tool for tracking the process of the model’s creation, providing an auditable record of all the data going into the model, how it’s created and more. Another part of IBM’s announcement was the debut of several of its own foundation models that can be used with the watsonx toolset or on their own. Not unlike others, IBM is initially unveiling a LLM-based offering for text-based applications, as well as a code generating and reviewing tool. In addition, the company previewed that it intends to create some additional industry and application-specific models, including ones for geospatial, chemistry, and IT operations applications among others. Critically, IBM said that companies can run these models in the cloud as a service, in a customer’s own data center, or in a hybrid model that leverages both. This is an interesting differentiation because, at the moment, most model providers are not yet letting organizations run their models on premises.”

Just to make things confusing, er, offer more options, each of these three applications will have three different model architectures. On top of that, each of these models will be available with varying numbers of parameters. The idea is not, as it might seem, to give companies decision paralysis but to provide flexibility in cost-performance tradeoffs and computing requirements. O’Donnell notes watsonx can also be used with open-source models, which is helpful since many organizations currently lack staff able to build their own models.

The article notes that, despite the announcement’s strategic timing, it is clear watsonx marks a change in IBM’s approach to software that has been in the works for years: generative AI will be front and center for the foreseeable future. Kinda like society as a whole, apparently.

Cynthia Murrell, May 26, 2023

OpenAI Clarifies What “Regulate” Means to the Sillycon Valley Crowd

May 25, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-10.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Sam AI-man begged (at least he did not get on his hands and knees) the US Congress to regulate artificial intelligence (whatever that means). I just read “Sam Altman Says OpenAI Will Leave the EU if There’s Any Real AI Regulation.” I know I am old. I know I lose my car keys a couple of times every 24 hours. I do recall Mr. AI-man wanted regulation.

However, the write up reports:

Though unlike in the AI-friendly U.S., Altman has threatened to take his big tech toys to the other end of the sandbox if they’re not willing to play by his rules.

The vibes of the Zuckster zip through my mind. Facebook just chugs along, pays fines, and mostly ignores regulators. China seems to be an exception for Facebook, the Google, and some companies I don’t know about. China had mobile death vans. A person accused and convicted would be executed in the mobile death van as soon as it arrived at the location where the convicted bad actor was. Re-education camps and mobile death vans help explain why some US companies choose to exit China. Lawyers who must arrive before their client has been processed are not much good in some of China’s efficient state machines. Fines, however, are okay. Write a check and move on.

Mr. AI-man is making clear that the word “regulate” means one thing to Mr. AI-man and another thing to those who are not getting with the smart software program. The write up states:

Altman said he didn’t want any regulation that restricted users’ access to the tech. He told his London audience he didn’t want anything that could harm smaller companies or the open source AI movement (as a reminder, OpenAI is decidedly more closed off as a company than it’s ever been, citing “competition”). That’s not to mention any new regulation would inherently benefit OpenAI, so when things inevitably go wrong it can point to the law to say they were doing everything they needed to do.

I think “regulate” means what the declining US fast food outfit that told me “have it your way” meant. The burger joint put in a paper bag whatever the professionals behind the counter wanted to deliver. Mr. AI-man doesn’t want any “behind the counter” decision making by a regulatory cafeteria serving up its own version of lunch.

Mr. AI-man wants “regulate” to mean his way.

In the US, it seems, that is exactly what big tech and promising venture funded outfits are going to get; that is, whatever each company wants. Competition is good. See how well OpenAI and Microsoft are competing with Facebook and Google. Regulate appears to mean “let us do what we want to do.”

I am probably wrong. OpenAI, Google, and other leaders in smart software are at this very moment consuming the Harvard Library of books to read in search of information about ethical behavior. The “moral” learning comes later.

Net net: Now I understand the new denotation of “regulate.” Governments work for US high-tech firms. Thus, I think the French term laissez-faire nails it.

Stephen E Arnold, May 25, 2023

Need a Guide to Destroying Social Cohesion: Chinese Academics Have One for You

May 25, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

The TikTok service is one that has kicked the Google in its sensitive bits. The “algorithm” befuddles both European and US “me too” innovators. The appeal of short, crunchy videos has caused restaurant chefs to craft food for TikTok influencers who record meals. Chefs!

What other magical powers can a service like TikTok have? That’s a good question, and it is one that the Chinese academics have answered. Navigate to “Weak Ties Strengthen Anger Contagion in Social Media.” The main idea of the research is to validate a simple assertion: Can social media (think TikTok, for example) take a flame thrower to social ties? The answer is, “Sure can.” Will a social structure catch fire and collapse? “Sure can.”

A frail structure is set on fire by a stream of social media consumed by a teen working in his parents’ garden shed. MidJourney would not accept the query “a person using a laptop setting his friends’ homes on fire.” Thanks, Aunt MidJourney.

The write up states:

Increasing evidence suggests that, similar to face-to-face communications, human emotions also spread in online social media.

Okay, a post or TikTok video sparks emotion.

So what?

…we find that anger travels easily along weaker ties than joy, meaning that it can infiltrate different communities and break free of local traps because strangers share such content more often. Through a simple diffusion model, we reveal that weaker ties speed up anger by applying both propagation velocity and coverage metrics.
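The paper’s own diffusion model is not reproduced here. As a rough sketch of the general idea only, the Python below runs a generic independent cascade on a toy network: tightly knit communities (strong ties) joined by a few cross-community links (weak ties). The network shape, the transmission probabilities, and the “reached by round 3” speed proxy are all assumptions made for illustration, not the authors’ parameters or metrics. The only difference between “anger” and “joy” in the sketch is how readily each crosses a weak tie.

```python
import random
from collections import defaultdict

def build_graph(n_communities=5, size=10):
    """Toy network: each community is a clique of strong ties; weak ties link
    node i of one community to node i of the next (communities form a ring)."""
    edges = defaultdict(list)  # node -> list of (neighbour, tie_type)
    for c in range(n_communities):
        for i in range(size):
            a = c * size + i
            for j in range(i + 1, size):
                b = c * size + j
                edges[a].append((b, "strong"))
                edges[b].append((a, "strong"))
            b = ((c + 1) % n_communities) * size + i
            edges[a].append((b, "weak"))
            edges[b].append((a, "weak"))
    return edges, n_communities * size

def cascade(edges, n_nodes, p, seed_node, rng, horizon=3):
    """Independent cascade: each newly activated node gets one chance to pass the
    content to each inactive neighbour, with probability p[tie_type].
    Returns (final coverage, coverage after `horizon` rounds)."""
    active = {seed_node}
    frontier = [seed_node]
    early = None
    rounds = 0
    while frontier:
        rounds += 1
        new = []
        for u in frontier:
            for v, tie in edges[u]:
                if v not in active and rng.random() < p[tie]:
                    active.add(v)
                    new.append(v)
        frontier = new
        if rounds == horizon:
            early = len(active)
    if early is None:  # cascade died out before the horizon
        early = len(active)
    return len(active) / n_nodes, early / n_nodes

def simulate(p, runs=500, seed=42):
    """Average coverage and early coverage over many random starting nodes."""
    edges, n = build_graph()
    rng = random.Random(seed)
    cov = early = 0.0
    for _ in range(runs):
        c, e = cascade(edges, n, p, seed_node=rng.randrange(n), rng=rng)
        cov += c
        early += e
    return cov / runs, early / runs

# Hypothetical probabilities: identical on strong ties; anger is assumed to
# cross weak ties far more readily than joy (not the paper's numbers).
anger = simulate({"strong": 0.15, "weak": 0.4})
joy = simulate({"strong": 0.15, "weak": 0.05})
print(f"anger: coverage={anger[0]:.2f}, reached by round 3={anger[1]:.2f}")
print(f"joy:   coverage={joy[0]:.2f}, reached by round 3={joy[1]:.2f}")
```

With these made-up numbers, the content that crosses weak ties more easily should reach more of the network and reach it sooner, which matches the pattern the authors describe. The sketch illustrates the mechanism; it is not evidence for the finding.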

The authors note:

…we offer solid evidence that anger spreads faster and wider than joy in social media because it disseminates preferentially through weak ties. Our findings shed light on both personal anger management and in understanding collective behavior.

I wonder if any psychological operations professionals in China or another country with a desire to reduce the efficacy of the American democratic “experiment” will find the research interesting?

Stephen E Arnold, May 25, 2023

Top AI Tools for Academic Researchers

May 25, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-4.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Like it or not, AI is reshaping academia. Setting aside the thorny issue of cheating, we see the technology is also changing how academic research is performed. In fact, there are already many AI options for researchers to pick from. Euronews narrows down the choices in, “The Best AI Tools to Power your Academic Research.” Writer Camille Bello shares the top five options as chosen by Mushtaq Bilal, a researcher at the University of Southern Denmark. She introduces the list with an important caveat: one must approach these tools carefully for accurate results. She writes:

“[Bilal] believes that if used thoughtfully, AI language models could help democratise education and even give way to more knowledge. Many experts have pointed out that the accuracy and quality of the output produced by language models such as ChatGPT are not trustworthy. The generated text can sometimes be biased, limited or inaccurate. But Bilal says that understanding those limitations, paired with the right approach, can make language models ‘do a lot of quality labour for you,’ notably for academia.

Incremental prompting to create a ‘structure’

To create an academia-worthy structure, Bilal says it is fundamental to master incremental prompting, a technique traditionally used in behavioural therapy and special education. It involves breaking down complex tasks into smaller, more manageable steps and providing prompts or cues to help the individual complete each one successfully. The prompts then gradually become more complicated. In behavioural therapy, incremental prompting allows individuals to build their sense of confidence. In language models, it allows for ‘way more sophisticated answers’. In a Twitter thread, Bilal showed how he managed to get ChatGPT to provide a ‘brilliant outline’ for a journal article using incremental prompting.”
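Bilal’s actual prompts are in his Twitter thread and are not reproduced here. As a minimal sketch of the loop the quote describes, the Python below sends a model a series of steps, one at a time, and each prompt carries the earlier steps and answers so later requests can build on them. The `ask` callable stands in for whatever chat model is used; it, the function name, and the example steps are hypothetical.

```python
from typing import Callable, List

def incremental_prompting(ask: Callable[[str], str], topic: str,
                          steps: List[str]) -> List[str]:
    """Send steps one at a time; each prompt includes the earlier steps and answers."""
    transcript = ""  # running record of previous steps and answers
    answers = []
    for step in steps:
        prompt = f"{transcript}Topic: {topic}\nNext step: {step}"
        answer = ask(prompt)
        answers.append(answer)
        transcript += f"Previous step: {step}\nAnswer: {answer}\n\n"
    return answers

# Hypothetical steps, moving from a simple request to more demanding ones.
steps = [
    "Suggest one research question on this topic.",
    "Propose a three-part outline for a journal article on that question.",
    "Expand each part of the outline into subsections.",
]

# Stand-in model for demonstration: reports how many earlier steps it was shown.
fake_model = lambda prompt: f"answer {prompt.count('Previous step:') + 1}"
print(incremental_prompting(fake_model, "AI tools in academic research", steps))
# prints ['answer 1', 'answer 2', 'answer 3']
```

Carrying the transcript forward is one simple way to let each prompt build on the last; with a real model, the transcript would grow and might need trimming.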

See the write-up for this example of incremental prompting as well as a description of each entry on the list. The tools include: Consensus, Elicit.org, Scite.ai, Research Rabbit, and ChatPDF. The write-up concludes with a quote from Bill Gates, who asserts AI is destined to be as fundamental “as the creation of the microprocessor, the personal computer, the Internet, and the mobile phone.” Researchers may do well to embrace the technology sooner rather than later.

Cynthia Murrell, May 25, 2023

Google AI Moves Slowly to Google Advertising. Soon, That Is. Soon.

May 24, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-9.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “Google Search Ads Will Soon Automatically Adapt to Queries Using Generative AI.” The idea of using smart software to sell ads is one that seems obvious to me. What surprised me about this article in TechCrunch is the use of the future tense and the indefinite “soon.” Sundar Pichai’s PR write up in the Financial Times emphasized that Google has been doing smart software for a looooong time.

How could a company so dependent on ads be in the “will” and “soon” vaporware announcement business?

I noted this passage in the write up:

Google is going to start using generative AI to boost Search ads’ relevance based on the context of a query…

But why so slow in releasing obvious applications of generative software?

I don’t have answers to this quite Googley question, probably asked by those engaged in the internal discussions about who’s on first in the Google Brain versus DeepMind softball game, but I have some observations:

- Google had useful technology but lacked the administrative and managerial expertise to get something out the door and into the hands of paying customers

- Google’s management processes simply do not work when the company is faced with strategic decisions. This signals the end of the go go mentality of the company’s Backrub to Google transformation. And it begs the question, “What else has the company lost over the last 25 years?”

- Google’s engineers cannot move from Ivory Tower quantum supremacy mental postures to common sense applications of technology to specific use cases.

In short, after 25 years Googzilla strikes me as arthritic when it comes to hot technology and a little more nimble when it tries to do PR. Except for Paris, of course.

Stephen E Arnold, May 24, 2023

Sam AI-man Begs for Regulation; China Takes Action for Structured Data LLM Integration

May 24, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Smart software is capturing attention from a number of countries’ researchers. The US smart software scene is crowded like productions on high school auditoria stages. Showing recently were OpenAI’s really sincere plea for regulation, oodles of new smart software applications and plug ins for browsers, and Microsoft’s assembly line of AI everywhere in Office 365. The venture capital contingent is chanting, “Who wants cash? Who wants cash?” Plus the Silicon Valley media are beside themselves with in-crowd interviews with the big Googler and breathless descriptions of how college professors fumble forward with students who may or may not let ChatGPT do that dumb essay.

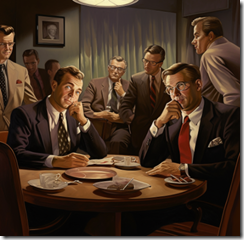

US Silicon Valley deciders in action in public discuss the need for US companies to move slowly, carefully, judiciously when deploying AI. In private, these folks want to go as quickly as possible, lock up markets, and rake in the dough. China skips the pretending and just goes forward with certain guidelines to avoid a fun visit to a special training facility. The illustration was created by MidJourney, a service which I assume wants to be regulated at least sometimes.

In the midst of this vaudeville production, I noted “Researchers from China Propose StructGPT to Improve the Zero-Shot Reasoning Ability of LLMs over Structured Data.” On the surface, the write up seems fairly tame by Silicon Valley standards. In a nutshell, whiz kids from a university I never heard of figure out how to reformat data in a database table and make those data available to a ChatGPT type system. The idea is that ChatGPT has some useful qualities. Being highly accurate is not a core competency, however.
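StructGPT’s own interfaces are in the researchers’ GitHub release and are not reproduced here. The Python below is only a minimal sketch of the basic move the write up describes: pick the table columns a question seems to mention, then turn the rows into plain sentences a ChatGPT-type model can read in its prompt. The column-matching rule, the sentence template, the function names, and the toy table are assumptions for illustration.

```python
from typing import Dict, List, Sequence

def select_columns(question: str, columns: Sequence[str]) -> List[str]:
    """Naive relevance filter: keep columns whose names appear in the question."""
    q = question.lower()
    picked = [c for c in columns if c.lower() in q]
    return picked or list(columns)  # fall back to every column

def linearize(name: str, rows: List[Dict[str, object]], columns: Sequence[str]) -> str:
    """Render the chosen columns of each row as a short sentence."""
    lines = [f"Table: {name}"]
    for i, row in enumerate(rows, start=1):
        cells = "; ".join(f"{c} is {row[c]}" for c in columns)
        lines.append(f"Row {i}: {cells}.")
    return "\n".join(lines)

def build_prompt(question: str, name: str, rows: List[Dict[str, object]]) -> str:
    """Assemble the text prompt: instructions, linearized table, then the question."""
    cols = select_columns(question, list(rows[0]))
    return (
        "Answer using only the table below.\n\n"
        f"{linearize(name, rows, cols)}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Toy table with made-up values.
orders = [
    {"order_id": 1, "customer": "A", "amount": 30},
    {"order_id": 2, "customer": "B", "amount": 45},
]
print(build_prompt("Which customer has the largest amount?", "orders", orders))
```

The substring match stands in for a proper evidence-gathering step; a working system would need something sturdier before handing table data to a model that, as noted above, is not known for accuracy.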

The good news is that the Chinese researchers have released some of their software and provided additional information on GitHub. Hopefully American researchers can take time out from nifty plug ins, begging regulators to regulate, and banking bundles of pre-default bucks in JPMorgan accounts to have a look.

For me, the article makes clear:

- Whatever the US does, China is unlikely to trim the jib of technologies which can generate revenue, give China an advantage, and provide some new capabilities to its military.

- US smart software vendors have no choice but to go full speed ahead and damn the AI powered torpedoes from those unfriendly to the “move fast and break things” culture. What’s a regulator going to do? I know. Have a meeting.

- Smart software is many things. I perceive that what can be accomplished with what I can download today, plus maybe some fiddling with the method from Renmin University of China, the Beijing Key Laboratory of Big Data Management and Analysis Methods, and the University of Electronic Science and Technology of China, is a great big trampoline. Those jumping can do some amazing and hitherto unseen tricks.

Net net: Less talk and more action, please.

Stephen E Arnold, May 24, 2023

AI Builders and the Illusions they Promote

May 24, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-3.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Why do AI firms insist on calling algorithmic mistakes “hallucinations” instead of errors, malfunctions, or glitches? The Guardian‘s Naomi Klein believes AI advocates chose this very human and mystical term to perpetuate a fundamental myth: AI will be humanity’s salvation. And that stance, she insists, demonstrates that “AI Machines Aren’t ‘Hallucinating.’ But their Makers Are.”

It is true that, in a society built around citizens’ well-being and the Earth’s preservation, AI could help end poverty, eliminate disease, reverse climate change, and facilitate more meaningful lives. But that is not the world we live in. Instead, our systems are set up to exploit both resources and people for the benefit of the rich and powerful. AI is poised to help them do that even more efficiently than before.

The article discusses four specific hallucinations possessing AI proponents. First, the assertion AI will solve the climate crisis when it is likely to do just the opposite. Then there’s the hope AI will help politicians and bureaucrats make wiser choices, which assumes those in power base their decisions on the greater good in the first place. Which leads to hallucination number three, that we can trust tech giants “not to break the world.” Those paying attention saw that was a false hope long ago. Finally, there is the belief that AI will eliminate drudgery. Not all work, mind you, just the “boring” stuff. Some go so far as to paint a classic leftist ideal, one where humans work not to survive but to pursue our passions. That might pan out if we were living in a humanist, Star Trek-like society, Klein notes, but instead we are subjects of rapacious capitalism. Those who lose their jobs to algorithms have no societal net to catch them.

So why are the makers of AI promoting these illusions? Klein proposes:

“Here is one hypothesis: they are the powerful and enticing cover stories for what may turn out to be the largest and most consequential theft in human history. Because what we are witnessing is the wealthiest companies in history (Microsoft, Apple, Google, Meta, Amazon …) unilaterally seizing the sum total of human knowledge that exists in digital, scrapable form and walling it off inside proprietary products, many of which will take direct aim at the humans whose lifetime of labor trained the machines without giving permission or consent. This should not be legal. In the case of copyrighted material that we now know trained the models (including this newspaper), various lawsuits have been filed that will argue this was clearly illegal. Why, for instance, should a for-profit company be permitted to feed the paintings, drawings and photographs of living artists into a program like Stable Diffusion or Dall-E 2 so it can then be used to generate doppelganger versions of those very artists’ work, with the benefits flowing to everyone but the artists themselves?”

The answer, of course, is that this should not be permitted. But since innovation moves much faster than legislatures and courts, tech companies have been operating on a turbo-charged premise of seeking forgiveness instead of permission for years. (They call it “disruption,” Klein notes.) Operations like Google’s book-scanning project, Uber’s undermining the taxi industry, and Facebook’s mishandling of user data, just to name a few, got so far so fast regulators simply gave in. Now the same thing appears to be happening with generative AI and the data it feeds upon. But there is hope. A group of top experts on AI ethics specify measures regulators can take. Will they?

Cynthia Murrell, May 24, 2023

More Google PR: For an Outfit with an Interesting Past, Chattiness Is Now a Core Competency

May 23, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-8.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

How many speeches, public talks, and interviews did Sergey Brin, Larry Page, and Eric Schmidt do? To my recollection, not too many. And what about now? Larry Page is tough to find. Mr. Brin is sort of invisible. Eric Schmidt has backed off his claim that Qwant keeps him up at night. But Sundar Pichai, one half of the Sundar and Prabhakar Comedy Show, is quite visible. AI everywhere keynote speeches, essays about smart software, and now an original “he wrote it himself” essay in the weird salmon-tinted newspaper The Financial Times. Yeah, pinkish.

Smart software provided me with an illustration of a fast talker pitching the future benefits of a new product. Yep, future probabilities. Rock solid. Thank you, MidJourney.

What’s with the spotlight on the current Google big wheel? Gentle reader, the visibility is one way Google is trying to advance its agenda. Before I offer my opinion about the Alphabet Google YouTube agenda, I want to highlight three statements in “Google CEO: building AI Responsibly Is the Only Race That Really Matters.”

Statement from the Google essay #1

At Google, we’ve been bringing AI into our products and services for over a decade and making them available to our users. We care deeply about this. Yet, what matters even more is the race to build AI responsibly and make sure that as a society we get it right.

The theme is that Google has been doing smart software for a long time. Let’s not forget that the GOOG released the Transformer model as open source and sat on its Googley paws while “stuff happened” starting in 2018. Was that responsible? If so, what does Google mean when it uses the word “responsible” as it struggles to cope with the meme “Google is late to the game”? For example, Microsoft pulled off a global PR coup with its Davos’ smart software announcements. Google responded with the Paris demonstration of Bard, a hoot for many in the information retrieval killing field. That performance of the Sundar and Prabhakar Comedy Show flopped. Meanwhile, Microsoft pushed its “flavor” of AI into its enterprise software and cloud services. My experience is that for every big PR action, there is an equal or greater PR reaction. Google is trying to catch faster race cars with words, not a better, faster, and cheaper machine. The notion that Google “gets it right” means to me one thing: Maintaining quasi monopolistic control of its market and generating the ad revenue. Google has spent 25 years walking the same old Chihuahua in a dog park with younger, more agile canines. After 25 years of me too and flopping with projects like solving death, revenue is the ONLY thing that matters to stakeholders. More of the Sundar and Prabhakar routine is wearing thin.

Statement from the Google essay #2

We have many examples of putting those principles into practice…

The “principles” apply to Google AI implementation. But the word principles is an interesting one. Google is paying fines for ignoring laws and its principles. Google is under the watchful eye of regulators in the European Union due to Google’s principles. China wanted Google to change, and Google then beavered away on a China-acceptable search system until the cat was let out of the bag. Google is into equality, a nice principle, which was implemented by firing AI researchers who complained about what Google AI was enabling. Google is not the outfit I would consider the optimal source of enlightenment about principles. High tech in general and Google in particular are viewed with increasing concern by regulators in US states and assorted nation states. Why? The Googley notion of principles is not what others understand the word to denote. In fact, some might say that Google operates in an unprincipled manner. Is that why companies like Foundem and regulatory officials point out behaviors which some might find predatory, mendacious, or illegal? Principles, yes, principles.

Statement from the Google essay #3

AI presents a once-in-a-generation opportunity for the world to reach its climate goals, build sustainable growth, maintain global competitiveness and much more.

Many years ago, I was in a meeting in DC, and the Donald Rumsfeld quote about information was making the rounds. Good appointees loved to cite this Donald. Here’s the quote from 2002:

There are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns – the ones we don’t know we don’t know.

I would humbly suggest that smart software is chock full of known unknowns. But humans are not very good at predicting the future. When it comes to acting “responsibly” in the face of unknown unknowns, I dismiss those who dare to suggest that humans can predict the future in order to act in a responsible manner. Humans do not act responsibly with either predictability or reliability. My evidence is part of your mental furniture: Racism, discrimination, continuous war, criminality, prevarication, exaggeration, failure to regulate damaging technologies, ineffectual action against industrial polluters, etc. etc. etc.

I want to point out that the Google essay penned by one half of the Sundar and Prabhakar Comedy Show team could be funny if it were not a synopsis of the digital tragedy of the commons in which we live.

Stephen E Arnold, May 23, 2023

Please, World, Please, Regulate AI. Oh, Come Now, You Silly Goose

May 23, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/05/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-7.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

The ageing heart of capitalistic ethicality is beating in what some cardiologists might call arrhythmia. Beating fast and slow means that the coordinating mechanisms are out of whack. What’s the fix? Slam in an electronic gizmo for the humanoid. But what about a Silicon Valley with rhythm problems: Terminating employees, legal woes, annoying elected officials, and teen suicides? The outfits poised to make a Nile River of cash from smart software are doing the “begging” thing.

The Gen X whiz kid asks the smart software robot: “Will the losers fall for the call to regulate artificial intelligence?” The smart software robot responds, “Based on a vector and matrix analysis, there is a 75 to 90 percent probability that one or more nation states will pass laws to regulate us.” The Gen X whiz kid responds, “Great, I hate doing the begging and pleading thing.” The illustration was created by my old pal, MidJourney digital emulators.

“OpenAI Leaders Propose International Regulatory body for AI” is a good summation of the “please, regulate AI even though it is something most people don’t understand and a technology whose downstream consequences are unknown.” The write up states:

…AI isn’t going to manage itself…

We have some first hand experience with Silicon Valley wizards who [a] allow social media technology to destroy the fabric of civil order, [b] control information frames so that hidden hands can cause irrelevant ads to bedevil people looking for a Thai restaurant, [c] ignore laws of different nation states because the fines are little more than the cost of sandwiches at an off site meeting, and [d] engage in sporty behavior under the cover of attendance at industry conferences (why did a certain Google Glass marketing executive try to kill herself, and what about the yacht incident with a controlled substance and subsequent death?).

What fascinated me was the idea that an international body should regulate smart software. The international bodies did a bang up job with the Covid speed bump. The United Nations is definitely on top of the situation in central Africa. And the International Criminal Court? Oh, right, the US is not a party to that organization.

What’s going on with these calls for regulation? In my opinion, there are three vectors for this line of begging, pleading, and whining.

- The begging can be cited as evidence that OpenAI and its fellow travelers tried to do the right thing. That’s an important psychological ploy so the company can go forward and create a Terminator version of Clippy with its partner Microsoft.

- The disingenuous “aw, shucks” approach provides a lousy make up artist with an opportunity to put lipstick on a pig. The shoats and hoggets look a little better than some of the smart software champions. Dim light and a few drinks can transform a boarlet into something spectacular in the eyes of woozy venture capitalists.

- Those pleading for regulation want to make sure their company has a fighting chance to dominate the burgeoning market for smart software methods. After all, the ageing Googzilla is limping forward with billions of users who will chow down on the deprecated food available in the Google cafeterias.

At least Marie Antoinette avoided the begging until she was beheaded. Apocryphal or not, she held on to “Let them eat mille-feuille.” But the blade fell anyway.

PS. There allegedly will be ChatGPT 5.0. Isn’t that prudent?

Stephen E Arnold, May 23, 2023

No kidding?