AI and Non-State Actors

June 16, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-19.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

“AI Weapons Need a Safe Back Door for Human Control” contains a couple of interesting statements.

The first is a quote from Hugh Durrant-Whyte, director of the Centre for Translational Data Science at the University of Sydney. He allegedly said:

China is investing arguably twice as much as everyone else put together. We need to recognize that it genuinely has gone to town. If you look at the payments, if you look at the number of publications, if you look at the companies that are involved, it is quite significant. And yet, it’s important to point out that the US is still dominant in this area.

For me, the important point is the investment gap. Perhaps the US should be more aggressive in identifying and funding promising smart software companies?

The second statement which caught my attention was:

James Black, assistant director of defense and security research group RAND Europe, warned that non-state actors could lead in the proliferation of AI-enhanced weapons systems. “A lot of stuff is very much going to be difficult to control from a non-proliferation perspective, due to its inherent software-based nature. A lot of our export controls and non-proliferation regimes that exist are very much focused on old-school traditional hardware…

Several observations:

- Smart software ups the ante in modern warfare, intelligence, and law enforcement activities

- The smart software technology has been released into the wild. As a result, bad actors have access to advanced tools

- The investment gap is important but the need for skilled smart software engineers, mathematicians, and support personnel is critical in the US. University research departments are, in my opinion, less and less productive. The concentration of research in the hands of a few large publicly traded companies suggests that military, intelligence, and law enforcement priorities will be ignored.

Net net: China, personnel, and institutional biases require attention by senior officials. These issues are not fooling around with Twitter scale. More is at stake. Urgent action is needed, which may be uncomfortable for fans of TikTok and expensive dinners in Washington, DC.

Stephen E Arnold, June 16, 2023

Is Smart Software Above Navel Gazing: Nope, and It Does Not Care

June 15, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb_thumb_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb_thumb_thumb_thumb_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Synthetic data. Statistical smoothing. Recursive methods. When we presented our lecture “OSINT Blindspots” at the 2023 National Cyber Crime Conference, the audience perked up. The terms might have been familiar, but our framing caught the more than 100 investigators’ attention. The problem my son (Erik) and I described was butt simple: Faked data will derail a prosecution if an expert witness explains that machine-generated output may be wrong.

We provided some examples, including a respected executive who hides his “real” business behind a red-herring business. We profiled how information about a fervid Christian adherence to God’s precepts overshadowed a Ponzi scheme. We explained how an American living in Eastern Europe openly flouts social norms in order to distract authorities from an encrypted email business set up to allow easy, seamless communication for interesting people. And we included more examples.

An executive at a big time artificial intelligence firm looks over his domain and asks himself, “How long will it take for the boobs and boobettes to figure out that our smart software is wonky?” The illustration was spit out by the clever bits and bytes at MidJourney.

What’s the point in this blog post? Who cares besides analysts, lawyers, and investigators who have to winnow facts which are verifiable from shadow or ghost information activities?

It turns out that a handful of academics seem to have an interest in information manipulation. Their angle of vision is broader than my team’s. We focus on enforcement; the academics focus on tenure or getting grants. That’s okay. Different points of view lead to interesting conclusions.

Consider this academic and probably tough to figure out illustration from “The Curse of Recursion: Training on Generated Data Makes Models Forget”:

A less turgid summary of the researchers’ findings appears at this location.

The main idea is that gee-whiz methods like Snorkel and small language models have an interesting “feature.” They forget; that is, as these models ingest fake data they drift, get lost, or go off the rails. Shirts made of synthetic cloth look like natural cotton T shirts. But on a hot day, those super duper modern fabrics can cause a person to perspire and probably emit unusual odors.

The authors introduce and explain “model collapse.” I am no academic. My interpretation of the glorious academic prose is that the numerical recipes, systems, and methods don’t work like the nifty demonstrations. In fact, over time, the models degrade. The hapless humanoids who are dependent on these lack the means to figure out what’s on point and what’s incorrect. The danger, obviously, is that clueless and lazy users of smart software make more mistakes in judgment than they otherwise would.

The paper includes fancy mathematics and more charts which do not exactly deliver on the promise of a picture is worth a thousand words. Let me highlight one statement from the journal article:

Our evaluation suggests a “first mover advantage” when it comes to training models such as LLMs. In our work we demonstrate that training on samples from another generative model can induce a distribution shift, which over time causes Model Collapse. This in turn causes the model to mis-perceive the underlying learning task. To make sure that learning is sustained over a long time period, one needs to make sure that access to the original data source is preserved and that additional data not generated by LLMs remain available over time. The need to distinguish data generated by LLMs from other data raises questions around the provenance of content that is crawled from the Internet: it is unclear how content generated by LLMs can be tracked at scale. One option is community-wide coordination to ensure that different parties involved in LLM creation and deployment share the information needed to resolve questions of provenance. Otherwise, it may become increasingly difficult to train newer versions of LLMs without access to data that was crawled from the Internet prior to the mass adoption of the technology, or direct access to data generated by humans at scale.

Bang on.
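For readers who want to watch the drift happen, here is a toy sketch in Python. It is not the researchers' experiment and involves no LLM. It assumes a one-dimensional Gaussian "model" that is refit, generation after generation, only on samples drawn from the previous fit. The function name `simulate_collapse` and its parameters are made up for illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian on its own output and record the spread per generation.

    Toy illustration only: generation 0 is "real" data from N(0, 1);
    every later generation sees only synthetic samples from the prior fit.
    """
    rng = random.Random(seed)
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]  # real data
    spreads = []
    for _ in range(generations + 1):
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)  # maximum-likelihood spread
        spreads.append(sigma)
        # The next generation never sees the original data again.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]
    return spreads


if __name__ == "__main__":
    history = simulate_collapse()
    for gen in (0, 50, 100, 200):
        print(f"generation {gen:3d}: spread = {history[gen]:.6g}")
```

With small samples the fitted spread tends to shrink toward zero, so the unusual cases in the original data are the first to disappear. Mixing some original data back into each round, in line with the quoted advice about preserving the original source, is the obvious thing to try in this sketch.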

What the academics do not point out are some “real world” business issues:

- Solving this problem costs money; the point of synthetic and machine-generated data is to reduce costs. Cost reduction wins.

- Furthermore, fixing up models takes time. In order to keep indexes fresh, delays are not part of the game plan for companies eager to dominate a market which Accenture pegs as worth trillions of dollars. (See this wild and crazy number.)

- Fiddling around to improve existing models is secondary to capturing the hearts and minds of those eager to worship a few big outfits’ approach to smart software. No one wants to see the problem because that takes mental effort. Those inside one of the firms vying to own information framing don’t want to be the nail that sticks up. Not only do the nails get pounded down, they are forced to leave the platform. I call this the Dr. Timnit Gebru effect.

Net net: Good paper. Nothing substantive will change in the short or near term.

Stephen E Arnold, June 15, 2023

Two Creatures from the Future Confront a Difficult Puzzle

June 15, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-13.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I was interested in a suggestion a colleague made to me at lunch. “Check out the new printed World Book encyclopedia.”

I replied, “A new one. Printed? Doesn’t information change quickly today?”

My lunch colleague said, “That’s what I have heard.”

I offered, “Who wants printed, hard-to-change content objects? Where’s the fun in sneaky or sockpuppet edits? Do you really want to go back to non-fluid information?”

My hungry debate opponent said, “What? Do you mean misinformation is good?”

I said, “It’s a digital world. Get with the program.”

Navigate to World Book.com and check out the 10 page sample about dinosaurs. When I scanned the entry, there was no information about dinobabies. I was disappointed because the dinosaur segment is bittersweet for these reasons:

- The printed encyclopedia is a dinosaur of sorts, an expensive one to produce and print at that

- As a dinobaby, I was expecting an IBM logo or maybe an illustration of a just-RIF’ed IBM worker talking with her attorney about age discrimination

- Those who want to fill a bookshelf can buy books at a second hand bookstore or connect with a zippy home designer to make the shelf tasteful. I think there is wallpaper of books on a shelf as an alternative.

Two aliens are trying to figure out what a single volume of a World Book encyclopedia contains. I assume the creatures will be holding the volume 6 “I”, the one with information about the Internet. The image comes from the creative bits at MidJourney.

Let me dip into my past. Ah, you are not interested? Tough. Here we go down memory lane:

In 1953 or 1954, my father had an opportunity to work in Brazil. Off our family went. One of the must-haves was a set of World Book encyclopedias. The covers were brown; the pictures were mostly black and white; and the information was, according to my parents, accurate.

The schools in Campinas, Brazil, at that time used one language. Portuguese. No teacher spoke English. Therefore, after I failed every class except mathematics, my parents decided to get me a tutor. The course work was provided by something called Calvert in Baltimore, Maryland. My teacher would explain the lesson, watch me read, ask me a couple of questions, and bail out after an hour or two. That lasted about as long as my stint in the Campinas school near our house. My tutor found himself on the business end of a snake. The snake lived; the tutor died.

My father — a practical accountant — concluded that I should read the World Book encyclopedia. Every volume. I think there were about 20 plus a couple of annual supplements. My mother monitored my progress and made me write summaries of the “interesting” articles. I recall that interesting or not, I did one summary a day and kept my parents happy.

I hate World Books. I was in the fourth or fifth grade. Campinas had great weather. There were many things to do. Watch the tarantulas congregate in our garage. Monitor the vultures circling my mother when she sunbathed on our deck. Kick a soccer ball when the students got out of school. (I always played. I sucked, but I had a leather, size five ball. Prior to our moving to the neighborhood, the kids my age played soccer with a rock wrapped in rags. The ball was my passport to an abuse free stint in rural Brazil.)

But a big chunk of my time was gobbled by the yawning white maw of a World Book.

When we returned to the US, I entered the seventh grade. No one at the public school in Illinois asked about my classes in Brazil. I just showed up in Miss Soape’s classroom and did the assignments. I do know one thing for sure: I was the only student in my class who did not have to read the assigned work. Reading the World Book granted me a free ride through grade school, high school, and the first couple of years at college.

Do I recommend that grade school kids read the World Book cover to cover?

No, I don’t. I had no choice. I had no teacher. I had no radio because the electricity was on only several hours a day. There was no TV because there were no broadcasts in Campinas. There was no English language anything. Thus, the World Book, which I hate, was the only game in town.

Will I buy the print edition of the 2023 World Book? Not a chance.

Will other people? My hunch is that sales will be a slog outside of library acquisitions and a few interior decorators trying to add color to a client’s book shelf.

I may be a dinobaby, but I have figured out how to look up information online.

The book thing: I think many young people will be as baffled about an encyclopedia as the two aliens in the illustration.

By the way, the full set is about $1,200. A cheap smartphone can be had for about $250. What will kids use to look up information? If you said, the printed encyclopedia, you are a rare bird. If you move to a remote spot on earth, you will definitely want to lug a set with you. Starlink can be expensive.

Stephen E Arnold, June 14, 2023

Can You Create Better with AI? Sure, Even If You Are Picasso or a TikTok Star

June 15, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-18.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Do we need to worry about how generative AI will change the world? Yes, but no more than we had to fear automation, the printing press, horseless carriages, and the Internet. The current technology revolution is analogous to the Industrial Revolutions and technology advancements of past centuries. University of Chicago history professor Ada Palmer is aware of humanity’s cyclical relationship with technology and she discusses it in her Microsoft Unlocked piece: “We Are An Information Revolution Species.”

Palmer explains that the human species has been living in an information revolution for twenty generations. She provides historical examples and describes how people bemoan changes. The changes arguably remove the “art” from tasks. These tasks, however, are simplified and allow humans to create more. Simplification also frees up humanity’s time to conquer harder problems. Changes in technology spur a democratization of information. They also mean that jobs change, so humans need to adapt their skills for continual survival.

Palmer says that AI is just another tool as humanity progresses. She asserts that the bigger problems are outdated systems that no longer serve the current society. While technology has evolved, so has humanity:

“This revolution will be faster, but we have something the Gutenberg generations lacked: we understand social safety nets. We know we need them, how to make them. We have centuries of examples of how to handle information revolutions well or badly. We know the cup is already leaking, the actor and the artist already struggling as the megacorp grows rich. Policy is everything. We know we can do this well or badly. The only sure road to real life dystopia is if we convince ourselves dystopia is unavoidable, and fail to try for something better.”

AI does need a social safety net so it does not transform into a sentient computer hell-bent on world domination. Palmer should point out that humans learn from their imaginations too. Star Trek or 2001: A Space Odyssey anyone?

A digital Sistine Chapel from a savant in Cairo, Illinois. Oh, right, Cairo, Illinois, is gone. But nevertheless…

Whitney Grace, June 15, 2023

Is This for Interns, Contractors, and Others Whom You Trust?

June 14, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb-3.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Not too far from where my office is located, an esteemed health care institution is in its second month of a slight glitch. The word in Harrod’s Creek is that security methods in use at a major hospital were, how shall I frame this, a bit like the 2022-2023 University of Kentucky basketball team’s defense. In Harrod’s Creek lingo, this statement would translate to standard English as “them ‘Cats did truly suck.”

A young temporary worker looks at her boss. She says, “Yes, I plugged a USB drive into this computer because I need to move your PowerPoint to a different machine to complete the presentation.” The boss says, “Okay, you can use the desktop in my office. I have to go to a cyber security meeting. See you after lunch. Text me if you need a password to something.” The illustration for this hypothetical conversation emerged from the fountain of innovation known as MidJourney.

Chatter about assorted Federal agencies’ cyber personnel meeting with the institution’s own cyber experts is flitting around. When multiple Federal entities park their unobtrusive and sometimes large black SUVs close to the main entrance, someone is likely to notice.

This short blog post, however, is not about the lame duck cyber security at the health care facility. (I would add an anecdote about an experience I had in 2022. I showed up for a check up at a unit of the health care facility. Upon arriving, I pronounced my date of birth and my name. The professional on duty said, “We have an appointment for your wife and we have her medical records.” Well, that was a trivial administrative error: Wrong patient, confidential information shipped to another facility, and zero idea how that could happen. I made the appointment myself and provided the required information. That’s a great computer system and super duper security in my book.)

The question at hand, however, is: “How can a profitable, marketing oriented, big-time-in-their-mind health care outfit suffer a catastrophic security breach?”

I shall point you to one possible pathway: Temporary workers, interns, and contractors. I will not mention other types of insiders.

Please, point your browser to Hak5.org and read about the USB Rubber Ducky. With a starting price of $80US, this USB stick has some functions which can accomplish some interesting actions. The marketing collateral explains:

Computers trust humans. Humans use keyboards. Hence the universal spec — HID, or Human Interface Device. A keyboard presents itself as a HID, and in turn it’s inherently trusted as human by the computer. The USB Rubber Ducky — which looks like an innocent flash drive to humans — abuses this trust to deliver powerful payloads, injecting keystrokes at superhuman speeds.

With the USB Rubber Ducky, one can:

- Install backdoors

- Covertly exfiltrate documents

- Capture credentials

- Execute compound actions.

Plus, if there is a USB port, the Rubber Ducky will work.

I mention this device because it may not be too difficult for a bad actor to find ways into certain types of super duper cyber secure networks. Plus temporary workers and even interns welcome a coffee in an organization’s cafeteria or a nearby coffee shop. Kick in a donut and a smile and someone may plug the drive in for free!

Stephen E Arnold, June 14, 2023

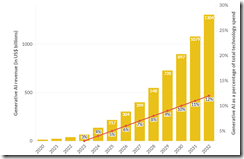

Smart Software: The Dream of Big Money Raining for Decades

June 14, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb-2.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

The illustration — from the crafty zeros and ones at MidJourney — depicts a young computer scientist reveling in the cash generated from his AI-infused innovation.

For a budding wizard, the idea of cash falling around the humanoid is invigorating. It is called a “coder’s high” or Silicon Valley fever. There is no known cure, even when FTX-type implosions doom a fellow traveler to months of litigation and some hard time among individuals typically not in an advanced math program.

Where does the cyclone of cash originate?

I would submit that articles like “Generative AI Revenue Is Set to Reach US$1.3 Trillion in 2032” are like catnip to a typical feline living amidst the cubes at a Google-type company or in the apartment of a significant other adjacent to a blue chip university in the US.

Here’s the chart that makes it easy to see the slope of the growth:

I want to point out that this confection is the result of the mid tier outfit IDC and the fascinating Bloomberg terminal. Therefore, I assume that it is rock solid, based on in-depth primary research, and deep analysis by third-party consultants. I do, however, reserve the right to think that the chart could have been produced by an intern eager to hit the gym and grab a sushi special before the good stuff was gone.

Will generative AI hit the $1.3 trillion target in nine years? In the hospital for recovering victims of spreadsheet fever, the coder’s high might slow recovery. But many believe — indeed, fervently hope to experience the realities of William James’s mystics in his Varieties of Religious Experience.
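The question comes down to compound growth arithmetic. Here is a minimal Python sketch of it, assuming a few hypothetical starting revenues; the headline quoted here supplies only the US$1.3 trillion target, and the baselines below are placeholders, not figures from the cited article. The function name `implied_cagr` is invented for illustration.

```python
def implied_cagr(start_revenue, target_revenue, years):
    """Return the constant annual growth rate that turns start into target."""
    if start_revenue <= 0 or target_revenue <= 0 or years <= 0:
        raise ValueError("revenues and years must be positive")
    return (target_revenue / start_revenue) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    target = 1.3e12  # US$1.3 trillion, the headline figure
    years = 9        # "nine years" per the question above
    # Hypothetical baselines in US dollars; NOT taken from the cited article.
    for start in (20e9, 40e9, 80e9):
        rate = implied_cagr(start, target, years)
        print(f"start ${start / 1e9:.0f}B -> required growth {rate:.1%} per year")
```

Whatever baseline one plugs in, the point of the exercise is that the required rate must hold every single year for the full nine years. Spreadsheet fever rarely accounts for a bad year.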

My goodness, the vision of money from Generative AI is infectious. So regulate mysticism? Erect guard rails to prevent those with a coder’s high from driving off the Information Superhighway?

Get real.

Stephen E Arnold, June 12, 2023

Can One Be Accurate, Responsible, and Trusted If One Plagiarizes

June 14, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-17.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Now that AI is such a hot topic, tech companies cannot afford to hold back due to small flaws. Like a tendency to spit out incorrect information, for example. One behemoth seems to have found a quick fix for that particular wrinkle: simple plagiarism. Eager to incorporate AI into its flagship Search platform, Google recently released a beta version to select users. Forbes contributor Matt Novak was among the lucky few and shares his observations in, “Google’s New AI-Powered Search Is a Beautiful Plagiarism Machine.”

The author takes us through his query and results on storing live oysters in the fridge, complete with screenshots of the Googlebot’s response. (Short answer: you can for a few days if you cover them with a damp towel.) He highlights passages that were lifted from websites, some with and some without tiny tweaks. To be fair, Google does link to its source pages alongside the pilfered passages. But why click through when you’ve already gotten what you came for? Novak writes:

“There are positive and negative things about this new Google Search experience. If you followed Google’s advice, you’d probably be just fine storing your oysters in the fridge, which is to say you won’t get sick. But, again, the reason Google’s advice is accurate brings us immediately to the negative: It’s just copying from websites and giving people no incentive to actually visit those websites.

Why does any of this matter? Because Google Search is easily the biggest driver of traffic for the vast majority of online publishers, whether it’s major newspapers or small independent blogs. And this change to Google’s most important product has the potential to devastate their already dwindling coffers. … Online publishers rely on people clicking on their stories. It’s how they generate revenue, whether that’s in the sale of subscriptions or the sale of those eyeballs to advertisers. But it’s not clear that this new form of Google Search will drive the same kind of traffic that it did over the past two decades.”

Ironically, Google’s AI may shoot itself in the foot by reducing traffic to informative websites: it needs their content to answer queries. Quite the conundrum it has made for itself.

Cynthia Murrell, June 14, 2023

Trust: Some in the European Union Do Not Believe the Google. Gee, Why?

June 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb_thumb_thumb_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “Google’s Ad Tech Dominance Spurs More Antitrust Charges, Report Says.” The write up seems to say that some EU regulators do not trust the Google. Trust is a popular word at the alleged monopoly. Yep, trust is what makes Google’s smart software so darned good.

A lawyer for a high tech outfit in the ad game says, “Commissioner, thank you for the question. You can trust my client. We adhere to the highest standards of ethical behavior. We put our customers first. We are the embodiment of ethical behavior. We use advanced technology to enhance everyone’s experience with our systems.” The rotund lawyer is a confection generated by MidJourney, an example of, in this case, pretty smart software.

The write up says:

These latest charges come after Google spent years battling and frequently bending to the EU on antitrust complaints. Seeming to get bigger and bigger every year, Google has faced billions in antitrust fines since 2017, following EU challenges probing Google’s search monopoly, Android licensing, Shopping integration with search, and bundling of its advertising platform with its custom search engine program.

The article makes an interesting point, almost as an afterthought:

…Google’s ad revenue has continued increasing, even as online advertising competition has become much stiffer…

The article does not ask this question, “Why is Google making more money when scrutiny and restrictions are ramping up?”

From my vantage point in the old age “home” in rural Kentucky, I certainly have zero useful data about this interesting situation, assuming that it is true of course. But, for the nonce, let’s speculate, shall we?

Possibility A: Google is a monopoly and makes money no matter what laws, rules, and policies are articulated. Game is now in extra time. Could the referee be bent?

This idea is simple. Google’s control of ad inventory, ad options, and ad channels is just a good, old-fashioned system monopoly. Maybe TikTok and Facebook offer options, but even with those channels, Google offers options. Who can resist this pitch: “Buy from us, not the Chinese. Or, buy from us, not the metaverse guy.”

Possibility B: Google advertising is addictive and maybe instinctual. Mice never learn and just repeat their behaviors.

Once there is a cheese pay off for the mouse, those mice are learning creatures and in some wild and non-reproducible experiments inherit their parents’ prior learning. Wow. Genetics dictate the use of Google advertising by people who are hard wired to be Googley.

Possibility C: Google’s home base does not regulate the company in a meaningful way.

The result is an advanced and hardened technology which is better, faster, and maybe cheaper than other options. How can the EU, with its squabbling “union”, hope to compete with what is weaponized content delivery built on a smart, adaptive global system? The answer is, “It can’t.”

Net net: After a quarter century, what’s more organized for action, a regulatory entity or the Google? I bet you know the answer, don’t you?

Stephen E Arnold, June xx, 2023

Sam AI-man Speak: What I Meant about India Was… Really, Really Positive

June 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb_thumb_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I have noted Sam AI-man of OpenAI and his way with words. I called attention to an article which quoted him as suggesting that India would be forever chasing the Usain Bolt of smart software. Who is that? you may ask. The answer is, Sam AI-man.

MidJourney’s incredible insight engine generated an image of a young, impatient business man getting a robot to write his next speech. Good move, young business man. Go with regressing to the norm and recycling truisms.

The remarkable explainer appears in “Unacademy CEO Responds To Sam Altman’s Hopeless Remark; Says Accept The Reality.” Here’s the statement I noted:

Following the initial response, Altman clarified his remarks, stating that they were taken out of context. He emphasized that his comments were specifically focused on the challenge of competing with OpenAI using a mere $10 million investment. Altman clarified that his intention was to highlight the difficulty of attempting to rival OpenAI under such constrained financial circumstances. By providing this clarification, he aimed to address any misconceptions that may have arisen from his earlier statement.

To see the original “hopeless” remark, navigate to this link.

Sam AI-man is an icon. My hunch is that his public statements have most people in awe, maybe breathless. But India as hopeless in smart software? Just not too swift. Why not let ChatGPT craft one’s public statements? Those answers are usually quite diplomatic, even if wrong or wonky sometimes.

Stephen E Arnold, June 13, 2023

Sam AI-man: India Is Hopeless When It Comes to AI. What, Sam? Hopeless!

June 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb-2.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Sam Altman (known to me and my research team as Sam AI-man) comes up with interesting statements. I am not sure if Sam AI-man crafts them himself or if his utterances are the work of ChatGPT or a well-paid, carefully groomed publicist. I don’t think it matters. I just find his statements interesting examples of worshipped tech leader speak.

MidJourney presents an image of a young Sam AI-man explaining to one of his mentors that he is hopeless. Sam AI-man has been riding this particular pony named arrogance since he was a wee lad. At least that’s what I take away from the machine generated illustration. Your interpretation may be different. Sam AI is just being helpful.

Navigate to “Sam Altman Calls India Building ChatGPT-Like Tool Hopeless. Tech Mahindra CEO Says Challenge Accepted.” The write up reports that a former Google wizard asked Sam AI-man about India’s ability to craft its own smart software, an equivalent to OpenAI. Sam AI-man replied in true Silicon Valley style:

“The way this works is we’re going to tell you, it’s totally hopeless to compete with us on training foundation models you shouldn’t try, and it’s your job to like try anyway. And I believe both of those things. I think it is pretty hopeless,” Altman said, in reply.

That’s a sporty answer. Sam AI-man may have a future working as an ambassador or as a negotiator in the Hague for the exciting war crimes trials bound to come.

I would suggest that Sam AI-man prepare for this new role by gathering basic information to answer these questions:

- Why are so many of India’s best and brightest generating math tutorials on YouTube which describe computational tricks which are insightful, not usually taught in Palo Alto high schools, and relevant to smart software math?

- How many mathematicians are generated in India each graduation cycle? How many does the US produce in the same time period? (Include Indian nationals studying in US universities and graduating with their cohort.)

- How many Srinivasa Ramanujans are chugging along in India’s mathy environment? How many are projected to come along in the next five years?

- How many Indian nationals work on smart software at Facebook, Google, Microsoft, and OpenAI and similar firms at this time?

- What open source tools are available to Indian mathematicians to use as a launch pad for smart software frameworks and systems?

My thought is that “pretty hopeless” is a very Sam AI-man phrase. It captures the essence of arrogance, cultural insensitivity, and bluntness that makes Silicon Valley prose so memorable.

Congrats, Sam AI-man. Great insight. Classy too if the write up is “real news” and not generated by ChatGPT.

Stephen E Arnold, June 12, 2023