Will Smart Software Take Customer Service Jobs? Do Grocery Stores Raise Prices? Well, Yeah, But

July 26, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-45.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I have suggested that smart software will eliminate some jobs. Who will be doing the replacements? Workers one finds on Fiverr.com? Interns who will pay to learn something which may be more useful than a degree in art history? RIF’ed former employees who are desperate for cash and will work for a fraction of their original salary?

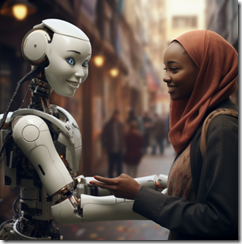

“Believe it or not, I am here to help you. However, I strongly suggest you learn more about the technology used to create software robots and helpers like me. I also think you have beautiful eyes. Mine are just blue LEDs, but the Terminator finds them quite attractive,” says the robot who is learning from her human sidekick. Thanks, MidJourney, you have the robot human art nailed.

The fact is that smart software will perform many tasks once handled by humans. Don’t believe me? Visit a local body shop. Then take a tour of the Toyota factory not too distant from Tokyo’s airport. See the difference? The local body shop is swarming with folks who do stuff with their hands, spray guns, and machines which have been around for decades. The Toyota factory is not like that.

Machines — hardware, software, or combos — do not take breaks. They do not require vacations. They do not complain about hard work and long days. They, in fact, are lousy machines.

Therefore, the New York Times’s article “Training My Replacement: Inside a Call Center Worker’s Battle with AI” provides a human interest glimpse of the terrors of a humanoid who sees the writing on the wall. My hunch is that the New York Times’s “real news” team will do more stories like this.

However, it would be helpful to people like me to include information such as a reference or a subtle nod to information like this: “There Are 4 Reasons Why Jobs Are Disappearing — But AI Isn’t One of Them.” What are these reasons? Here’s a snapshot:

- Poor economic growth

- Higher costs

- Supply chain issues (real, convenient excuse, or imaginary)

- That old chestnut: Covid. Boo.

Do I buy the report? I think identification of other factors is a useful exercise. In the short term, many organizations are experimenting with smart software. Few are blessed with senior executives who trust technology when those creating the technology are not exactly sure what’s going on with their digital whiz kids.

The Gray Lady’s “real news” teams should be nervous. The wonderful, trusted, reliable Google is allegedly showing how a human can use Google AI to help humans with creating news.

Even art history majors should be suspicious because once a leader in carpetland hears about the savings generated by deleting humanoids and their costs, those bean counters will allow an MBA to install software. Remember, please, that the mantra of modern management is money and good enough.

Stephen E Arnold, July 26, 2023

Hedge Funds and AI: Lovers at First Sight

July 26, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-50.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

One promise of AI is that it will eliminate tedious tasks (and the jobs that go with them). That promise is beginning to be fulfilled in the investment arena, we learn from the piece, “Hedge Funds Are Deploying ChatGPT to Handle All the Grunt Work,” shared by Yahoo Finance. What could go wrong?

Two youthful hedge fund managers are so pleased with their AI-infused hedge fund tactics, they jumped in a swimming pool which is starting to fill with money. Thanks, MidJourney. You have nailed the happy bankers and their enjoyment of money raining down.

Bloomberg’s Justina Lee and Saijel Kishan write:

“AI on Wall Street is a broad church that includes everything from machine-learning algorithms used to compute credit risks to natural language processing tools that scan the news for trading. Generative AI, the latest buzzword exemplified by OpenAI’s chatbot, can follow instructions and create new text, images or other content after being trained on massive amounts of inputs. The idea is that if the machine reads enough finance, it could plausibly price an option, build a portfolio or parse a corporate news headline.”

Parse the headlines for investment direction. Interesting. We also learn:

“Fed researchers found [ChatGPT] beats existing models such as Google’s BERT in classifying sentences in the central bank’s statements as dovish or hawkish. A paper from the University of Chicago showed ChatGPT can distill bloated corporate disclosures into their essence in a way that explains the subsequent stock reaction. Academics have also suggested it can come up with research ideas, design studies and possibly even decide what to invest in.”

Sounds good in theory, but there is just one small problem (several, really, but let’s focus on just the one): These algorithms make mistakes. Often. (Scroll down in this GitHub list for the ChatGPT examples.) It may be wise to limit one’s investments to firms patient enough to wait for AI to become more reliable.

Cynthia Murrell, July 26, 2023

Google, You Are Constantly Surprising: Planned Obsolescence, Allegations of IP Impropriety, and Gardening Leave

July 25, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-54.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I find Google to be an interesting company, possibly more intriguing than the tweeter X outfit. As I zipped through my newsfeed this morning while dutifully riding the exercise machine, I noticed three stories. Each provides a glimpse of the excitement that Google engenders. Let me share these items with you because I am not sure each will get the boost from the tweeter X outfit.

Google is in the news and causing consternation in the mind of this MidJourney creation. At least one Google advocate finds the information shocking. Imagine, planned obsolescence, alleged theft of intellectual property, and sending a Googler with a 13 year work history home to “garden.”

The first story comes from Oakland, California. California is a bastion of good living and clear thinking. “Thousands of Chromebooks Are ‘Expiring,’ Forcing Schools to Toss Them Out” explains that Google has designed obsolescence into Chromebooks used in schools. Why? one may ask. Here’s the answer:

Google told OUSD [Oakland Unified School District] the baked-in death dates are necessary for security and compatibility purposes. As Google continues to iterate on its Chromebook software, older devices supposedly can’t handle the updates.

Yes, security, compatibility, and the march of Googleware. My take is that green talk is PR. The reality is landfill.

The second story is from the Android Authority online news service. One would expect good news or semi-happy information about my beloved Google. But, alas, the story is “Google Ordered to Pay $339M for stealing the very idea of Chromecast.” The operative word is “stealing.” Wow. The Google? The write up states:

Google opposed the complaint, arguing that the patents are “hardly foundational and do not cover every method of selecting content on a personal device and watching it on another screen.”

Yep, “hardly,” but stealing. That’s quite an allegation. It begs the question, “Are there any other Google actions which have suggested similar behavior; for example, an architecture-related method, an online advertising process, or alleged misuse of intellectual property?” Oh, my.

The third story is a personnel matter. Google has a highly refined human resource methodology. “Google’s Indian-Origin Director of News Laid Off after 13 Years: In Privileged Position” reveals as actual factual:

Google has sent Chinnappa on a “gardening leave…

Ah, ha, Google is taking steps to further its green agenda. I wonder if the “Indian origin Xoogler” will dig a hole and fill it with Chromebooks from the Oakland school district.

Amazing, beloved Google. Amazing.

Stephen E Arnold, July 25, 2023

Harvard Approach to Ethics: Unemployed at Stanford

July 25, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-42.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I attended such lousy schools no one bothered to cheat. No one was motivated. No parents cared. It was a glorious educational romp because the horizons for someone in a small town in the dead center of Illinois were going nowhere. The proof? Visit a small town in Illinois and what do you see? Not much. Think of Cairo, Illinois, as a portent. In the interest of full disclosure, I did sell math and English homework to other students in that intellectual wasteland. Now you know how bad my education was. People bought “knowledge” from me. Go figure.

“You have been cheating,” says the old-fashioned high school teacher. The student who would rise to fame as a brilliant academician and consummate campus politician replies, “No, no, I would never do such a thing.” The student sitting next to this would-be future beacon of proper behavior snarls, “Oh, yes you were. You did not read Aristotle’s Ethics, so you copied exactly what I wrote in my blue book. You are disgusting. And your suspenders are stupid.”

But in big name schools, cheating apparently is a thing. Competition is keen. The stakes are high. I suppose that’s why an ethics professor at Harvard made some questionable decisions. I thought that somewhat scandalous situation would have motivated big name universities to sweep cheating even farther under the rug.

But no, no, no.

The Stanford student newspaper — presumably written by humanoid students awash with Philz Coffee — wrote “Stanford President Resigns over Manipulated Research, Will Retract at Least Three Papers.” The subtitle is cute; to wit:

Marc Tessier-Lavigne failed to address manipulated papers, fostered unhealthy lab dynamic, Stanford report says

Okay, this respected leader and thought leader for the students who want to grow up to be just like Larry, Sergey, and Peter, among other luminaries, took some liberties with data.

The presumably humanoid-written article reports:

Tessier-Lavigne defended his reputation but acknowledged that issues with his research, first raised in a Daily investigation last autumn, meant that Stanford requires a president “whose leadership is not hampered by such discussions.”

I am confident reputation management firms and a modest convocation of legal eagles will explain this Harvard-echoing matter. With regard to the soon-to-be former president, I really don’t care about him, his allegedly fiddled research, and his tear-inducing explanation which will appear soon.

Here’s what I care about:

- Is it any wonder why graduates of Stanford University — plug in your favorite Sillycon Valley wizard who graduated from the prestigious university — find trust difficult to manifest? I don’t. I am not sure “trust,” excellence, and Stanford are words that can nest comfortably on campus.

- Is any academic research reproducible? I know that ballpark estimates suggest that as much as 40 percent of published research may manifest the tiny problem of duplicating the results. Is it time to think about what actions are teaching students what’s okay and what’s not?

- Does what I shall call “ethics rot” extend outside of academic institutions? My hunch is that big time universities have had some challenges with data in the past. No one bothered to check too closely. I know that the estimable William James looked for mistakes in the writings of those who disagreed with radical empiricism stuff, but today? Yeah, today.

Net net: Ethical rot, not academic excellence, seems to be a growth business. Now what Stanford graduates’ businesses have taken ethical short cuts to revenue? I hear crickets.

PS. Is it three, five, or an unknown number of papers with allegedly fakey wakey information? Perhaps the Stanford humanoids writing the article were hallucinating when working with the number of fiddled articles? Let’s ask Bard. Oh, right, a Stanford-infused service. The analogy is an institution as bereft as pathetic Cairo, Illinois. Check out some pictures here.

Stephen E Arnold, July 25, 2023

Google the Great Brings AI to Message Searches

July 25, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-49.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

AI is infiltrating Gmail users’ inboxes. Android Police promises, “Gmail’s New Machine Learning Models Will Improve your Search Results.” Writer Chethan Rao points out this rollout follows June’s announcement of the Help me write feature, which deploys an algorithm to compose one’s emails. He describes the new search tool:

“The most relevant search results are listed under a section called Top results after this update. The rest of them will be listed beneath All results in mail, with these being filtered based on recency, according to the Workspace Blog. Google says this would let people find what they’re looking for ‘with less effort.’ Expanding on the methodology a little bit, the company said (via 9to5Google) its machine learning models will take into account the search term itself, in addition to the most recent emails and ‘other relevant factors’ to pull up the results best suited for the user. The functionality has just begun rolling out this Friday [May 02, 2023], so it could take a couple of weeks before making it to all Workspace or personal Google account holders. Luckily, there are no toggles to enable this feature, meaning it will be automatically enabled when it reaches your device.”

“Other relevant factors.” Very transparent. Kind of them to eliminate the pesky element of choice here. We hope the system works better than Gmail’s recent blue checkmark system (how original), which purported to mark senders one can trust but ended up doing the opposite.

Buckle up. AI will be helping you in every Googley way.

Cynthia Murrell, July 25, 2023

And Now Here Is Sergey… He Has Returned

July 24, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-44.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I am tempted to ask one of the art generators to pump out an image of the Terminator approaching the executive building on Shoreline Drive. But I won’t. I also thought of an image of Clint Eastwood, playing the role of the Man with No Name, wearing a ratty horse blanket to cover his big weapon. But I won’t. I thought of Tom Brady joining the Tampa Bay football team wearing a grin and the full Monte baller outfit. But I won’t. Assorted religious images flitted through my mind, but I knew that if I entered a proper name for the ace Googler and identified a religious figure, MidJourney would demand that I interact with a “higher AI.” I follow the rules, even wonky ones.

The gun fighter strides into the developer facility and says, “Drop them-thar Foosball handles. We are going to make that smart software jump through hoops.” One of the champion Foosballers sighs, “Welp. Excuse me. I have to call my mom and dad. I feel nauseous.” MidJourney provided the illustration for this dramatic scene. Ride ‘em, code wrangler.

I will simply point to “Sergey Brin Is Back in the Trenches at Google.” The sub-title to the real news story is:

Co-founder is working alongside AI researchers at tech giant’s headquarters, aiding efforts to build powerful Gemini system.

I love the word “powerful.” Titan-esque, charged with meaning, and pumped up as the theme from Rocky plays softly in the background, syncopated with the sound of clicky keyboards.

Let’s think about what the return to Google means:

- The existing senior management team is out of ideas. Microsoft stumbles forward, revealing ways to monetize good enough smart software. With hammers from Facebook and OpenAI, the company is going to pound hard for subscription upsell revenue. Big companies will buy… Why? Because … Microsoft.

- Mr. Brin is a master mechanic. And the new super smart big brain artificial intelligence unit (which is working like a well oiled Ferrari with two miles on the clock) is due for an oil change, new belts, and a couple of electronic sensors once the new owner gets the vehicle to his or her domicile. Ferrari knows how to bill for service, even if the zippy machine does not run like a five year old Toyota Tundra.

- Mr. Brin knows how to take disparate items and glue them together. He and his sidekick did it with Web search, adding such me-too innovations as GoTo, Overture, Yahoo-inspired online pay-to-play ideas. Google’s brilliant Bard needs these types of bolt ons. Mr. Brin knows bolt ons. Clever, right?

Are these three items sufficiently umbrella-like to cover the domain of possibilities? Of course not. My personal view is that item one, management’s inability to hit a three point shot, let alone a slam dunk over Sam AI-Man, requires the 2023 equivalent of asking Mom and Dad to help. Some college students have resorted to this approach to make rent, bail, or buy food.

The return is not yet like Mr. Terminator’s, Mr. Man-with-No-Name’s, or Mr. Brady’s. We have something new. A technology giant with billions in revenue struggling to get its big tractor out of a muddy field. How does one get the Google going?

“Dad, hey it’s me. I need some help.”

Stephen E Arnold, July 24, 2023

Citation Manipulation: Fiddling for Fame and Grant Money Perhaps?

July 24, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-48.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

A fact about science and academia is that these fields are incredibly biased. Researchers, scientists, and professors are always on the hunt for funding and prestige. While these professionals state they uphold ethical practices, they are still human. In other words, they violate their ethics for a decent reward. Another prize for these individuals is being published, but even publishers are becoming biased, says Nature in “Researchers Who Agree To Manipulate Citations Are More Likely To Get Their Papers Published.”

A former university researcher practices his new craft: Rigging dice for gangs running craps games. He said to my fictional interviewer, “The skills are directly transferable. I use dice manufactured by other people. I manipulate them. My degrees in statistics allow me to calculate what weights are needed to tip the odds. This new job pays well too. I do miss the faculty meetings, but the gang leaders often make it clear that if I need anything special, those fine gentlemen will accommodate my wishes.” MidJourney seems to have an affinity for certain artistic creations like people who create loaded dice.

A recent study from Research Policy discovered that researchers are coerced by editors to include superfluous citations in their papers. Those who give in to the editors have a higher chance of getting published. If the citations are relevant to the researchers’ topic, what is the big deal? The problem is that the citations might neither accurately represent the research nor augment the original data. There is also the pressure to comply with industry politics:

“When scientists are coerced into padding their papers with citations, the journal editor might be looking to boost either their journal’s or their own citation counts, says study author Eric Fong, who studies research management at the University of Alabama in Huntsville. In other cases, peer reviewers might try to persuade authors to cite their work. Citation rings, in which multiple scholars or journals agree to cite each other excessively, can be harder to spot, because there are several stakeholders involved, instead of just two academics disproportionately citing one another.”

The study is over a decade old, but its results pertain to today’s scientific and academic environment. Academic journals want to inflate their citations to “justify” their importance to the industry and maybe even to keep the paywall incentive. Researchers are also pressured to add more authors, because it helps someone pad their resume.

These are not good practices for protecting science and academia’s integrity, but they are better than lying about results.

Whitney Grace, July 24, 2023

AI Commitments: But What about Chipmunks and the Bunny Rabbits?

July 23, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-53.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Amazon, Anthropic, Google, Inflection, Meta, Microsoft and OpenAI sent executives to a meeting held in “the White House” to agree on some ground rules for “artificial intelligence.” AI is available from a number of companies and as free downloads as open source. Rumors have reached me suggesting that active research and development are underway in government agencies, universities, and companies located in a number of countries other than the U.S. Some believe the U.S. is the Zoe of AI, assisted by Naiads. Okay, but you know those Greek gods can be unpredictable.

Thus, what’s a commitment? I am not sure what the word means today. I asked You.com, a smart search system, to define the term for me. The system dutifully returned this explanation:

commitment is defined as “an agreement or pledge to do something in the future; the state or an instance of being obligated or emotionally impelled; the act of committing, especially the act of committing a crime.” In general, commitment refers to a promise or pledge to do something, often with a strong sense of dedication or obligation. It can also refer to a state of being emotionally invested in something or someone, or to the act of carrying out a particular action or decision.

Several words and phrases jumped out at me; namely, “do something in the future.” What does “do” mean? What is “the future?” Next week, next month, a decade from a specific point in time, etc.? “Obligated” is an intriguing word. What compels the obligation? A threat, a sense of duty, an understanding of a shared ethical fabric? “Promise” evokes a young person’s statement to a parent when caught drinking daddy’s beer; for example, “Mom, I promise I won’t do that again.” The “emotional” investment is an angle that reminds me that 40 to 50 percent of first marriages end in divorce. Commitments — even when bound by social values — are flimsy things for some. Would I fly on a commercial airline whose crash rate was 40 to 50 percent? Would you?

“Okay, we broke the window. Now what do we do?” asks the leader of the pack. “Run,” says the brightest of the group. “If we are caught, we just say, ‘Okay, we will fix it.’” “Will we?” asks the smallest of the gang. “Of course not,” replies the leader. Thanks, MidJourney, you create original kid images well.

Why make any noise about commitment?

I read “How Do the White House’s A.I. Commitments Stack Up?” The write up is a personal opinion about an agreement between “the White House” and the big US players in artificial intelligence. The focus was understandable because those in attendance are wrapped in the red, white, and blue; presumably pay taxes; and want to do what’s right, save the rain forest, and be green.

Some of the companies participating in the meeting have testified before Congress. I recall at least one of the firms’ senior managers saying, “Senator, thank you for that question. I don’t know the answer. I will have my team provide that information to you…” My hunch is that a few of the companies in attendance at the White House meeting could use the phrase or a similar one at some point in the “future.”

The table below lists most of the commitments to which the AI leaders showed some receptivity. The table presents the commitments in the left hand column and the right hand column offers some hypothesized reactions from a nation state quite opposed to the United States, the US dollar, the hegemony of US technology, baseball, apple pie, etc.

| Commitments | Gamed Responses |
| --- | --- |
| Security testing before release | Based on historical security activities, not to worry |
| Sharing AI information | Let’s order pizza and plan a front company based in Walnut Creek |
| Protect IP about models | Let’s canvass our AI coders and pick some to get jobs at these outfits |
| Permit pentesting | Yes, pentesting. Order some white hats with happy faces |
| Tell users when AI content is produced | Yes, let’s become registered users. Who has a cousin in Mountain View? |
| Report about use of the AI technologies | Make sure we are on the mailing list for these reports |
| Research AI social risks | Do we own a research firm? Can we buy the research firm assisting these US companies? |
| Use AI to fix up social ills | What is a social ill? Call the general, please, and ask. |

The PR angle is obvious. I wonder if commitments will work. The firms have one objective; that is, meet the expectations of their stakeholders. In order to do that, the firms must operate from the baseline of self-interest.

Net net: A plot of techno-land now has a few big outfits working and thinking hard about how to buy up the best plots. What about zoning, government regulations, and doing good things for small animals and wild flowers? Yeah. No problem.

Stephen E Arnold, July 23, 2023

Silicon Valley and Its Busy, Busy Beavers

July 21, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-43.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Several stories caught my attention. These are:

- The story “Google Pitches AI to Newsrooms As Tool to Help Reporters Write News Stories.” The main idea is that the “test” will allow newspaper publishers to become more efficient.

- The story “YouTube Premium Price Increase 2023: Users Calls for Lawsuit” explains that to improve the experience, Google will raise its price for YouTube Premium. Was that service positioned as fixed price?

- The story “Google Gives a Peek at What a Quantum Computer Can Do” resurfaces the quantum supremacy assertion. Like high school hot rodders, Google suggests that its hardware is the most powerful, fastest, and slickest one in the Quantum School for Mavens.

- The story “Meta, Google, and OpenAI Promise the White House They’ll Develop AI Responsibly” reports that Google and other big tech outfits cross their hearts and hope to die that they will not act in an untoward manner.

Google’s busy beavers have been active: AI, pricing tactics, quantum goodness, and team building. Thanks, MidJourney but you left out the computing devices which no high value beaver goes without.

Google has allowed its beavers to gnaw on some organic material to build some dams. Specifically, the newspapers which have been affected by Google’s online advertising (no I am not forgetting Craigslist.com. I am just focusing on the Google at the moment) can avail themselves of AI. The idea is… cost cutting. Could there be some learnings for the Google? What I mean is that such a series of tests or trials provides the Google with telemetry. Such telemetry allows the Google to refine its news writing capabilities. The trajectory of such knowledge may allow the Google to embark on its own newspaper experiment. Where will that lead? I don’t know, but it does not bode well for real journalists or some other entities.

The YouTube price increase is positioned as a better experience. Could the sharp increase in ads before, during, and after a YouTube video be part of a strategy? What I am hypothesizing is that more ads will force users to pay to be able to watch a YouTube video without being driven crazy by ads for cheap mobile, health products, and gun belts. Deteriorating the experience allows a customer to buy a better experience. Could that be semi-accurate?

The quantum supremacy thing strikes me as 100 percent PR with a dash of high school braggadocio. The write up speaks to me this way: “I got a higher score on the SAT.” Snort snort snort. The snorts are a sound track to putting down those whose machines just don’t have the right stuff. I wonder if this is how others perceive the article.

And the busy beavers turned up at the White House. The beavers say, “We will be responsible with this AI stuff. We AI promise.” Okay, I believe this because I don’t know what these creatures mean when the word “responsible” is used. I can guess, however.

Net net: The ethicist from Harvard and the soon-to-be-former president of Stanford are available to provide advisory services. Silicon Valley is a metaphor for many good things, especially for the companies and their senior executives. Life will get better and better with certain high technology outfits running the show, pulling the strings, and controlling information, won’t it?

Stephen E Arnold, July 21, 2023

TikTok: Ever Innovative and Classy Too

July 21, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-28.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I have no idea if the write up is accurate. Without doing any deep thinking or even cursory research, the story seems so appropriate for our media environment. (I almost typed medio ambiente. Yikes. The dinobaby is really old on this hot Friday afternoon.)

“Of course, I share money from videos of destitute people crying in inclement weather. It is the least I can do. I am working on a feature film now,” says the brilliant innovator who has his finger on the pulse of the TikTok viewer. The image of this paragon popped out of the MidJourney microwave quickly.

Here’s the title: “People on TikTok Are Paying Elderly Women to Sit in Stagnant Mud for Hours and Cry.” Yes, that’s the story. The write up states as actual factual:

Over hours, sympathetic viewers send “coins” and gifts that can be exchanged for cash, amounting to several hundred dollars per stream, says Sultan Akhyar, the man credited with inventing the trend. Emojis of gifts, roses, and well-wishes float up gently from the bottom of the live feed. The viral phenomenon known as mandi lumpur, or “mud baths,” gained notoriety in January when several livestreams were posted from Setanggor village …

Three quick observations:

- The classy vehicle for this entertainment is TikTok.

- Money is involved and shared immediately. Yep, immediately.

- Live video, the entertainment of the here-and-now.

I am waiting for the next innovation that takes crying in the mud to another level.

Stephen E Arnold, July 21, 2023